Gesture Recognition

Introduction

Gesture

- a movement usually of the body or limbs that expresses or emphasizes an idea, sentiment, or attitude

- the use of motions of the limbs or body as a means of expression

Automatic Gesture Recognition

- A gesture recognition system generates a semantic description for certain body motions

- Gesture recognition exploits the power of non-verbal communication, which is very common in human-human interaction

- Gesture recognition is often built on top of a human motion tracker

Applications

- Multimodal Interaction

- Gestures + Speech recognition

- Gestures + gaze

- Human-Robot Interaction

- Interaction with Smart Environments

- Understanding Human Interaction

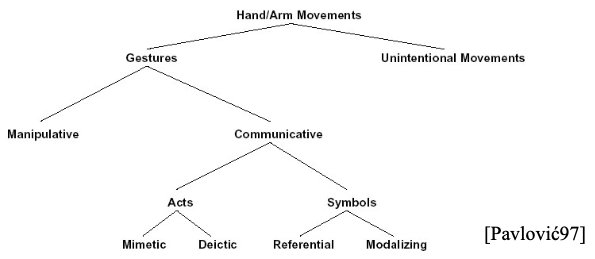

Types of Gestures

Hand & arm gestures

- Pointing Gestures

- Sign Language

Head gestures

- Nodding, head shaking, turning, pointing

Body gestures

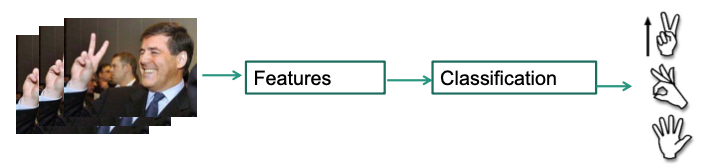

Automatic Gesture Recognition

- Feature Acquisition

- Appearances: Markers, color, motion, shape, segmentation, stereo, local descriptors, space-time interest points, …

- Model based: body- or hand-models

- Classifiers

- SVM, ANN, HMMs, Adaboost, Dec. Trees, Deep Learning …

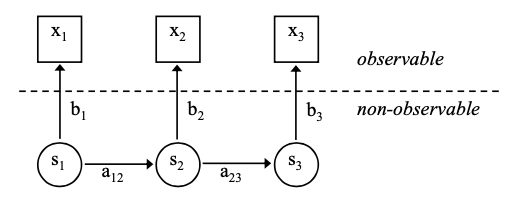

Hidden Markov Models (HMMs) for Gesture Recognition

“hidden”: the state sequence itself is never observed; conclusions are drawn from the observations alone, WITHOUT knowing the hidden sequence of states

Markov assumption (1st order): the next state depends ONLY on the current state (not on the complete state history)

A Hidden Markov Model is a five-tuple $$ (S, \pi, \mathbf{A}, B, V) $$

- $S = \{s_1, s_2, \dots, s_n\}$: set of states

- $\pi$: the initial probability distribution

- $\pi(s_i)$ = probability of $s_i$ being the first state of a state sequence

- $\mathbf{A} = (a_{ij})$: the matrix of state transition probabilities

- $(a_{ij})$: probability of state $s_j$ following $s_i$

- $B = \{b_1, b_2, \dots, b_n\}$: the set of emission probability distributions/densities

- $b_i(x)$: probability of observing $x$ when the system is in state $s_i$

- $V$: the observable feature space

- Can be discrete ($V = \{x_1, x_2, \dots, x_v\}$) or continuous ($V = \mathbb{R}^d$)
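The five-tuple can be read as a generative recipe: draw a first state from $\pi$, emit a symbol from that state's $b_i$, then move on according to $\mathbf{A}$. The following is a minimal sketch for the discrete case only; the function name `sample_hmm`, the two-state example values, and the encoding of $B$ as an $n \times v$ array are assumptions made for illustration.

```python
import numpy as np

def sample_hmm(pi, A, B, T, seed=0):
    """Draw a hidden state path and an observation sequence of length T.

    pi: (n,)   initial probabilities pi(s_i)
    A:  (n, n) transition probabilities a_ij
    B:  (n, v) discrete emission probabilities, B[i, k] = b_i(x_k)
    """
    rng = np.random.default_rng(seed)
    n, v = B.shape
    states = np.empty(T, dtype=int)
    obs = np.empty(T, dtype=int)
    states[0] = rng.choice(n, p=pi)
    obs[0] = rng.choice(v, p=B[states[0]])
    for t in range(1, T):
        # 1st-order Markov assumption: only the previous state matters.
        states[t] = rng.choice(n, p=A[states[t - 1]])
        obs[t] = rng.choice(v, p=B[states[t]])
    return states, obs

# Hypothetical two-state, two-symbol model (values chosen for illustration).
pi = np.array([1.0, 0.0])
A = np.array([[0.7, 0.3],
              [0.0, 1.0]])
B = np.array([[0.9, 0.1],
              [0.2, 0.8]])

states, obs = sample_hmm(pi, A, B, T=10)
print("hidden states:", states)
print("observations: ", obs)
```

A recognizer only ever sees `obs`; `states` is the part that stays hidden.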

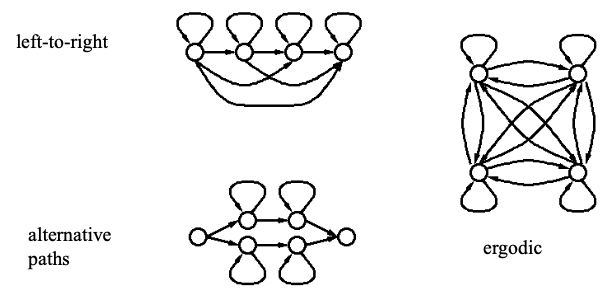

Properties of HMMs

For the initial probabilities: $$ \sum_i \pi(s_i) = 1 $$

- Often simplified by $$ \pi(s_1) = 1, \quad \pi(s_i) = 0 \text{ for } i > 1 $$

For state transition probabilities: $$ \forall i: \sum_j a_{ij} = 1 $$

- Often: $a_{ij} = 0$ for most $j$ except for a few states

When $V = \{x_1, x_2, \dots, x_v\}$, the $b_i$ are discrete probability distributions and the HMMs are called discrete HMMs

When $V = \mathbb{R}^d$, the $b_i$ are continuous probability density functions and the HMMs are called continuous (density) HMMs
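The constraints on $\pi$ and $\mathbf{A}$ are easy to check numerically. The sketch below builds a transition matrix in which each state can only stay or advance to its successor, as one possible instance of "$a_{ij} = 0$ for most $j$". The left-to-right structure, the helper names, and the value `p_stay=0.6` are assumptions for illustration and are not taken from the slides.

```python
import numpy as np

def left_to_right_transitions(n_states, p_stay=0.6):
    """Transition matrix where state i can only stay or move to state i + 1."""
    A = np.zeros((n_states, n_states))
    for i in range(n_states - 1):
        A[i, i] = p_stay
        A[i, i + 1] = 1.0 - p_stay
    A[-1, -1] = 1.0  # the last state can only loop on itself
    return A

def is_valid_hmm(pi, A):
    """Check sum_i pi(s_i) = 1 and sum_j a_ij = 1 for every i."""
    return bool(
        (pi >= 0).all()
        and (A >= 0).all()
        and np.isclose(pi.sum(), 1.0)
        and np.allclose(A.sum(axis=1), 1.0)
    )

A = left_to_right_transitions(4)
pi = np.array([1.0, 0.0, 0.0, 0.0])  # simplification: always start in s_1
print(A)
print(is_valid_hmm(pi, A))
```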

HMM Topologies

The Observation Model

Most popular: Gaussian mixture models over feature vectors $x_t \in \mathbb{R}^d$ $$ P\left(x_{t} \mid s_{j}\right)=\sum_{k=1}^{n_{j}} c_{j k} \cdot \frac{1}{\sqrt{(2 \pi)^{d}\left|\Sigma_{j k}\right|}} e^{-\frac{1}{2}\left(x_{t}-\mu_{j k}\right)^{\mathrm{T}} \Sigma_{j k}^{-1}\left(x_{t}-\mu_{j k}\right)} $$

- $n_j$: number of Gaussians (in state $j$)

- $c_{jk}$: mixture weight for $k$-th Gaussian (in state $j$)

- $\mu_{jk}$: means of $k$-th Gaussian (in state $j$)

- $\Sigma_{jk}$: covariance matrix of $k$-th Gaussian (in state $j$)
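The mixture density for one state can be evaluated directly from these four ingredients. This is a minimal sketch, assuming full covariance matrices and a feature vector of dimension $d$; the function name `gmm_density` and the example parameters are hypothetical.

```python
import numpy as np

def gmm_density(x, weights, means, covs):
    """Evaluate sum_k c_k * N(x; mu_k, Sigma_k) for one HMM state."""
    d = x.shape[0]  # dimension of the feature vector
    total = 0.0
    for c, mu, sigma in zip(weights, means, covs):
        diff = x - mu
        norm = 1.0 / np.sqrt((2.0 * np.pi) ** d * np.linalg.det(sigma))
        # Solve Sigma * y = diff instead of forming the explicit inverse.
        exponent = -0.5 * diff @ np.linalg.solve(sigma, diff)
        total += c * norm * np.exp(exponent)
    return total

# Hypothetical state with two 2-D Gaussians.
weights = [0.4, 0.6]
means = [np.array([0.0, 0.0]), np.array([2.0, 1.0])]
covs = [np.eye(2), np.array([[1.0, 0.3], [0.3, 2.0]])]

print(gmm_density(np.array([1.0, 0.5]), weights, means, covs))
```

In practice, log-densities are usually preferred for numerical stability, especially when $d$ is large.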

Three Main Tasks with HMMs

Given an HMM $\lambda$ and an observation sequence $x_1, x_2, \dots, x_T$

The evaluation problem

compute the probability of the observation $p(x_1, x_2, \dots, x_T | \lambda)$

$\rightarrow$ “Forward Algorithm”
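The forward algorithm sums over all state paths without enumerating them, by carrying forward $\alpha_t(j) = p(x_1, \dots, x_t, q_t = s_j \mid \lambda)$. Below is a minimal sketch for a discrete HMM; the array layout (`B[i, k]` for $b_i(x_k)$) and the example model are assumptions, and no scaling is applied, so it is only suitable for short sequences.

```python
import numpy as np

def forward_likelihood(pi, A, B, obs):
    """Return p(x_1, ..., x_T | lambda) for a discrete HMM.

    obs is a sequence of symbol indices into the columns of B.
    """
    alpha = pi * B[:, obs[0]]              # alpha_1(j) = pi(s_j) * b_j(x_1)
    for x in obs[1:]:
        # alpha_t(j) = sum_i alpha_{t-1}(i) * a_ij * b_j(x_t)
        alpha = (alpha @ A) * B[:, x]
    return alpha.sum()

# Hypothetical two-state, two-symbol model.
pi = np.array([1.0, 0.0])
A = np.array([[0.7, 0.3],
              [0.0, 1.0]])
B = np.array([[0.9, 0.1],
              [0.2, 0.8]])

print(forward_likelihood(pi, A, B, [0, 1]))
```

For isolated gesture recognition, one such likelihood would typically be computed per gesture model and the highest-scoring model chosen.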

The decoding problem

compute the most likely state sequence $s_{q_1}, s_{q_2}, \dots, s_{q_T}$, i.e. $$ \operatorname{argmax}_{q_{1}, \ldots, q_{T}} p\left(q_{1}, \ldots, q_{T} \mid x_{1}, x_{2}, \ldots, x_{T}, \lambda\right) $$ $\rightarrow$ “Viterbi-Algorithm”
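Viterbi decoding replaces the sum of the forward recursion with a maximum and remembers which predecessor achieved it. Since $p(x_1, \dots, x_T \mid \lambda)$ does not depend on the state path, maximizing the joint probability of path and observations gives the same argmax as the posterior above. The sketch below works in the log domain for a discrete HMM; the function name and the example model are assumptions.

```python
import numpy as np

def viterbi(pi, A, B, obs):
    """Return the most likely state index sequence for a discrete HMM."""
    T, n = len(obs), len(pi)
    with np.errstate(divide="ignore"):     # log(0) = -inf is intended here
        log_pi, log_A, log_B = np.log(pi), np.log(A), np.log(B)

    delta = log_pi + log_B[:, obs[0]]      # best log score ending in each state
    back = np.zeros((T, n), dtype=int)     # back[t, j]: best predecessor of j
    for t in range(1, T):
        scores = delta[:, None] + log_A    # scores[i, j]: come from i, go to j
        back[t] = scores.argmax(axis=0)
        delta = scores.max(axis=0) + log_B[:, obs[t]]

    # Trace back from the best final state.
    path = [int(delta.argmax())]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical two-state, two-symbol model.
pi = np.array([1.0, 0.0])
A = np.array([[0.7, 0.3],
              [0.0, 1.0]])
B = np.array([[0.9, 0.1],
              [0.2, 0.8]])

print(viterbi(pi, A, B, [0, 0, 1, 1]))  # [0, 0, 1, 1]
```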

The learning/optimization problem

Find an HMM $\lambda^\prime$ s.t. $p\left(x_{1}, x_{2}, \ldots, x_{T} \mid \lambda^{\prime}\right)>p\left(x_{1}, x_{2}, \ldots, x_{T} \mid \lambda\right)$

$\rightarrow$ “Baum-Welch Algorithm”, “Viterbi-Learning”
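One Baum-Welch iteration re-estimates $\pi$, $\mathbf{A}$ and $B$ from state posteriors computed with a forward and a backward pass; as an EM procedure, it never decreases the likelihood of the training observations. The following is a minimal sketch for a discrete HMM and a single training sequence, without scaling and assuming all parameters are strictly positive; names and example values are hypothetical.

```python
import numpy as np

def forward_backward(pi, A, B, obs):
    """Unscaled forward (alpha) and backward (beta) variables."""
    T, n = len(obs), len(pi)
    alpha = np.zeros((T, n))
    beta = np.ones((T, n))
    alpha[0] = pi * B[:, obs[0]]
    for t in range(1, T):
        alpha[t] = (alpha[t - 1] @ A) * B[:, obs[t]]
    for t in range(T - 2, -1, -1):
        beta[t] = A @ (B[:, obs[t + 1]] * beta[t + 1])
    return alpha, beta

def baum_welch_step(pi, A, B, obs):
    """One re-estimation step; also returns the likelihood under the old model."""
    obs = np.asarray(obs)
    alpha, beta = forward_backward(pi, A, B, obs)
    likelihood = alpha[-1].sum()

    gamma = alpha * beta / likelihood       # gamma[t, i] = P(q_t = s_i | x)
    # xi[t, i, j] = P(q_t = s_i, q_{t+1} = s_j | x)
    xi = (alpha[:-1, :, None] * A[None, :, :]
          * (B[:, obs[1:]].T * beta[1:])[:, None, :]) / likelihood

    new_pi = gamma[0]
    new_A = xi.sum(axis=0) / gamma[:-1].sum(axis=0)[:, None]
    new_B = np.zeros_like(B)
    for k in range(B.shape[1]):
        new_B[:, k] = gamma[obs == k].sum(axis=0)
    new_B /= gamma.sum(axis=0)[:, None]
    return new_pi, new_A, new_B, likelihood

# Hypothetical starting model and training sequence.
pi = np.array([0.6, 0.4])
A = np.array([[0.7, 0.3],
              [0.4, 0.6]])
B = np.array([[0.5, 0.5],
              [0.3, 0.7]])
obs = [0, 0, 1, 0, 1, 1, 1, 0]

pi1, A1, B1, lik0 = baum_welch_step(pi, A, B, obs)
_, _, _, lik1 = baum_welch_step(pi1, A1, B1, obs)
print(lik0, lik1)  # likelihood before and after one re-estimation
```

Practical trainers iterate until the likelihood stops improving, pool statistics over many sequences, and use scaled or log-domain recursions.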

Sign Language Recognition

- American Sign Language (ASL)

- 6000 gestures describe persons, places and things

- Exact meaning and strong rules of context and grammar for each

- Sign recognition

- HMM ideal for complex and structured hand gestures of ASL

Feature extraction

- Camera located in either a 1st-person or a 2nd-person view

- Segment hand blobs by a skin color model

HMM for American Sign Language

Four-State HMM for each word

Training

- Automatic segmentation of sentences into five portions

- Initial estimates by iterative Viterbi-alignment

- Then Baum-Welch re-estimation

- No context used

Recognition

- With and without part-of-speech grammar

- All features / only relative features used

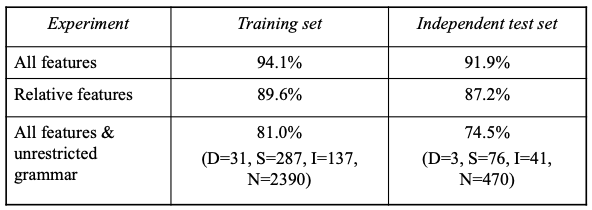

ASL Results

Desk-based

348 training and 94 testing sentences without context

Accuracy: $$ Acc = \frac{N-D-S-I}{N} $$

- $N$: #Words

- $D$: #Deletions

- $S$: #Substitutions

- $I$: #Insertions
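The accuracy formula needs deletion, substitution and insertion counts, which are commonly obtained from a minimum-edit alignment between the reference sentence and the recognized sentence. The sketch below assumes such an alignment; the slides do not state how the counts were produced, and the function names and example sentences are hypothetical.

```python
def align_counts(ref, hyp):
    """Return (deletions, substitutions, insertions) of a minimum-edit alignment."""
    rows, cols = len(ref) + 1, len(hyp) + 1
    # cost[i][j] = (errors, D, S, I) for aligning ref[:i] with hyp[:j]
    cost = [[None] * cols for _ in range(rows)]
    cost[0][0] = (0, 0, 0, 0)
    for i in range(1, rows):
        cost[i][0] = (i, i, 0, 0)          # only deletions
    for j in range(1, cols):
        cost[0][j] = (j, 0, 0, j)          # only insertions
    for i in range(1, rows):
        for j in range(1, cols):
            e, d, s, ins = cost[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                best = (e, d, s, ins)              # match, no error
            else:
                best = (e + 1, d, s + 1, ins)      # substitution
            e, d, s, ins = cost[i - 1][j]
            best = min(best, (e + 1, d + 1, s, ins))   # deletion
            e, d, s, ins = cost[i][j - 1]
            best = min(best, (e + 1, d, s, ins + 1))   # insertion
            cost[i][j] = best
    return cost[-1][-1][1:]

def word_accuracy(ref, hyp):
    """Acc = (N - D - S - I) / N, with N the number of reference words."""
    d, s, i = align_counts(ref, hyp)
    return (len(ref) - d - s - i) / len(ref)

ref = ["a", "b", "c", "d"]
print(word_accuracy(ref, ["a", "c", "d"]))            # one deletion: 0.75
print(word_accuracy(ref, ["a", "b", "x", "d", "e"]))  # one substitution, one insertion: 0.5
```

Because insertions are penalized, this measure can become negative when the recognizer outputs many spurious words.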

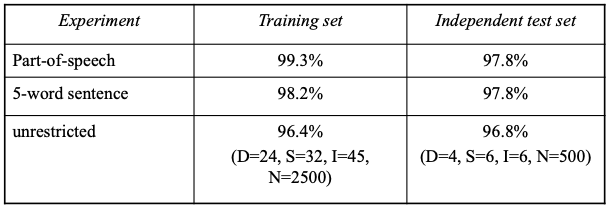

Wearable-based

- 400 training sentences and 100 for testing

- Test 5-word sentences

- Results with restricted and unrestricted grammar are similar!

Pointing Gesture Recognition

Pointing gestures

are used to specify objects and locations

can be needed to resolve ambiguities in verbal statements

Definition: Pointing gesture = movement of the arm towards a pointing target

Tasks

- Detect occurrence of human pointing gestures in natural arm movements

- Extract the 3D pointing direction