Long Short-Term Memory (LSTM)

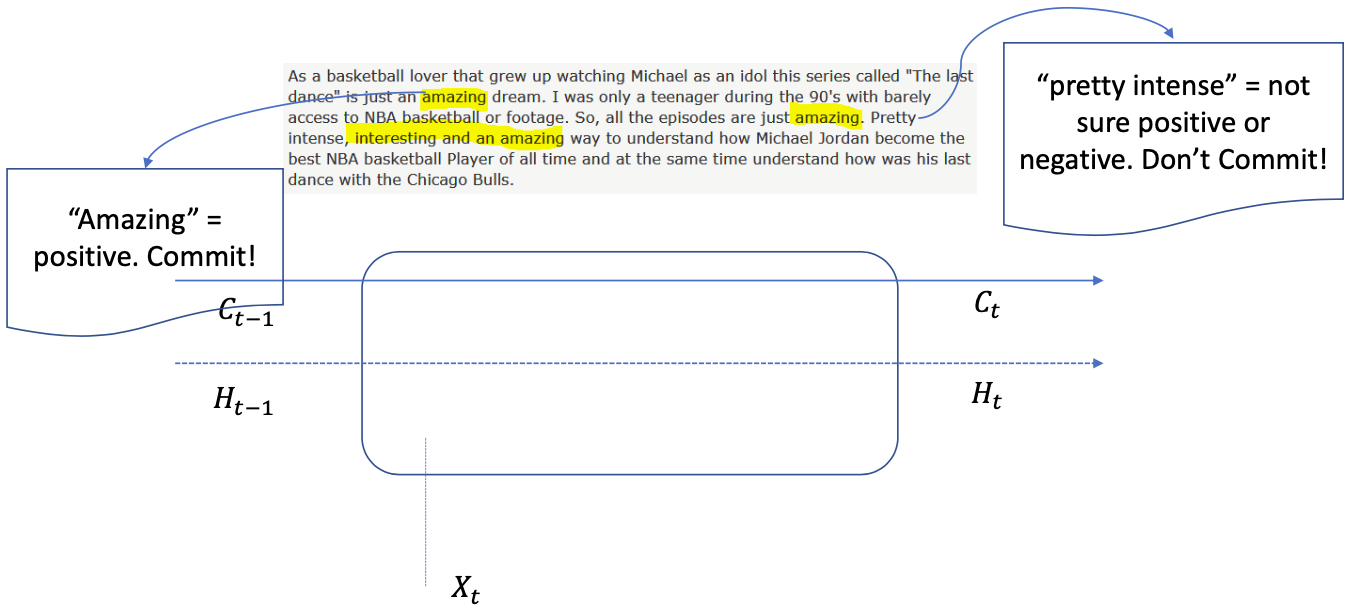

Motivation

- Memory cell

- Inputs are “committed” into memory. Later inputs “erase” earlier inputs

- An additional memory “cell” for long-term memory

- The cell is also read and written at every step, but each step changes it less than it changes $H$

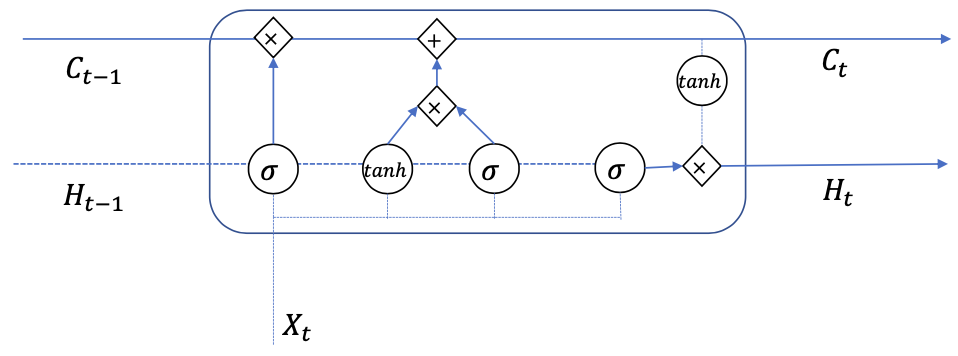

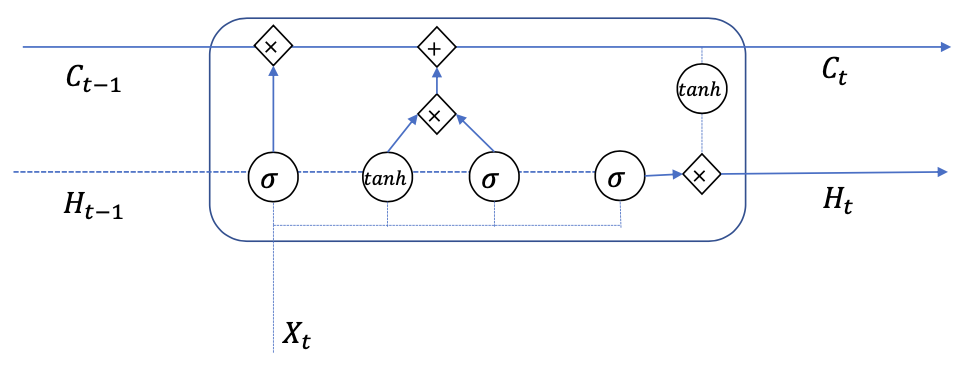

LSTM Operations

- Forget gate

- Input gate

- Candidate content

- Output gate

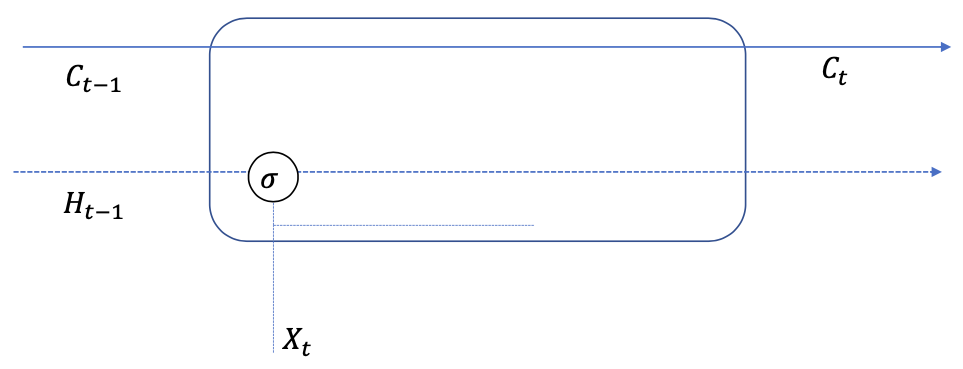

Forget

Forget: remove information from cell $C$

What to forget depends on:

- the current input $X_t$

- the previous memory $H_{t-1}$

Forget gate: controls what should be forgotten $$ F_{t}=\operatorname{sigmoid}\left(W^{F X} X_{t}+W^{F H} H_{t-1}+b^{F}\right) $$

Content kept after forgetting: $$ C = F_t * C_{t-1} $$

- $F_{t,j}$ near 0: Forgetting the content stored in $C_j$
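The forget step can be sketched in plain Python as follows. This is a minimal illustration rather than a reference implementation: the helper names (`sigmoid`, `matvec`, `forget_gate`, `apply_forget`) and the toy weights are assumptions made for the example, and vectors are plain lists so the block needs no external library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]

def forget_gate(W_fx, W_fh, b_f, x_t, h_prev):
    """F_t = sigmoid(W^{FX} X_t + W^{FH} H_{t-1} + b^F), one value per cell unit."""
    zx = matvec(W_fx, x_t)
    zh = matvec(W_fh, h_prev)
    return [sigmoid(a + b + c) for a, b, c in zip(zx, zh, b_f)]

def apply_forget(f_t, c_prev):
    """Elementwise product F_t * C_{t-1}: the part of the old cell that survives."""
    return [f * c for f, c in zip(f_t, c_prev)]

# Hypothetical toy values: 2 cell units, 1 input feature.
W_fx = [[0.0], [0.0]]
W_fh = [[0.0, 0.0], [0.0, 0.0]]
b_f = [-10.0, 10.0]  # unit 0 is pushed towards forgetting, unit 1 towards keeping

f_t = forget_gate(W_fx, W_fh, b_f, x_t=[1.0], h_prev=[0.0, 0.0])
c_kept = apply_forget(f_t, c_prev=[3.0, 3.0])
print(f_t, c_kept)
```

With these toy biases, the first gate value is close to 0 and wipes the first cell unit, while the second is close to 1 and leaves its unit almost unchanged.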

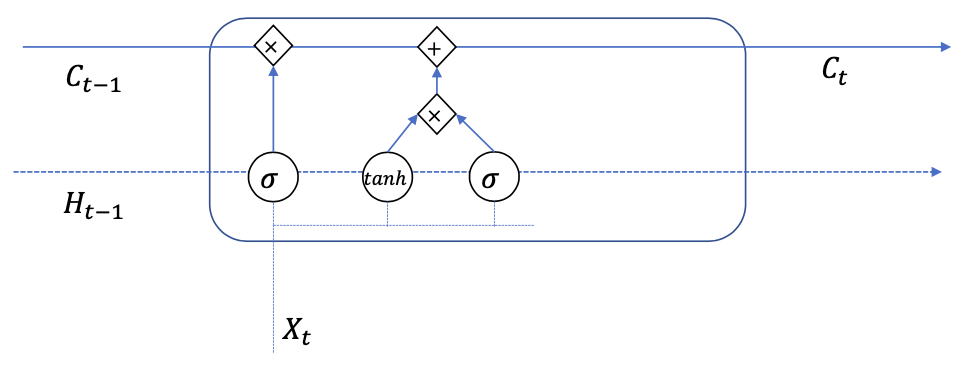

Write

Write: add new information to cell $C$

What to write depends on:

- the current input $X_t$

- the previous memory $H_{t-1}$

Input gate: controls what should be added $$ I_{t}=\operatorname{sigmoid}\left(W^{I X} X_{t}+W^{I H} H_{t-1}+b^{I}\right) $$

Content to write: $$ \tilde{C}_{t}=\tanh \left(W^{C X} X_{t}+W^{C H} H_{t-1}+b^{C}\right) $$

Write content: $$ C_{t}=F_{t} * C_{t-1}+I_{t} * \tilde{C}_{t} $$
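As a rough sketch of the write step, the snippet below computes the input gate and the candidate content, then adds the gated candidate to the cell. It assumes the forget step has already been applied to the cell content that is passed in. The function names, the `params` dictionary layout and the toy numbers are hypothetical choices for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W_x, W_h, b, x_t, h_prev):
    """Compute W_x x_t + W_h h_prev + b with matrices stored as lists of rows."""
    return [
        sum(w * v for w, v in zip(row_x, x_t))
        + sum(w * v for w, v in zip(row_h, h_prev))
        + bias
        for row_x, row_h, bias in zip(W_x, W_h, b)
    ]

def write_step(params, x_t, h_prev, c_after_forget):
    """Add gated candidate content to the cell that is left after forgetting."""
    # Input gate I_t: how much of each candidate value is let into the cell.
    i_t = [sigmoid(z) for z in
           affine(params["W_ix"], params["W_ih"], params["b_i"], x_t, h_prev)]
    # Candidate content C~_t: values in (-1, 1) proposed for writing.
    c_tilde = [math.tanh(z) for z in
               affine(params["W_cx"], params["W_ch"], params["b_c"], x_t, h_prev)]
    c_t = [c + i * g for c, i, g in zip(c_after_forget, i_t, c_tilde)]
    return i_t, c_tilde, c_t

# Hypothetical toy values: one cell unit, one input feature.
params = {
    "W_ix": [[0.0]], "W_ih": [[0.0]], "b_i": [0.0],   # gate stays at sigmoid(0) = 0.5
    "W_cx": [[1.0]], "W_ch": [[0.0]], "b_c": [0.0],   # candidate is tanh(x_t)
}
i_t, c_tilde, c_t = write_step(params, x_t=[2.0], h_prev=[0.0], c_after_forget=[1.0])
print(i_t, c_tilde, c_t)
```

Because the candidate passes through $\tanh$ and the gate through a sigmoid, a single step can change each cell unit by less than 1 in magnitude.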

Output

Output: Reading information from cell $C$ (to store in the current state $H$)

How much to output depends on:

- the current input $X_t$

- the previous memory $H_{t-1}$

Output gate: controls what should be output $$ O_{t}=\operatorname{sigmoid}\left(W^{OX} X_{t}+W^{OH} H_{t-1}+b^{O}\right) $$

New state $$ H_t = O_t * \operatorname{tanh}(C_t) $$
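Putting forget, write and output together, one full LSTM time step might look like the sketch below. It is a dependency-free illustration under stated assumptions: `lstm_step`, `affine` and `random_params` are names invented for this example, the parameter layout (a dictionary mapping `F`, `I`, `C`, `O` to weight/bias triples) is arbitrary, and the initializer is a hypothetical one, not a recommended scheme.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(gate, x_t, h_prev):
    """W_x x_t + W_h h_prev + b for one gate, with matrices stored as lists of rows."""
    W_x, W_h, b = gate
    return [
        sum(w * v for w, v in zip(row_x, x_t))
        + sum(w * v for w, v in zip(row_h, h_prev))
        + bias
        for row_x, row_h, bias in zip(W_x, W_h, b)
    ]

def lstm_step(params, x_t, h_prev, c_prev):
    """One LSTM time step; params maps 'F', 'I', 'C', 'O' to (W_x, W_h, b)."""
    f_t = [sigmoid(z) for z in affine(params["F"], x_t, h_prev)]        # forget gate
    i_t = [sigmoid(z) for z in affine(params["I"], x_t, h_prev)]        # input gate
    c_tilde = [math.tanh(z) for z in affine(params["C"], x_t, h_prev)]  # candidate content
    o_t = [sigmoid(z) for z in affine(params["O"], x_t, h_prev)]        # output gate
    # Forget part of the old cell, then write the gated candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]
    # Read from the cell into the new state H_t.
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t

def random_params(n_in, n_hid, rng):
    """Hypothetical initializer: small uniform weights, zero biases."""
    def gate():
        W_x = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)]
        W_h = [[rng.uniform(-0.5, 0.5) for _ in range(n_hid)] for _ in range(n_hid)]
        return W_x, W_h, [0.0] * n_hid
    return {name: gate() for name in "FICO"}

rng = random.Random(0)
params = random_params(n_in=2, n_hid=3, rng=rng)
h, c = [0.0] * 3, [0.0] * 3
for x_t in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:  # toy input sequence
    h, c = lstm_step(params, x_t, h, c)
print(h, c)
```

Note that $H_t$ is always bounded in $(-1, 1)$ because it is a sigmoid times a $\tanh$, whereas the cell $C_t$ can accumulate larger values over time.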

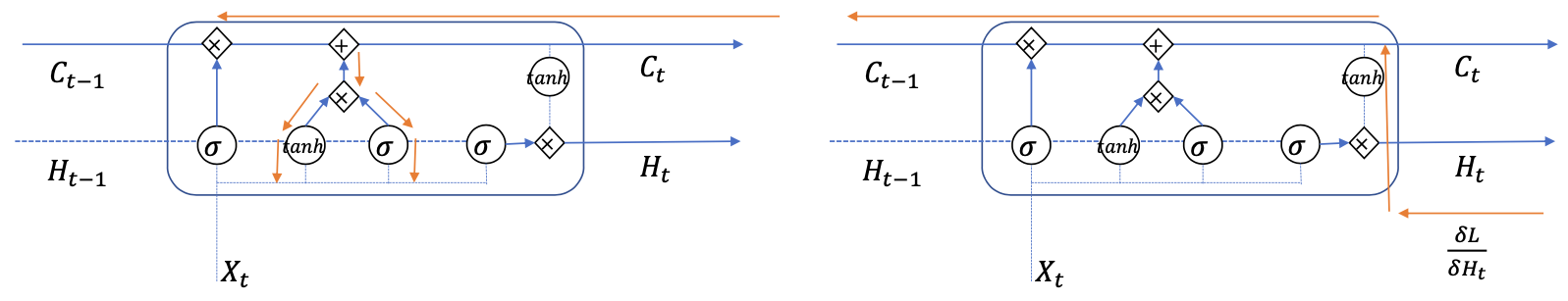

LSTM Gradients

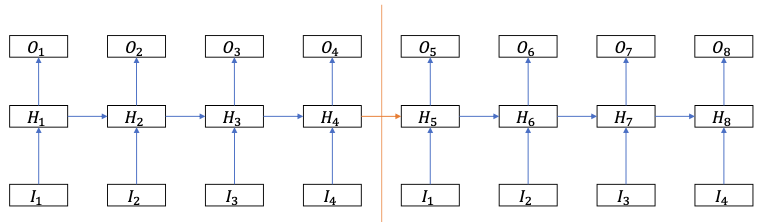

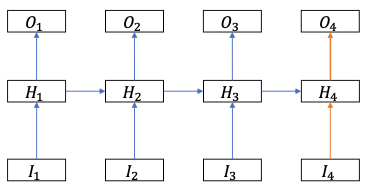

Truncated Backpropagation

What happens if the sequence is very long (e.g. character sequences, DNA sequences, video frame sequences)? $\to$ Backpropagation through time becomes prohibitively expensive for large $T$

Solution: Truncated Backpropagation

- Divide the sequence into segments and truncate the gradient between segments

- However, the memory is carried over from one segment to the next (rather than being reset), so some information about the past is retained
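The idea can be illustrated on a toy scalar recurrent unit with hand-written gradients. This is only a sketch under simplifying assumptions: the model $h_t = \tanh(w h_{t-1} + u x_t)$, the squared-error loss and all function names are hypothetical stand-ins chosen to keep the example short, not the LSTM itself. The state is carried across segments, but the backward pass stops at each segment boundary.

```python
import math

def forward_segment(w, u, h0, xs):
    """Run h_t = tanh(w * h_{t-1} + u * x_t) over one segment; return all states."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs  # hs[0] is the carried-in state, hs[1:] are the segment's states

def backward_segment(w, hs, ys):
    """Gradient of sum_t 0.5 * (h_t - y_t)^2 w.r.t. w, backpropagated inside the segment only."""
    grad_w = 0.0
    dh = 0.0  # gradient arriving from later steps of the same segment
    for t in range(len(ys), 0, -1):
        dh += hs[t] - ys[t - 1]        # local loss gradient at step t
        dz = dh * (1.0 - hs[t] ** 2)   # back through tanh
        grad_w += dz * hs[t - 1]
        dh = dz * w                    # pass the gradient on to step t-1
    return grad_w  # the remaining dh is dropped: nothing flows into the previous segment

def truncated_bptt_grad(w, u, xs, ys, seg_len):
    """Sum of per-segment gradients, with the state carried across segments."""
    h = 0.0
    total = 0.0
    for start in range(0, len(xs), seg_len):
        seg_x = xs[start:start + seg_len]
        seg_y = ys[start:start + seg_len]
        hs = forward_segment(w, u, h, seg_x)
        total += backward_segment(w, hs, seg_y)
        h = hs[-1]  # keep the memory, but treat it as a constant in the next segment
    return total

# Hypothetical toy data.
xs = [0.5, -1.0, 0.3, 0.8, -0.2, 0.1]
ys = [0.0, 0.1, -0.1, 0.2, 0.0, 0.3]
grad_full = truncated_bptt_grad(0.7, 0.4, xs, ys, seg_len=len(xs))  # ordinary BPTT
grad_trunc = truncated_bptt_grad(0.7, 0.4, xs, ys, seg_len=2)       # truncated every 2 steps
print(grad_full, grad_trunc)
```

With `seg_len` equal to the sequence length this reduces to ordinary backpropagation through time. Shorter segments bound the cost of each backward pass at the price of ignoring gradient contributions that cross segment boundaries. In an automatic differentiation framework, the same effect is usually obtained by detaching the carried state from the computation graph between segments.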

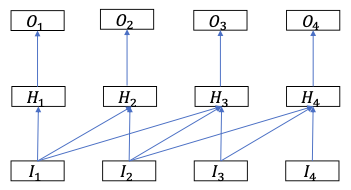

TDNN vs. LSTM

| | TDNN | LSTM |
|---|---|---|
| | For $t = 1,\dots, T$: $H_{t}=f\left(W_{1} I_{t-D}+W_{2} I_{t-D+1}+W_{3} I_{t-D+2}+\cdots+W_{D} I_{t}\right)$ | For $t = 1,\dots, T$: $H_{t}=f\left(W^{I} I_{t}+W^{H} H_{t-1}+b\right)$ |
| Weights are shared over time? | Yes | Yes |
| Handle variable length sequences | Can flexibly adapt to variable length sequences without changing structures | |
| Gradient vanishing or exploding problem? | No | Yes |
| Parallelism? | Can be parallelized into $O(1)$ (assuming matrix multiplication cost is $O(1)$ thanks to GPUs) | Sequential computation (because $H_T$ cannot be computed before $H_{T-1}$) |
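The parallelism contrast can be made concrete with a scalar toy version of both formulas. The sketch below rests on assumptions: the function names are invented, the TDNN window is taken to be the last $D$ inputs ending at $t$ with zero padding at the start, and $f$ is fixed to $\tanh$.

```python
import math

def tdnn_outputs(inputs, weights):
    """Each H_t depends only on a fixed window of inputs, so the T outputs are
    independent of one another and could be computed in parallel."""
    D = len(weights)
    padded = [0.0] * (D - 1) + list(inputs)  # zero-pad so every t has a full window
    return [
        math.tanh(sum(w * x for w, x in zip(weights, padded[t:t + D])))
        for t in range(len(inputs))
    ]

def recurrent_outputs(inputs, w_in, w_h, b=0.0):
    """H_t = f(W^I I_t + W^H H_{t-1} + b): step t needs H_{t-1}, so the loop is sequential."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w_in * x + w_h * h + b)
        outputs.append(h)
    return outputs

# Hypothetical toy sequence and weights; both functions accept any sequence length.
seq = [1.0, 2.0, 3.0, 4.0]
print(tdnn_outputs(seq, weights=[0.5, 0.5]))
print(recurrent_outputs(seq, w_in=0.5, w_h=0.5))
```

In the TDNN version, changing an input outside the window of step $t$ leaves $H_t$ untouched, while in the recurrent version every earlier input can influence $H_t$ through the chain of states.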