Tracking 2

Multi-Camera Systems

Types of multi-camera systems

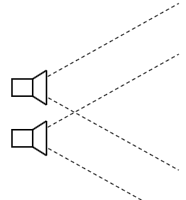

Stereo-camera system (narrow baseline)

- Close distance and equal orientation

- An object’s appearance is almost the same in both cameras

- Allows for calculation of a dense disparity map

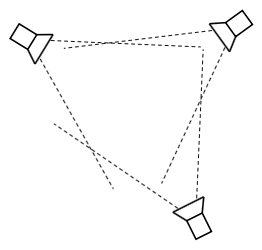

Wide-baseline multi-camera system

- Arbitrary distance and orientation, overlapping field of view

- An object’s appearance is different in each of the cameras

- Allows for 3D localization of objects in the joint field of view

Multi-camera network

- Non-overlapping field of view

- An object’s appearance differs strongly from one camera to another

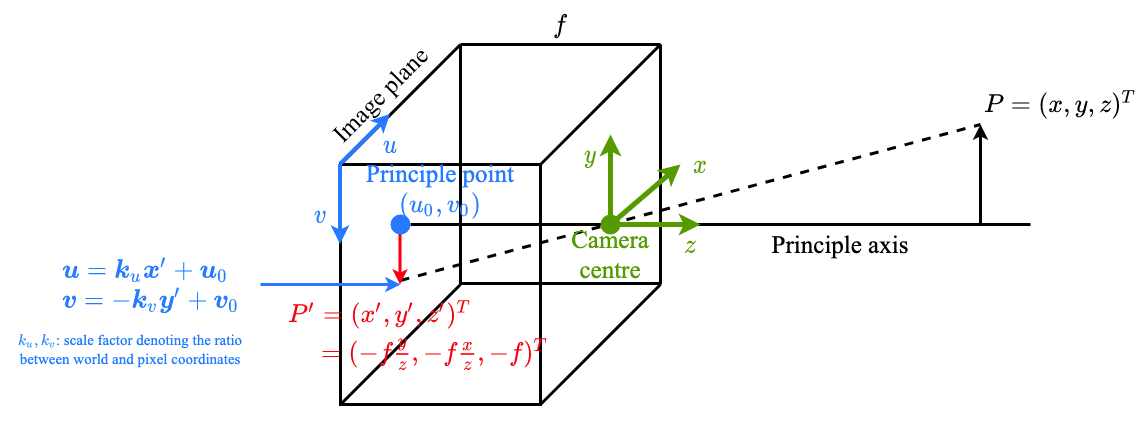

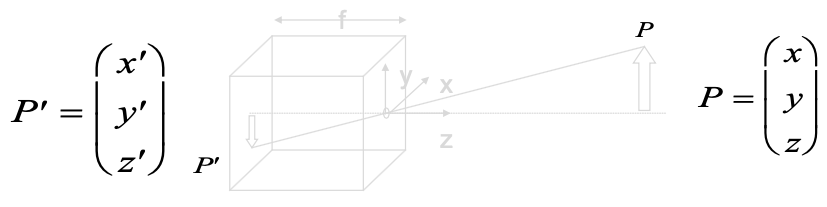

3D to 2D projection: Pinhole Camera Model

Summary:

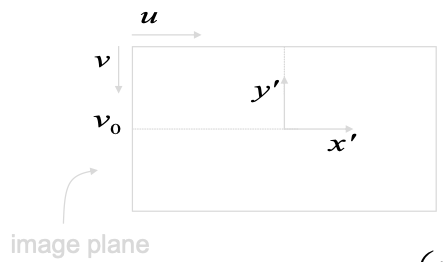

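Pixel coordinates $(u, v)$ of a point $(X_c, Y_c, Z_c)$ (camera coordinates) projected onto the image plane:

$$u = k_u f \frac{X_c}{Z_c} + c_x, \qquad v = k_v f \frac{Y_c}{Z_c} + c_y$$

where $k_u$ and $k_v$ are scaling factors which denote the ratio between world and pixel coordinates, $f$ is the focal length and $(c_x, c_y)$ is the principal point.

In matrix formulation:

$$Z_c \begin{pmatrix} u \\ v \\ 1 \end{pmatrix} = \begin{pmatrix} k_u f & 0 & c_x \\ 0 & k_v f & c_y \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} X_c \\ Y_c \\ Z_c \end{pmatrix}$$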
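The projection can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the parameter names (`f`, `k_u`, `k_v`, `c_x`, `c_y`) and all numeric values are assumptions chosen for the example, and lens distortion is ignored.

```python
import numpy as np

def project_pinhole(points_cam, f, k_u, k_v, c_x, c_y):
    """Project 3D points (camera coordinates, shape (N, 3)) to pixel coordinates (N, 2)."""
    points_cam = np.asarray(points_cam, dtype=float)
    # Intrinsic matrix built from focal length, scaling factors and principal point
    K = np.array([[k_u * f, 0.0, c_x],
                  [0.0, k_v * f, c_y],
                  [0.0, 0.0, 1.0]])
    homogeneous = points_cam @ K.T            # each row is (Z*u, Z*v, Z)
    return homogeneous[:, :2] / homogeneous[:, 2:3]   # perspective division by Z

# Hypothetical camera: 4 mm focal length, 250000 pixels per metre, 640x480 image
pixels = project_pinhole([[0.0, 0.0, 2.0], [0.5, -0.25, 2.0]],
                         f=0.004, k_u=250000.0, k_v=250000.0,
                         c_x=320.0, c_y=240.0)
```

A point on the optical axis lands on the principal point; points off the axis are shifted in proportion to their lateral offset divided by their depth.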

Perspective Projection

Internal camera parameters

- Have to be known to perform the projection

- Depend on the camera only

- Are estimated by calibration

Calibration

Intrinsic parameters: describe the optical properties of each camera (“the camera model”)

- $f$: focal length

- $(c_x, c_y)$: the principal point (“optical center”), sometimes also denoted as $(x_0, y_0)$

- $k_1, k_2, \dots$ and $p_1, p_2$: distortion parameters (radial and tangential)
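The notes do not say which distortion model is used. As an illustration only, the sketch below applies a commonly used polynomial model (Brown-Conrady, the form also used by OpenCV) with two radial coefficients `k1`, `k2` and two tangential coefficients `p1`, `p2`. These names and the choice of model are assumptions. The input is in normalized image coordinates, i.e. $x = X_c / Z_c$, $y = Y_c / Z_c$, before the intrinsic matrix is applied.

```python
import numpy as np

def distort(xy, k1, k2, p1, p2):
    """Apply radial (k1, k2) and tangential (p1, p2) distortion to normalized coordinates (N, 2)."""
    xy = np.asarray(xy, dtype=float)
    x, y = xy[:, 0], xy[:, 1]
    r2 = x**2 + y**2                                  # squared distance from the optical axis
    radial = 1.0 + k1 * r2 + k2 * r2**2               # radial scaling factor
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x**2)
    y_d = y * radial + p1 * (r2 + 2.0 * y**2) + 2.0 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)

# Hypothetical coefficients: mild barrel distortion, no tangential part
distorted = distort([[0.5, 0.0], [0.0, 0.0]], k1=-0.2, k2=0.0, p1=0.0, p2=0.0)
```

With all coefficients set to zero the function returns its input unchanged, which corresponds to the ideal pinhole model.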

Extrinsic parameters: describe the location of each camera with respect to a global coordinate system

- $t$: translation vector

- $R$: rotation matrix

Transformation of the world coordinates $p_w$ of a point to camera coordinates $p_c$:

$$p_c = R \, p_w + t$$
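A minimal sketch of the world-to-camera transformation, assuming the common convention $p_c = R \, p_w + t$ (other texts use $p_c = R (p_w - t)$, so the convention should be checked against the calibration tool in use). The function names and the example pose are hypothetical.

```python
import numpy as np

def world_to_camera(points_world, R, t):
    """Transform points (N, 3) from world to camera coordinates: p_c = R p_w + t."""
    points_world = np.asarray(points_world, dtype=float)
    return points_world @ R.T + t

def camera_center(R, t):
    """Camera position in world coordinates, i.e. the point that maps to p_c = 0."""
    return -R.T @ t

# Hypothetical pose: rotation by 90 degrees about the z axis, shift of 5 units along z
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([0.0, 0.0, 5.0])

p_c = world_to_camera([[1.0, 0.0, 0.0]], R, t)
C = camera_center(R, t)
```

Under this convention $t$ is not the camera position itself; the camera center in world coordinates is $-R^\top t$.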

Calibration steps

- For each camera: A calibration target with a known geometry is captured from multiple views

- The corner points are extracted (semi-)automatically

- The locations of the corner points are used to estimate the intrinsics iteratively

- Once the intrinsics are known, a fixed calibration target is captured from all of the cameras to estimate the extrinsics
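The last step can be illustrated with a simple linear method. The sketch below is not necessarily the procedure used in the lecture: it assumes a planar target (all corner points at $Z = 0$ in target coordinates), known intrinsics `K`, no distortion, and the convention $p_c = R \, p_w + t$. It estimates the homography between target plane and image with the Direct Linear Transform and decomposes it into rotation and translation. All names and values are hypothetical.

```python
import numpy as np

def estimate_homography(target_xy, pixels_uv):
    """DLT estimate of H (3x3, up to scale) such that (u, v, 1) ~ H @ (X, Y, 1)."""
    rows = []
    for (X, Y), (u, v) in zip(target_xy, pixels_uv):
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u])
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y, -v])
    # Right singular vector of the smallest singular value solves A h = 0 in the least-squares sense
    _, _, Vt = np.linalg.svd(np.array(rows))
    return Vt[-1].reshape(3, 3)

def extrinsics_from_planar_target(K, target_xy, pixels_uv):
    """Recover R, t of one camera from a planar target, given the intrinsics K."""
    H = estimate_homography(target_xy, pixels_uv)
    B = np.linalg.inv(K) @ H                  # proportional to [r1 r2 t]
    scale = 1.0 / np.linalg.norm(B[:, 0])     # r1 must have unit length
    if B[2, 2] < 0:                           # target lies in front of the camera (t_z > 0)
        scale = -scale
    r1, r2, t = scale * B[:, 0], scale * B[:, 1], scale * B[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)               # nearest rotation matrix, absorbs noise
    return U @ Vt, t

# Synthetic check: project a 4x3 grid of target corners with a known pose
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
angle = 0.3
R_true = np.array([[np.cos(angle), 0.0, np.sin(angle)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(angle), 0.0, np.cos(angle)]])
t_true = np.array([0.1, -0.05, 2.0])
target_xy = np.array([[x, y] for x in (0.0, 0.1, 0.2, 0.3) for y in (0.0, 0.1, 0.2)])
target_xyz = np.column_stack([target_xy, np.zeros(len(target_xy))])
homogeneous = (target_xyz @ R_true.T + t_true) @ K.T
pixels_uv = homogeneous[:, :2] / homogeneous[:, 2:3]

R_est, t_est = extrinsics_from_planar_target(K, target_xy, pixels_uv)
```

Because every camera observes the same fixed target, the recovered poses are all expressed relative to the target, which then serves as the global coordinate system. In practice the linear estimate is usually refined by minimizing the reprojection error.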

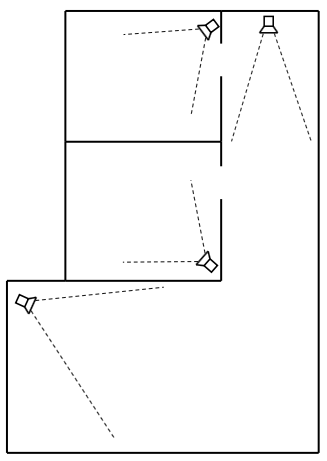

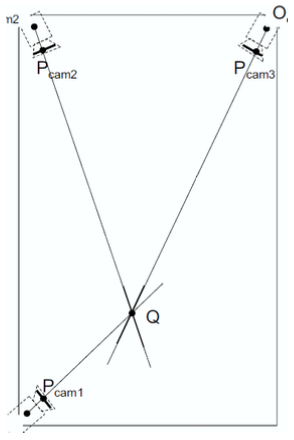

Triangulation

- Assumption: the object location is known in multiple views

- Ideally: The intersection of the lines-of-view determines the 3D location

- Practically: least-squares approximation
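With noisy detections the lines-of-view generally do not intersect. One possible least-squares formulation, sketched below, finds the 3D point with the smallest sum of squared distances to all lines. Each line is given by the camera center and a viewing direction; under the convention $p_c = R \, p_w + t$ these could be obtained as $-R^\top t$ and $R^\top K^{-1} (u, v, 1)^\top$, which is an assumption and not stated in the notes. The function name is hypothetical.

```python
import numpy as np

def triangulate_rays(origins, directions):
    """Least-squares 3D point closest to a set of lines (origins and directions, shape (N, 3))."""
    origins = np.asarray(origins, dtype=float)
    directions = np.asarray(directions, dtype=float)
    directions = directions / np.linalg.norm(directions, axis=1, keepdims=True)
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, d in zip(origins, directions):
        P = np.eye(3) - np.outer(d, d)   # projector onto the plane orthogonal to the line
        A += P
        b += P @ o
    # Normal equations of: minimize sum_i || P_i (x - o_i) ||^2
    return np.linalg.solve(A, b)

# Ideal case: two lines-of-view that intersect exactly at the target
target = np.array([0.5, 0.2, 3.0])
centers = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
point = triangulate_rays(centers, target - centers)

# Practical case: two skew lines, the result is the midpoint of their closest approach
skew_point = triangulate_rays([[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
                              [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
```

The linear system is singular if all lines are parallel, so at least two views with different viewing directions are needed.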