Gesture Recognition

Introduction

Gesture

- a movement usually of the body or limbs that expresses or emphasizes an idea, sentiment, or attitude

- the use of motions of the limbs or body as a means of expression

Automatic Gesture Recognition

- A gesture recognition system generates a semantic description for certain body motions

- Gesture recognition exploits the power of non-verbal communication, which is very common in human-human interaction

- Gesture recognition is often built on top of a human motion tracker

Applications

- Multimodal Interaction

- Gestures + Speech recognition

- Gestures + gaze

- Human-Robot Interaction

- Interaction with Smart Environments

- Understanding Human Interaction

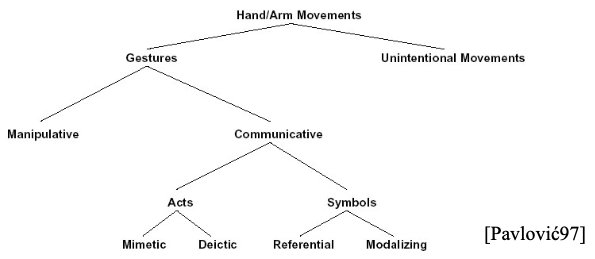

Types of Gestures

Hand & arm gestures

- Pointing Gestures

- Sign Language

Head gestures

- Nodding, head shaking, turning, pointing

Body gestures

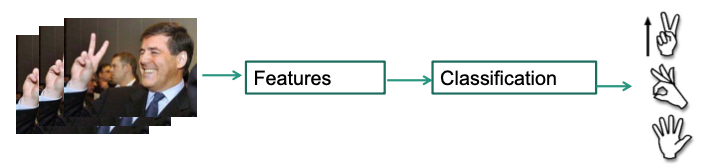

Automatic Gesture Recognition

- Feature Acquisition

- Appearance based: Markers, color, motion, shape, segmentation, stereo, local descriptors, space-time interest points, …

- Model based: body- or hand-models

- Classifiers

- SVM, ANN, HMMs, AdaBoost, decision trees, deep learning, …

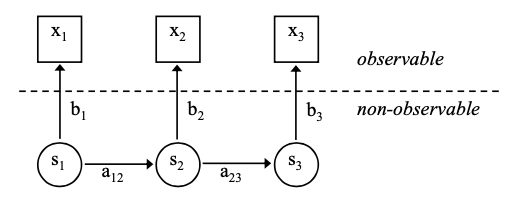

Hidden Markov Models (HMMs) for Gesture Recognition

“hidden”: only the observations are seen; conclusions are drawn WITHOUT knowing the underlying sequence of states

Markov assumption (1st order): the next state depends ONLY on the current state (not on the complete state history)

A Hidden Markov Model is a five-tuple $(S, \pi, A, B, V)$

- $S = \{s_1, \dots, s_n\}$: set of states

- $\pi$: the initial probability distribution

- $\pi(s_i)$ = probability of $s_i$ being the first state of a state sequence

- $A = (a_{ij})$: the matrix of state transition probabilities

- $a_{ij}$: probability of state $s_j$ following $s_i$

- $B = \{b_1, \dots, b_n\}$: the set of emission probability distributions/densities

- $b_i(x)$: probability of observing $x$ when the system is in state $s_i$

- $V$: the observable feature space

- Can be discrete ($V = \{x_1, \dots, x_v\}$) or continuous ($V = \mathbb{R}^d$)

Properties of HMMs

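For the initial probabilities: $\sum_{i} \pi(s_i) = 1$

- Often simplified by $\pi(s_1) = 1$ and $\pi(s_i) = 0$ for $i > 1$

For state transition probabilities: $\sum_{j} a_{ij} = 1$ for all $i$

- Often: $a_{ij} = 0$ for most $j$ except for a few states

When $V$ is discrete, the $b_i$ are discrete probability distributions and the HMMs are called discrete HMMs

When $V = \mathbb{R}^d$, the $b_i$ are continuous probability density functions and the HMMs are called continuous (density) HMMs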
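A minimal sketch of how a discrete HMM and the constraints above could be represented in code. The class name `DiscreteHMM`, the field names `pi`, `A`, `B`, and the example numbers are illustrative assumptions, not part of the lecture material.

```python
from dataclasses import dataclass
from math import isclose
from typing import List


@dataclass
class DiscreteHMM:
    """Hypothetical container for a discrete HMM (names are assumptions)."""
    pi: List[float]        # pi[i]   = P(first state is i)
    A: List[List[float]]   # A[i][j] = P(next state is j | current state is i)
    B: List[List[float]]   # B[i][k] = P(observing symbol k | state i)

    def validate(self) -> None:
        """Raise ValueError if shapes disagree or a distribution does not sum to 1."""
        n = len(self.pi)
        if len(self.A) != n or len(self.B) != n or any(len(row) != n for row in self.A):
            raise ValueError("pi, A and B must agree on the number of states")
        for dist in [self.pi, *self.A, *self.B]:
            if any(p < 0.0 for p in dist) or not isclose(sum(dist), 1.0, abs_tol=1e-9):
                raise ValueError(f"not a probability distribution: {dist}")


def make_left_to_right_hmm() -> DiscreteHMM:
    """Three-state example: always starts in state 0, most transitions are zero."""
    hmm = DiscreteHMM(
        pi=[1.0, 0.0, 0.0],
        A=[[0.6, 0.4, 0.0],
           [0.0, 0.7, 0.3],
           [0.0, 0.0, 1.0]],
        B=[[0.8, 0.2],
           [0.5, 0.5],
           [0.1, 0.9]],
    )
    hmm.validate()
    return hmm
```

The example uses the common simplifications: all initial probability mass sits on the first state, and each state can only stay where it is or move one state forward.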

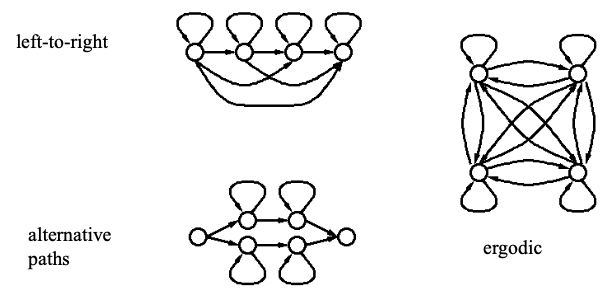

HMM Topologies

The Observation Model

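Most popular: Gaussian mixture models

$b_i(x) = \sum_{k=1}^{K_i} c_{ik} \, \mathcal{N}(x \mid \mu_{ik}, \Sigma_{ik})$

- $K_i$: number of Gaussians (in state $s_i$)

- $c_{ik}$: mixture weight for $k$-th Gaussian (in state $s_i$)

- $\mu_{ik}$: mean of $k$-th Gaussian (in state $s_i$)

- $\Sigma_{ik}$: covariance matrix of $k$-th Gaussian (in state $s_i$)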
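The following sketch evaluates a Gaussian mixture emission density for one state. To stay dependency-free it assumes diagonal covariance matrices, which is a simplification not stated in the slides; the function names are hypothetical.

```python
from math import exp, log, pi
from typing import Sequence


def diag_gaussian_pdf(x: Sequence[float],
                      mean: Sequence[float],
                      var: Sequence[float]) -> float:
    """Density of a Gaussian with diagonal covariance (per-dimension variances in `var`)."""
    log_p = 0.0
    for xd, md, vd in zip(x, mean, var):
        log_p += -0.5 * (log(2.0 * pi * vd) + (xd - md) ** 2 / vd)
    return exp(log_p)


def gmm_emission(x: Sequence[float],
                 weights: Sequence[float],
                 means: Sequence[Sequence[float]],
                 variances: Sequence[Sequence[float]]) -> float:
    """Weighted sum of the component densities for one HMM state."""
    return sum(c * diag_gaussian_pdf(x, m, v)
               for c, m, v in zip(weights, means, variances))


# Two-component mixture over 2-D features (all numbers are made up).
density = gmm_emission(
    x=[0.2, -0.1],
    weights=[0.3, 0.7],
    means=[[0.0, 0.0], [1.0, 1.0]],
    variances=[[1.0, 1.0], [0.5, 0.5]],
)
```

In practice the log-density is usually kept instead of exponentiating, since products of many small densities underflow quickly.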

Three Main Tasks with HMMs

Given an HMM $\lambda$ and an observation sequence $x_1, \dots, x_T$

The evaluation problem

compute the probability of the observation, $P(x_1, \dots, x_T \mid \lambda)$

“Forward Algorithm”
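A minimal sketch of the forward algorithm for a discrete HMM. It assumes the model is given as plain lists `pi` (initial probabilities), `A` (transition matrix) and `B` (emission matrix, one row per state, one column per symbol); these names and the toy numbers are illustrative.

```python
from typing import List, Sequence


def forward_probability(pi: Sequence[float],
                        A: Sequence[Sequence[float]],
                        B: Sequence[Sequence[float]],
                        obs: Sequence[int]) -> float:
    """Return P(obs | model) by summing over all state sequences."""
    if not obs:
        raise ValueError("observation sequence must not be empty")
    n = len(pi)
    # alpha[i] = P(observations so far, current state = i)
    alpha: List[float] = [pi[i] * B[i][obs[0]] for i in range(n)]
    for symbol in obs[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n)) * B[j][symbol]
            for j in range(n)
        ]
    return sum(alpha)


# Toy two-state model with two observation symbols (numbers are made up).
pi = [0.6, 0.4]
A = [[0.7, 0.3],
     [0.4, 0.6]]
B = [[0.5, 0.5],
     [0.1, 0.9]]
likelihood = forward_probability(pi, A, B, [0, 1, 1])
```

This unscaled version underflows for long sequences; real implementations typically rescale `alpha` at every step or work in the log domain.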

The decoding problem

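compute the most likely state sequence $q_1^*, \dots, q_T^*$, i.e. $\operatorname{argmax}_{q_1, \dots, q_T} P(q_1, \dots, q_T \mid x_1, \dots, x_T, \lambda)$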

“Viterbi-Algorithm”
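A minimal sketch of Viterbi decoding for a discrete HMM, under the same assumed representation: lists `pi`, `A` and `B` indexed by state (and symbol for `B`). The names and the example model are illustrative.

```python
from typing import List, Sequence, Tuple


def viterbi(pi: Sequence[float],
            A: Sequence[Sequence[float]],
            B: Sequence[Sequence[float]],
            obs: Sequence[int]) -> Tuple[List[int], float]:
    """Return the most likely state sequence and its joint probability with obs."""
    if not obs:
        raise ValueError("observation sequence must not be empty")
    n = len(pi)
    # delta[j] = probability of the best path that ends in state j
    delta = [pi[i] * B[i][obs[0]] for i in range(n)]
    backpointers: List[List[int]] = []
    for symbol in obs[1:]:
        prev = delta
        best_prev = [max(range(n), key=lambda i: prev[i] * A[i][j]) for j in range(n)]
        delta = [prev[best_prev[j]] * A[best_prev[j]][j] * B[j][symbol] for j in range(n)]
        backpointers.append(best_prev)
    # Trace the best path backwards from the best final state.
    state = max(range(n), key=lambda i: delta[i])
    best_prob = delta[state]
    path = [state]
    for best_prev in reversed(backpointers):
        state = best_prev[state]
        path.append(state)
    path.reverse()
    return path, best_prob


# Left-to-right toy model: state 0 mostly emits symbol 0, state 1 mostly symbol 1.
path, prob = viterbi(
    pi=[1.0, 0.0],
    A=[[0.5, 0.5], [0.0, 1.0]],
    B=[[0.9, 0.1], [0.1, 0.9]],
    obs=[0, 0, 1, 1],
)
# path == [0, 0, 1, 1]
```

The only change relative to the forward recursion is that the sum over predecessor states becomes a maximum, plus the backpointers needed to recover the path.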

The learning/optimization problem

Find an HMM $\lambda'$ s.t. $P(x_1, \dots, x_T \mid \lambda') > P(x_1, \dots, x_T \mid \lambda)$

“Baum-Welch Algorithm”, “Viterbi Learning”

Sign Language Recognition

- American Sign Language (ASL)

- 6000 gestures describe persons, places and things

- Exact meaning and strong rules of context and grammar for each

- Sign recognition

- HMM ideal for complex and structured hand gestures of ASL

Feature extraction

- Camera located in either a 1st-person or a 2nd-person view

- Segment hand blobs by a skin color model

HMM for American Sign Language

Four-State HMM for each word

Training

- Automatic segmentation of sentences into five portions

- Initial estimates by iterative Viterbi-alignment

- Then Baum-Welch re-estimation

- No context used

Recognition

- With and without part-of-speech grammar

- All features / only relative features used

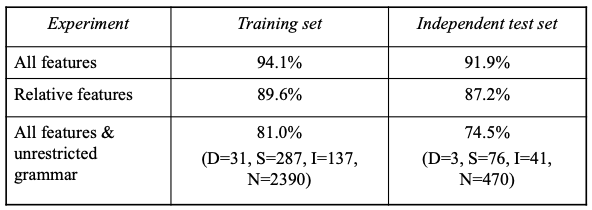

ASL Results

Desk-based

348 training and 94 testing sentences without contexts

Accuracy: $\mathrm{Acc} = \frac{N - D - S - I}{N}$

- $N$: #Words

- $D$: #Deletions

- $S$: #Substitutions

- $I$: #Insertions
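A small sketch of how such a word accuracy could be computed from a reference and a recognized sentence. It assumes the usual definition, accuracy = (words − deletions − substitutions − insertions) / words, with the error counts taken from a minimum edit-distance alignment; the function names and the toy sentence are hypothetical.

```python
from typing import Sequence, Tuple


def count_errors(ref: Sequence[str], hyp: Sequence[str]) -> Tuple[int, int, int]:
    """Return (deletions, substitutions, insertions) of a minimum-cost alignment."""
    # table[i][j] = (cost, D, S, I) for aligning ref[:i] with hyp[:j]
    table = [[(0, 0, 0, 0)] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(1, len(ref) + 1):
        table[i][0] = (i, i, 0, 0)          # only deletions
    for j in range(1, len(hyp) + 1):
        table[0][j] = (j, 0, 0, j)          # only insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            c, d, s, ins = table[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                candidates = [(c, d, s, ins)]            # match
            else:
                candidates = [(c + 1, d, s + 1, ins)]    # substitution
            c, d, s, ins = table[i - 1][j]
            candidates.append((c + 1, d + 1, s, ins))    # deletion
            c, d, s, ins = table[i][j - 1]
            candidates.append((c + 1, d, s, ins + 1))    # insertion
            table[i][j] = min(candidates)
    _, d, s, ins = table[-1][-1]
    return d, s, ins


def word_accuracy(n_words: int, deletions: int, substitutions: int, insertions: int) -> float:
    """Accuracy = (N - D - S - I) / N."""
    if n_words <= 0:
        raise ValueError("n_words must be positive")
    return (n_words - deletions - substitutions - insertions) / n_words


reference = ["a", "b", "c", "d"]
recognized = ["a", "x", "c"]            # one substitution, one deletion
d, s, i = count_errors(reference, recognized)
accuracy = word_accuracy(len(reference), d, s, i)   # 0.5
```

Because insertions are counted as errors, this measure can become negative when the recognizer outputs many spurious words.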

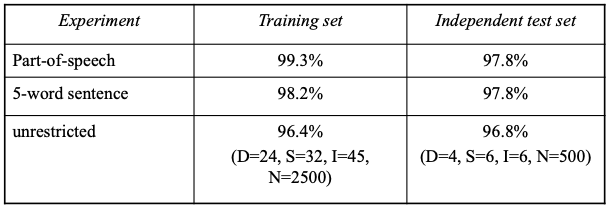

Wearable-based

- 400 training sentences and 100 for testing

- Test 5-word sentences

- Results for the restricted and unrestricted conditions are similar!

Pointing Gesture Recognition

Pointing gestures

are used to specify objects and locations

can be necessary to resolve ambiguities in verbal statements

Definition: Pointing gesture = movement of the arm towards a pointing target

Tasks

- Detect occurrence of human pointing gestures in natural arm movements

- Extract the 3D pointing direction
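One simple way to make the second task concrete is to approximate the pointing direction by the line from the head to the hand. This head-hand line is an assumption for illustration only; the notes do not state which estimate is used, and the function names and coordinates below are hypothetical.

```python
from math import acos, degrees, sqrt
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pointing_direction(origin: Sequence[float], tip: Sequence[float]) -> Vec3:
    """Unit vector from `origin` (e.g. head) to `tip` (e.g. hand)."""
    diff = [t - o for t, o in zip(tip, origin)]
    norm = sqrt(sum(c * c for c in diff))
    if norm == 0.0:
        raise ValueError("origin and tip must be different points")
    return (diff[0] / norm, diff[1] / norm, diff[2] / norm)


def angular_error_deg(head: Sequence[float],
                      hand: Sequence[float],
                      target: Sequence[float]) -> float:
    """Angle in degrees between the head-hand ray and the head-target ray."""
    ray = pointing_direction(head, hand)
    to_target = pointing_direction(head, target)
    cosine = sum(a * b for a, b in zip(ray, to_target))
    cosine = max(-1.0, min(1.0, cosine))   # guard against rounding outside [-1, 1]
    return degrees(acos(cosine))


# Made-up 3D positions in metres.
head = (0.0, 0.0, 1.7)
hand = (0.5, 0.0, 1.4)
lamp = (2.0, 0.0, 0.5)
error = angular_error_deg(head, hand, lamp)
```

Given several candidate targets, the one with the smallest angular error would be selected as the object being pointed at.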