Sequence to Sequence

Language Modeling

A language model is a model that computes the probability of a sequence

Softmax layer

After a linear mapping of the hidden layer, a “score” vector is obtained

The softmax function normalizes the scores to get probabilities

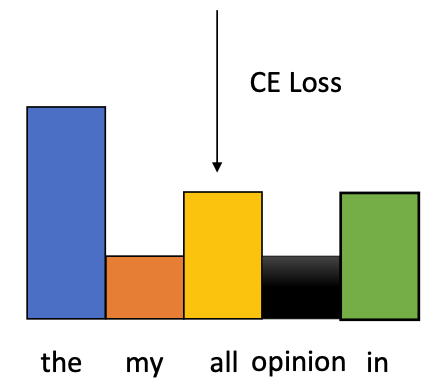
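The normalization step can be sketched in a few lines of plain Python. This is a minimal illustration, not the course implementation: the function name `softmax` and the example scores are assumptions, and subtracting the maximum score is a common numerical-stability trick that does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical score vector for a 3-item vocabulary.
probs = softmax([2.0, 1.0, 0.1])
```

Larger scores map to larger probabilities, and the ordering of the scores is preserved.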

Cross-Entropy Loss

Use a one-hot vector as the label to train the model

Cross-entropy loss: measures the difference between the predicted probability distribution and the label

When 𝑌 is a one-hot vector:

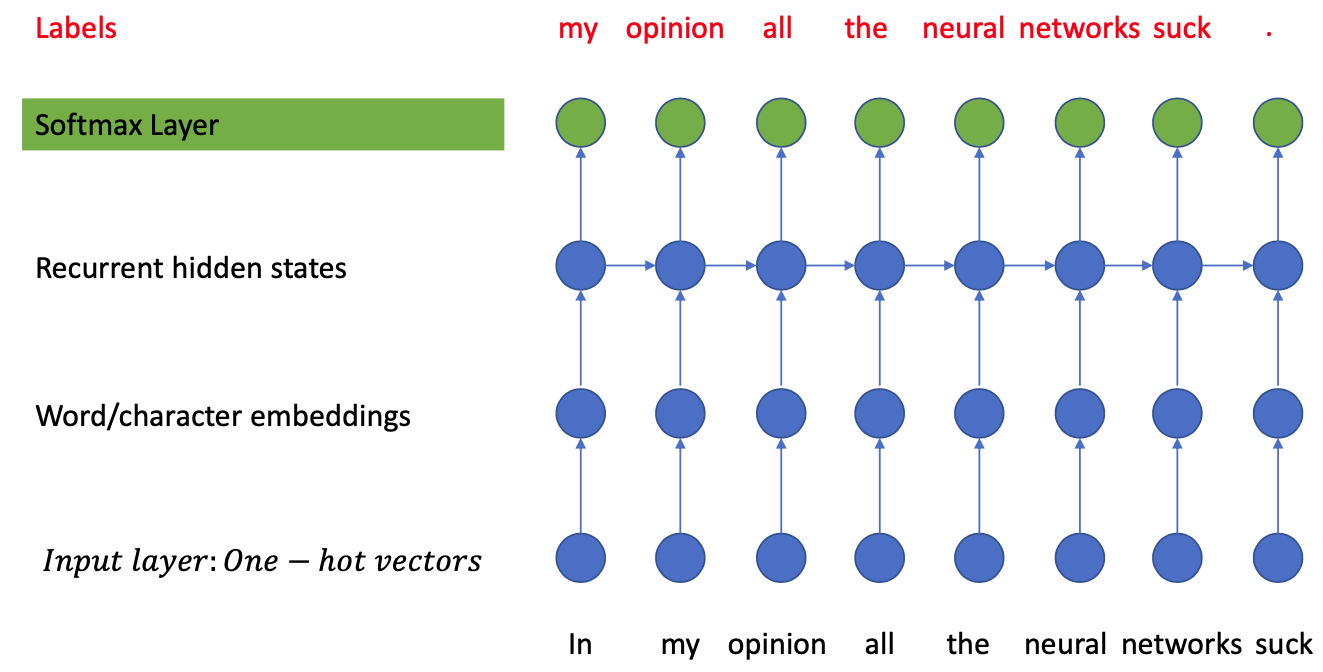

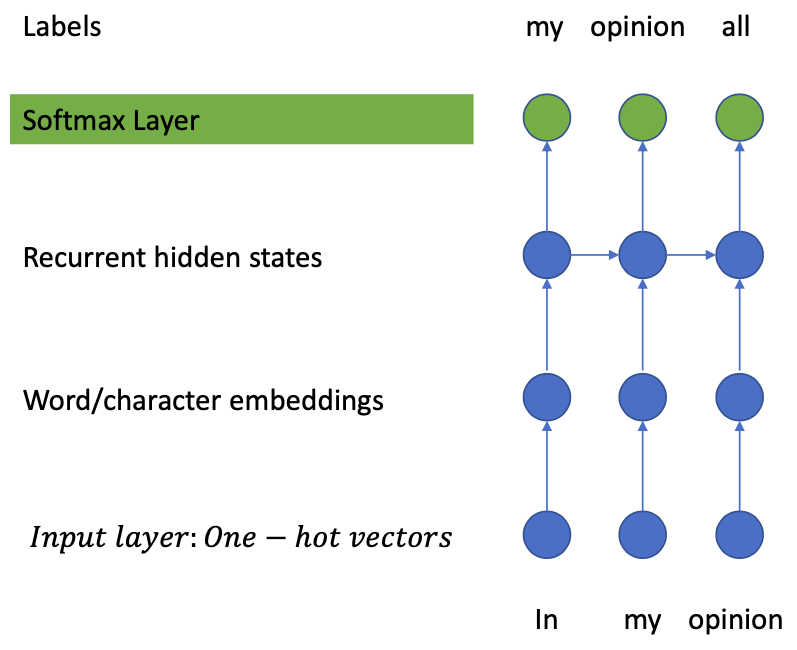

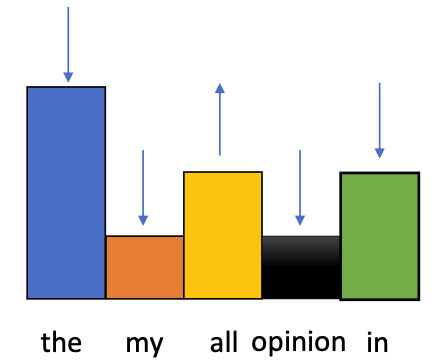
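As a small sketch of why the one-hot case is simpler: the general cross-entropy sums over the whole vocabulary, but with a one-hot label only the term at the label position survives. The function names below are illustrative assumptions.

```python
import math

def cross_entropy_full(probs, target):
    """General form: -sum_i target_i * log(probs_i)."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)

def cross_entropy_one_hot(probs, label_index):
    """One-hot shortcut: only the label position contributes."""
    return -math.log(probs[label_index])

# Hypothetical prediction over a 3-item vocabulary; the label is item 0.
probs = [0.7, 0.2, 0.1]
loss_full = cross_entropy_full(probs, [1.0, 0.0, 0.0])
loss_short = cross_entropy_one_hot(probs, 0)
```

Both calls compute the same value, the negative log-probability assigned to the correct item.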

Training

Force the model to “fit” known text sequences (teacher forcing / memorization)

- Input: $x_1, x_2, \dots, x_{T-1}$

- Output: $x_2, x_3, \dots, x_T$ (the input shifted by one position)

In generation: the model uses its own output at time step $t$ as the input for time step $t+1$ (autoregressive, or sampling, mode)

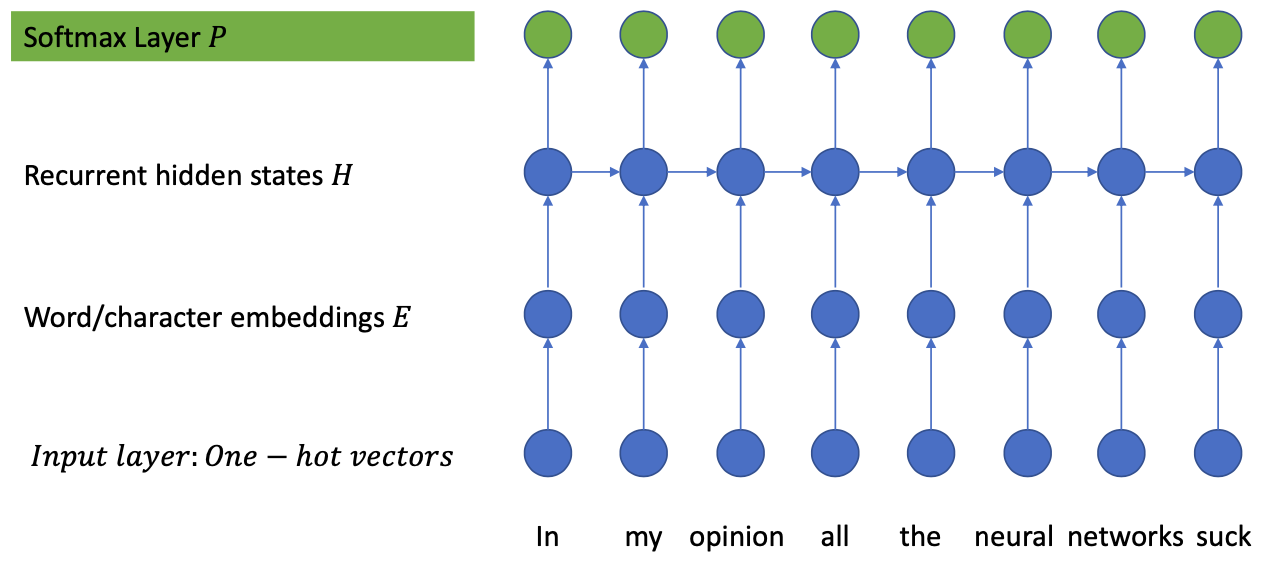

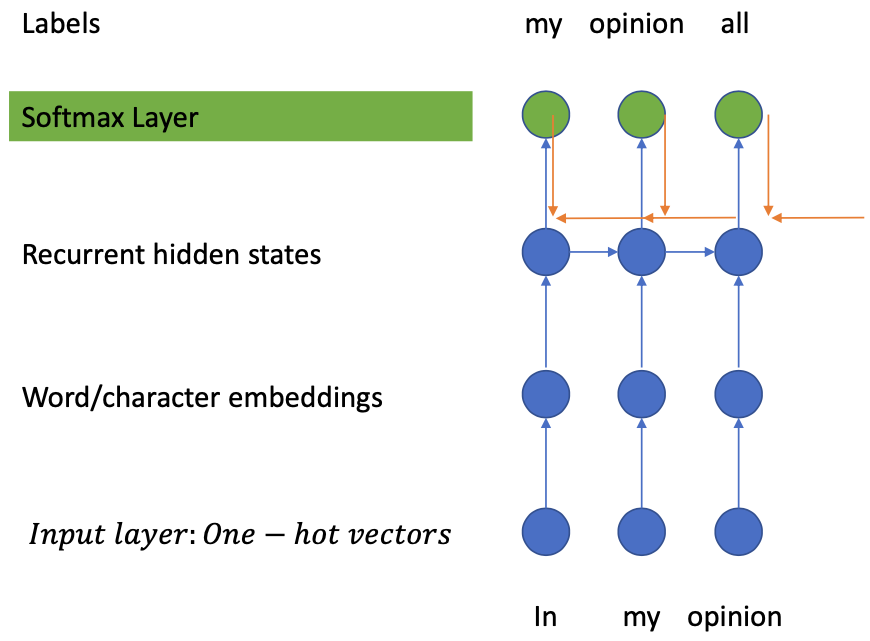

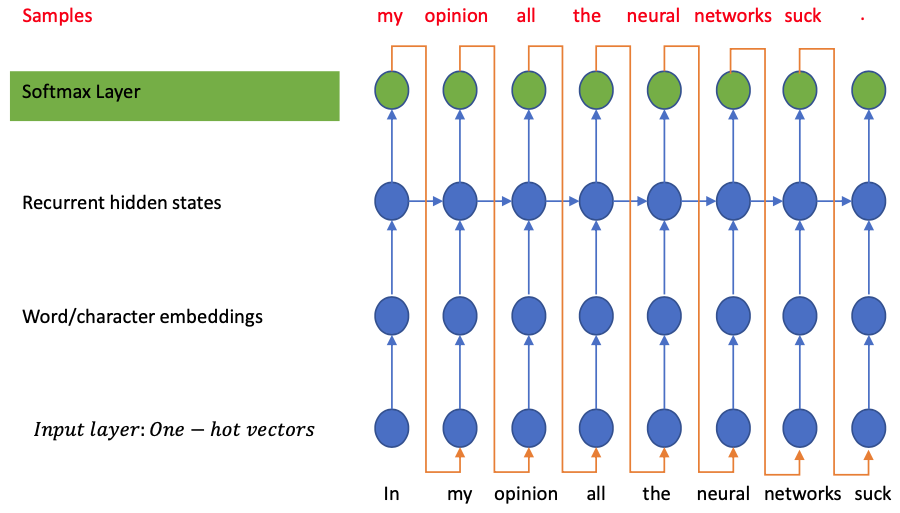

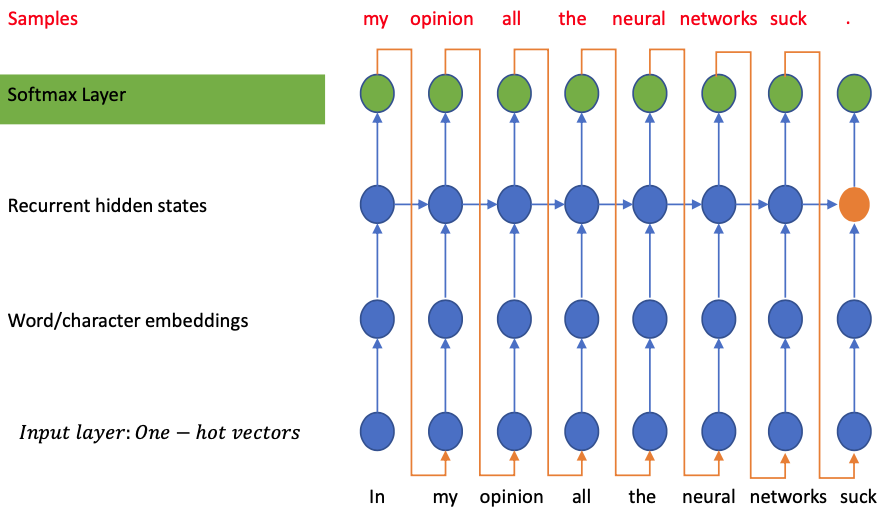
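A minimal sketch of how teacher-forcing training pairs can be built from a known sequence: the target is the input shifted by one position, so at every step the model sees the true previous token and is asked for the true next one. The helper name `teacher_forcing_pairs` and the example string are assumptions for illustration.

```python
def teacher_forcing_pairs(tokens):
    """Split one sequence into (inputs, targets), where targets[t] is the token after inputs[t]."""
    return tokens[:-1], tokens[1:]

# Character-level example on a hypothetical training string.
inputs, targets = teacher_forcing_pairs(list("hello"))
for x, y in zip(inputs, targets):
    print(f"input {x!r} -> target {y!r}")
```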

RNN Language Modeling

Basic step-wise operation

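Input: $x_t$ (the token at time step $t$, as a one-hot vector)

Label: $y_t$ (the next token, as a one-hot vector)

Input to word embeddings: $E x_t$

Hidden layer: $h_t = f(E x_t, h_{t-1})$

Output: $o_t = W h_t + b$

Softmax: $p_t = \mathrm{softmax}(o_t)$

Loss: Cross Entropy $L_t = -\sum_i y_{t,i} \log p_{t,i}$

($y_{t,i}$: the $i$-th element of $y_t$)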

Output layer

Because of the softmax, the output layer is a distribution over the vocabulary (The probability of each item given the context)

“Teacher-Forcing” method

- We do not really tell the model to generate “all”

- But applying the cross-entropy loss forces the distribution to favour “all”

Backpropagation in the model

Gradient of the loss function

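The label $y$ in the char language model is a one-hot vector

The gradient of the loss with respect to the output scores $o$ (with $p = \mathrm{softmax}(o)$) is positive everywhere except in the label position, where it is negative

Assume the label is in the $k$-th position.

In non-label position ($i \neq k$): $\partial L / \partial o_i = p_i$

In label position: $\partial L / \partial o_k = p_k - 1$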
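The sign pattern described above can be checked numerically. The sketch below assumes the standard softmax plus cross-entropy setup, where the gradient with respect to the scores is the predicted distribution with 1 subtracted at the label position; the function names and example scores are illustrative.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def grad_wrt_scores(scores, label_index):
    """Gradient of -log softmax(scores)[label_index] with respect to each score."""
    grad = softmax(scores)          # non-label positions: p_i (always positive)
    grad[label_index] -= 1.0        # label position: p_k - 1 (always negative)
    return grad

# Hypothetical scores with the label in position 1.
grad = grad_wrt_scores([2.0, 1.0, 0.1], 1)
```

A gradient-descent step therefore lowers the scores of all wrong items and raises the score of the label.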

Gradient at the hidden layer:

- The hidden layer receives two sources of gradients:

  - Coming from the loss of the current state

  - Coming from the gradient carried over from the future

- Similarly, the memory cell:

  - Receives the gradient from the hidden layer

  - Sums up that gradient with the gradient coming from the future

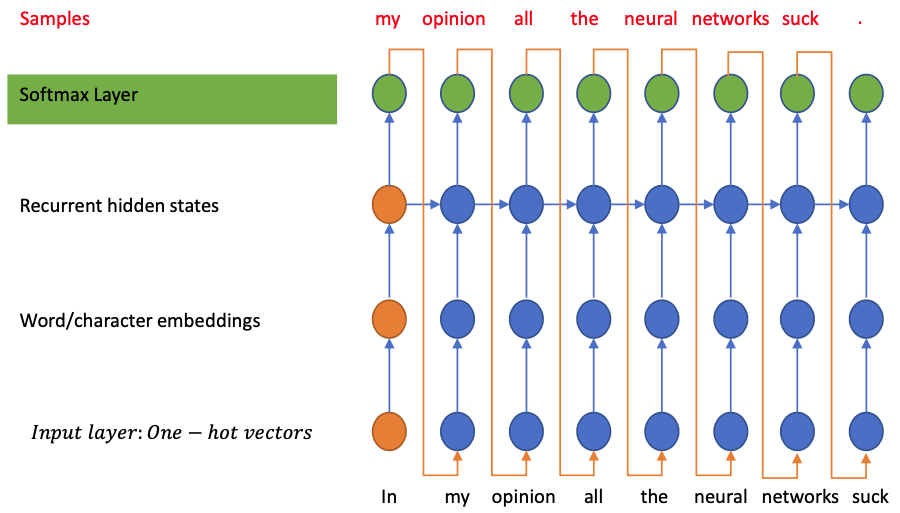
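The "two sources of gradients" rule can be sketched with a deliberately tiny recurrent model. The code below is an assumption-laden toy, not the LSTM from the lecture: it uses a scalar hidden state with $h_t = \tanh(w h_{t-1} + u x_t)$ and a squared-error loss at every step, so that the backward pass fits in a few lines.

```python
import math

def forward(w, u, xs, ys):
    """Run the scalar RNN and return all hidden states plus the summed loss."""
    h = 0.0
    hs = [h]                                  # hs[t] is the state before step t
    loss = 0.0
    for x, y in zip(xs, ys):
        h = math.tanh(w * h + u * x)
        hs.append(h)
        loss += 0.5 * (h - y) ** 2
    return hs, loss

def backward(w, u, xs, ys, hs):
    """Back-propagation through time for the scalar RNN above."""
    dw = du = 0.0
    dh_future = 0.0                           # nothing comes from beyond the last step
    for t in reversed(range(len(xs))):
        h_prev, h = hs[t], hs[t + 1]
        dh = (h - ys[t]) + dh_future          # current loss + gradient from the future
        da = dh * (1.0 - h * h)               # back through tanh
        dw += da * h_prev
        du += da * xs[t]
        dh_future = da * w                    # carried back to the previous step
    return dw, du

# Hypothetical parameters and data.
w, u = 0.7, -0.4
xs, ys = [1.0, 0.5, -1.0], [0.2, -0.1, 0.3]
hs, loss = forward(w, u, xs, ys)
dw, du = backward(w, u, xs, ys, hs)
```

The line computing `dh` is the point of the example: each hidden state adds the gradient of its own loss to the gradient handed back from the next time step.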

Generate a New Sequence

Start from a memory state of the RNN (often initialized as zeros)

Run the network through a seed

Generate the probability distribution given the seed and the memory state

“Sample” a new token from the distribution

Use the new token as the seed and carry the new memory over to keep generating

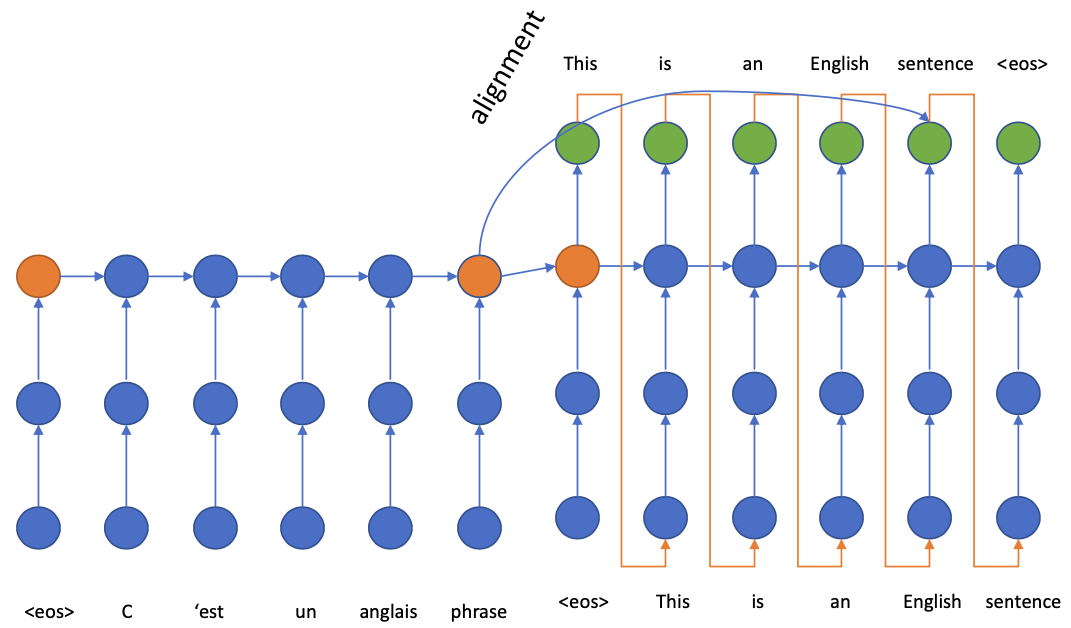
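The generation procedure above can be sketched as a loop. Everything model-specific here is a placeholder: `toy_step` is a hypothetical stand-in for one RNN step (it returns a distribution over the next token and a new state), and the integer `state` only imitates the memory being carried over. The sketch assumes a non-empty seed.

```python
import random

def toy_step(token, state):
    """Hypothetical one-step model: 'a' is followed by 'b', 'b' by 'c', 'c' by 'a'."""
    nxt = {"a": "b", "b": "c", "c": "a"}[token]
    probs = {t: (1.0 if t == nxt else 0.0) for t in "abc"}
    return probs, state + 1

def generate(step, seed_tokens, state, n_new, rng):
    """Run the seed through the model, then repeatedly sample and feed the sample back in."""
    probs = None
    for tok in seed_tokens:                   # run the network through the seed
        probs, state = step(tok, state)
    generated = []
    for _ in range(n_new):
        vocab = list(probs)
        tok = rng.choices(vocab, weights=[probs[v] for v in vocab])[0]  # sample a token
        generated.append(tok)
        probs, state = step(tok, state)       # the sample becomes the next input
    return generated

sample = generate(toy_step, "a", 0, 4, random.Random(0))
```

With a real model, `step` would run the embedding, the recurrent cell and the softmax, and `state` would be the hidden state and memory cell.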

Sequence-to-Sequence Models

💡 Main idea: from $P(Y)$ to $P(Y \mid X)$ with $X$ being a context

Controlling Generation with RNN

Hints:

The generated sequence depends on:

- The first input(s)

- The first recurrent hidden state

The final hidden state of the network after unrolling over a sequence contains compressed information about that sequence

Sequence-to-Sequence problem

Given: sequence of variable length (source sequence)

Task: generate a new sequence that has the same content

Training:

Given the dataset containing pairs of parallel sentences

Training objective

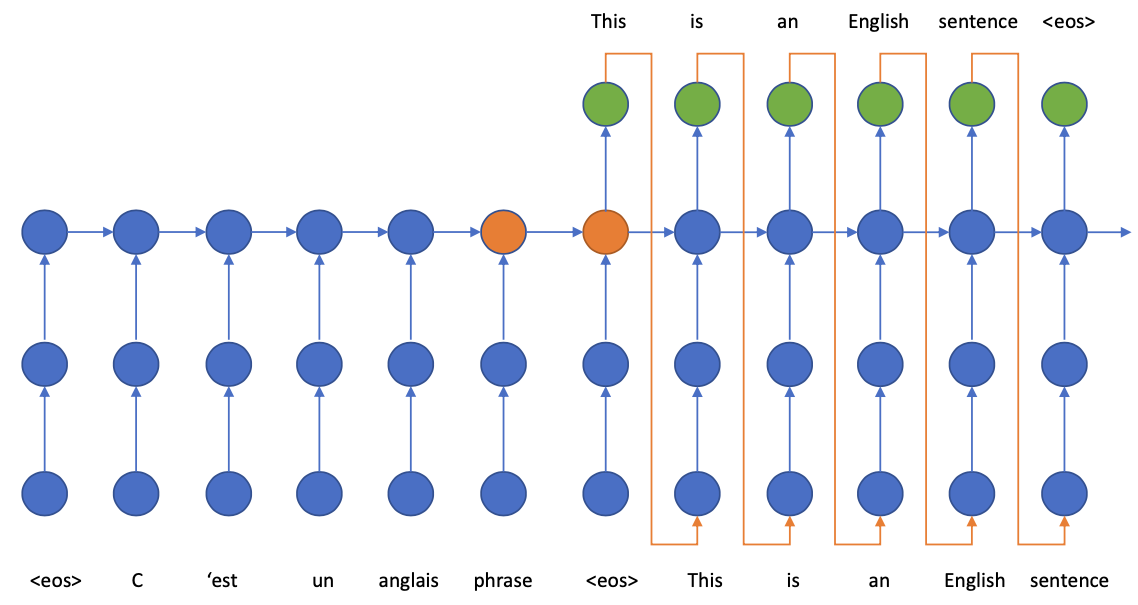

Encoder-Decoder model

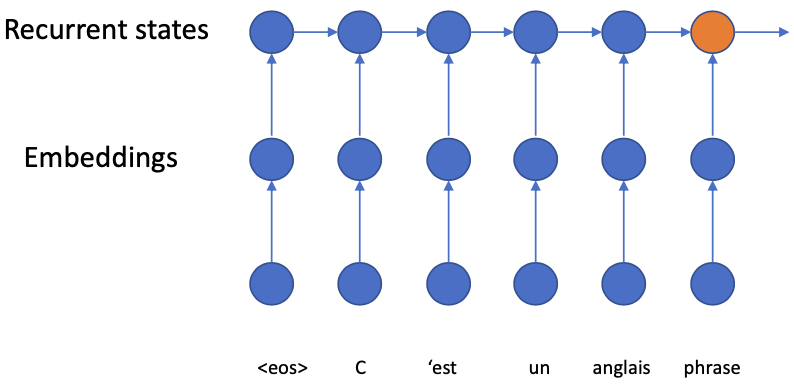

Encoder

Transforms the input into neural representation

Input

- Discrete variables: require an embedding to be “compatible” with neural networks

- Continuous variables: can be “raw features” of the input

  - Speech signals

  - Image pixels

The encoder represents the $X$ part of $P(Y \mid X)$

The generative chain does not include any generation from the encoder

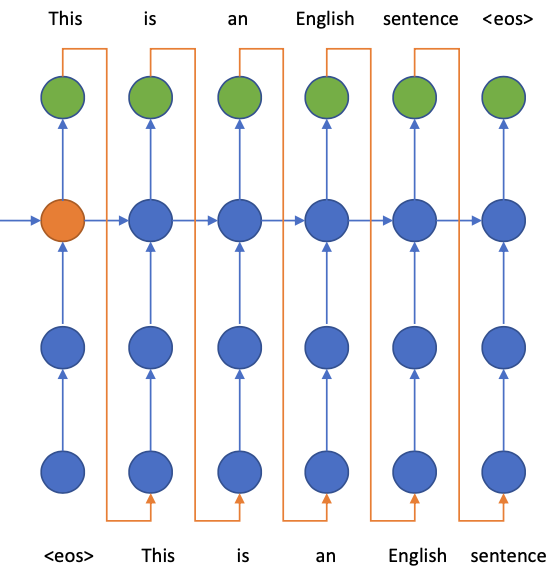

Decoder

- The encoder gives the decoder a representation of the source input

- The decoder would try to “decode” the information from that representation

- Key idea: the encoder representation is the initial hidden state of the decoder network

- The operation is identical to the character-based language model

- Back-propagation through time (BPTT) provides the gradient w.r.t. any hidden state

  - For the decoder: BPTT is identical to the language model

  - For the encoder: at each step the encoder hidden state has only one source of gradient, the one carried over from the future, because the encoder has no loss of its own
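The wiring between the two networks can be sketched with a toy scalar RNN. This is only an illustration of the idea that the decoder starts from the encoder's final state: the cell `rnn_step`, its fixed weights, and the use of the hidden state itself as the "output" are all simplifying assumptions, with no embedding or softmax.

```python
import math

def rnn_step(h, x, w=0.5, u=1.0):
    """Toy recurrent cell with fixed, hypothetical weights."""
    return math.tanh(w * h + u * x)

def encode(source):
    """Read the whole source sequence; only the final state is kept."""
    h = 0.0
    for x in source:
        h = rnn_step(h, x)
    return h

def decode(h0, first_input, n_steps):
    """Start from the encoder state and feed each output back in as the next input."""
    h, x = h0, first_input
    outputs = []
    for _ in range(n_steps):
        h = rnn_step(h, x)
        outputs.append(h)
        x = h
    return outputs

# Two hypothetical source sequences with the same items in a different order.
out_a = decode(encode([1.0, -1.0]), 0.0, 3)
out_b = decode(encode([-1.0, 1.0]), 0.0, 3)
```

The decoder receives the same first input in both cases, so any difference between `out_a` and `out_b` comes entirely from the encoder state it was initialized with.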

Problem with Encoder-Decoder Model

The model works well for translating short sentences, but quality drops sharply on long sentences (more than 10 words) 😢

🔴 Main problem:

- Long-range dependency

- Gradient starving

Funny trick as a solution: reverse the source sentence so that its first words end up closer to the first words of the target. (pretty “hacky” 😈)

Observation for solving this problem

Each word in the source sentence can be aligned with some words in the target sentence. (“alignment” in Machine Translation)

We can try to establish a connection between the aligned words.

Seq2Seq with Attention

As mentioned, we want to find the alignment between decoder and encoder. However, our decoder only looks at the final, compressed encoder state to find the information.

Therefore we have to modify the decoder to do better! 💪

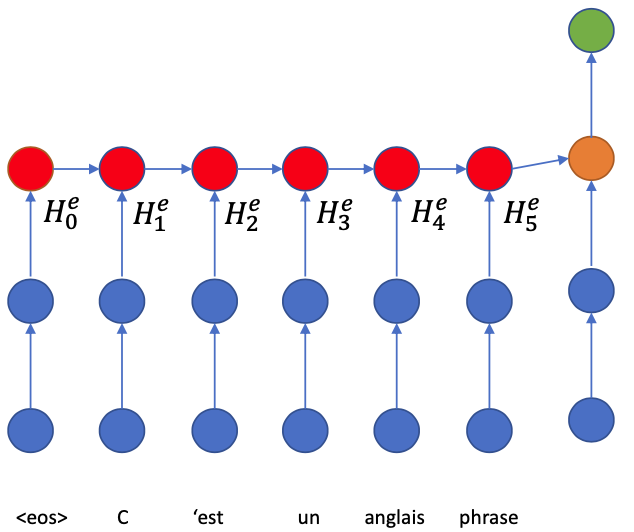

We will use the superscripts $e$ and $d$ to distinguish Encoder and Decoder.

E.g.:

- $h^e_i$: $i$-th hidden state of the Encoder

- $h^d_j$: $j$-th hidden state of the Decoder

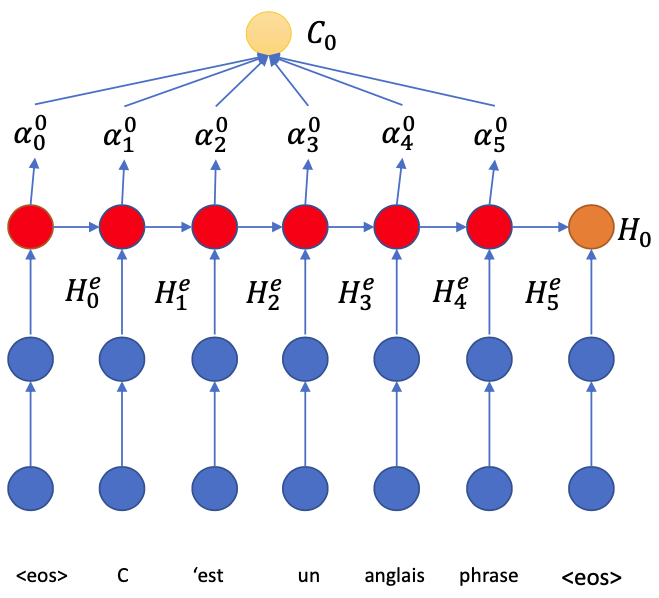

- Run the encoder LSTM through the input sentence (read the sentence and encode it into a sequence of states $h^e_1, \dots, h^e_n$)

- The LSTM operation lets us make some assumptions about each state $h^e_i$. The state contains information about:

  - the word $x_i$ (because of the input gate)

  - the surrounding words (because of the memory)

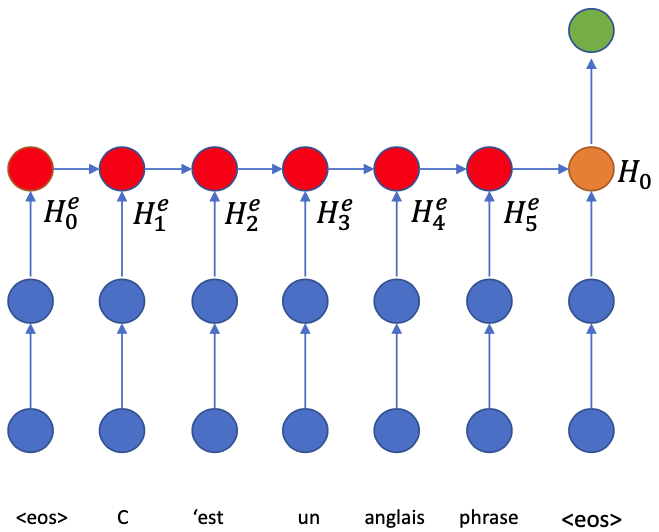

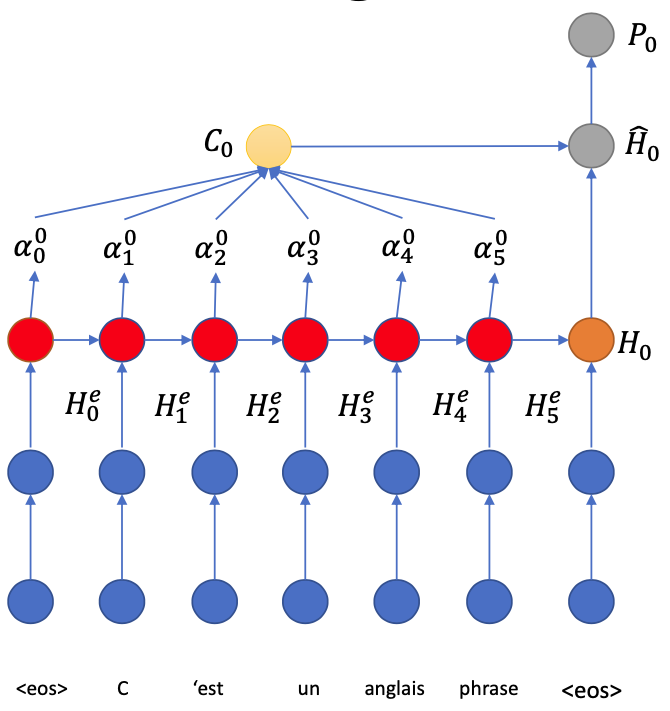

Now we start generating the translation with the decoder.

The LSTM consumes the EOS token (always) and the hidden states copied over from the encoder to get the first decoder hidden state $h^d_1$. From $h^d_1$ we have to generate the first word, and we need to look back to the encoder.

Here we ask the question:

“Which word is responsible to generate the first word?”

However, it’s not easy to answer this question

- First we don’t know 😭

- Second there might be more than one relevant word (like when we translate phrases or compound words) 🤪

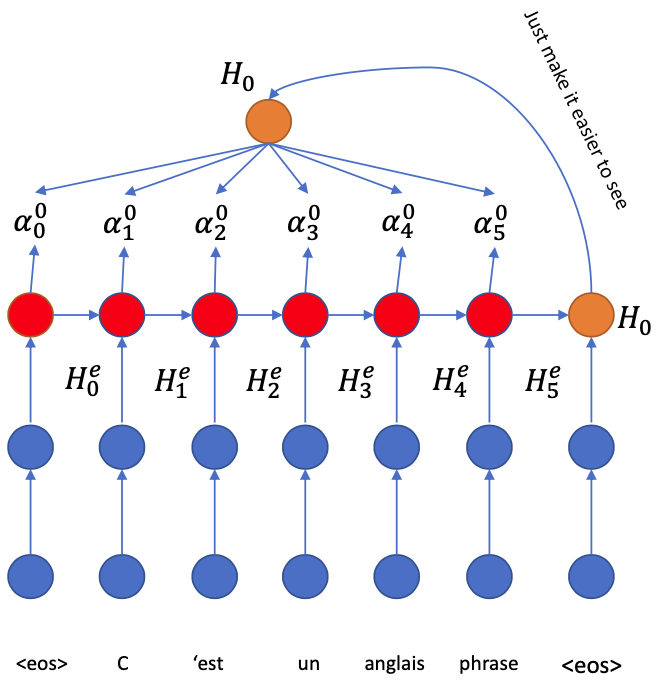

The best way to find out is: to check all of the words! 💪

The first decoder state $h^d_1$ has to connect to all states $h^e_i$ on the encoder side for querying

Each “connection” will return a score $\alpha_{1i}$ indicating how relevant $h^e_i$ is for generating the translation from $h^d_1$

Generate $\alpha_{1i}$:

- A feed-forward neural network with nonlinearity scores each pair $(h^d_1, h^e_i)$

- Use softmax over these scores to get probabilities

The higher $\alpha_{1i}$ is, the more relevant the state $h^e_i$ is

After $h^d_1$ asks “everyone” in the encoder, it needs to sum up the information: $c_1 = \sum_i \alpha_{1i} h^e_i$

($c_1$ is the summarization of the information in the encoder that is the most relevant to $h^d_1$ to generate the first word in the decoder)

- Now we can answer the question “Which word is responsible to generate the first word?”

The answer is: the words with the highest attention coefficients

- Combine the decoder state with the context vector

- Generate the softmax output from the combined representation

- Go to the next step

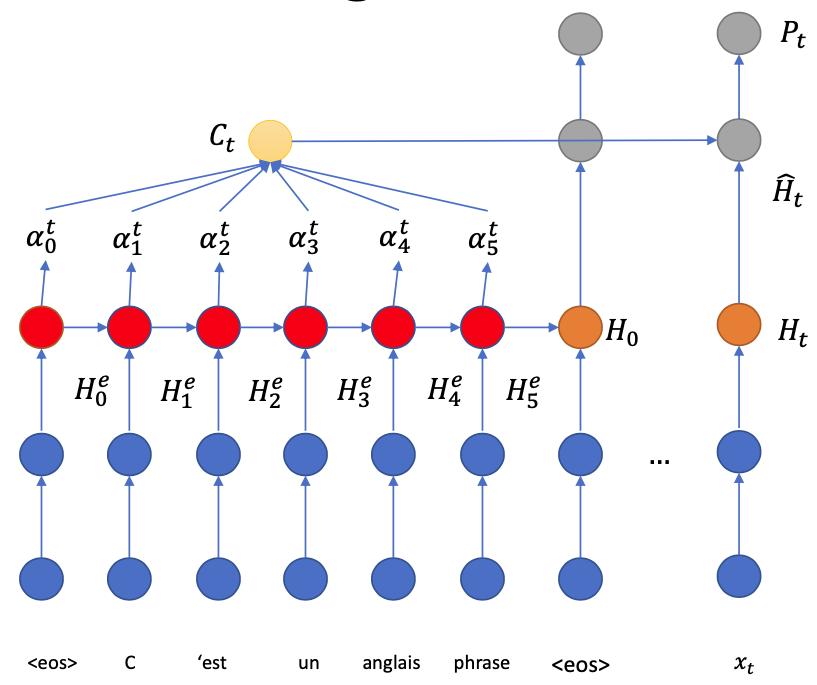

In general, at time step $j$:

The decoder LSTM generates the hidden state $h^d_j$ from the memory

$h^d_j$ “pays attention” to the encoder states to know which source information is relevant

Generate the attention weight $\alpha_{ji}$ from each encoder state $h^e_i$

Weighted sum for the “context vector”: $c_j = \sum_i \alpha_{ji} h^e_i$

Combine $h^d_j$ and $c_j$, then generate the output distribution from the result

- Use a feed-forward neural network

- Or use an RNN
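One attention step (score every encoder state, normalize with softmax, take the weighted sum) can be sketched as follows. Note the simplification: the notes describe a feed-forward network with nonlinearity as the scorer, while this sketch swaps in a plain dot product so that no trained parameters are needed. The `score` argument is a hypothetical hook where a learned scorer could be plugged in, and all vectors are made-up examples.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Stand-in scorer (assumption): dot product instead of a feed-forward network."""
    return sum(x * y for x, y in zip(a, b))

def attend(dec_state, enc_states, score=dot):
    """Return (attention weights, context vector) for one decoder state."""
    scores = [score(dec_state, h) for h in enc_states]     # query every encoder state
    weights = softmax(scores)                              # relevance as probabilities
    dim = len(enc_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, enc_states)) for d in range(dim)]
    return weights, context

# Hypothetical encoder states for a 3-word source and one decoder state.
enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], enc_states)
```

The weights show which source positions the decoder state is most similar to, and the context vector is dominated by those positions.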

Training

- Very similar to basic Encoder-Decoder

- Since the scoring neural network is continuous, we can use backpropagation

- No longer any gradient starving on the encoder side

Practical Suggestions for Training

Loss is too high and generation is garbage

- Try to match your implementation with the LSTM equations

- Check the gradients by gradcheck

- Note that gradcheck can still report some weights that fail the relative error check; this is acceptable if only a few (1 or 2% of the weights) fail
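For readers without a ready-made gradcheck, the idea can be sketched with central finite differences. This is a generic illustration, not the gradcheck routine the notes refer to: the function names, the step size `eps`, and the toy loss are all assumptions.

```python
def numerical_grad(f, params, eps=1e-5):
    """Estimate df/dparams[i] with central differences, one parameter at a time."""
    grads = []
    for i in range(len(params)):
        old = params[i]
        params[i] = old + eps
        plus = f(params)
        params[i] = old - eps
        minus = f(params)
        params[i] = old                       # restore the parameter
        grads.append((plus - minus) / (2 * eps))
    return grads

def relative_errors(analytic, numeric, tiny=1e-12):
    """Relative error per parameter; small values mean the two gradients agree."""
    return [abs(a - n) / max(abs(a) + abs(n), tiny) for a, n in zip(analytic, numeric)]

# Toy loss with a known gradient: f(p) = p0^2 + 3*p0*p1.
def toy_loss(p):
    return p[0] ** 2 + 3 * p[0] * p[1]

params = [1.0, 2.0]
analytic = [2 * params[0] + 3 * params[1], 3 * params[0]]
errors = relative_errors(analytic, numerical_grad(toy_loss, params))
```

In a real check, `analytic` would come from the backward pass of the model, and the fraction of parameters whose relative error exceeds a chosen threshold is what the note above refers to.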

The loss is decreasing and the generated text looks roughly correct but is not readable

- In the sampling process, you can encourage the model to generate with higher certainty by using “argmax” (taking the char with highest probability)

- But “always using argmax” will look terrible

- A mixture of argmax and sampling can be used to ensure spelling correctness and exploration
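One possible way to mix the two strategies is to choose argmax with some probability and sample otherwise. The notes do not say how the mixture should be done, so this scheme and the parameter name `greedy_prob` are assumptions for illustration.

```python
import random

def pick_token(probs, greedy_prob, rng):
    """With probability greedy_prob take the most likely token, otherwise sample."""
    tokens = list(probs)
    if rng.random() < greedy_prob:
        return max(tokens, key=lambda t: probs[t])                       # argmax
    return rng.choices(tokens, weights=[probs[t] for t in tokens])[0]    # sampling

# Hypothetical next-character distribution.
probs = {"a": 0.1, "b": 0.7, "c": 0.2}
rng = random.Random(0)
picks = [pick_token(probs, 0.5, rng) for _ in range(10)]
```

Setting `greedy_prob` to 1.0 gives pure argmax and 0.0 gives pure sampling; values in between trade spelling correctness against exploration.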

A large network is normally needed for character-based models