👍 Activation Functions

Activation functions should be

- non-linear

- differentiable (since the network is trained with backpropagation)

Q: Why can’t the mapping between layers be linear?

A: A composition of linear functions is still linear, so the whole network collapses to a single linear layer (i.e. linear regression).
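A quick numerical check of this claim, as a minimal sketch with arbitrary random weights (the layer sizes and function names are illustrative only): stacking two affine layers with no activation in between gives exactly the same map as one affine layer with $W = W_2 W_1$ and $b = W_2 b_1 + b_2$.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two "layers" with no activation function: h = W1 x + b1, y = W2 h + b2
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)

def two_linear_layers(x):
    return W2 @ (W1 @ x + b1) + b2

# The same map collapsed into a single linear layer
W, b = W2 @ W1, W2 @ b1 + b2

def one_linear_layer(x):
    return W @ x + b

x = rng.normal(size=3)
print(np.allclose(two_linear_layers(x), one_linear_layer(x)))
```

No matter how many linear layers are stacked, the same collapse applies, which is why a non-linearity is needed between them.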

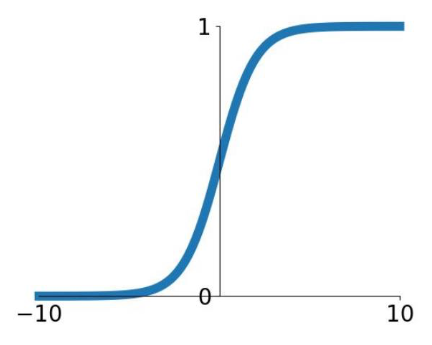

Sigmoid

Squashes numbers to the range $(0, 1)$: $\sigma(x) = \frac{1}{1 + e^{-x}}$

✅ Historically popular since it has a nice interpretation as the saturating “firing rate” of a neuron

⛔️ Problems

- Vanishing gradients: the gradient is almost zero at either tail, where the output saturates near $0$ or $1$

- Sigmoid outputs are not zero-centered (important for initialization)

- $\exp()$ is a bit compute-expensive

Derivative: $\sigma'(x) = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)$

Python implementation

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# Derivative, written in terms of the output y = sigmoid(x)
def dsigmoid(y):
    return y * (1 - y)
```
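To see the vanishing-gradient problem numerically, here is a small sketch (the sample points are arbitrary) that evaluates the derivative at a few inputs and checks the `y * (1 - y)` formula against a central finite difference. The derivative peaks at $0.25$ for $x = 0$ and is already below $10^{-4}$ at $|x| = 10$.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def dsigmoid(y):
    # derivative expressed via the output y = sigmoid(x)
    return y * (1 - y)

xs = np.array([-10.0, -5.0, 0.0, 5.0, 10.0])
grads = dsigmoid(sigmoid(xs))
for x, g in zip(xs, grads):
    print(f"x = {x:6.1f}  sigmoid'(x) = {g:.2e}")

# Sanity check of the formula against a central finite difference
h = 1e-5
numeric = (sigmoid(xs + h) - sigmoid(xs - h)) / (2 * h)
print(np.allclose(grads, numeric, atol=1e-8))
```

Since backpropagation multiplies these factors layer by layer, a chain of saturated sigmoids shrinks the gradient very quickly.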

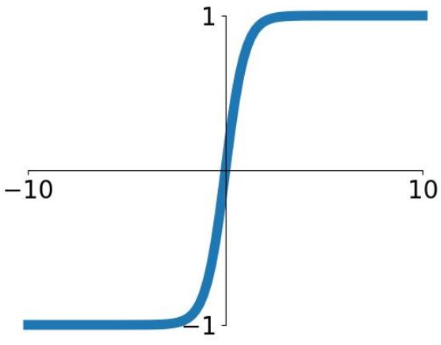

Tanh

Squashes numbers to the range $(-1, 1)$: $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

✅ Zero-centered (nice) 👏

⛔️ Vanishing gradients: still kills gradients when saturated

Derivative: $\tanh'(x) = 1 - \tanh^2(x)$

```python
# Derivative, written in terms of the output y = np.tanh(x)
def dtanh(y):
    return 1 - y * y
```
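A small sketch comparing tanh with sigmoid on a symmetric input grid (the grid itself is an arbitrary choice): tanh is an odd function, so its outputs average to about $0$ while sigmoid's average to about $0.5$. Its peak gradient is $1$ instead of $0.25$, but it still vanishes once the unit saturates.

```python
import numpy as np

def dtanh(y):
    # derivative expressed via the output y = np.tanh(x)
    return 1 - y * y

xs = np.linspace(-3, 3, 601)  # symmetric grid around 0

# Zero-centered: tanh outputs average to ~0, sigmoid outputs (all positive) to ~0.5
sigmoid_out = 1 / (1 + np.exp(-xs))
print(np.tanh(xs).mean(), sigmoid_out.mean())

# Gradient is 1 at x = 0 but nearly 0 in the saturated region
print(dtanh(np.tanh(0.0)), dtanh(np.tanh(10.0)))
```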

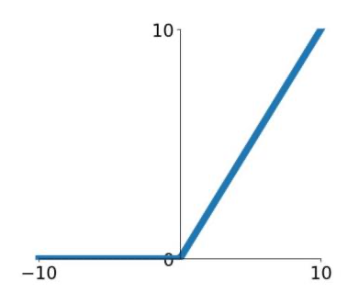

Rectified Linear Unit (ReLU): $f(x) = \max(0, x)$

✅ Advantages

- Does not saturate (in the positive region $x > 0$)

- Very computationally efficient

- Converges much faster than sigmoid/tanh in practice

⛔️ Problems

- Not zero-centered output

- No gradient for $x < 0$ (dying ReLU)

Python implementation

```python
import numpy as np

def ReLU(x):
    return np.maximum(0, x)
```
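A minimal sketch of the dying-ReLU problem, assuming a single unit $z = w^T x + b$ and an upstream gradient of 1 per sample. The `dReLU` helper and the bias of $-100$ are hypothetical, the bias being an exaggerated value chosen to push every pre-activation below zero: once all inputs land in $x < 0$, no gradient reaches $w$, so the unit cannot recover.

```python
import numpy as np

def ReLU(x):
    return np.maximum(0, x)

def dReLU(x):
    # subgradient: 1 for x > 0, 0 otherwise (the value at exactly 0 is a convention)
    return (x > 0).astype(float)

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))  # a batch of inputs
w = rng.normal(size=3)

b_healthy = 0.0   # roughly half the pre-activations are positive
b_dead = -100.0   # hypothetical large negative bias: all pre-activations negative

for b in (b_healthy, b_dead):
    z = X @ w + b
    # gradient w.r.t. w through the ReLU, assuming upstream gradient 1, batch-averaged
    grad_w = (dReLU(z)[:, None] * X).mean(axis=0)
    print(f"bias = {b:7.1f}  active fraction = {dReLU(z).mean():.2f}  "
          f"|grad_w| = {np.linalg.norm(grad_w):.3f}")
```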

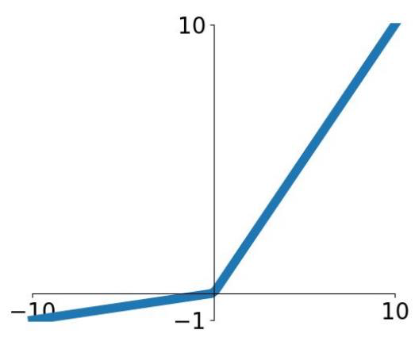

Leaky ReLU

Parametric Rectifier (PReLU): $f(x) = \max(\alpha x, x)$

- Also learn $\alpha$ (through backpropagation)

✅ Advantages

- Does not saturate

- Computationally efficient

- Converges much faster than sigmoid/tanh in practice!

- Will not “die” (the gradient is nonzero for $x < 0$)

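Python implementation

```python
import numpy as np

# Leaky ReLU with slope 0.1 for x < 0 (the np.maximum form assumes 0 < slope < 1)
def leaky_ReLU(x):
    return np.maximum(0.1 * x, x)
```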
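Since PReLU also learns its negative-side slope (called $\alpha$ here), here is a minimal sketch of its forward and backward pass for a single shared $\alpha$. The function names, the toy target, and the learning rate are all hypothetical. The gradient with respect to $\alpha$ is $x$ on the negative side and $0$ on the positive side.

```python
import numpy as np

def prelu(x, alpha):
    # f(x) = x for x > 0, alpha * x otherwise (np.where works for any alpha)
    return np.where(x > 0, x, alpha * x)

def prelu_backward(x, alpha, upstream):
    # df/dx: 1 for x > 0, alpha otherwise
    dx = upstream * np.where(x > 0, 1.0, alpha)
    # df/dalpha: 0 for x > 0, x otherwise; alpha is shared, so sum over elements
    dalpha = np.sum(upstream * np.where(x > 0, 0.0, x))
    return dx, dalpha

# Toy problem: recover alpha = 0.25 from data generated with that slope
rng = np.random.default_rng(0)
x = rng.normal(size=200)
target = prelu(x, 0.25)

alpha, lr = 0.01, 0.5  # start from a Leaky-ReLU-like slope
for _ in range(100):
    out = prelu(x, alpha)
    upstream = 2 * (out - target) / x.size  # gradient of the mean squared error
    _, dalpha = prelu_backward(x, alpha, upstream)
    alpha -= lr * dalpha

print(alpha)
```

After the loop `alpha` should sit very close to 0.25. `np.where` is used instead of `np.maximum` so the sketch stays correct even if the learned slope grows past 1.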

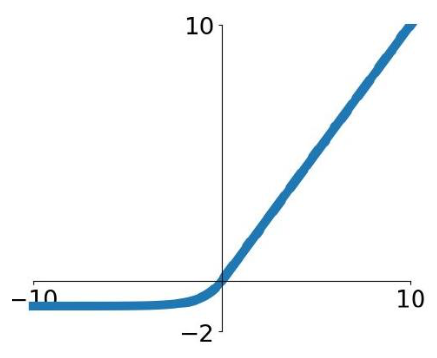

Exponential Linear Units (ELU)

✅ Advantages

- All benefits of ReLU

- Closer to zero-mean outputs

- Has a negative saturation regime (unlike Leaky ReLU), which adds some robustness to noise

⛔️ Problems

- Computation requires $\exp()$
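ELU is commonly defined as $f(x) = x$ for $x > 0$ and $f(x) = \alpha\,(e^{x} - 1)$ for $x \le 0$. A minimal NumPy sketch, assuming the common default $\alpha = 1$ (function names are illustrative), that compares its mean output on standard-normal inputs with ReLU's and shows the negative saturation towards $-\alpha$.

```python
import numpy as np

def elu(x, alpha=1.0):
    # x for x > 0, alpha * (exp(x) - 1) for x <= 0;
    # expm1 on min(x, 0) avoids overflow warnings from exp on large positive x
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0)))

def delu(x, alpha=1.0):
    # derivative: 1 for x > 0, alpha * exp(x) for x <= 0
    return np.where(x > 0, 1.0, alpha * np.exp(np.minimum(x, 0)))

rng = np.random.default_rng(0)
z = rng.normal(size=100_000)
relu_out = np.maximum(0, z)
print(relu_out.mean(), elu(z).mean())  # ELU's mean output is closer to zero
print(elu(np.array([-20.0])))          # saturates towards -alpha
```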

Maxout

- Generalizes ReLU and Leaky ReLU: $f(x) = \max(w_1^T x + b_1,\ w_2^T x + b_2)$

- ReLU is Maxout with $w_1 = 0$ and $b_1 = 0$

- ✅ Fixes the dying ReLU problem

- ⛔️ Doubles the number of parameters
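A minimal sketch of a Maxout layer with $k$ affine pieces per output unit, $f(x) = \max_i (w_i^T x + b_i)$. The shapes and names are assumptions for illustration. The checks show that ReLU (first piece $w_1 = 0$, $b_1 = 0$) and Leaky ReLU (first piece $0.1$ times the second) fall out as special cases, and that $k = 2$ doubles the parameter count.

```python
import numpy as np

def maxout(x, W, b):
    # W: (k, d_out, d_in), b: (k, d_out); W @ x has shape (k, d_out)
    # the output is the elementwise max over the k affine pieces
    return np.max(W @ x + b, axis=0)

rng = np.random.default_rng(0)
d_in, d_out = 3, 4
x = rng.normal(size=d_in)
W2, b2 = rng.normal(size=(d_out, d_in)), rng.normal(size=d_out)
z = W2 @ x + b2

# ReLU as Maxout: first piece has w1 = 0, b1 = 0
W_relu = np.stack([np.zeros_like(W2), W2])
b_relu = np.stack([np.zeros_like(b2), b2])
print(np.allclose(maxout(x, W_relu, b_relu), np.maximum(0, z)))

# Leaky ReLU as Maxout: first piece is 0.1 times the second
W_leaky = np.stack([0.1 * W2, W2])
b_leaky = np.stack([0.1 * b2, b2])
print(np.allclose(maxout(x, W_leaky, b_leaky), np.maximum(0.1 * z, z)))

# Parameter count: k = 2 pieces vs. a single affine layer
print(W_relu.size + b_relu.size, W2.size + b2.size)
```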

Softmax

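- Softmax: $s_i = \frac{e^{z_i}}{\sum_j e^{z_j}}$ is the probability that the feature vector $z$ belongs to class $i$

- Derivative: $\frac{\partial s_i}{\partial z_j} = s_i\,(\delta_{ij} - s_j)$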
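A minimal NumPy sketch of softmax and its Jacobian $J_{ij} = s_i(\delta_{ij} - s_j)$ (function names are illustrative). Subtracting $\max_j z_j$ before exponentiating is a standard stability trick that leaves the output unchanged; the finite-difference comparison is only a sanity check of the derivative formula.

```python
import numpy as np

def softmax(z):
    # subtracting max(z) does not change the result but avoids overflow in exp
    e = np.exp(z - np.max(z))
    return e / e.sum()

def softmax_jacobian(z):
    # J[i, j] = d s_i / d z_j = s_i * (delta_ij - s_j)
    s = softmax(z)
    return np.diag(s) - np.outer(s, s)

z = np.array([2.0, 1.0, 0.1])
s = softmax(z)
J = softmax_jacobian(z)
print(s, s.sum())

# Finite-difference check: column j holds the derivatives w.r.t. z_j
h = 1e-6
J_num = np.column_stack([
    (softmax(z + h * e_j) - softmax(z - h * e_j)) / (2 * h)
    for e_j in np.eye(len(z))
])
print(np.allclose(J, J_num, atol=1e-8))
```

Because the $s_i$ always sum to 1, each column of the Jacobian sums to 0.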

Advice in Practice

- Use ReLU

- Be careful with your learning rates / initialization

- Try out Leaky ReLU / ELU / Maxout

- Try out tanh but don’t expect much

- Don’t use sigmoid