Dropout

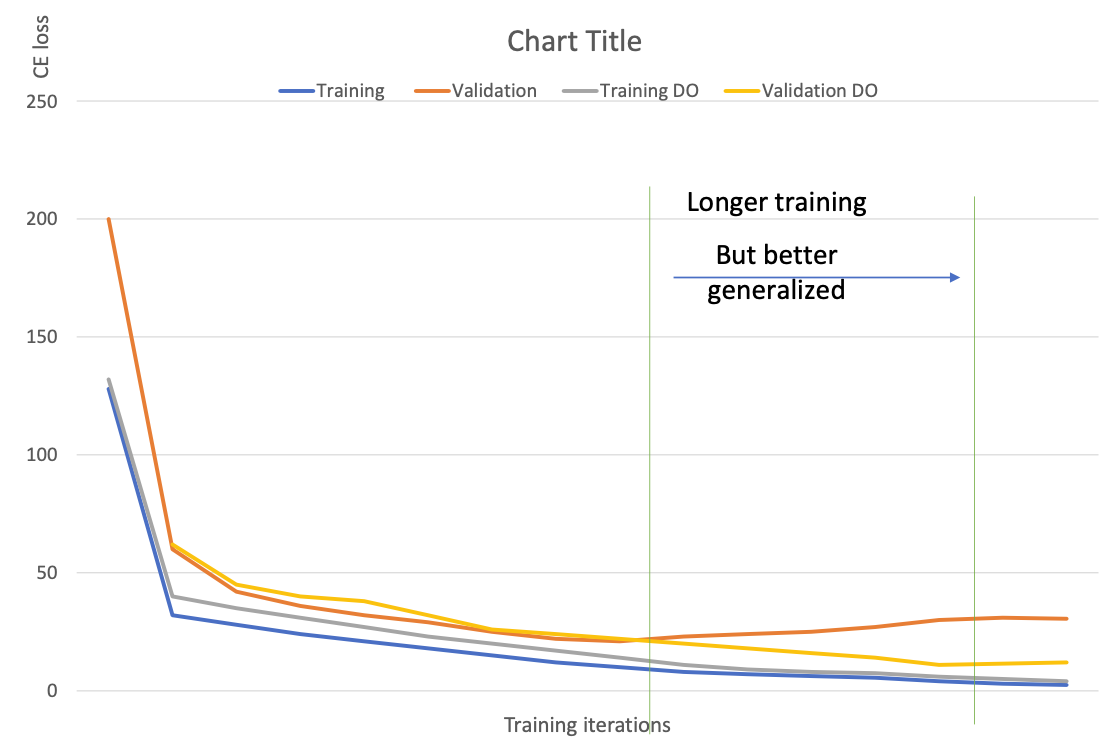

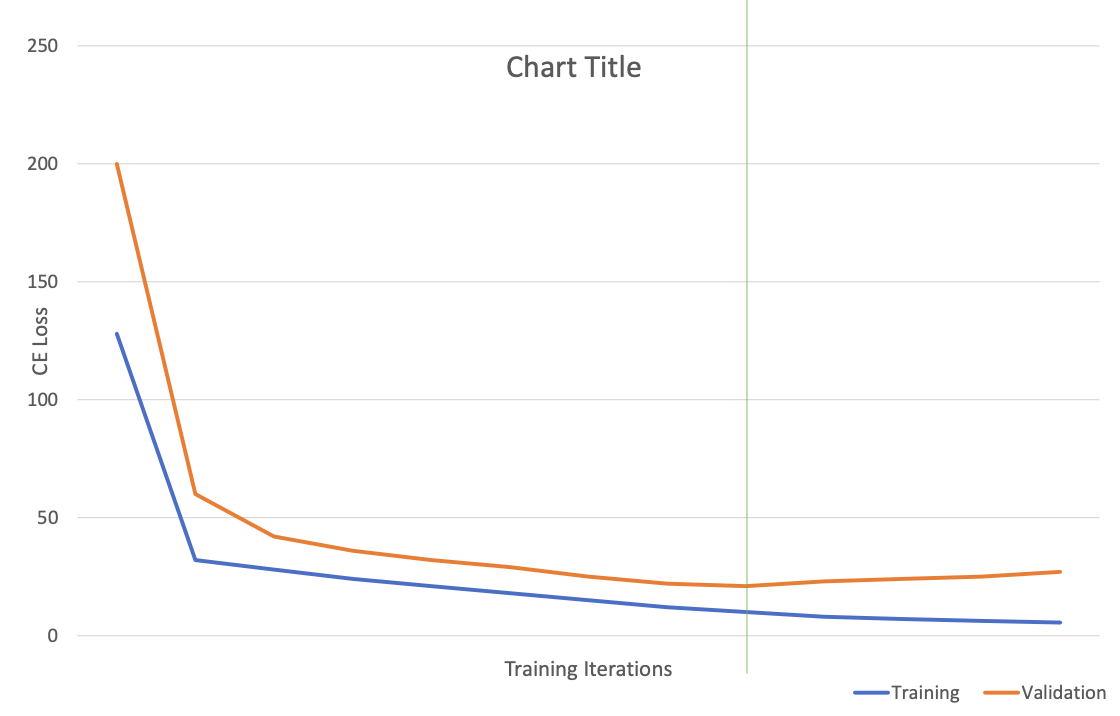

Model Overfitting

To give a neural net more “capacity” to capture different features, we give it a lot of neurons. But this can cause overfitting.

Reason: Co-adaptation

- Neurons become dependent on other neurons

- Imagine a neuron that captures a particular feature which, however, is very frequently seen together with certain other inputs.

- If the neuron receives bad inputs (only part of that combination), then there is a chance that the feature is ignored 🤪

Solution: Dropout! 💪

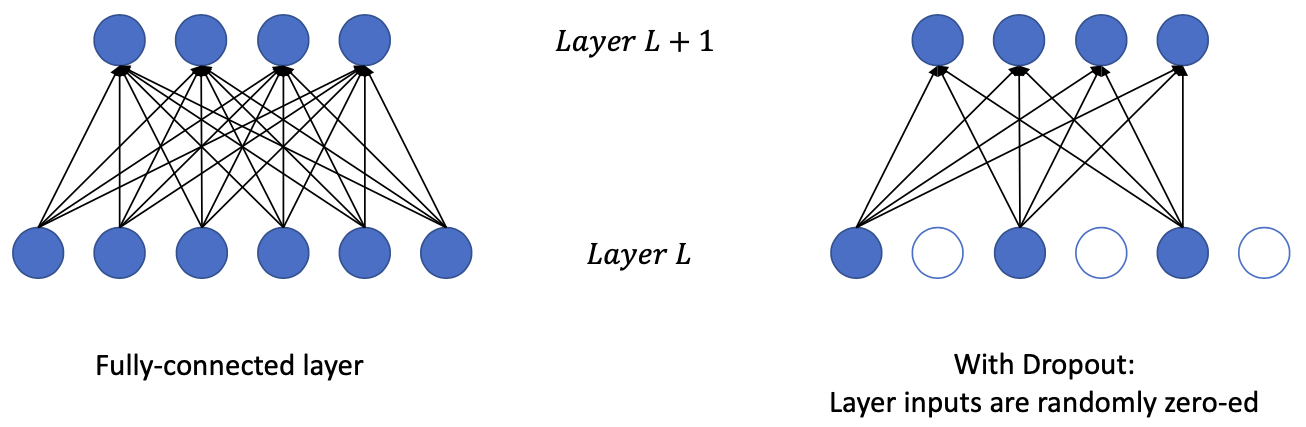

Dropout

With dropout the layer inputs become more sparse, forcing the network weights to become more robust.

Dropping out a neuron = all inputs to and outputs from this neuron are disabled for the current iteration.

Training

Given

- input $x$

- weights $W$

- survival rate $p$ (the probability that a neuron is kept)

- Usually

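Sample a binary mask $m$ with $m_i \sim \mathrm{Bernoulli}(p)$, where $p$ is the survival rate

Dropped input: $\tilde{x} = m \odot x$, where $x$ is the input

Perform backward pass and mask the gradients: $\frac{\partial L}{\partial x} = m \odot \frac{\partial L}{\partial \tilde{x}}$ for loss $L$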
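The training steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function names `dropout_forward` and `dropout_backward`, the example vector, and the all-ones upstream gradient are assumptions made for the example, and `p` is taken to be the survival (keep) probability.

```python
import random

def dropout_forward(x, p, rng):
    """Sample a Bernoulli(p) keep-mask and apply it to the input vector x."""
    # mask[i] = 1.0 keeps x[i] (probability p); mask[i] = 0.0 drops it
    mask = [1.0 if rng.random() < p else 0.0 for _ in x]
    dropped = [m * xi for m, xi in zip(mask, x)]
    return dropped, mask

def dropout_backward(grad_dropped, mask):
    """Pass the gradient only through the inputs that survived."""
    return [m * g for m, g in zip(mask, grad_dropped)]

rng = random.Random(0)            # seeded so the run is repeatable
x = [0.5, -1.0, 2.0, 3.0]         # hypothetical layer input
x_dropped, mask = dropout_forward(x, p=0.5, rng=rng)

# Pretend the upstream gradient w.r.t. the dropped input is all ones
grad_x = dropout_backward([1.0, 1.0, 1.0, 1.0], mask)
```

The same mask is reused in the backward pass, so a dropped input receives zero gradient for that iteration.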

Evaluation/Testing/Inference

ALL input neurons are present, WITHOUT masking

Because each neuron appears only with probability $p$ (the survival rate) in training

So we have to scale the activations by $p$ at test time (or scale by $1/p$ during training) to match the expectation seen in training
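A small simulation can make the expectation argument concrete. The sketch below assumes `p` is the survival probability and compares the two scaling conventions; the helper names (`dropout_train`, `dropout_eval`, `mean_train_output`) and the `inverted` flag are hypothetical names chosen for this example.

```python
import random

def dropout_train(x, p, rng, inverted=False):
    """Training-time dropout; with inverted=True the kept values are scaled by 1/p."""
    mask = [1.0 if rng.random() < p else 0.0 for _ in x]
    scale = 1.0 / p if inverted else 1.0
    return [m * xi * scale for m, xi in zip(mask, x)]

def dropout_eval(x, p, inverted=False):
    """Evaluation: no mask. Scale by p unless training already scaled by 1/p."""
    scale = 1.0 if inverted else p
    return [xi * scale for xi in x]

def mean_train_output(x, p, inverted, trials=20000, seed=0):
    """Average the training-time output over many independently sampled masks."""
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(trials):
        out = dropout_train(x, p, rng, inverted)
        total = [t + o for t, o in zip(total, out)]
    return [t / trials for t in total]

x = [1.0, -2.0, 4.0]   # hypothetical activations
p = 0.8
mean_standard = mean_train_output(x, p, inverted=False)
mean_inverted = mean_train_output(x, p, inverted=True)
```

In both conventions the averaged training output should land close to what `dropout_eval` returns, which is the sense in which the scaling "matches the expectation".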

Why does Dropout work?

- Intuition: Dropout prevents the network from becoming too dependent on a small number of neurons, and forces every neuron to be able to operate independently.

- Each “dropped” instance is a different network configuration

- different networks sharing weights

- The inference process can be understood as an ensemble of these different configurations

- This interpretation is in line with Bayesian Neural Networks
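The ensemble view can be checked exactly in the simplest case. The sketch below assumes a single linear unit (no nonlinearity) and a survival probability `p`. It enumerates every possible mask, weights each "thinned" network by its probability, and compares the average with the full network scaled by `p`. The names `linear` and `ensemble_average` and the numbers used are made up for illustration.

```python
from itertools import product

def linear(w, x):
    """Output of a single linear unit: the dot product of w and x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def ensemble_average(w, x, p):
    """Probability-weighted average output over all 2^n dropout masks."""
    n = len(x)
    total = 0.0
    for mask in product((0, 1), repeat=n):
        kept = sum(mask)
        prob = p ** kept * (1 - p) ** (n - kept)   # probability of this mask
        total += prob * linear(w, [m * xi for m, xi in zip(mask, x)])
    return total

w = [0.2, -0.5, 1.0]   # hypothetical shared weights
x = [1.0, 2.0, 3.0]    # hypothetical input
p = 0.5

avg_over_masks = ensemble_average(w, x, p)   # ensemble of thinned networks
scaled_full = p * linear(w, x)               # one pass through the full network
```

For a linear unit the two values agree up to floating-point error. With nonlinear activations the scaled full network is only an approximation of the ensemble average.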