Generalization

Generalization: the ability to apply what was learned during training to new (test) data

Reasons for bad generalization

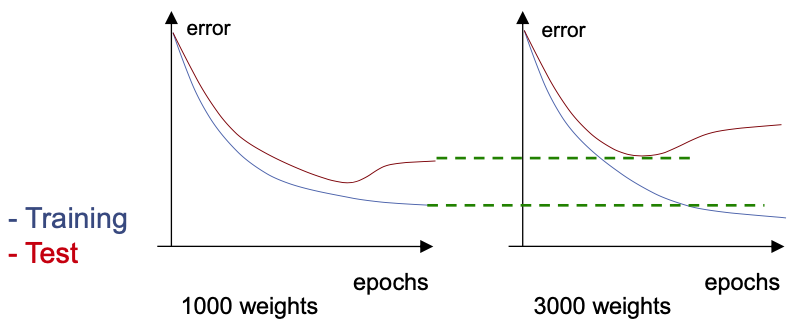

- Overfitting/Overtraining (trained too long)

- Too little training material

- Too many parameters (weights) or an inappropriate network architecture

Prevent Overfitting

- The obviously best approach: Collect More Data! 💪

- If data is limited:

  - Simplest method for best generalization: early stopping

  - Optimize parameters/architecture

  - Architectural learning:

    - Choose the best architecture by repeated experimentation on a cross-validation set

    - Reduce the architecture, starting from a large one

    - Grow the architecture, starting from a small one
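Early stopping, listed above as the simplest method, can be sketched as follows. This is a minimal illustration, assuming the validation-set error has already been computed once per epoch; the function name `early_stopping` and the `patience` parameter (how many epochs without improvement are tolerated) are hypothetical choices, not taken from the notes. In a real training loop, the weights saved at `best_epoch` would be restored after stopping.

```python
def early_stopping(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs; the weights from `best_epoch` are the ones to keep.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best validation error: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stopping criterion.
    return best_epoch, len(val_losses) - 1
```

For example, with validation losses `[1.0, 0.8, 0.6, 0.55, 0.57, 0.6, 0.65, 0.7]` and `patience=3`, the validation error is lowest at epoch 3. It then rises for three epochs, so training would stop at epoch 6 and the epoch-3 weights would be restored.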

Destructive Methods

Reduce the complexity of the network through regularization

Weight Decay
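Weight decay adds a penalty proportional to the sum of squared weights to the error function, which shows up as an extra term in every gradient step. A minimal sketch, assuming plain gradient descent on lists of floats; the function name and the parameters `lr` (learning rate) and `decay` (penalty strength) are illustrative, not from the notes:

```python
def sgd_step_with_weight_decay(weights, grads, lr=0.1, decay=0.01):
    """One gradient-descent step on E(w) + (decay / 2) * sum_i w_i^2.

    The penalty contributes decay * w_i to the gradient of each weight, so every
    weight is pulled toward zero in proportion to its own size.
    """
    return [w - lr * (g + decay * w) for w, g in zip(weights, grads)]
```

With a zero error gradient, each step multiplies every weight by `1 - lr * decay`. For example, `lr=0.1` and `decay=0.5` turn `[1.0, -2.0]` into `[0.95, -1.9]`. Weights that the error does not actively support therefore decay toward zero.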

Weight Elimination
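Weight elimination is commonly formulated with the penalty λ Σᵢ (wᵢ/w₀)² / (1 + (wᵢ/w₀)²). For |wᵢ| much smaller than w₀, it behaves like weight decay. For |wᵢ| much larger than w₀, it saturates at λ per weight, so small weights are driven to zero while large, useful weights are barely penalized further. The sketch below assumes that formulation; the names `lam` and `w0` are illustrative.

```python
def weight_elimination_penalty(weights, lam=0.01, w0=1.0):
    """Penalty lam * sum((w/w0)^2 / (1 + (w/w0)^2)) over all weights."""
    total = 0.0
    for w in weights:
        u = (w / w0) ** 2
        total += u / (1.0 + u)
    return lam * total


def weight_elimination_grad(w, lam=0.01, w0=1.0):
    """Derivative of the penalty w.r.t. one weight: lam * (2w/w0^2) / (1 + w^2/w0^2)^2."""
    u = (w / w0) ** 2
    return lam * (2.0 * w / w0 ** 2) / (1.0 + u) ** 2
```

At w = w₀, each weight contributes λ/2 to the penalty. For very large weights, the gradient of the penalty almost vanishes, unlike plain weight decay, where it keeps growing with w.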

Optimal Brain Surgeon
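As usually stated (Hassibi and Stork), Optimal Brain Surgeon uses a second-order expansion of the error around a trained minimum with the full inverse Hessian H⁻¹. The saliency of weight w_q, together with the correction applied to all remaining weights when w_q is removed, is:

```latex
L_q = \frac{w_q^2}{2\,[\mathbf{H}^{-1}]_{qq}}, \qquad
\delta\mathbf{w} = -\frac{w_q}{[\mathbf{H}^{-1}]_{qq}}\,\mathbf{H}^{-1}\mathbf{e}_q
```

Here e_q is the unit vector selecting weight q. The q-th component of δw is exactly −w_q, so the weight is eliminated. The other weights are adjusted to compensate, and the weight with the smallest L_q is removed first.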

Optimal Brain Damage

- 💡Idea: Certain connections are removed from the network to reduce complexity and to avoid overfitting

- Remove those connections that have the least effect on the error (MSE, ...), i.e. those that are the least important.

- But this is time-consuming (difficult) 🤪
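Optimal Brain Damage (LeCun et al.) measures a connection's importance by its saliency sₖ = ½ hₖₖ wₖ², a second-order estimate of how much the error grows if wₖ is set to zero. It assumes a diagonal Hessian. The sketch below assumes the diagonal Hessian entries `hessian_diag` are already available. Computing them is the expensive part that makes pruning time-consuming. The function names are hypothetical.

```python
def obd_saliencies(weights, hessian_diag):
    """OBD saliency s_k = 0.5 * h_kk * w_k^2 for each weight (diagonal-Hessian approximation)."""
    return [0.5 * h * w * w for w, h in zip(weights, hessian_diag)]


def prune_least_salient(weights, hessian_diag, n_prune):
    """Return a copy of `weights` with the n_prune lowest-saliency weights set to zero."""
    saliencies = obd_saliencies(weights, hessian_diag)
    # Indices sorted from least to most important.
    order = sorted(range(len(weights)), key=lambda k: saliencies[k])
    pruned = list(weights)
    for k in order[:n_prune]:
        pruned[k] = 0.0
    return pruned
```

With `weights=[0.5, -2.0, 0.3, 1.0]` and `hessian_diag=[1.0, 0.01, 4.0, 2.0]`, pruning two weights removes `-2.0` and `0.5`. The largest-magnitude weight is removed because the error surface is almost flat in its direction, whereas pruning by magnitude alone would have removed `0.3`. In practice, the network is retrained after each pruning step.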

Constructive Methods

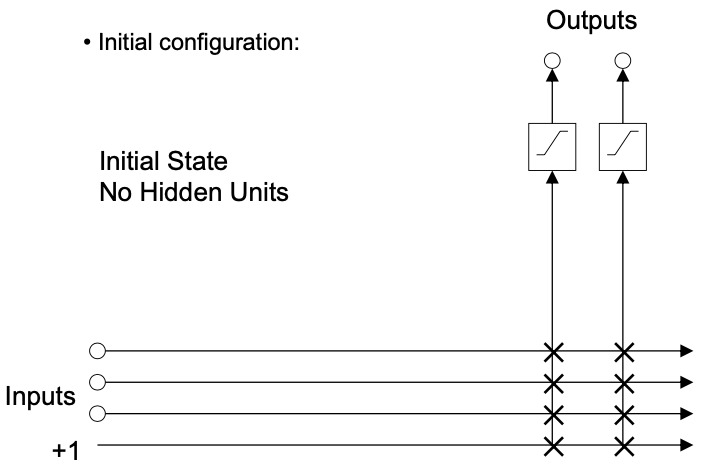

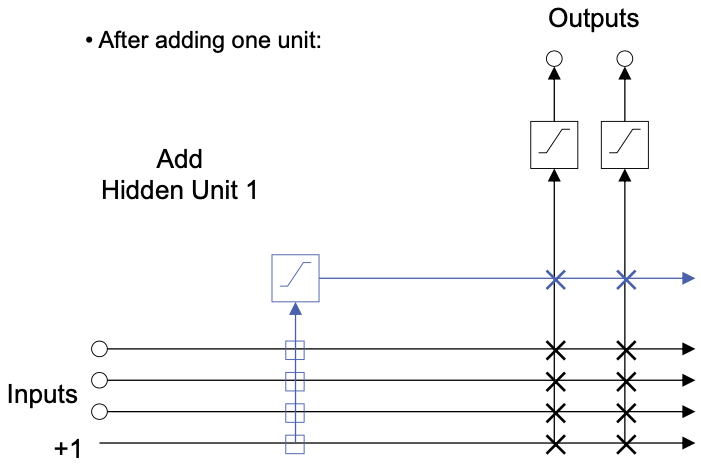

Iteratively growing a network (constructive), starting from a very small one:

- Cascade Correlation

- Meiosis Networks

- ASO (Automatic Structure Optimization)

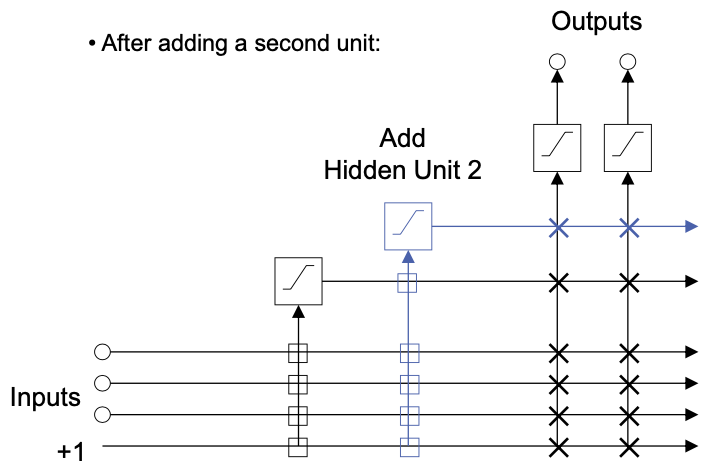

Cascade Correlation

Adding a hidden unit

- Input connections from all input units and from all already existing hidden units

- At first, only these connections are adapted

- Maximize the correlation between the activation of the candidate units and the residual error of the net
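In Fahlman and Lebiere's formulation, the quantity maximized for a candidate unit is S = Σ_o |Σ_p (V_p − V̄)(E_{p,o} − Ē_o)|. Here V_p is the candidate's activation on training pattern p, and E_{p,o} is the residual error at output o. Despite being called a correlation, S is an unnormalized covariance. The candidate's input weights are adjusted by gradient ascent on S, then frozen when the unit is installed. The function name and the list-of-lists data layout below are illustrative assumptions.

```python
def candidate_correlation(candidate_acts, residual_errors):
    """Cascade-Correlation score S = sum_o | sum_p (V_p - mean V) * (E_{p,o} - mean E_o) |.

    candidate_acts:  candidate activations V_p, one per training pattern p
    residual_errors: per-pattern lists E_{p,o}, one entry per output unit o
    """
    n_patterns = len(candidate_acts)
    n_outputs = len(residual_errors[0])
    mean_v = sum(candidate_acts) / n_patterns
    score = 0.0
    for o in range(n_outputs):
        mean_e = sum(errs[o] for errs in residual_errors) / n_patterns
        cov = sum((v - mean_v) * (errs[o] - mean_e)
                  for v, errs in zip(candidate_acts, residual_errors))
        # Absolute value: the sign does not matter, since the output weight
        # connecting the new unit can be negative.
        score += abs(cov)
    return score
```

A candidate whose activation tracks the residual error, such as activations `[0, 1, 0, 1]` against errors `[[-1], [1], [-1], [1]]`, scores 2.0. So does an exactly anti-correlated candidate. A constant candidate scores 0.0 and would be useless for reducing the error.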

- It is not necessary to determine the number of hidden units empirically

- Can produce deep networks without a dramatic slowdown (bottom-up, constructive learning)

- At each step, only one layer of connections is trained

- Learning is fast

- Learning is incremental

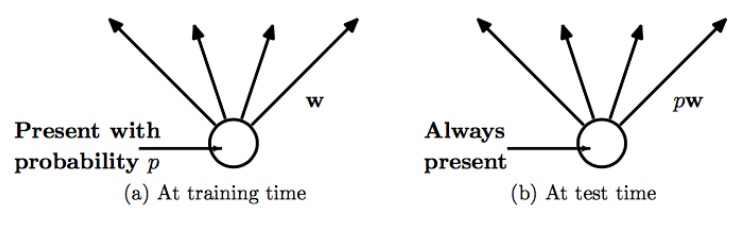

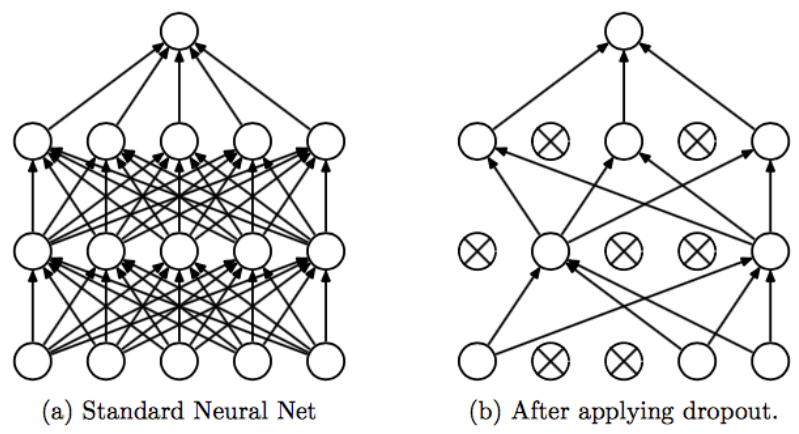

Dropout

A popular and very effective method for improving generalization

💡Idea

- Randomly drop out (zero) hidden units and input features during training

- Prevents feature co-adaptation
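The training and test behavior of dropout can be sketched with the common "inverted dropout" variant. During training, each unit is zeroed with probability `p_drop`, and the surviving activations are scaled by 1/(1 − p_drop). At test time, the layer is left unchanged. The original formulation instead leaves training activations unscaled and multiplies the weights by the keep probability at test time. Both keep the expected activation the same in training and test. The function and parameter names are illustrative.

```python
import random


def dropout(activations, p_drop=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations.

    Training: each unit is zeroed with probability p_drop and the survivors are
    scaled by 1 / (1 - p_drop), so the expected activation is unchanged.
    Test: nothing is dropped and no scaling is needed.
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a different random subset of units is dropped for every training example, no unit can rely on the presence of specific other units. This is the co-adaptation that dropout is meant to prevent.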

Illustration

Dropout training & test