Softmax and Its Derivative

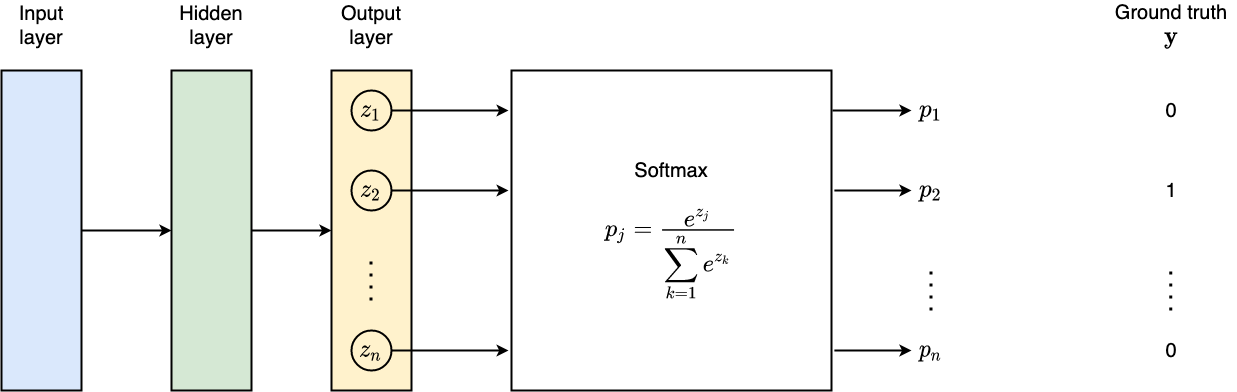

We use the softmax activation function to predict the probability assigned to each of $n$ classes. The probability assigned to class $j$ is:

$$
p_j = \operatorname{softmax}(z_j) = \frac{e^{z_j}}{\sum_{k=1}^n e^{z_k}}
$$
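To make the formula concrete, here is a minimal sketch in plain Python. The function name `softmax` and the example logits are illustrative assumptions, and subtracting the maximum logit is a common numerical-stability trick that is not part of the formula above (it cancels in the ratio, so the result is unchanged).

```python
import math

def softmax(z):
    """Return softmax probabilities for a list of logits z."""
    # Subtracting the maximum logit does not change the result
    # mathematically, but it avoids overflow in exp for large inputs.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

p = softmax([2.0, 1.0, 0.1])  # hypothetical logits
print(p)       # three positive values, largest for the largest logit
print(sum(p))  # sums to 1 up to floating-point rounding
```

The outputs are all positive and sum to one, which is what allows them to be read as class probabilities.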

Furthermore, we use one-hot encoding to represent the ground truth $y$, which means:

$$
\sum_{k=1}^n y_k = 1
$$

Loss function (cross-entropy):

$$
\begin{aligned}
L &= -\sum_{k=1}^n y_k \log(p_k) \\
&= -\left(y_j \log(p_j) + \sum_{k \neq j} y_k \log(p_k)\right)
\end{aligned}
$$
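As a rough illustration of the loss, the sketch below builds a one-hot target and evaluates the cross-entropy for a made-up probability vector. The helper names `one_hot` and `cross_entropy` and the numbers are assumptions for this example, and indices are 0-based here whereas the formulas count from 1.

```python
import math

def one_hot(j, n):
    """Return a length-n one-hot vector with a 1 at index j (0-based)."""
    return [1.0 if k == j else 0.0 for k in range(n)]

def cross_entropy(y, p):
    """L = -sum_k y_k * log(p_k); terms with y_k == 0 contribute nothing."""
    return -sum(yk * math.log(pk) for yk, pk in zip(y, p) if yk != 0.0)

y = one_hot(1, 3)      # true class is index 1
p = [0.2, 0.7, 0.1]    # hypothetical predicted probabilities
loss = cross_entropy(y, p)
print(loss)            # equals -log(0.7), since only the true class survives
```

With a one-hot target, every term except the one for the true class vanishes, so the loss reduces to the negative log-probability of that class.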

Gradient w.r.t. $z_j$:

$$
\begin{aligned}
\frac{\partial}{\partial z_j}L
&= \frac{\partial}{\partial z_j} \left(-\sum_{k=1}^n y_k \log(p_k)\right) \\
&= -\frac{\partial}{\partial z_j} \left(y_j \log(p_j) + \sum_{k \neq j} y_k \log(p_k)\right) \\
&= -\left(\frac{\partial}{\partial z_j} y_j \log(p_j) + \frac{\partial}{\partial z_j}\sum_{k \neq j} y_k \log(p_k)\right)
\end{aligned}
$$
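The next step relies on the derivative of $\log p_k$ with respect to $z_j$, which is used without being stated. A short sketch of where it comes from, writing $\log p_k = z_k - \log \sum_{m=1}^n e^{z_m}$ (the summation index $m$ and the Kronecker delta $\delta_{kj}$ are notation introduced here, not in the text above):

$$
\begin{aligned}
\frac{\partial}{\partial z_j} \log(p_k)
&= \frac{\partial z_k}{\partial z_j} - \frac{e^{z_j}}{\sum_{m=1}^n e^{z_m}} \\
&= \delta_{kj} - p_j
\end{aligned}
$$

So the derivative is $1 - p_j$ when $k = j$ and $-p_j$ when $k \neq j$. The targets $y_k$ do not depend on $z_j$, so they pass through the derivative as constants.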

Therefore,

$$
\begin{aligned}
\frac{\partial}{\partial z_j}L
&= -\left(\frac{\partial}{\partial z_j} y_j \log(p_j) + \frac{\partial}{\partial z_j}\sum_{k \neq j} y_k \log(p_k)\right) \\
&= -\left(y_j(1-p_j) - \sum_{k \neq j} y_k p_j\right) \\
&= -\left(y_j - y_j p_j - \sum_{k \neq j} y_k p_j\right) \\
&= -\left(y_j - \left(y_j p_j + \sum_{k \neq j} y_k p_j\right)\right) \\
&= -\left(y_j - \sum_{k=1}^n y_k p_j\right) \\
&\overset{\sum_{k=1}^{n} y_k = 1}{=} -\left(y_j - p_j\right) \\
&= p_j - y_j
\end{aligned}
$$
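One way to sanity-check the result $p_j - y_j$ is to compare it against a finite-difference estimate of the gradient. The sketch below does this in plain Python. The function names, the logits, and the step size `eps` are assumptions chosen for illustration.

```python
import math

def softmax(z):
    """Softmax over a list of logits, shifted by the max for stability."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def loss(z, y):
    """Cross-entropy of softmax(z) against a one-hot target y."""
    p = softmax(z)
    return -sum(yk * math.log(pk) for yk, pk in zip(y, p) if yk != 0.0)

def numerical_grad(z, y, eps=1e-6):
    """Central finite-difference estimate of dL/dz_j for each j."""
    grad = []
    for j in range(len(z)):
        z_plus = list(z)
        z_plus[j] += eps
        z_minus = list(z)
        z_minus[j] -= eps
        grad.append((loss(z_plus, y) - loss(z_minus, y)) / (2 * eps))
    return grad

z = [2.0, 1.0, 0.1]  # hypothetical logits
y = [0.0, 1.0, 0.0]  # one-hot target
analytic = [pj - yj for pj, yj in zip(softmax(z), y)]  # p_j - y_j
numeric = numerical_grad(z, y)
max_diff = max(abs(a - b) for a, b in zip(analytic, numeric))
print(max_diff)  # should be very small if the derivation holds
```

The analytic gradient also sums to zero across classes, since both $p$ and $y$ sum to one.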

Useful resources