Boltzmann Machine

- Stochastic recurrent neural network

- Introduced by Hinton and Sejnowski

- Learn internal representations

- Problem: unconstrained connectivity

Representation

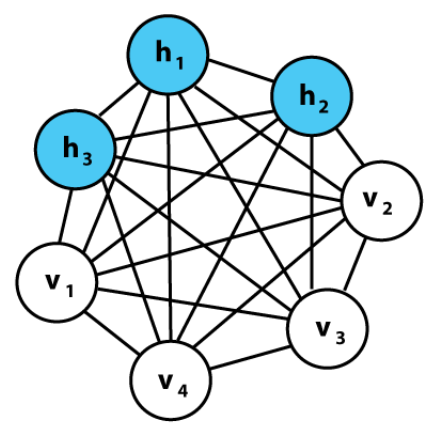

The model can be represented as a graph:

Undirected graph

Nodes: States

States

Types:

- Visible states

- Represent observed data

- Can be input/output data

- Hidden states

- Latent variables we want to learn

- Bias states

- Always set to 1 to encode the bias

Binary states

- Unit value: $s_i \in \{0, 1\}$

Stochastic

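The decision whether a state is active or not is made stochastically

It depends on the input

$$ z_i = b_i + \sum_j s_j w_{ij} $$

$$ p(s_i = 1) = \frac{1}{1 + e^{-z_i}} $$

- $b_i$: Bias

- $s_j$: State

- $w_{ij}$: Weight between state $i$ and state $j$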
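The stochastic decision can be sketched in a few lines of Python. This is only an illustration: it assumes the usual logistic rule $p(s_i = 1) = 1 / (1 + e^{-z_i})$ with $z_i = b_i + \sum_j s_j w_{ij}$, and the 3-unit network, its weights and the function names are made up for the example.

```python
import math
import random


def activation_probability(i, states, weights, biases):
    """p(s_i = 1) = sigmoid(b_i + sum_j w_ij * s_j), skipping j == i."""
    z = biases[i] + sum(
        weights[i][j] * states[j] for j in range(len(states)) if j != i
    )
    return 1.0 / (1.0 + math.exp(-z))


def sample_unit(i, states, weights, biases, rng=random):
    """Draw a new binary value for unit i given all other units."""
    p_on = activation_probability(i, states, weights, biases)
    return 1 if rng.random() < p_on else 0


# Hypothetical 3-unit network (symmetric weights, zero diagonal).
weights = [[0.0, 2.0, -1.0],
           [2.0, 0.0, 0.5],
           [-1.0, 0.5, 0.0]]
biases = [0.0, -1.0, 0.5]
states = [1, 0, 1]

# Input to unit 1 is -1.0 + 2.0 + 0.5 = 1.5, so p is sigmoid(1.5).
p = activation_probability(1, states, weights, biases)
print(f"p(s_1 = 1) = {p:.3f}")
```

The unit is more likely to switch on the larger its total input is, but it is never forced to: even a strongly driven unit stays off with a small probability.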

Connections

Graph can be fully connected (no restrictions)

Undirected: $w_{ij} = w_{ji}$

No self connections: $w_{ii} = 0$

Energy

Energy of the network

$$ \begin{aligned} E &= -\frac{1}{2} S^TWS - b^TS \\ &= -\sum_{i<j} w_{ij} s_i s_j - \sum_i b_i s_i \end{aligned} $$

Updating the nodes

Decreases the energy of the network on average

The network reaches a local minimum (equilibrium)

The stochastic process helps avoid getting stuck in poor local minima
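A minimal sketch of the update process, assuming the pairwise energy $E = -\sum_{i<j} w_{ij} s_i s_j - \sum_i b_i s_i$ and a logistic update for each unit. The network, the random update order and the names `energy` and `gibbs_sweep` are choices made for this example, not something the notes prescribe.

```python
import math
import random


def energy(states, weights, biases):
    """E = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i (each pair counted once)."""
    n = len(states)
    pair = sum(
        weights[i][j] * states[i] * states[j]
        for i in range(n) for j in range(i + 1, n)
    )
    return -pair - sum(b * s for b, s in zip(biases, states))


def gibbs_sweep(states, weights, biases, rng):
    """Resample every unit once, in random order, given all the others."""
    order = list(range(len(states)))
    rng.shuffle(order)
    for i in order:
        z = biases[i] + sum(
            weights[i][j] * states[j] for j in range(len(states)) if j != i
        )
        states[i] = 1 if rng.random() < 1.0 / (1.0 + math.exp(-z)) else 0
    return states


# Hypothetical 3-unit network.
rng = random.Random(0)
weights = [[0.0, 2.0, -1.0],
           [2.0, 0.0, 0.5],
           [-1.0, 0.5, 0.0]]
biases = [0.0, -1.0, 0.5]
states = [rng.randint(0, 1) for _ in range(3)]

energies = []
for _ in range(200):
    gibbs_sweep(states, weights, biases, rng)
    energies.append(energy(states, weights, biases))

print("mean energy over sweeps:", sum(energies) / len(energies))
```

Single updates can raise the energy. That is what lets the network leave a poor minimum, while low-energy states are still visited more often.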

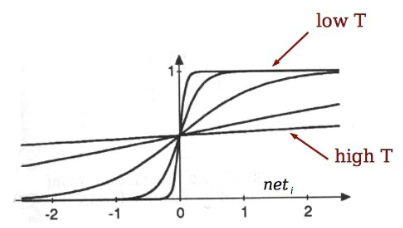

Simulated Annealing

Use a temperature $T$ to allow for more changes in the beginning

Start with a high temperature

“Anneal” by slowly lowering $T$

Can escape from local minima 👏
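The effect of the temperature can be illustrated with a small sketch. It assumes the temperature divides the unit's input inside the logistic function, $p = 1 / (1 + e^{-z/T})$, and uses a geometric cooling schedule. Both the schedule and the names `p_on` and `geometric_schedule` are assumptions made for the example.

```python
import math


def p_on(z, temperature):
    """Probability that a unit with input z switches on at temperature T."""
    return 1.0 / (1.0 + math.exp(-z / temperature))


def geometric_schedule(t_start, t_end, cooling):
    """Yield temperatures t_start, t_start * cooling, ... while above t_end."""
    t = t_start
    while t > t_end:
        yield t
        t *= cooling


# At high T the unit is close to a coin flip; at low T it almost always
# follows the sign of its input.
for t in geometric_schedule(10.0, 0.1, 0.5):
    print(f"T = {t:7.4f}   p(on | z = 2) = {p_on(2.0, t):.3f}")
```

A slower schedule (a cooling factor closer to 1) gives the network more time at each temperature, at the cost of more updates.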

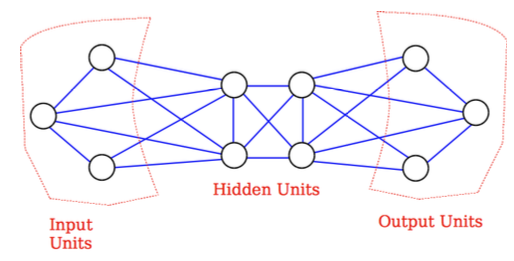

Search Problem

Input is set and fixed (clamped)

Annealing is done

Answer is presented at the output

Hidden units add extra representational power
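The search procedure can be sketched as annealing with some units held fixed. In this hypothetical 4-unit network, units 0 and 1 are the clamped input, unit 2 is hidden and unit 3 is read as the output. The weights, the schedule and the function name `clamped_search` are illustrative assumptions.

```python
import math
import random


def clamped_search(weights, biases, clamped, temperatures, seed=0):
    """Anneal the free units while the clamped units keep their values.

    clamped: dict mapping unit index -> fixed value (the input).
    temperatures: decreasing sequence of temperatures.
    """
    rng = random.Random(seed)
    n = len(biases)
    states = [clamped.get(i, rng.randint(0, 1)) for i in range(n)]
    free = [i for i in range(n) if i not in clamped]
    for t in temperatures:
        for i in free:  # clamped units are never resampled
            z = biases[i] + sum(
                weights[i][j] * states[j] for j in range(n) if j != i
            )
            p_on = 1.0 / (1.0 + math.exp(-z / t))
            states[i] = 1 if rng.random() < p_on else 0
    return states


# Hypothetical network: inputs 0 and 1, hidden unit 2, output unit 3.
weights = [[0.0, 0.0, 1.5, 0.0],
           [0.0, 0.0, -1.5, 0.0],
           [1.5, -1.5, 0.0, 2.0],
           [0.0, 0.0, 2.0, 0.0]]
biases = [0.0, 0.0, -0.5, -1.0]
temperatures = [10.0 * 0.8 ** k for k in range(25)]

result = clamped_search(weights, biases, {0: 1, 1: 0}, temperatures)
print("input:", result[:2], "hidden:", result[2], "output:", result[3])
```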

Learning problem

Situations

- Present data vectors to the network

Problem

- Learn weights that generate these data with high probability

Approach

- Perform small updates on the weights

- Each time, solve the search problem
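A rough sketch of the "small updates" idea follows. It makes several simplifying assumptions that are not in the notes: there are no hidden units, the update is the standard Boltzmann learning rule $\Delta w_{ij} \propto \langle s_i s_j \rangle_{data} - \langle s_i s_j \rangle_{model}$, and the model statistics are computed by exact enumeration of all states instead of by running the search. Enumeration is only feasible for very small networks. The data set and all function names are made up.

```python
import itertools
import math


def energy(s, w, b):
    """E = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(bi * si for bi, si in zip(b, s))


def model_distribution(w, b):
    """Exact Boltzmann distribution over all 2^n states (tiny n only)."""
    configs = list(itertools.product([0, 1], repeat=len(b)))
    unnorm = [math.exp(-energy(s, w, b)) for s in configs]
    z = sum(unnorm)
    return configs, [u / z for u in unnorm]


def log_likelihood(data, w, b):
    """Average log-probability the model assigns to the data vectors."""
    lookup = dict(zip(*model_distribution(w, b)))
    return sum(math.log(lookup[tuple(s)]) for s in data) / len(data)


def train(data, lr=0.1, steps=200):
    n = len(data[0])
    w = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for _ in range(steps):
        configs, probs = model_distribution(w, b)
        for i in range(n):
            data_mean = sum(s[i] for s in data) / len(data)
            model_mean = sum(p * s[i] for s, p in zip(configs, probs))
            b[i] += lr * (data_mean - model_mean)
            for j in range(i + 1, n):
                data_corr = sum(s[i] * s[j] for s in data) / len(data)
                model_corr = sum(p * s[i] * s[j] for s, p in zip(configs, probs))
                w[i][j] += lr * (data_corr - model_corr)
                w[j][i] = w[i][j]  # keep the weights symmetric
    return w, b


# Made-up data vectors for a 3-unit, fully visible network.
data = [(1, 1, 0), (1, 1, 0), (0, 0, 1), (1, 1, 1)]
w, b = train(data)
print("log-likelihood before:", math.log(1 / 8))
print("log-likelihood after: ", log_likelihood(data, w, b))
```

With hidden units the data statistics also require sampling (with the visible units clamped), which is one reason training is slow.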

Pros & Cons

✅ Pros

- A Boltzmann machine with enough hidden units can compute any function

⛔️ Cons

- Training is very slow and computationally expensive 😢

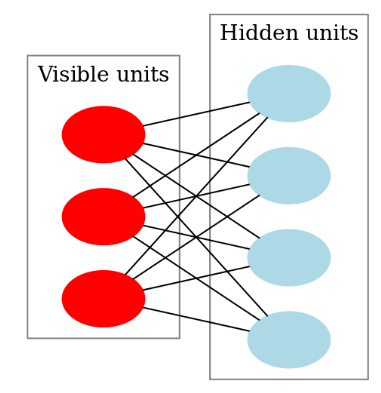

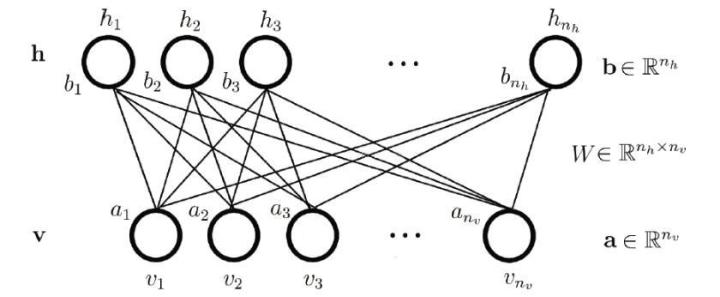

Restricted Boltzmann Machine

Boltzmann machine with restriction

Graph must be bipartite

Set of visible units

Set of hidden units

✅ Advantage

- No connection between hidden units

- Efficient training

Energy

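Energy:

$$ E(v, h) = -b^Tv - c^Th - v^TWh $$

Probability of hidden unit:

$$ p(h_j = 1 \mid v) = \sigma\left(c_j + \sum_i v_i w_{ij}\right) $$

Probability of input vector:

$$ p(v) = \frac{1}{Z} \sum_h e^{-E(v, h)} = \frac{e^{-F(v)}}{Z} $$

Free Energy:

$$ F(v) = -b^Tv - \sum_j \log\left(1 + e^{c_j + \sum_i v_i w_{ij}}\right) $$

Here $v$ and $h$ are the visible and hidden states, $b$ and $c$ their biases, $W$ the visible-to-hidden weights, $\sigma$ the logistic sigmoid, and $Z$ the partition function.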
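The four quantities listed above can be checked numerically on a tiny RBM. The sketch assumes the standard binary RBM with energy $E(v, h) = -b^Tv - c^Th - v^TWh$, where $b$ and $c$ are the visible and hidden biases. The notation, the weights and the function names are assumptions made for the example.

```python
import itertools
import math


def energy(v, h, W, b, c):
    """E(v, h) = -b.v - c.h - v^T W h."""
    interaction = sum(
        v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h))
    )
    return (-sum(bi * vi for bi, vi in zip(b, v))
            - sum(cj * hj for cj, hj in zip(c, h))
            - interaction)


def hidden_input(v, W, c, j):
    """Total input to hidden unit j: c_j + sum_i v_i W_ij."""
    return c[j] + sum(v[i] * W[i][j] for i in range(len(v)))


def hidden_probabilities(v, W, c):
    """p(h_j = 1 | v) for every hidden unit; they are independent given v."""
    return [1.0 / (1.0 + math.exp(-hidden_input(v, W, c, j)))
            for j in range(len(c))]


def free_energy(v, W, b, c):
    """F(v) = -b.v - sum_j log(1 + exp(c_j + sum_i v_i W_ij))."""
    softplus = sum(math.log1p(math.exp(hidden_input(v, W, c, j)))
                   for j in range(len(c)))
    return -sum(bi * vi for bi, vi in zip(b, v)) - softplus


# Hypothetical RBM with 3 visible and 2 hidden units.
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.3, -0.5]
v = [1, 0, 1]

# exp(-F(v)) should equal the sum of exp(-E(v, h)) over all hidden states.
marginal = sum(math.exp(-energy(v, h, W, b, c))
               for h in itertools.product([0, 1], repeat=len(c)))
print(math.exp(-free_energy(v, W, b, c)), marginal)
print(hidden_probabilities(v, W, c))
```

Because there are no connections between hidden units, all hidden probabilities follow from one pass over the visible vector, and the sum over hidden states collapses into the closed-form free energy.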