SVM: Basics

🎯 Goal of SVM

Find the optimal separating hyperplane, i.e. the one that maximizes the margin to the training data

- it correctly classifies the training data

- it generalizes better to unseen data, because it lies as far as possible from the data points of each category

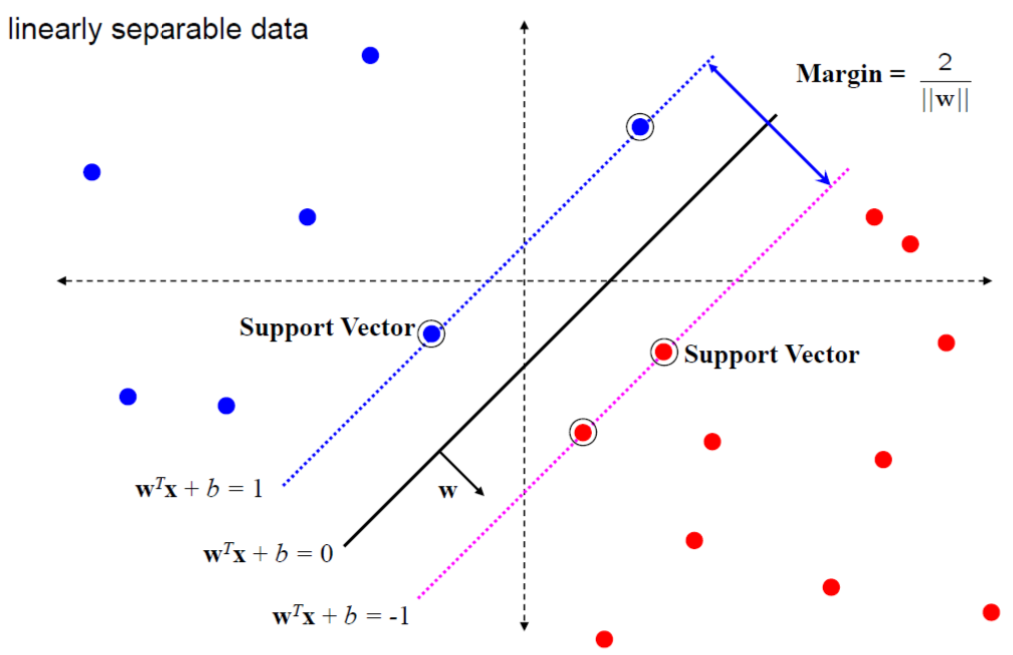

SVM math formulation

Assuming the data is linearly separable

Decision boundary: the hyperplane $w^T x + b = 0$

Support vectors: the data points closest to the decision boundary (all other examples can be ignored)

- Positive support vectors: $w^T x_+ + b = +1$

- Negative support vectors: $w^T x_- + b = -1$

Why do we use 1 and -1 as class labels?

- This makes the math manageable, because -1 and 1 are only different by the sign. We can write a single equation to describe the margin or how close a data point is to our separating hyperplane and not have to worry if the data is in the -1 or +1 class.

- If a point is far away from the separating plane on the positive side, then $w^T x + b$ will be a large positive number, and $y \cdot (w^T x + b)$ will give us a large positive number. If it is far away on the negative side and has a negative label, $y \cdot (w^T x + b)$ will also give us a large positive number.

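Margin: the distance between the support vectors and the decision boundary, $\frac{1}{\|w\|}$ when the hyperplane $w^T x + b = 0$ is scaled so that the support vectors satisfy $|w^T x + b| = 1$; it should be maximized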
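The two quantities discussed above, the signed score $y \cdot (w^T x + b)$ and the geometric distance to the hyperplane, can be computed in a few lines. This is a minimal NumPy sketch; the function names and the toy values of `w`, `b`, `X`, `y` are assumptions made for illustration only.

```python
import numpy as np

def functional_margin(w, b, X, y):
    """Return y_i * (w^T x_i + b) for every row x_i of X."""
    return y * (X @ w + b)

def geometric_distance(w, b, X):
    """Return the unsigned distance of every row of X to the hyperplane w^T x + b = 0."""
    return np.abs(X @ w + b) / np.linalg.norm(w)

# Hypothetical toy hyperplane and points (not taken from the notes).
w = np.array([1.0, 1.0])
b = -3.0
X = np.array([[2.0, 2.0], [1.0, 1.0], [4.0, 3.0]])
y = np.array([1.0, -1.0, 1.0])

print(functional_margin(w, b, X, y))  # positive for every correctly classified point
print(geometric_distance(w, b, X))    # the smallest value is the margin
```

In this toy setup the first two points have a functional margin of exactly 1, so they play the role of the support vectors, and their distance to the hyperplane equals $1 / \|w\|$.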

SVM optimization problem

Requirement:

- Maximal margin

- Correct classification

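Based on these requirements, we have:

$$
\underset{w, b}{\arg\max} \; \frac{1}{\|w\|} \quad \text{s.t.} \quad y_i (w^T x_i + b) \geq 1 \quad \forall i
$$

Reformulation:

$$
\underset{w, b}{\arg\min} \; \|w\|^2 \quad \text{s.t.} \quad y_i (w^T x_i + b) \geq 1 \quad \forall i
$$

This is the hard margin SVM.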
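The step from "maximal margin" to a minimization problem can be spelled out as follows. This is a short sketch under the usual scaling assumption that the support vectors satisfy $|w^T x + b| = 1$; the symbols $w$, $b$, $x_i$, $y_i$ denote the hyperplane parameters and the labelled training samples.

```latex
\begin{align*}
\text{distance of } x \text{ to the hyperplane} &= \frac{|w^T x + b|}{\|w\|} \\
\text{margin (support vectors, } |w^T x + b| = 1) &= \frac{1}{\|w\|} \\
\underset{w, b}{\arg\max} \; \frac{1}{\|w\|}
  &= \underset{w, b}{\arg\min} \; \|w\|
   = \underset{w, b}{\arg\min} \; \|w\|^2
\end{align*}
```

The last equality holds because squaring is monotone on non-negative numbers. The squared form is preferred because it turns the problem into a convex quadratic program with linear constraints.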

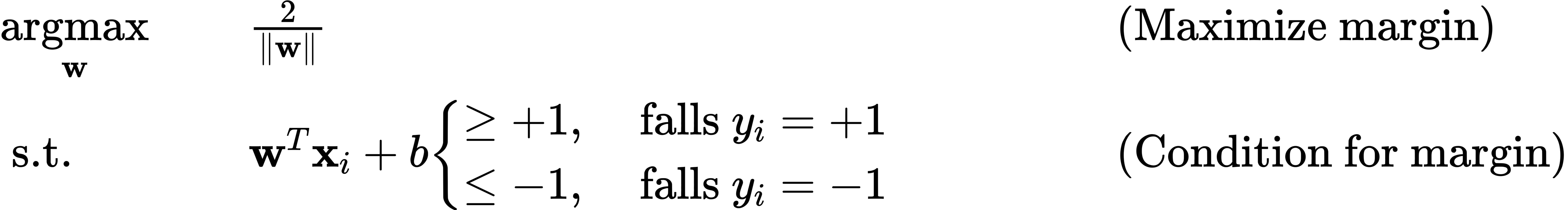

Soft margin SVM

💡 Idea

“Allow the classifier to make some mistakes” (Soft margin)

➡️ Trade-off between margin and classification accuracy

Slack variables $\xi_i \geq 0$:

💡 Allow violating the margin conditions: $y_i (w^T x_i + b) \geq 1 - \xi_i$

- $0 < \xi_i \leq 1$: sample is between margin and decision boundary (margin violation)

- $\xi_i > 1$: sample is on the wrong side of the decision boundary (misclassified)

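Soft Max-Margin

Optimization problem

$$
\underset{w, b, \xi}{\arg\min} \; \|w\|^2 + C \sum_i \xi_i \quad \text{s.t.} \quad y_i (w^T x_i + b) \geq 1 - \xi_i, \quad \xi_i \geq 0
$$

- $C$: regularization parameter, determines how important the slack $\xi$ should be

- Small $C$: constraints have little influence ➡️ large margin

- Large $C$: constraints have large influence ➡️ small margin

- $C$ infinite: constraints are enforced ➡️ hard margin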

Soft SVM Optimization

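Reformulate into an unconstrained optimization problem

- Rewrite constraints: $\xi_i \geq 1 - y_i (w^T x_i + b) = 1 - y_i f(x_i)$

- Together with $\xi_i \geq 0$: $\xi_i = \max(0, 1 - y_i f(x_i))$

Unconstrained optimization (over $w$):

$$
\underset{w}{\arg\min} \; \|w\|^2 + C \sum_i \max(0, 1 - y_i f(x_i))
$$

Points are in 3 categories:

$y_i f(x_i) > 1$: Point outside margin, no contribution to loss

$y_i f(x_i) = 1$: Point is on the margin, no contribution to loss as in hard margin

$y_i f(x_i) < 1$: Point violates the margin, contributes to loss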
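To make the three categories concrete, the sketch below evaluates an unconstrained soft-margin objective on a toy data set and labels each point. It assumes the objective has the form $\|w\|^2 + C \sum_i \max(0, 1 - y_i f(x_i))$ with $f(x) = w^T x + b$ (some texts use $\frac{1}{2}\|w\|^2$ instead); the helper names and the toy numbers are hypothetical.

```python
import numpy as np

def svm_objective(w, b, X, y, C):
    """Assumed soft-margin objective: ||w||^2 + C * sum of hinge terms."""
    margins = y * (X @ w + b)
    hinge = np.maximum(0.0, 1.0 - margins)
    return w @ w + C * hinge.sum()

def category(margin, tol=1e-9):
    """Classify a point by its value of y_i * f(x_i)."""
    if margin > 1.0 + tol:
        return "outside margin"
    if margin >= 1.0 - tol:
        return "on margin"
    return "violates margin"

# Hypothetical toy data: all labels are +1, points differ in their first coordinate.
w = np.array([1.0, 0.0])
b = 0.0
X = np.array([[2.0, 0.0], [1.0, 0.0], [0.5, 0.0], [-1.0, 0.0]])
y = np.array([1.0, 1.0, 1.0, 1.0])

margins = y * (X @ w + b)
labels = [category(m) for m in margins]
print(labels)
print(svm_objective(w, b, X, y, C=1.0))
```

Only the last two points add to the sum; the first two contribute nothing, which is why moving or removing them (as long as they stay outside the margin) would not change the objective.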

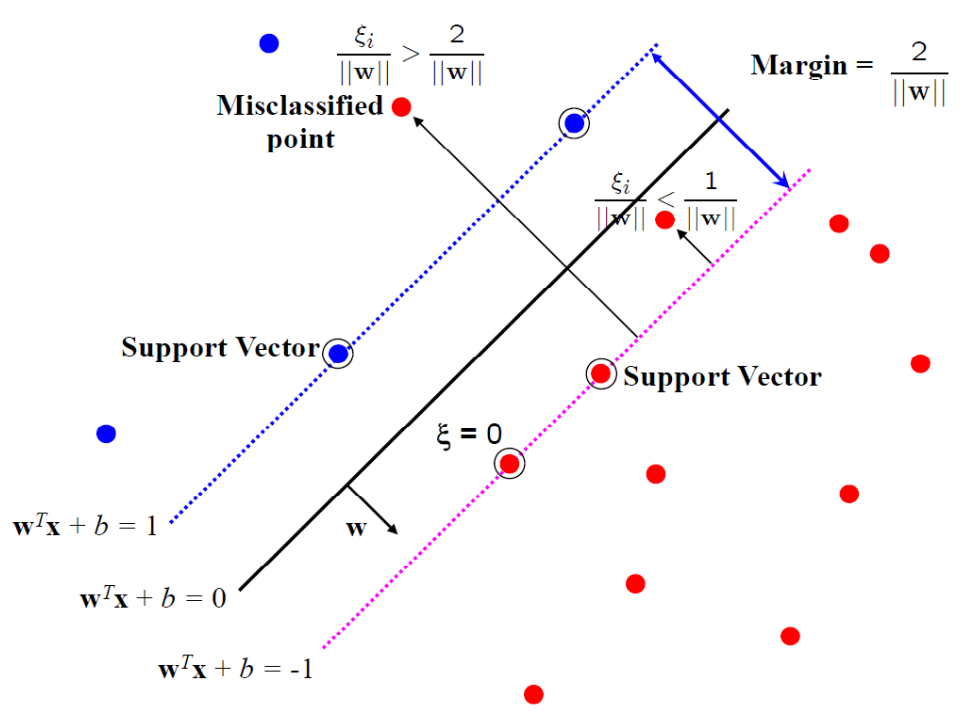

Loss function

SVMs use the “hinge” loss (an approximation of the 0-1 loss)

For an intended output $t = \pm 1$ and a classifier score $y$, the hinge loss of the prediction $y$ is defined as

$$
\ell(y) = \max(0, 1 - t \cdot y)
$$

Note that $y$ should be the “raw” output of the classifier’s decision function, not the predicted class label. For instance, in linear SVMs, $y = w \cdot x + b$, where $(w, b)$ are the parameters of the hyperplane and $x$ is the input variable(s).
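The relation between the hinge loss and the 0-1 loss can be checked numerically. The sketch below is a minimal illustration: `hinge_loss` follows the definition $\max(0, 1 - t \cdot y)$, while `zero_one_loss` and the sample scores are assumptions made here (a score of exactly 0 is counted as an error).

```python
import numpy as np

def hinge_loss(t, y):
    """Hinge loss for target t in {-1, +1} and raw classifier score y."""
    return np.maximum(0.0, 1.0 - t * y)

def zero_one_loss(t, y):
    """0-1 loss on the raw score: 1 if the sign of y disagrees with t (or y == 0)."""
    return (t * y <= 0).astype(float)

# Hypothetical raw scores for a sample whose true label is t = +1.
scores = np.array([-2.0, -0.5, 0.0, 0.5, 1.0, 2.0])
t = 1.0

print(hinge_loss(t, scores))     # [3.  1.5 1.  0.5 0.  0. ]
print(zero_one_loss(t, scores))  # [1. 1. 1. 0. 0. 0.]
```

The hinge loss is never below the 0-1 loss, and it still penalizes correctly classified points whose score lies between 0 and 1, which is what pushes points out of the margin.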

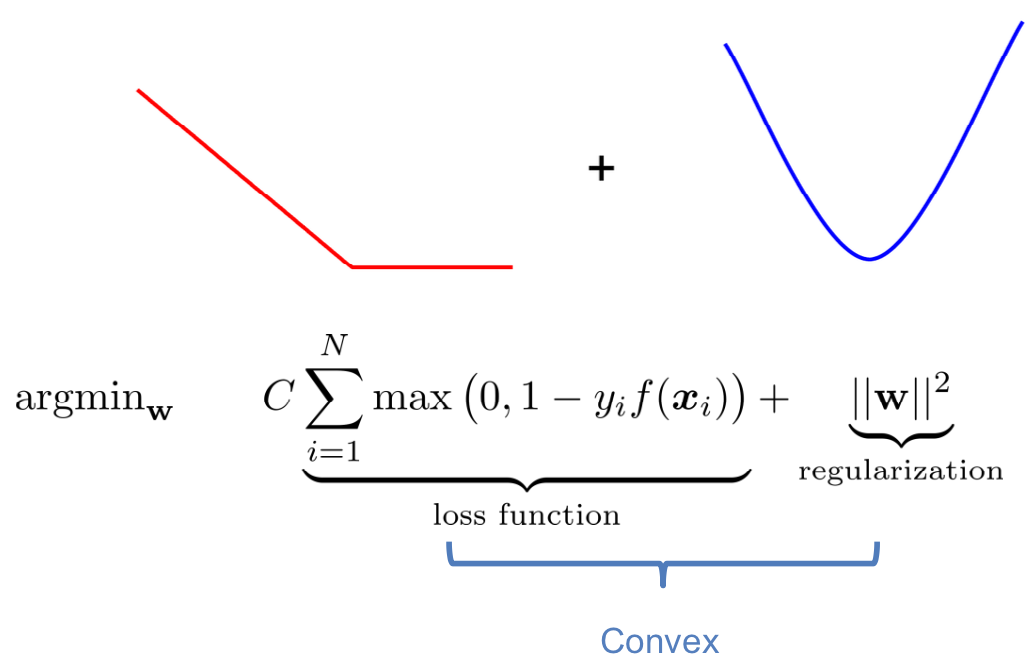

The loss function of SVM is convex, i.e.,

- Every local minimum is a global minimum

- We can find it with gradient descent

- However: the hinge loss is not differentiable at the kink $y_i f(x_i) = 1$! 🤪

Sub-gradients

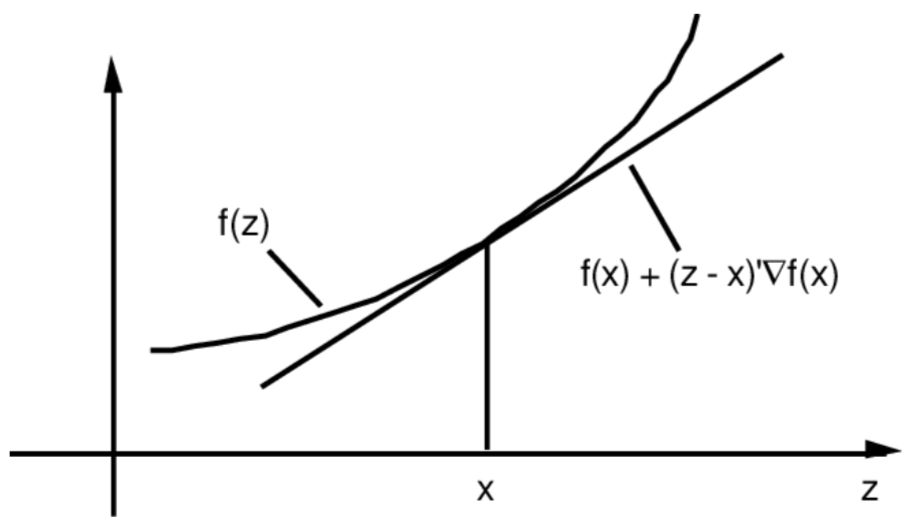

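For a convex, differentiable function $f$:

$$
f(z) \geq f(x) + \nabla f(x)^T (z - x)
$$

(Linear approximation underestimates function)

A subgradient of a convex function $f$ at point $x$ is any $g$ such that

$$
f(z) \geq f(x) + g^T (z - x) \quad \forall z
$$

- Always exists (even if $f$ is not differentiable)

- If $f$ is differentiable at $x$, then: $g = \nabla f(x)$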

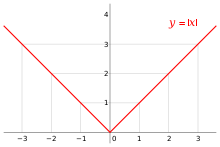

Example: $f(x) = |x|$

- $x \neq 0$: unique sub-gradient is $g = \operatorname{sign}(x)$

- $x = 0$: $g \in [-1, 1]$

Sub-gradient Method

Sub-gradient Descent

- Given convex $f$, not necessarily differentiable

- Initialize $x_0$

- Repeat: $x_{t+1} = x_t - \eta \, g_t$, where $g_t$ is any sub-gradient of $f$ at point $x_t$

‼️ Notes:

- Sub-gradient steps do not necessarily decrease $f$ at every step (no real descent method)

- Need to keep track of the best iterate $x^*$
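The method and both notes can be tried on a one-dimensional toy problem. The sketch below minimizes $f(x) = |x|$, chosen here purely as an illustrative non-differentiable convex function; the function name `subgradient_descent`, the step-size schedule $1/k$, and the starting point are assumptions.

```python
import numpy as np

def subgradient_descent(f, subgrad, x0, step, n_iter):
    """Run the sub-gradient method and return the best iterate seen so far."""
    x = float(x0)
    best_x, best_f = x, f(x)
    history = [best_f]
    for k in range(1, n_iter + 1):
        x = x - step(k) * subgrad(x)   # move against any sub-gradient
        fx = f(x)
        history.append(fx)
        if fx < best_f:                # the step may increase f, so remember the best
            best_x, best_f = x, fx
    return best_x, best_f, history

# np.sign returns 0 at x = 0, which is a valid sub-gradient of |x| there.
best_x, best_f, history = subgradient_descent(
    f=abs, subgrad=np.sign, x0=2.5, step=lambda k: 1.0 / k, n_iter=50
)
print(best_x, best_f)
```

With this schedule the iterates overshoot the minimum at 0 and oscillate around it, so `history` is not monotonically decreasing; returning the best iterate rather than the last one is what makes the result reliable.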

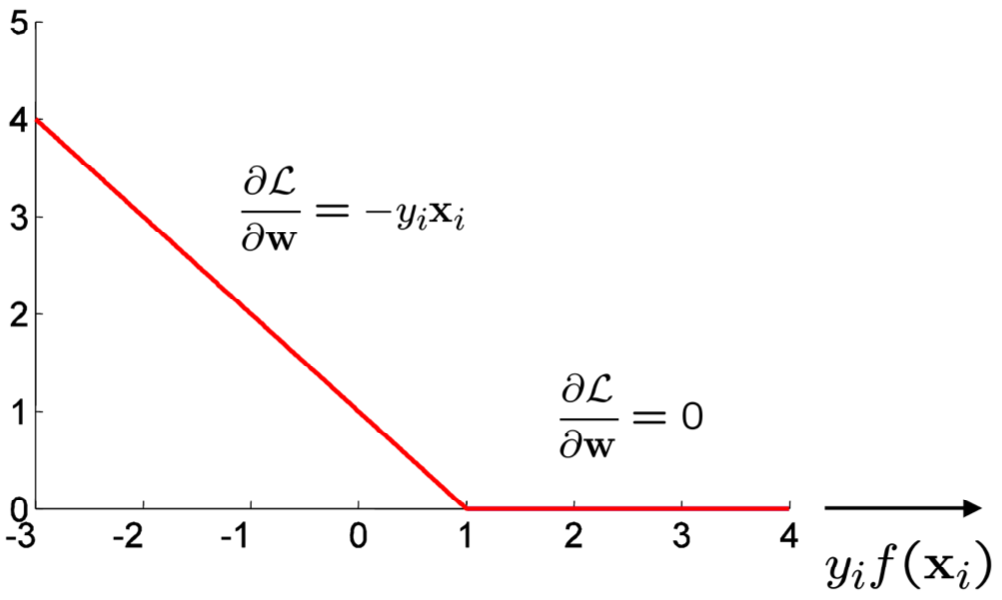

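Sub-gradients for hinge loss

$$
\ell(w) = \max(0, 1 - y_i f(x_i)), \quad f(x_i) = w^T x_i + b
$$

$$
\frac{\partial \ell}{\partial w} = \begin{cases} -y_i x_i & \text{if } y_i f(x_i) < 1 \\ 0 & \text{otherwise} \end{cases}
$$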

Sub-gradient descent for SVMs

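Recall the unconstrained optimization for SVMs:

$$
\underset{w}{\arg\min} \; \|w\|^2 + C \sum_i \max(0, 1 - y_i f(x_i))
$$

At each iteration, pick a random training sample $(x_i, y_i)$

If $y_i f(x_i) < 1$:

$$
w_{t+1} = w_t - \eta \, (2 w_t - C y_i x_i)
$$

Otherwise:

$$
w_{t+1} = w_t - 2 \eta \, w_t
$$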
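The per-sample update can be sketched in NumPy as follows. This is an illustration under stated assumptions, not a reference implementation: the objective is taken to be $\|w\|^2 + C \sum_i \max(0, 1 - y_i f(x_i))$, so the step is $w - \eta (2w - C y_i x_i)$ when the sample violates the margin and $w - 2 \eta w$ otherwise; the bias is folded into $w$ by appending a constant feature (which also regularizes it); the function name `svm_sgd`, the hyperparameter defaults, and the toy data are hypothetical.

```python
import numpy as np

def svm_sgd(X, y, C=1.0, eta=0.01, n_iter=2000, seed=0):
    """Stochastic sub-gradient descent for a linear soft-margin SVM (sketch)."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        i = rng.integers(len(y))                       # pick a random training sample
        if y[i] * (X[i] @ w) < 1:                      # margin violated: hinge term is active
            w = w - eta * (2 * w - C * y[i] * X[i])
        else:                                          # only the regularizer contributes
            w = w - eta * 2 * w
    return w

# Hypothetical, linearly separable toy data.
X = np.array([[2.0, 2.0], [3.0, 3.0], [2.0, 3.0],
              [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])

Xb = np.hstack([X, np.ones((len(X), 1))])  # constant column stands in for the bias b
w = svm_sgd(Xb, y)
print(w)
print(np.sign(Xb @ w))                     # predicted labels
```

A fixed step size is used for brevity; a decaying step size together with tracking of the best iterate would be closer to the sub-gradient method described earlier.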

Applications of SVMs

- Pedestrian Tracking

- text (and hypertext) categorization

- image classification

- bioinformatics (Protein classification, cancer classification)

- hand-written character recognition

Yet, in the last 5-8 years, neural networks have outperformed SVMs on most applications.🤪☹️😭