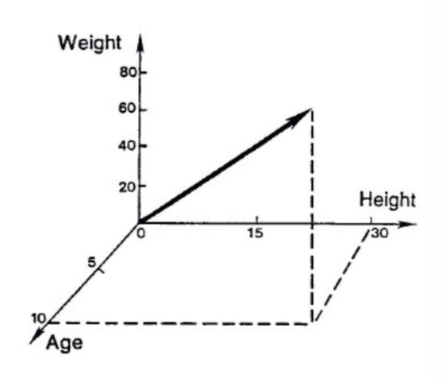

# Math Basics

## Linear Algebra

### Vectors

**Vector**: a multi-dimensional quantity

- Each dimension contains different information (e.g., age, weight, height, …)
- Vectors are represented as bold symbols
- A vector $\boldsymbol{x}$ is a column vector:

$$
\boldsymbol{x}=\left[\begin{array}{l} 1 \\ 2 \\ 4 \end{array}\right]
$$

- A transposed vector $\boldsymbol{x}^T$ is a row vector:

$$
\boldsymbol{x}^{T}=\left[\begin{array}{lll} 1 & 2 & 4 \end{array}\right]
$$

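#### Vector Operations

**Inner product** of $\boldsymbol{v}=[1, 2, 4]^T$ and $\boldsymbol{w}=[2, 4, 8]^T$: multiply corresponding entries and sum the results

$$
\langle \boldsymbol{v}, \boldsymbol{w}\rangle = 1 \cdot 2+2 \cdot 4+4 \cdot 8=42
$$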
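A minimal plain-Python sketch of the inner product, assuming vectors are stored as Python lists. The function name `inner_product` is our own choice for illustration, not taken from any library.

```python
def inner_product(v, w):
    """<v, w>: multiply corresponding entries and sum them."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same dimension")
    return sum(v_i * w_i for v_i, w_i in zip(v, w))


v = [1, 2, 4]
w = [2, 4, 8]
print(inner_product(v, w))  # 42
```

Two vectors whose inner product is 0 (e.g. `[1, 0]` and `[0, 1]`) are orthogonal.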

**Length of a vector**: the square root of the inner product of the vector with itself

$$
\|\boldsymbol{v}\|=\langle\boldsymbol{v}, \boldsymbol{v}\rangle^{\frac{1}{2}}=\left(1^{2}+2^{2}+4^{2}\right)^{\frac{1}{2}}=\sqrt{21}
$$
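The length follows directly from the inner product. This sketch again assumes list-based vectors; `inner_product` and `length` are hypothetical helper names.

```python
import math


def inner_product(v, w):
    # Sum of element-wise products, as in the inner product definition
    return sum(v_i * w_i for v_i, w_i in zip(v, w))


def length(v):
    # ||v|| = <v, v>^(1/2)
    return math.sqrt(inner_product(v, v))


v = [1, 2, 4]
print(math.isclose(length(v), math.sqrt(21)))  # True
print(length([3, 4]))                          # 5.0
```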

### Matrices

**Matrix**: a rectangular array of numbers arranged in rows and columns

- Denoted with bold upper-case letters

$$
\boldsymbol{X}=\left[\begin{array}{ll} 1 & 3 \\ 2 & 3 \\ 4 & 7 \end{array}\right]
$$

- Dimension: (number of rows) × (number of columns), e.g. $\boldsymbol{X} \in \mathbb{R}^{3 \times 2}$

Vectors are special cases of matrices:

$$
\boldsymbol{x}^{T}=\underbrace{\left[\begin{array}{ccc} 1 & 2 & 4 \end{array}\right]}_{1 \times 3 \text{ matrix}}
$$
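To make the "rows × columns" convention concrete, here is a sketch that stores a matrix as a list of rows (an assumption; NumPy arrays expose the same information through their `.shape` attribute). `shape` is a hypothetical helper name. A row vector is then a 1 × 3 matrix and a column vector a 3 × 1 matrix.

```python
def shape(matrix):
    """Return (#rows, #columns) of a matrix stored as a list of rows."""
    n_rows = len(matrix)
    n_cols = len(matrix[0]) if n_rows else 0
    # A matrix is rectangular: every row needs the same number of entries
    if any(len(row) != n_cols for row in matrix):
        raise ValueError("rows have different lengths; not a matrix")
    return n_rows, n_cols


X = [[1, 3],
     [2, 3],
     [4, 7]]
x_T = [[1, 2, 4]]      # row vector x^T as a 1 x 3 matrix
x = [[1], [2], [4]]    # column vector x as a 3 x 1 matrix

print(shape(X))    # (3, 2)
print(shape(x_T))  # (1, 3)
print(shape(x))    # (3, 1)
```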

#### Matrices in ML

#### Matrix Operations

**Multiplication with a scalar**

$$
3 \boldsymbol{M}=3\left[\begin{array}{lll} 3 & 4 & 5 \\ 1 & 0 & 1 \end{array}\right]=\left[\begin{array}{ccc} 9 & 12 & 15 \\ 3 & 0 & 3 \end{array}\right]
$$
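Scalar multiplication touches every entry once. A sketch with list-of-rows matrices; `scale` is a name chosen here for illustration.

```python
def scale(c, M):
    # Multiply every entry m_ij of M by the scalar c
    return [[c * m_ij for m_ij in row] for row in M]


M = [[3, 4, 5],
     [1, 0, 1]]
print(scale(3, M))  # [[9, 12, 15], [3, 0, 3]]
```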

**Addition of matrices**

$$
\boldsymbol{M}+\boldsymbol{N}=\left[\begin{array}{lll} 3 & 4 & 5 \\ 1 & 0 & 1 \end{array}\right]+\left[\begin{array}{lll} 1 & 2 & 1 \\ 3 & 1 & 1 \end{array}\right]=\left[\begin{array}{lll} 4 & 6 & 6 \\ 4 & 1 & 2 \end{array}\right]
$$
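Addition is entry-by-entry, so both matrices must have the same dimensions. A list-of-rows sketch; `add` is a hypothetical helper name.

```python
def add(M, N):
    # Both matrices need identical dimensions for entry-wise addition
    if len(M) != len(N) or any(len(r) != len(s) for r, s in zip(M, N)):
        raise ValueError("matrices must have the same dimensions")
    return [[m_ij + n_ij for m_ij, n_ij in zip(r, s)] for r, s in zip(M, N)]


M = [[3, 4, 5],
     [1, 0, 1]]
N = [[1, 2, 1],
     [3, 1, 1]]
print(add(M, N))  # [[4, 6, 6], [4, 1, 2]]
```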

**Transpose**

$$
\boldsymbol{M}=\left[\begin{array}{lll} 3 & 4 & 5 \\ 1 & 0 & 1 \end{array}\right], \quad \boldsymbol{M}^{T}=\left[\begin{array}{ll} 3 & 1 \\ 4 & 0 \\ 5 & 1 \end{array}\right]
$$
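Transposing turns row $i$ into column $i$. In plain Python (list-of-rows assumption, hypothetical `transpose` helper) this is a one-liner with `zip(*M)`.

```python
def transpose(M):
    # zip(*M) groups the i-th entries of all rows, i.e. the i-th column
    return [list(col) for col in zip(*M)]


M = [[3, 4, 5],
     [1, 0, 1]]
print(transpose(M))             # [[3, 1], [4, 0], [5, 1]]
print(transpose(transpose(M)))  # [[3, 4, 5], [1, 0, 1]], transposing twice gives M back
```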

**Matrix-vector product** (the vector must have the same dimensionality as the number of columns of the matrix), where $\boldsymbol{w}_i$ denotes the $i$-th column of $\boldsymbol{W}$:

$$
\underbrace{\left[\boldsymbol{w}_{1}, \ldots, \boldsymbol{w}_{n}\right]}_{\boldsymbol{W}} \underbrace{\left[\begin{array}{c} v_{1} \\ \vdots \\ v_{n} \end{array}\right]}_{\boldsymbol{v}}=\underbrace{v_{1} \boldsymbol{w}_{1}+\cdots+v_{n} \boldsymbol{w}_{n}}_{\boldsymbol{u}}
$$

For example:

$$
\boldsymbol{u}=\boldsymbol{W} \boldsymbol{v}=\left[\begin{array}{ccc} 3 & 4 & 5 \\ 1 & 0 & 1 \end{array}\right]\left[\begin{array}{c} 1 \\ 0 \\ 2 \end{array}\right]=\left[\begin{array}{c} 3 \cdot 1+4 \cdot 0+5 \cdot 2 \\ 1 \cdot 1+0 \cdot 0+1 \cdot 2 \end{array}\right]=\left[\begin{array}{c} 13 \\ 3 \end{array}\right]
$$
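The worked example computes each entry of $\boldsymbol{u}$ as the inner product of one row of $\boldsymbol{W}$ with $\boldsymbol{v}$. A sketch of that row-wise view, assuming $\boldsymbol{W}$ is a list of rows; `mat_vec` is a hypothetical name.

```python
def mat_vec(W, v):
    """Compute u = W v, with W stored as a list of rows."""
    if len(v) != len(W[0]):
        raise ValueError("len(v) must equal the number of columns of W")
    # Entry i of u is the inner product of row i of W with v
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]


W = [[3, 4, 5],
     [1, 0, 1]]
v = [1, 0, 2]
print(mat_vec(W, v))  # [13, 3]
```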

💡 Think of it as summing over the columns $\boldsymbol{w}_i$ of $\boldsymbol{W}$, each weighted by $v_i$:

$$
\boldsymbol{u}=v_{1} \boldsymbol{w}_{1}+\cdots+v_{n} \boldsymbol{w}_{n}=1\left[\begin{array}{l} 3 \\ 1 \end{array}\right]+0\left[\begin{array}{l} 4 \\ 0 \end{array}\right]+2\left[\begin{array}{l} 5 \\ 1 \end{array}\right]=\left[\begin{array}{c} 13 \\ 3 \end{array}\right]
$$
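The column view gives the same result as the row-wise computation. This sketch (list-of-rows storage assumed, `mat_vec_columns` is a hypothetical name) accumulates $v_j \boldsymbol{w}_j$ one column at a time.

```python
def mat_vec_columns(W, v):
    """u = v_1 w_1 + ... + v_n w_n, where w_j is column j of W."""
    n_rows, n_cols = len(W), len(W[0])
    if len(v) != n_cols:
        raise ValueError("len(v) must equal the number of columns of W")
    u = [0] * n_rows
    for j in range(n_cols):
        column_j = [W[i][j] for i in range(n_rows)]
        # Add v_j times column j to the running sum
        for i in range(n_rows):
            u[i] += v[j] * column_j[i]
    return u


W = [[3, 4, 5],
     [1, 0, 1]]
v = [1, 0, 2]
print(mat_vec_columns(W, v))  # [13, 3]
```

The row view (inner products) and the column view (weighted sum of columns) perform the same multiplications, only grouped differently.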

#### Important Special Cases

## Calculus

> "The derivative of a function of a real variable measures the sensitivity to change of a quantity (a function value or dependent variable) which is determined by another quantity (the independent variable)"

| | Scalar | Vector |
|---|---|---|
| Function | $f(x)$ | $f(\boldsymbol{x})$ |
| Derivative | $\frac{\partial f(x)}{\partial x}=g$ | $\frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}}=\left[\frac{\partial f(\boldsymbol{x})}{\partial x_{1}}, \ldots, \frac{\partial f(\boldsymbol{x})}{\partial x_{d}}\right]^{T} =: \nabla f(\boldsymbol{x})$, the gradient of $f$ with respect to $\boldsymbol{x}$ |
| Min/Max (necessary condition) | $\frac{\partial f(x)}{\partial x}=0$ | $\frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}}=[0, \ldots, 0]^{T}=\mathbf{0}$ |
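One way to sanity-check a gradient is to approximate each partial derivative numerically. The sketch below uses central finite differences, a technique we introduce here only for checking values; the step size `h` and the example function $f(\boldsymbol{x})=(x_1-1)^2+(x_2+2)^2$ are our own choices. Its analytic gradient is $[2(x_1-1),\ 2(x_2+2)]^T$, which is $\mathbf{0}$ at the minimum $(1,-2)$.

```python
def numerical_gradient(f, x, h=1e-6):
    """Approximate [df/dx_1, ..., df/dx_d]^T with central differences."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        # (f(x + h e_i) - f(x - h e_i)) / (2h) approximates df/dx_i
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


def f(x):
    # Example function: f(x) = (x_1 - 1)^2 + (x_2 + 2)^2, minimum at (1, -2)
    return (x[0] - 1) ** 2 + (x[1] + 2) ** 2


print(numerical_gradient(f, [0.0, 0.0]))   # approximately [-2.0, 4.0]
print(numerical_gradient(f, [1.0, -2.0]))  # approximately [0.0, 0.0]
```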

### Matrix Calculus

| | Scalar | Vector |
|---|---|---|
| Linear | $\frac{\partial a x}{\partial x}=a$ | $\nabla_{\boldsymbol{x}} \boldsymbol{A} \boldsymbol{x}=\boldsymbol{A}^{T}$ |
| Quadratic | $\frac{\partial x^{2}}{\partial x}=2 x$ | $\begin{array}{l} \nabla_{\boldsymbol{x}} \boldsymbol{x}^{T} \boldsymbol{x}=2 \boldsymbol{x} \\ \nabla_{\boldsymbol{x}} \boldsymbol{x}^{T} \boldsymbol{A} \boldsymbol{x}=\left(\boldsymbol{A}+\boldsymbol{A}^{T}\right) \boldsymbol{x}, \text{ which is } 2 \boldsymbol{A} \boldsymbol{x} \text{ if } \boldsymbol{A}=\boldsymbol{A}^{T} \end{array}$ |

The vector column uses the denominator-layout convention, in which gradients are column vectors (matching $\nabla f(\boldsymbol{x})$ above).
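The quadratic rules can be checked numerically. This sketch reuses central finite differences (our own checking technique, not part of the notes) with a deliberately non-symmetric $\boldsymbol{A}$ chosen for illustration, showing that the gradient of $\boldsymbol{x}^T\boldsymbol{A}\boldsymbol{x}$ is $(\boldsymbol{A}+\boldsymbol{A}^T)\boldsymbol{x}$ and that $2\boldsymbol{A}\boldsymbol{x}$ only applies when $\boldsymbol{A}$ is symmetric. All helper names are hypothetical.

```python
def numerical_gradient(f, x, h=1e-6):
    """Central-difference approximation of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


def mat_vec(A, x):
    # Row-wise matrix-vector product; A is a list of rows
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def quadratic_form(A, x):
    # x^T A x = <x, A x>
    return sum(x_i * y_i for x_i, y_i in zip(x, mat_vec(A, x)))


A = [[1.0, 2.0],
     [0.0, 3.0]]   # deliberately NOT symmetric
x = [1.0, -1.0]

numeric = numerical_gradient(lambda z: quadratic_form(A, z), x)

# Analytic gradient (A + A^T) x
A_plus_AT = [[A[i][j] + A[j][i] for j in range(2)] for i in range(2)]
analytic = mat_vec(A_plus_AT, x)

# 2 A x, which is the gradient only when A is symmetric
two_A_x = [2 * y for y in mat_vec(A, x)]

print(numeric)   # approximately [0.0, -4.0]
print(analytic)  # [0.0, -4.0]
print(two_A_x)   # [-2.0, -6.0], differs because A is not symmetric

# The x^T x rule: the gradient should be 2x = [2.0, -2.0]
print(numerical_gradient(lambda z: sum(z_i * z_i for z_i in z), x))
```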