Cross Validation

| How it works? | Illustration | |

|---|---|---|

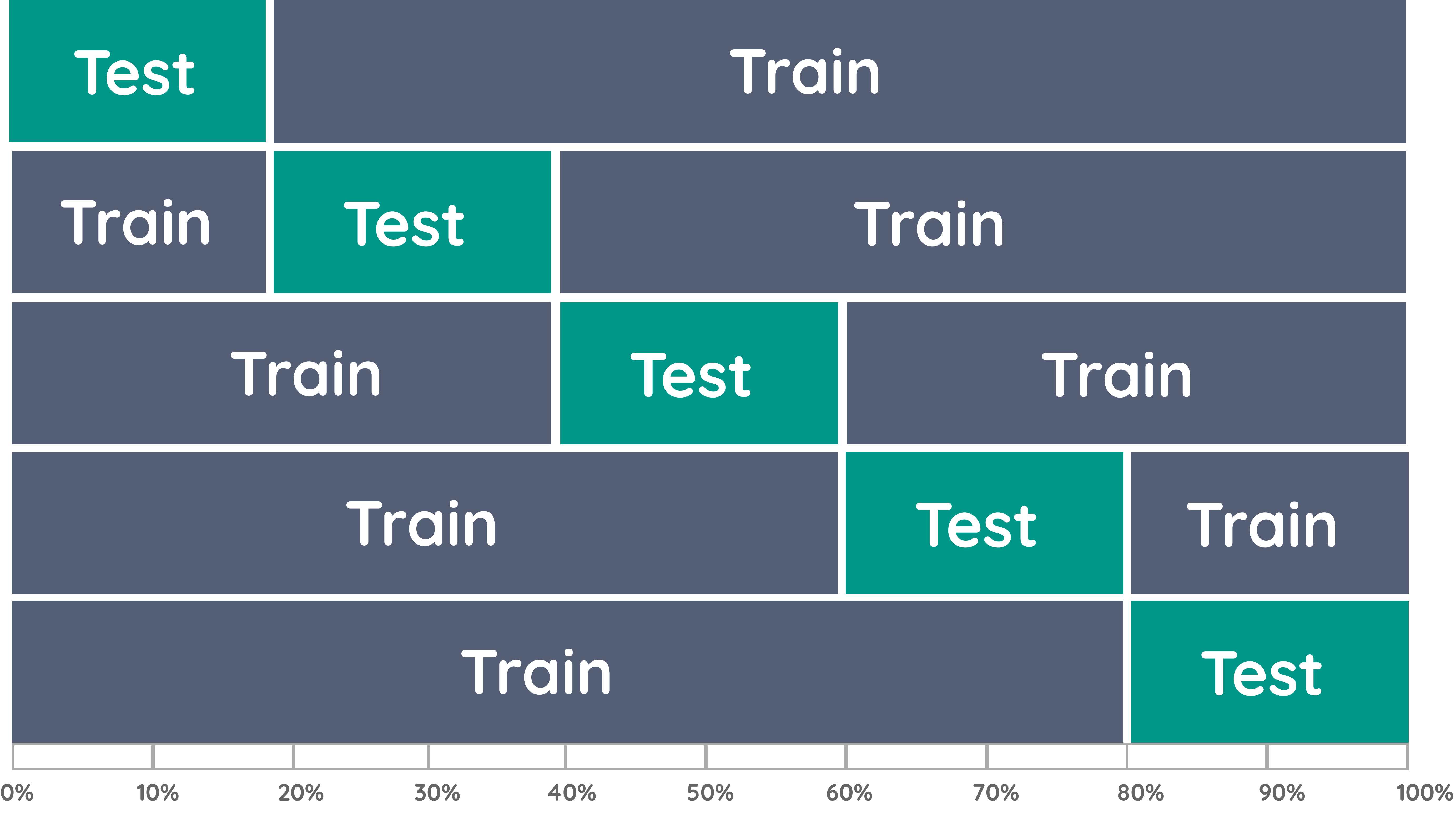

| K-fold | 1. Create -fold partition of the dataset 2. Estimate hold-out predictors using partition as validation and partition as training set |  |

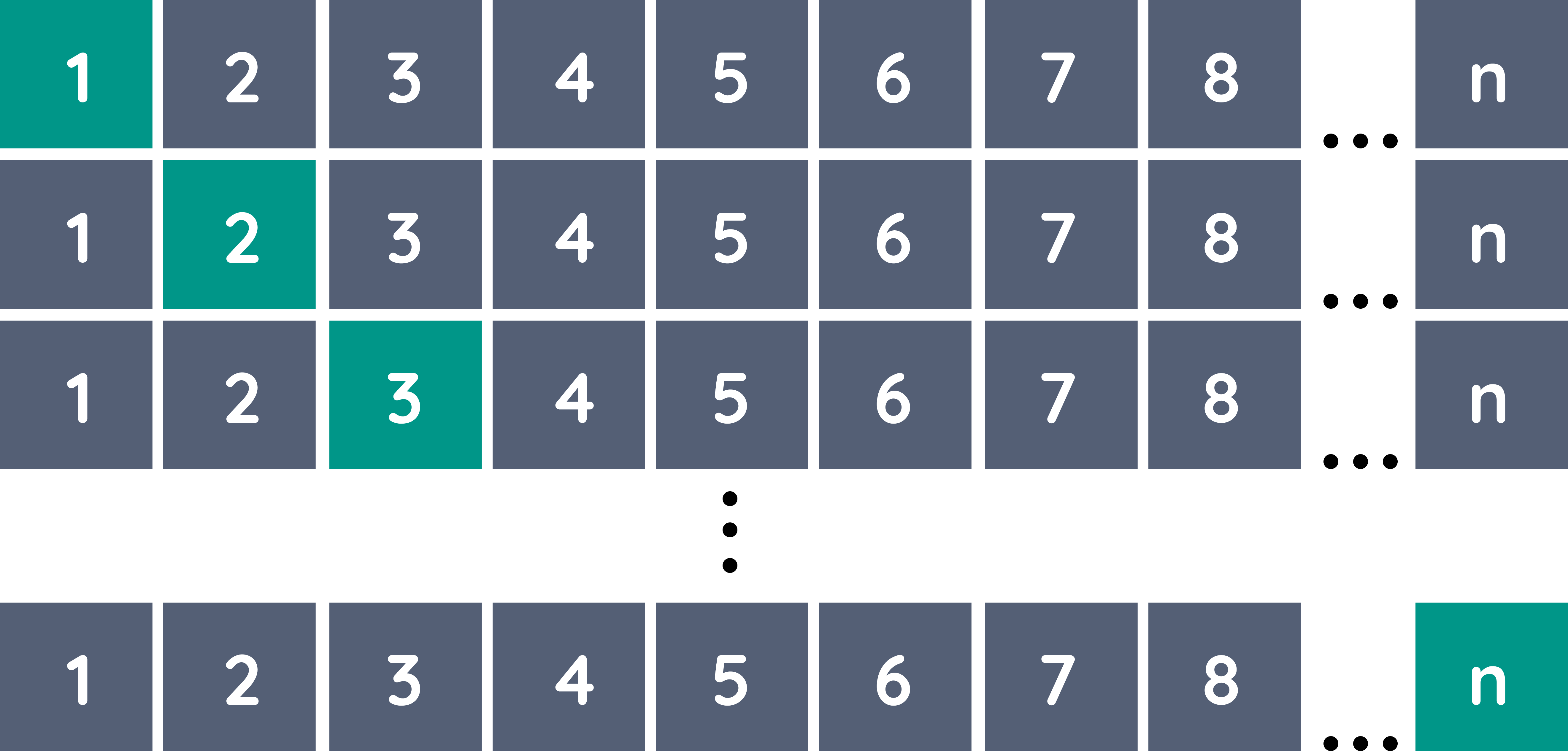

| Leave-One-Out (LOO) | (Special case with ) Consequently estimate hold-out predictors using partition as validation and partition as training set |  |

| Random sub-sampling | 1. Randomly sample a fraction of data points for validation 2. Train on remaining points and validate, repeat times |
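A minimal sketch of the K-fold procedure in plain Python. The helper names `kfold_splits`, `fit_mean` and `cv_error` are hypothetical, the learner is a toy that always predicts the training mean, and the loss is squared error; all of these are assumptions for illustration only. Folds are contiguous here, so ordered data would normally be shuffled first.

```python
from statistics import mean

def kfold_splits(n, k):
    """Split indices 0..n-1 into k contiguous folds.

    Returns k (train, validation) pairs: fold j is the validation set and
    the remaining k-1 folds together form the training set.
    """
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    # Spread the remainder n % k so fold sizes differ by at most one.
    sizes = [n // k + (1 if j < n % k else 0) for j in range(k)]
    splits, start = [], 0
    for size in sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        splits.append((train, val))
        start += size
    return splits

def fit_mean(xs, ys):
    """Hypothetical toy learner: always predicts the training mean of y."""
    m = mean(ys)
    return lambda x: m

def cv_error(xs, ys, k, fit=fit_mean):
    """Average validation squared error of the k hold-out predictors."""
    fold_errors = []
    for train, val in kfold_splits(len(ys), k):
        # Fit one hold-out predictor on the k-1 training folds.
        predictor = fit([xs[i] for i in train], [ys[i] for i in train])
        fold_errors.append(mean((predictor(xs[i]) - ys[i]) ** 2 for i in val))
    return mean(fold_errors)

print(cv_error([0, 1, 2, 3], [1.0, 2.0, 3.0, 4.0], k=2))  # 4.25
```

Calling `cv_error` with `k=len(ys)` puts one point in each fold, which is exactly the leave-one-out special case from the table.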
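Leave-one-out can also be written directly as a loop over the $n$ data points. This sketch assumes a hypothetical function `loo_error`, a toy predictor that returns the mean of its training targets, and squared error as the loss; it fits $n$ hold-out predictors, each on the other $n-1$ points.

```python
from statistics import mean

def loo_error(ys):
    """Leave-one-out squared error of a toy constant (mean) predictor.

    For each i, the hold-out predictor is fitted on the n-1 points other
    than i and evaluated on point i alone, giving n hold-out predictors.
    """
    n = len(ys)
    if n < 2:
        raise ValueError("LOO needs at least two data points")
    errors = []
    for i in range(n):
        train = list(ys[:i]) + list(ys[i + 1:])  # the other n-1 points
        prediction = mean(train)                 # toy predictor: training mean
        errors.append((prediction - ys[i]) ** 2)
    return mean(errors)

print(loo_error([1.0, 2.0, 3.0, 4.0]))  # 20/9, about 2.2222
```

Because every point is held out exactly once, LOO needs $n$ model fits, which is why it is usually reserved for small datasets or models that are cheap to refit.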
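Random sub-sampling differs from K-fold in that each repetition draws a fresh validation set. The sketch below assumes hypothetical helpers `random_subsample_splits` and `subsampling_error`, a validation fraction `frac`, a fixed `seed` for reproducibility, and the same toy mean predictor with squared error.

```python
import random
from statistics import mean

def random_subsample_splits(n, frac, repeats, seed=0):
    """Draw `repeats` independent (train, validation) index splits.

    Each repetition samples about frac * n indices without replacement for
    validation; the remaining indices form the training set.
    """
    if n < 2:
        raise ValueError("need at least two data points")
    if not 0 < frac < 1:
        raise ValueError("frac must lie strictly between 0 and 1")
    # Keep at least one validation point and at least one training point.
    n_val = min(n - 1, max(1, round(frac * n)))
    rng = random.Random(seed)
    splits = []
    for _ in range(repeats):
        val = sorted(rng.sample(range(n), n_val))
        val_set = set(val)
        train = [i for i in range(n) if i not in val_set]
        splits.append((train, val))
    return splits

def subsampling_error(ys, frac=0.25, repeats=10, seed=0):
    """Average validation squared error of a toy mean predictor."""
    errors = []
    for train, val in random_subsample_splits(len(ys), frac, repeats, seed):
        prediction = mean(ys[i] for i in train)  # train on remaining points
        errors.append(mean((prediction - ys[i]) ** 2 for i in val))
    return mean(errors)
```

Unlike K-fold, validation sets from different repetitions may overlap and some points may never be used for validation; in exchange, the number of repetitions is decoupled from the size of the validation set.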