Natural/Spoken Language Understanding

Definition

Natural language understanding

- Representing the semantics of natural language

- Possible view: Translation from natural language to representation of meaning

Difficulties

- Ambiguities

Lexical

Syntax

Referential

- Vagueness

- E.g., “I had a late lunch.”

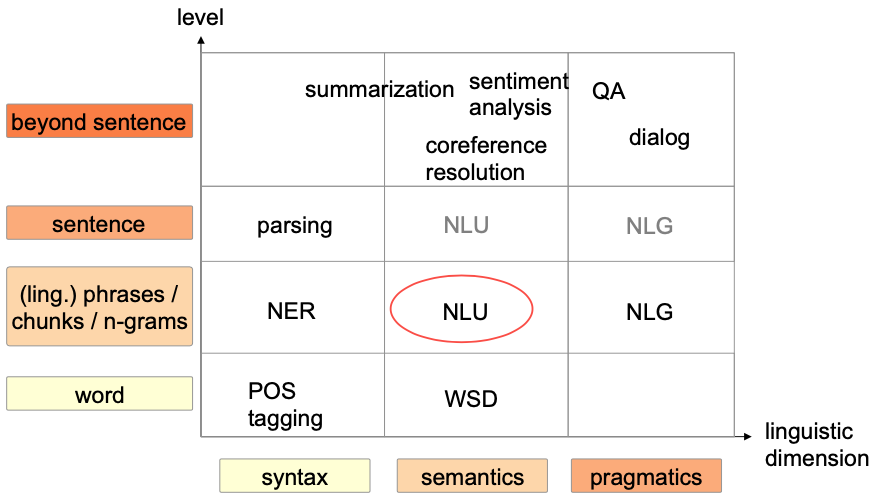

- Dimensions

- Depth: Shallow vs Deep

- Domain: Narrow vs Open

Examples

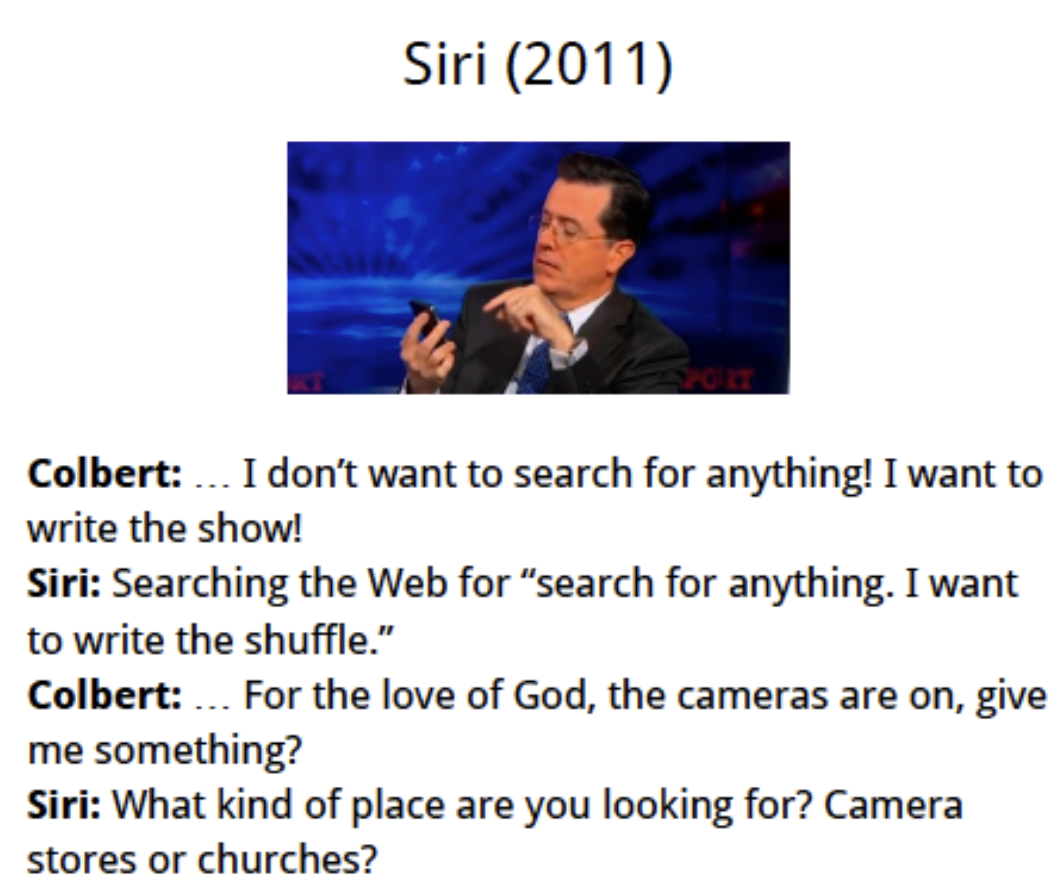

Siri (2011)

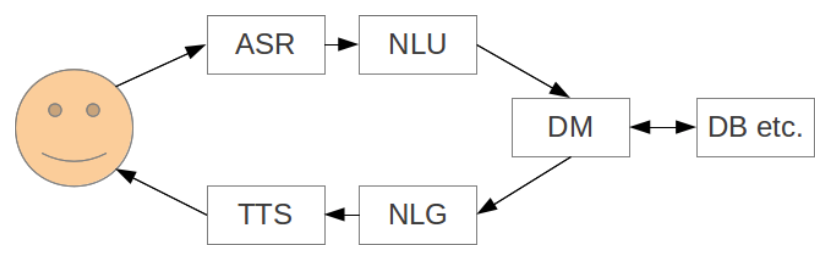

Dialog Modeling

Dialog system / Conversational agent

Computer system that converses with a human

Coherent structure

Different modalities:

- Text, speech, graphics, haptics, gestures

Components

Input recognition

Different modalities

- Automatic speech recognition (ASR)

- Gesture recognition

Transformation

Input modality (e.g. speech) → text

May introduce first errors

High influence on the performance of a dialog system

Natural language understanding (NLU)

Semantic interpretation of written text

Transformation from natural language to semantic representation

Representations:

Deep vs Shallow

Domain-dependent vs. domain-independent

Dialog manager (DM)

- Manage flow of conversation

- Input: Semantic representation of the input

- Output: Semantic representation of the output

- Utilize additional knowledge

User information

Dialog History

Task-specific information

Natural language generation (NLG)

Generate natural language from semantic representation

- Input: Semantic output representation of the dialog manager

- Output: Natural language text for the user

Output rendering

Generate correct output

- e.g. Text-to-Speech (TTS) for Spoken Dialog Systems
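The component chain above (input recognition, NLU, DM, NLG, output rendering) can be sketched as a pipeline of functions. This is only a toy illustration: all function names, the dictionary-based frame format, and the canned "sunny" answer are hypothetical, and the ASR and TTS steps are stubbed with plain strings.

```python
from dataclasses import dataclass, field


@dataclass
class DialogState:
    """Additional knowledge available to the dialog manager."""
    history: list = field(default_factory=list)  # past semantic input frames


def recognize(audio: str) -> str:
    # Stub for ASR: the "audio" is already text, we only normalize it.
    return audio.lower().strip()


def understand(text: str) -> dict:
    # Toy NLU: map text to a shallow, domain-dependent semantic frame.
    if "weather" in text:
        return {"intent": "ask_weather"}
    if "hello" in text or "hi" in text.split():
        return {"intent": "greet"}
    return {"intent": "unknown"}


def manage(frame: dict, state: DialogState) -> dict:
    # Dialog manager: semantic input -> semantic output, using the history.
    state.history.append(frame)
    if frame["intent"] == "greet":
        return {"act": "greet"}
    if frame["intent"] == "ask_weather":
        # "sunny" is a placeholder, not real task-specific data.
        return {"act": "inform", "topic": "weather", "value": "sunny"}
    return {"act": "request_repeat"}


def generate(act: dict) -> str:
    # Template-based NLG: semantic output -> natural language text.
    if act["act"] == "greet":
        return "Hello! How can I help?"
    if act["act"] == "inform":
        return f"The {act['topic']} is {act['value']}."
    return "Sorry, could you repeat that?"


def render(text: str) -> str:
    # Stub for output rendering (e.g. TTS in a spoken dialog system).
    return text


def respond(audio: str, state: DialogState) -> str:
    return render(generate(manage(understand(recognize(audio)), state)))
```

Calling `respond("What is the weather?", DialogState())` walks one user turn through all five stages; an error made in `recognize` would propagate to every later stage, which is why input recognition has such a high influence on overall performance.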

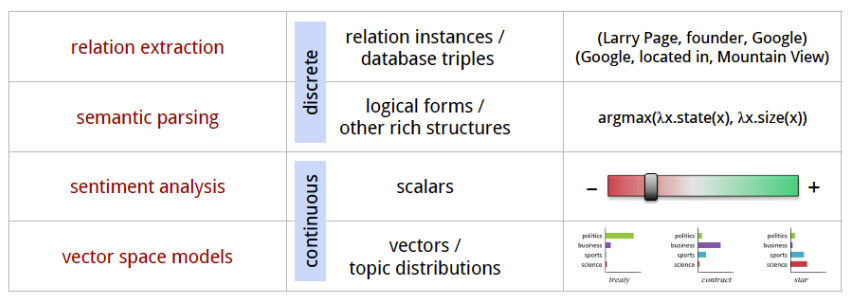

Natural Language Understanding

Approaches

Output representation

- Relation instances

- (Larry Page, founder, Google)

- Logical forms

- Love(Mary, John)

- Scalar

- Positive/Negative 0.9

- Vector

- Hidden representation/ Word embeddings
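To make the four output representations concrete, the sketch below writes each one as a small Python value. The class names are hypothetical, and the embedding numbers are made up purely for illustration.

```python
from typing import NamedTuple


class Relation(NamedTuple):
    """Relation instance: (subject, relation, object)."""
    subject: str
    predicate: str
    obj: str


class LogicalForm(NamedTuple):
    """Logical form: a predicate applied to arguments."""
    predicate: str
    args: tuple

    def __str__(self):
        return f"{self.predicate}({', '.join(self.args)})"


# Relation instance
relation = Relation("Larry Page", "founder", "Google")

# Logical form
logical_form = LogicalForm("Love", ("Mary", "John"))

# Scalar: a class label with a score
sentiment = ("Positive", 0.9)

# Vector: a hidden representation (values are invented, not from a real model)
embedding = [0.12, -0.40, 0.88]
```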

Algorithms

- Rule-based / Template

- Machine learning

- Conditional random fields

- Support Vector Machine

- Neural Networks / Deep learning

Semantic Parsing

Parse natural language sentence into semantic representation

Machine learning approaches are the most successful 👏

Most common approach:

- Shallow Semantic Parsing / Semantic Role Labeling

Most important resources:

PropBank

Proposition Bank (PropBank)

Labels for all sentences in the English Penn Treebank

Defines semantics based on the verbs of the sentence

Verbs: Each verb has one or more defined senses

Sense: Number of arguments important to this sense (often labeled only with numbers)

Arg0: Proto-Agent

Arg1: Proto-Patient

Arg2: mostly benefactive, instrument, attribute, or end state

Arg3: start point, benefactive, instrument, or attribute

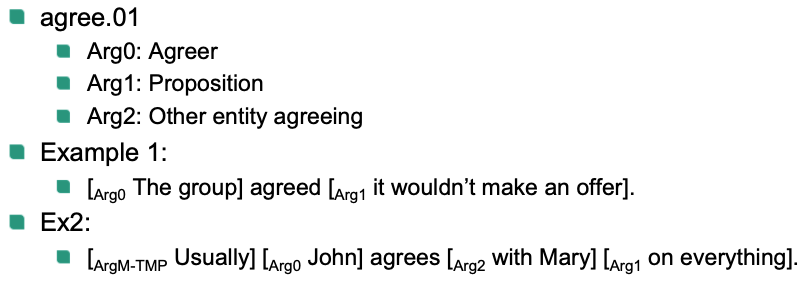

Example: “agree”

Example: “fall”
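A roleset for a verb sense can be thought of as a mapping from numbered arguments to sense-specific descriptions. The sketch below encodes the two example verbs this way. The role descriptions are recalled from the PropBank frame files as commonly quoted in textbooks and should be checked against the frame files themselves; the dictionary layout and the `describe` helper are hypothetical.

```python
# Numbered arguments per verb sense. Note that "fall.01" has no Arg0,
# and uses Arg4 for the end point.
ROLESETS = {
    "agree.01": {
        "Arg0": "agreer",
        "Arg1": "proposition",
        "Arg2": "other entity agreeing",
    },
    "fall.01": {
        "Arg1": "thing falling",
        "Arg2": "extent, amount fallen",
        "Arg3": "start point",
        "Arg4": "end point",
    },
}


def describe(roleset, labeled_spans):
    """Attach the sense-specific meaning to each (label, span) pair."""
    roles = ROLESETS[roleset]
    return [(span, label, roles.get(label, "modifier"))
            for label, span in labeled_spans]


# "[Arg1 Sales] fell [Arg4 to $25 million] [Arg3 from $27 million]."
annotation = describe("fall.01", [
    ("Arg1", "Sales"),
    ("Arg4", "to $25 million"),
    ("Arg3", "from $27 million"),
])
```

The same label (for example `Arg2`) means something different for each verb sense, which is why the numbers alone are only interpretable together with the roleset.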

PropBank ArgM

- TMP: when? (yesterday evening, now)
- LOC: where? (at the museum, in San Francisco)
- DIR: where to/from? (down, to Bangkok)
- MNR: how? (clearly, with much enthusiasm)
- PRP/CAU: why? (because …, in response to the ruling)
- REC: (themselves, each other)
- ADV: miscellaneous
- PRD: secondary predication (… ate the meat raw)

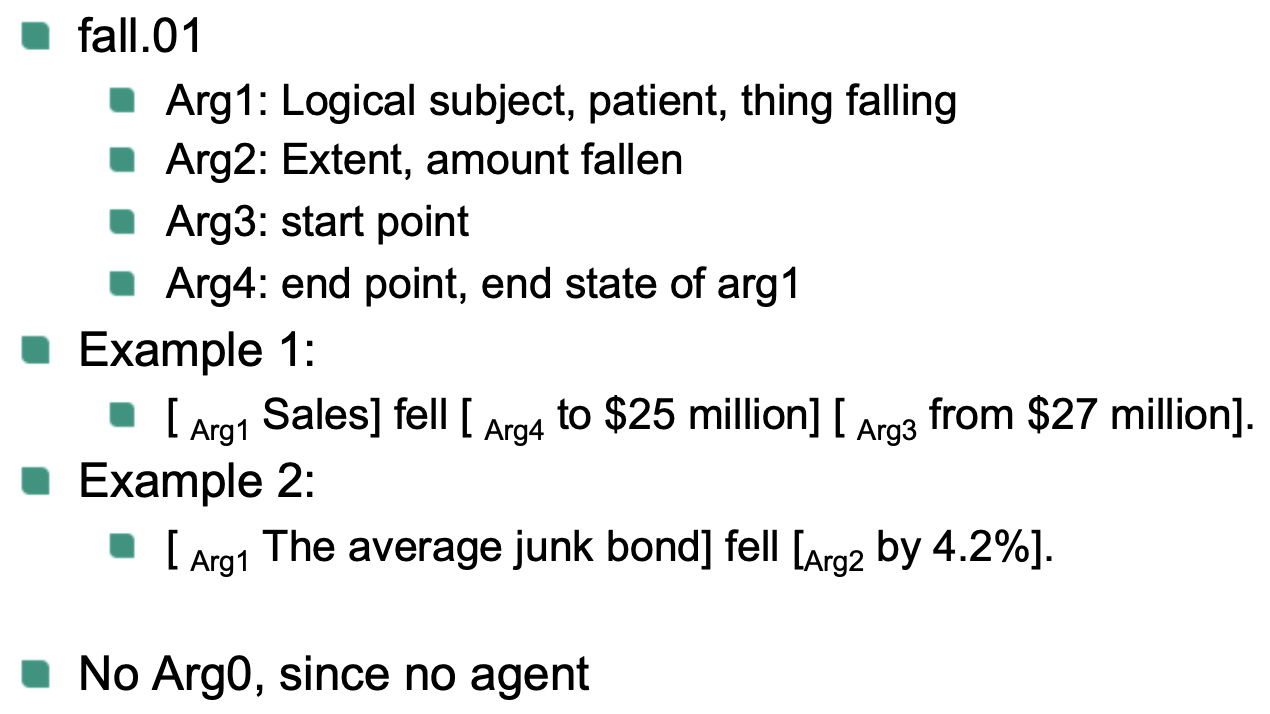

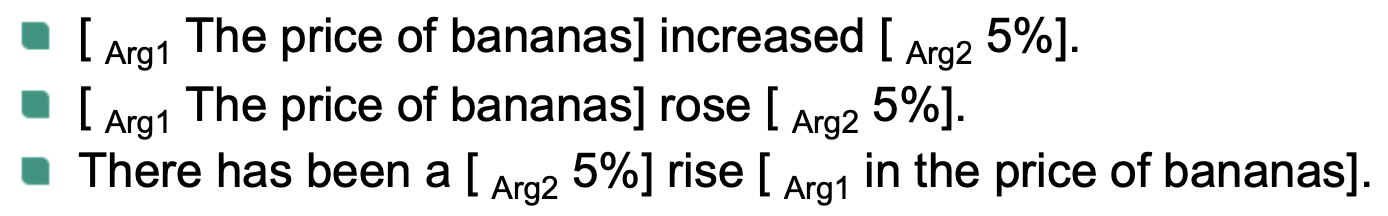

🔴 Problem

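The same event can be expressed with different words, or with the predicate expressed by a noun; PropBank roles are verb-specific and do not generalize across these cases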

Example

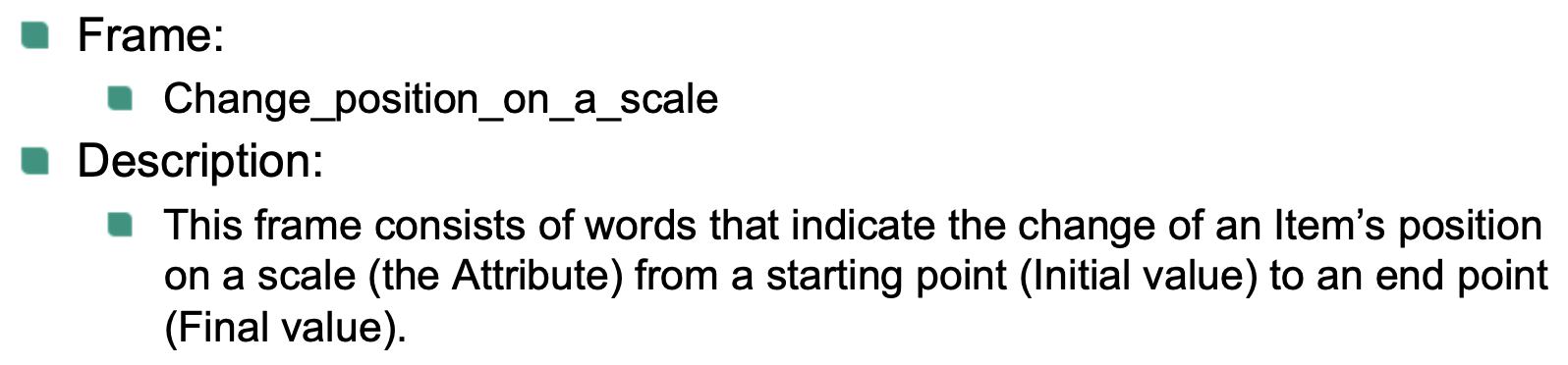

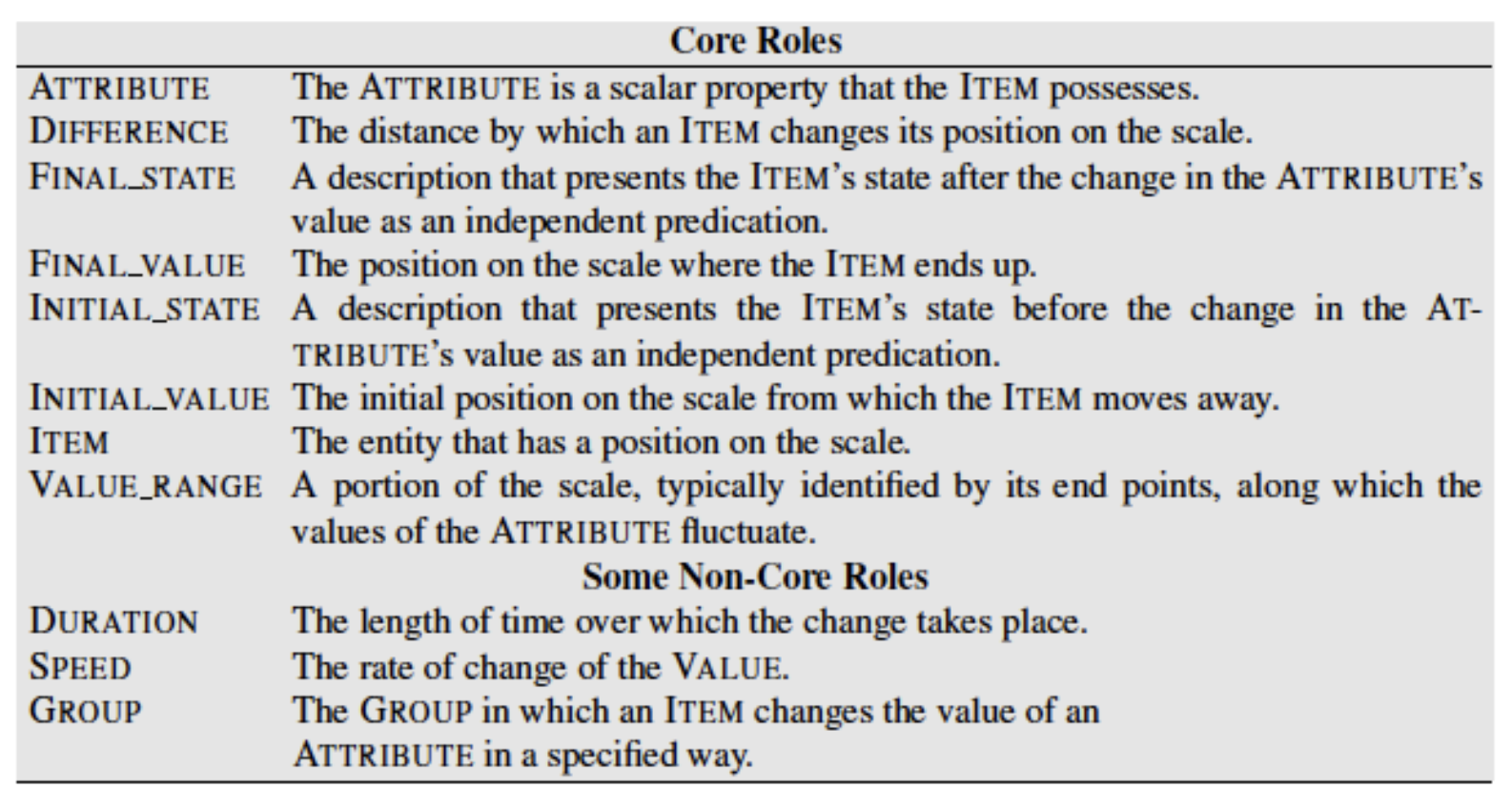

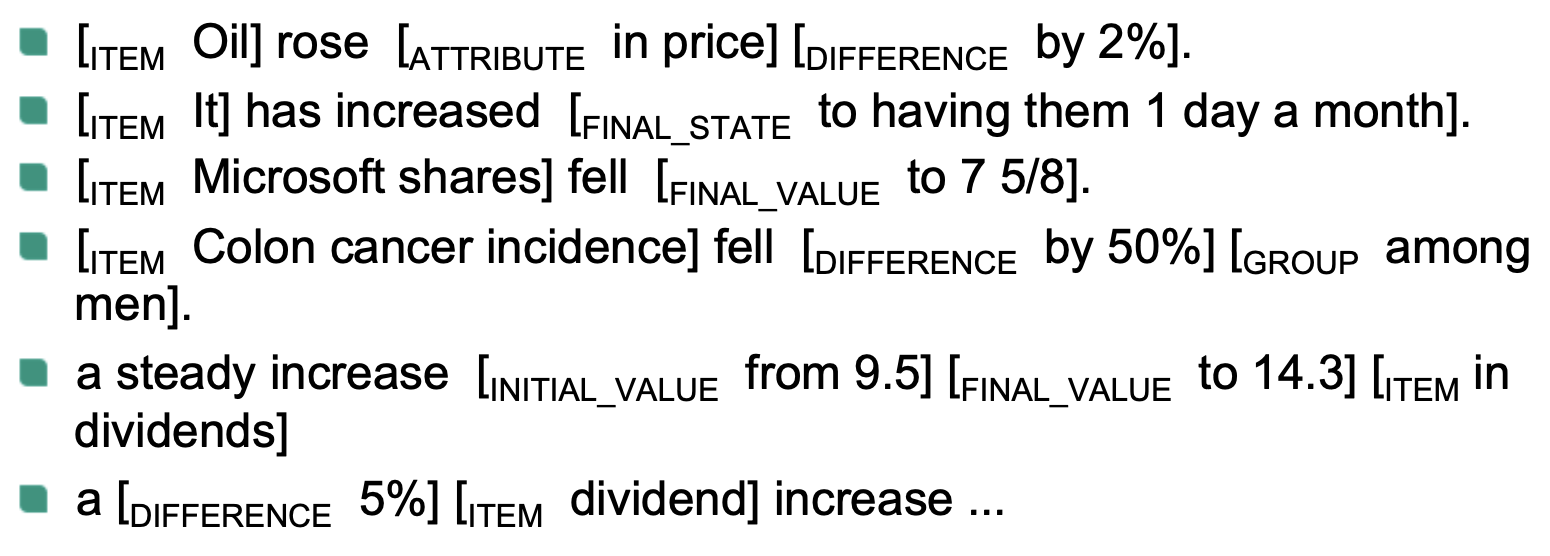

FrameNet

- Roles based on Frames

- Frame: holistic background knowledge that unites these words

- Frame-elements: Frame-specific semantic roles

Example 1

Example 2

Semantic Role Labeling

Task: Automatically finding semantic roles for each argument of each predicate

Approach: Machine learning

High level algorithm:

Parse sentence (Syntax tree)

Find Predicates

For every node in tree: Decide semantic role
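The three steps of the high-level algorithm can be sketched on a hand-built syntax tree. This is a toy illustration, not a real labeler: the `Node` class is hypothetical, the tree is written by hand instead of coming from a parser, and `classify_role` is a hard-coded rule standing in for a trained classifier that would use features relating each node to the predicate.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str                                   # syntactic category, e.g. "NP"
    children: list = field(default_factory=list)
    word: str = ""                               # set only on leaves

    def words(self):
        if not self.children:
            return [self.word]
        return [w for child in self.children for w in child.words()]


def iter_with_parent(node, parent=None):
    """Pre-order traversal yielding (node, parent) pairs."""
    yield node, parent
    for child in node.children:
        yield from iter_with_parent(child, node)


def find_predicates(tree):
    # Step 2: treat every verb leaf (tag starting with "VB") as a predicate.
    return [n for n, _ in iter_with_parent(tree) if n.label.startswith("VB")]


def classify_role(node, parent):
    # Step 3 stand-in: subject NP -> Arg0, object NP -> Arg1, else no role.
    if node.label == "NP" and parent is not None:
        if parent.label == "S":
            return "Arg0"
        if parent.label == "VP":
            return "Arg1"
    return "NONE"


def label_roles(tree):
    results = []
    for predicate in find_predicates(tree):
        for node, parent in iter_with_parent(tree):
            role = classify_role(node, parent)
            if role != "NONE":
                results.append((predicate.word, role, " ".join(node.words())))
    return results


# Step 1 (parsing) is assumed done; this is the tree for "Mary loves John".
tree = Node("S", [
    Node("NP", [Node("NNP", word="Mary")]),
    Node("VP", [
        Node("VBZ", word="loves"),
        Node("NP", [Node("NNP", word="John")]),
    ]),
])
roles = label_roles(tree)
```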

Spoken Language Understanding

Natural language processing for spoken input

Difficulties

Less grammatical speech

Partial Sentences

Disfluencies (Self correction, hesitations, repetitions)

Must be robust to noise

ASR errors

Techniques:

- Confidence

- Multiple hypotheses
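One way to combine both techniques is to run understanding over an n-best list from the recognizer and keep the most confident hypothesis that can actually be interpreted. The sketch below assumes the recognizer returns `(text, confidence)` pairs; the function names, the threshold, and the example scores are all made up for illustration.

```python
def understand(text):
    # Toy NLU: returns an intent, or None if the text cannot be interpreted.
    words = text.split()
    if "flight" in words or "flights" in words:
        return "Find_Flight"
    return None


def pick_interpretation(nbest, min_confidence=0.3):
    """Return (text, intent, confidence) for the best usable hypothesis."""
    best = None
    for text, confidence in nbest:
        if confidence < min_confidence:
            continue                      # too unreliable, ignore
        intent = understand(text)
        if intent is None:
            continue                      # recognized, but not interpretable
        if best is None or confidence > best[2]:
            best = (text, intent, confidence)
    return best


# Hypothetical n-best list: the top hypothesis contains an ASR error.
nbest = [
    ("find fright to boston", 0.55),
    ("find flight to boston", 0.40),
    ("fine light to boston", 0.05),
]
result = pick_interpretation(nbest)
```

Here the second hypothesis wins even though the recognizer ranked it lower, because the first one yields no interpretation. If no hypothesis passes, the function returns `None`, which a dialog manager could answer with a clarification request.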

No structural information

Punctuation

Text segmentation

Approach

- Transform text into task-specific semantic representation of the user’s intention

- Subtasks

Domain Detection

- Motivated by Call Centers

- Many agents with specialization on one topic (Billing inquiries, technical support requests, sales inquiries, etc.)

- First techniques: Menus to find appropriate agent

- Automatic task:

- Given the utterance, find the correct agent

- Utterance classification task

- Input: Utterance

- Output: Topic
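Utterance classification can be illustrated with a small bag-of-words Naive Bayes classifier. This is just one possible technique, chosen here because it fits in a few lines; the notes do not say which classifier is used. The class name, topic labels, and training utterances are invented for the example.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesClassifier:
    """Multinomial Naive Bayes over words, with add-one smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # topic -> word frequencies
        self.class_counts = Counter()            # topic -> number of utterances
        self.vocab = set()

    def train(self, examples):
        for utterance, topic in examples:
            words = utterance.lower().split()
            self.class_counts[topic] += 1
            self.word_counts[topic].update(words)
            self.vocab.update(words)

    def classify(self, utterance):
        words = utterance.lower().split()
        total = sum(self.class_counts.values())

        def log_score(topic):
            counts = self.word_counts[topic]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.class_counts[topic] / total)  # prior
            for word in words:
                score += math.log((counts[word] + 1) / denom)   # likelihood
            return score

        return max(self.class_counts, key=log_score)


# Invented toy training data: (utterance, topic)
classifier = NaiveBayesClassifier()
classifier.train([
    ("my bill is too high", "billing"),
    ("question about my invoice", "billing"),
    ("my internet is not working", "technical_support"),
    ("the router keeps restarting", "technical_support"),
    ("i want to buy a new plan", "sales"),
    ("what offers do you have", "sales"),
])
topic = classifier.classify("my router is not working")
```

The same classifier shape applies to intent determination: only the label set changes, from topics to domain-dependent classes such as `Find_Flight`.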

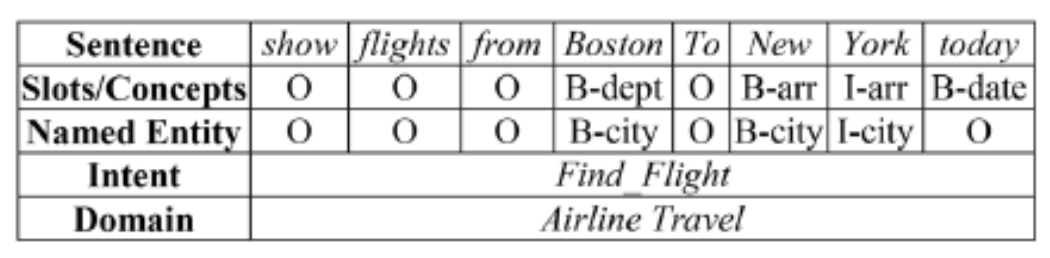

Intention determination

- Domain-dependent utterance classes

- e.g. Find_Flight

- Task: Assign class to Utterance

- Use similar techniques as for domain detection

Slot filling

Sequence labeling task: Assign a semantic class label to every word, given the word and its history

- History: previous words and labels

Example:

Success of deep learning in other areas:

- RNN-based approach

- Find most probable label given word and history
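Written as a formula, the label at position $t$ is chosen as $\hat{y}_t = \arg\max_{y} P(y \mid w_t, w_1 \dots w_{t-1}, y_1 \dots y_{t-1})$. The sketch below shows the greedy left-to-right decoding loop this implies. It is not an RNN: the `score` function is a hand-written stand-in for the learned model, and the label set (BIO-style `fromloc`/`toloc` slots) and the city word list are assumptions made for the example.

```python
LABELS = ["O", "B-fromloc", "I-fromloc", "B-toloc", "I-toloc"]
CITY_WORDS = {"boston", "denver", "san", "francisco"}   # toy gazetteer


def score(label, word, prev_words, prev_labels):
    """Stand-in for P(label | word, history); a trained model would go here."""
    prev_word = prev_words[-1] if prev_words else ""
    prev_label = prev_labels[-1] if prev_labels else "O"
    if word in CITY_WORDS:
        if prev_label != "O":
            expected = "I-" + prev_label[2:]    # continue the open slot
        elif prev_word == "from":
            expected = "B-fromloc"
        elif prev_word == "to":
            expected = "B-toloc"
        else:
            expected = "O"
    else:
        expected = "O"
    return 1.0 if label == expected else 0.0


def fill_slots(words):
    """Greedy decoding: pick the best label per word, left to right."""
    labels = []
    for t, word in enumerate(words):
        best = max(LABELS, key=lambda y: score(y, word, words[:t], labels))
        labels.append(best)
    return labels


words = "flights from boston to san francisco".split()
labels = fill_slots(words)
```

Both parts of the history matter here: the previous word ("from" or "to") decides which slot a city opens, and the previous label decides whether "francisco" continues the slot opened by "san".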