Gradient Descent Overview

🎯 Goal with gradient descent: find the optimal weights that minimize the loss function we've defined for the model.

From now on, we'll explicitly represent the fact that the loss function $L$ is parameterized by the weights, which we refer to as $\theta$ (for logistic regression, $\theta=(w, b)$):

$$
\hat{\theta}=\underset{\theta}{\operatorname{argmin}} \frac{1}{m} \sum_{i=1}^{m} L_{CE}\left(y^{(i)}, x^{(i)} ; \theta\right)
$$

Gradient descent finds a minimum of a function by figuring out in which direction (in the space of the parameters $\theta$) the function's slope is rising most steeply, and moving in the opposite direction.

💡 Intuition

If you are hiking in a canyon and trying to descend most quickly down to the river at the bottom, you might look around yourself 360 degrees, find the direction where the ground is sloping the steepest, and walk downhill in that direction.

For logistic regression, this loss function is conveniently convex:

- Just one minimum
- No local minima to get stuck in

$\Rightarrow$ Gradient descent starting from any point is guaranteed to find the minimum.

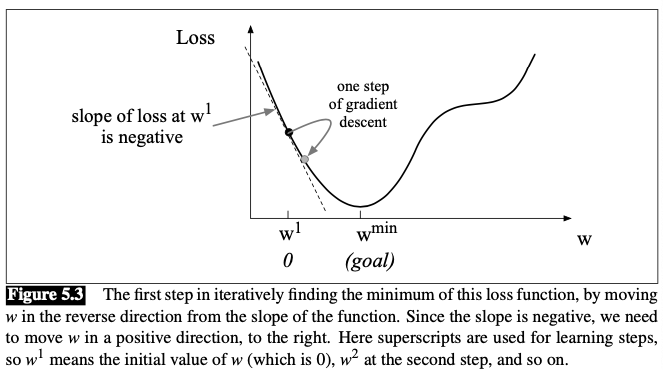

Visualization:

The magnitude of the amount to move in gradient descent is the value of the slope $\frac{d}{d w} f(x ; w)$ weighted by a learning rate $\eta$. A higher (faster) learning rate means that we move $w$ more on each step.

In the single-variable example above, the change we make in our parameter is

$$
w^{t+1}=w^{t}-\eta \frac{d}{d w} f(x ; w)
$$
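As a minimal sketch of this update rule, the loop below applies $w^{t+1}=w^{t}-\eta \frac{d}{dw} f$ to an illustrative convex function $f(w)=(w-3)^2$. The function, the starting point, the step count, and the names `gradient_descent_1d` and `f_prime` are assumptions chosen for the example; they are not part of the original notes.

```python
def gradient_descent_1d(grad, w0, eta=0.1, steps=100):
    """Repeat w <- w - eta * grad(w) for a fixed number of steps."""
    w = w0
    for _ in range(steps):
        w = w - eta * grad(w)
    return w


# Illustrative convex function f(w) = (w - 3)^2, whose minimum is at w = 3.
def f_prime(w):
    return 2.0 * (w - 3.0)


w_final = gradient_descent_1d(f_prime, w0=0.0, eta=0.1, steps=100)
print(w_final)  # very close to 3.0
```

With $\eta = 0.1$ each step shrinks the distance to the minimum by a factor of $0.8$, so the iterate approaches $w=3$ geometrically. A larger $\eta$ moves further per step, and for this particular function any $\eta > 1$ would overshoot so badly that the iterates diverge.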

In $N$-dimensional space, the gradient is a vector that expresses the directional components of the sharpest slope along each of the $N$ dimensions.

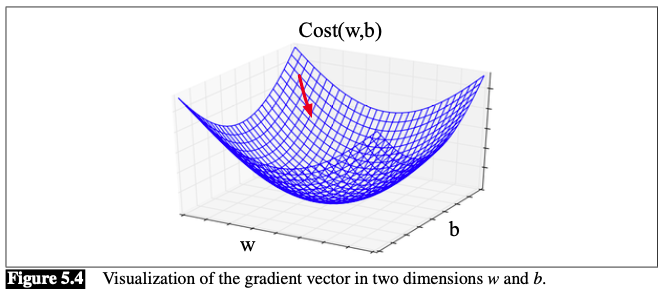

Visualization (e.g., $N=2$):

In each dimension $w_i$, we express the slope as a partial derivative $\frac{\partial}{\partial w_i}$ of the loss function. The gradient is the vector of these partial derivatives:

$$
\nabla_{\theta} L(f(x ; \theta), y)=\left[\begin{array}{c}
\frac{\partial}{\partial w_{1}} L(f(x ; \theta), y) \\
\frac{\partial}{\partial w_{2}} L(f(x ; \theta), y) \\
\vdots \\
\frac{\partial}{\partial w_{n}} L(f(x ; \theta), y)
\end{array}\right]
$$

Thus, the change of $\theta$ is:

$$
\theta_{t+1}=\theta_{t}-\eta \nabla L(f(x ; \theta), y)
$$
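The vector update can be sketched the same way as the scalar one. The example below is only an illustration under stated assumptions: the loss is a hypothetical two-parameter convex bowl (not the logistic regression loss), and `gradient_descent` and `bowl_grad` are names invented for this sketch.

```python
import numpy as np


def gradient_descent(grad, theta0, eta=0.1, steps=200):
    """Repeat theta <- theta - eta * grad(theta) for a fixed number of steps."""
    theta = np.asarray(theta0, dtype=float)
    for _ in range(steps):
        theta = theta - eta * grad(theta)
    return theta


# Hypothetical convex bowl: L(theta) = (theta_1 - 1)^2 + 2 * (theta_2 + 2)^2.
# Its gradient stacks the two partial derivatives into one vector.
def bowl_grad(theta):
    return np.array([2.0 * (theta[0] - 1.0), 4.0 * (theta[1] + 2.0)])


theta_hat = gradient_descent(bowl_grad, theta0=[0.0, 0.0])
print(theta_hat)  # approaches the minimizer [1, -2]
```

Each component of `theta` is moved by its own partial derivative, which is exactly what subtracting $\eta \nabla L$ does in one step.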

The gradient for Logistic Regression

For logistic regression, the cross-entropy loss function is

$$
L_{CE}(w, b)=-[y \log \sigma(w \cdot x+b)+(1-y) \log (1-\sigma(w \cdot x+b))]
$$

The derivative of this loss function is:

$$
\frac{\partial L_{CE}(w, b)}{\partial w_{j}}=[\sigma(w \cdot x+b)-y] x_{j}
$$
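One way to sanity-check this formula is to compare it with a numerical derivative of the loss. The sketch below does that with central finite differences; the example values of `w`, `b`, `x`, and `y` are hypothetical, and the helper names (`sigmoid`, `cross_entropy`, `grad_w`) are chosen for this illustration only.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cross_entropy(w, b, x, y):
    """L_CE(w, b) for a single example (x, y), with y in {0, 1}."""
    p = sigmoid(np.dot(w, x) + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


def grad_w(w, b, x, y):
    """Analytic gradient: dL_CE/dw_j = (sigma(w.x + b) - y) * x_j."""
    return (sigmoid(np.dot(w, x) + b) - y) * x


# Hypothetical example values, chosen only for illustration.
w = np.array([0.5, -1.0, 2.0])
b = 0.1
x = np.array([3.0, 2.0, 1.0])
y = 1

# Central finite differences, perturbing one weight at a time.
eps = 1e-6
numeric = np.zeros_like(w)
for j in range(len(w)):
    step = np.zeros_like(w)
    step[j] = eps
    numeric[j] = (cross_entropy(w + step, b, x, y)
                  - cross_entropy(w - step, b, x, y)) / (2 * eps)

analytic = grad_w(w, b, x, y)
print(analytic)
print(numeric)
```

The two printed vectors should agree to several decimal places. Note that the gradient is just the prediction error $\sigma(w \cdot x+b)-y$ scaled by the input $x$.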

To derive the formula above, we need:

Derivative of $\ln(x)$:

> $$
> \frac{d}{d x} \ln (x)=\frac{1}{x}
> $$

Derivative of the sigmoid:

> $$
> \frac{d \sigma(z)}{d z}=\sigma(z)(1-\sigma(z))
> $$

Chain rule of derivatives: for $f(x)=u(v(x))$,

> $$
> \frac{d f}{d x}=\frac{d u}{d v} \cdot \frac{d v}{d x}
> $$

Now compute the derivative:

> $$
> \begin{aligned}
> \frac{\partial L_{CE}(w, b)}{\partial w_{j}} &=\frac{\partial}{\partial w_{j}}\left(-[y \log \sigma(w \cdot x+b)+(1-y) \log (1-\sigma(w \cdot x+b))]\right) \\
> &=-\frac{\partial}{\partial w_{j}} y \log \sigma(w \cdot x+b) - \frac{\partial}{\partial w_{j}}(1-y) \log [1-\sigma(w \cdot x+b)] \\
> &\overset{\text{chain rule}}{=} -\frac{y}{\sigma(w \cdot x+b)} \frac{\partial}{\partial w_{j}} \sigma(w \cdot x+b)-\frac{1-y}{1-\sigma(w \cdot x+b)} \frac{\partial}{\partial w_{j}} \left[1-\sigma(w \cdot x+b)\right] \\
> &= -\left[\frac{y}{\sigma(w \cdot x+b)}-\frac{1-y}{1-\sigma(w \cdot x+b)}\right] \frac{\partial}{\partial w_{j}} \sigma(w \cdot x+b)
> \end{aligned}
> $$

Now plug in the derivative of the sigmoid, and use the chain rule one more time:

> $$
> \begin{aligned}
> \frac{\partial L_{CE}(w, b)}{\partial w_{j}} &=-\left[\frac{y-\sigma(w \cdot x+b)}{\sigma(w \cdot x+b)[1-\sigma(w \cdot x+b)]}\right] \sigma(w \cdot x+b)[1-\sigma(w \cdot x+b)] \frac{\partial(w \cdot x+b)}{\partial w_{j}} \\
> &=-\left[\frac{y-\sigma(w \cdot x+b)}{\sigma(w \cdot x+b)[1-\sigma(w \cdot x+b)]}\right] \sigma(w \cdot x+b)[1-\sigma(w \cdot x+b)] x_{j} \\
> &=-[y-\sigma(w \cdot x+b)] x_{j} \\
> &=[\sigma(w \cdot x+b)-y] x_{j}
> \end{aligned}
> $$

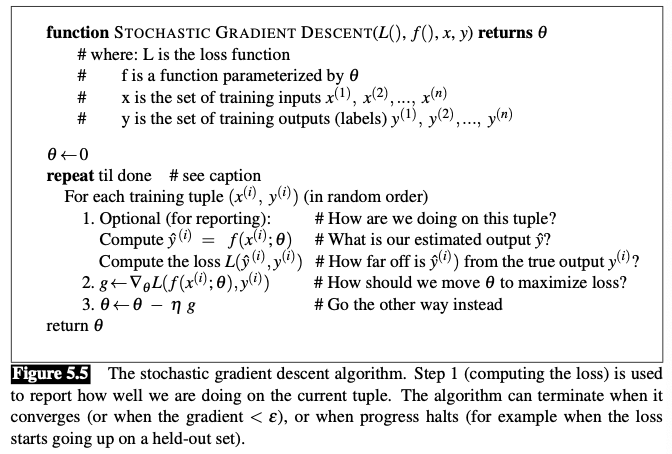

Stochastic Gradient Descent

Stochastic gradient descent is an online algorithm that minimizes the loss function by computing its gradient after each training example, and nudging $\theta$ in the right direction (the opposite direction of the gradient).

The learning rate $\eta$ is a (hyper-)parameter that must be adjusted.

- If it's too high, the learner will take steps that are too large, overshooting the minimum of the loss function.
- If it's too low, the learner will take steps that are too small, and take too long to get to the minimum.

It is common to begin the learning rate at a higher value, and then slowly decrease it, so that it is a function of the iteration $k$ of training.
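The procedure described above can be sketched as follows. This is an illustration under several assumptions that are not in the original notes: the decay schedule $\eta_k = \eta_0 / (1 + \text{decay} \cdot k)$ is just one common choice, the dataset is a tiny hypothetical one, and the function name `sgd_logistic_regression` is invented here. The bias update uses $\sigma(w \cdot x+b)-y$, which follows from the same derivation as the weight gradient because $\frac{\partial (w \cdot x+b)}{\partial b}=1$.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sgd_logistic_regression(X, y, eta0=0.5, decay=0.01, epochs=50, seed=0):
    """SGD for logistic regression with an assumed decaying learning rate."""
    rng = np.random.default_rng(seed)
    n_examples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    k = 0  # iteration counter across all epochs
    for _ in range(epochs):
        for i in rng.permutation(n_examples):  # one random example at a time
            eta_k = eta0 / (1.0 + decay * k)   # assumed schedule: starts high, shrinks
            error = sigmoid(np.dot(w, X[i]) + b) - y[i]
            w -= eta_k * error * X[i]          # step against the gradient w.r.t. w
            b -= eta_k * error                 # step against the gradient w.r.t. b
            k += 1
    return w, b


# Tiny hypothetical dataset: the label is 1 when the single feature is positive.
X = np.array([[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]])
y = np.array([0, 0, 0, 1, 1, 1])

w, b = sgd_logistic_regression(X, y)
predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(w, b, predictions)
```

Because each update uses a single example, the path of `w` and `b` is noisy. Shrinking $\eta_k$ over time damps that noise as the parameters approach the minimum.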

Mini-batch training

- Stochastic gradient descent: chooses a single random example at a time, moving the weights so as to improve performance on that single example.
  - Can result in very choppy movements
- Batch gradient descent: computes the gradient over the entire dataset.
  - Offers a superb estimate of which direction to move the weights
  - Spends a lot of time processing every single example in the training set to compute this perfect direction
- Mini-batch gradient descent: we train on a group of $m$ examples that is smaller than the whole dataset.
  - Has the advantage of computational efficiency
  - The mini-batches can easily be vectorized, choosing the size of the mini-batch based on the computational resources. This allows us to process all the examples in one mini-batch in parallel and then accumulate the loss.

Define the mini-batch version of the cross-entropy loss function (assuming the training examples are independent):

$$
\begin{aligned}
\log p(\text{training labels}) &=\log \prod_{i=1}^{m} p\left(y^{(i)} \mid x^{(i)}\right) \\
&=\sum_{i=1}^{m} \log p\left(y^{(i)} \mid x^{(i)}\right) \\
&=-\sum_{i=1}^{m} L_{CE}\left(\hat{y}^{(i)}, y^{(i)}\right)
\end{aligned}
$$

The cost function for the mini-batch of $m$ examples is the average loss for each example:

$$
\begin{aligned}
\operatorname{cost}(w, b) &=\frac{1}{m} \sum_{i=1}^{m} L_{CE}\left(\hat{y}^{(i)}, y^{(i)}\right) \\
&=-\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log \sigma\left(w \cdot x^{(i)}+b\right)+\left(1-y^{(i)}\right) \log \left(1-\sigma\left(w \cdot x^{(i)}+b\right)\right) \right]
\end{aligned}
$$
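The cost formula vectorizes naturally: stacking the $m$ examples as rows of a matrix lets one matrix-vector product compute every $w \cdot x^{(i)}+b$ at once. The sketch below assumes a hypothetical mini-batch of four examples with two features; `minibatch_cost` is a name chosen for this illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def minibatch_cost(w, b, X, y):
    """Average cross-entropy loss over the m rows of X."""
    p = sigmoid(X @ w + b)  # shape (m,): one predicted probability per example
    losses = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    return losses.mean()


# Hypothetical mini-batch with m = 4 examples and 2 features.
X = np.array([[1.0, 2.0], [0.5, -1.0], [-1.5, 0.3], [2.0, 0.0]])
y = np.array([1, 0, 0, 1])

cost_at_zero = minibatch_cost(np.zeros(2), 0.0, X, y)
print(cost_at_zero)
```

With all parameters at zero, every predicted probability is $0.5$, so each example contributes a loss of $\ln 2 \approx 0.693$ and the average is the same value. This gives a convenient baseline to compare against once training begins.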

The mini-batch gradient is the average of the individual gradients:

$$
\frac{\partial \operatorname{cost}(w, b)}{\partial w_{j}}=\frac{1}{m} \sum_{i=1}^{m}\left[\sigma\left(w \cdot x^{(i)}+b\right)-y^{(i)}\right] x_{j}^{(i)}
$$
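In vectorized form, the sum over examples becomes a single matrix product. The sketch below computes the mini-batch gradient that way and checks it against an explicit per-example average. The data and parameter values are hypothetical, `minibatch_gradient` is an illustrative name, and the bias gradient (the mean error) is included as an assumption that follows from the same derivation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def minibatch_gradient(w, b, X, y):
    """Return (d cost / d w, d cost / d b), averaged over the mini-batch."""
    m = X.shape[0]
    errors = sigmoid(X @ w + b) - y  # shape (m,): sigma(w.x_i + b) - y_i
    grad_w = X.T @ errors / m        # shape (n_features,)
    grad_b = errors.mean()
    return grad_w, grad_b


# Hypothetical mini-batch (m = 4, two features) and parameter values.
X = np.array([[1.0, 2.0], [0.5, -1.0], [-1.5, 0.3], [2.0, 0.0]])
y = np.array([1, 0, 0, 1])
w = np.array([0.2, -0.4])
b = 0.1

grad_w, grad_b = minibatch_gradient(w, b, X, y)

# The same quantity computed one example at a time, then averaged.
per_example = [(sigmoid(np.dot(w, x_i) + b) - y_i) * x_i for x_i, y_i in zip(X, y)]
grad_w_loop = np.mean(per_example, axis=0)

print(grad_w, grad_w_loop)
```

The product `X.T @ errors` computes, for each feature $j$, the sum $\sum_i [\sigma(w \cdot x^{(i)}+b)-y^{(i)}]\, x_j^{(i)}$, so dividing by $m$ reproduces the formula above without a Python-level loop.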