Sigmoid

Sigmoid to Logistic Regression

Consider a single input observation $x$, represented by a vector of features $[x_1, x_2, \ldots, x_n]$.

The classifier output $y$ can be

- $y = 1$: the observation is a member of the class

- $y = 0$: the observation is NOT a member of the class

We want to know the probability $P(y = 1 \mid x)$ that this observation is a member of the class.

E.g.:

- The decision is “positive sentiment” versus “negative sentiment”

- the features represent counts of words in a document

- $P(y = 1 \mid x)$ is the probability that the document has positive sentiment, and $P(y = 0 \mid x)$ is the probability that the document has negative sentiment.

Logistic regression solves this task by learning, from a training set, a vector of weights $w$ and a bias term $b$.

Each weight $w_i$ is a real number associated with one of the input features $x_i$. The weight $w_i$ represents how important that input feature is to the classification decision, and can be

- positive (meaning the feature is associated with the class)

- negative (meaning the feature is NOT associated with the class).

E.g., in a sentiment task we might expect the word “awesome” to have a high positive weight and “abysmal” to have a very negative weight.

The bias term $b$, also called the intercept, is another real number that’s added to the weighted inputs.

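To make a decision on a test instance, after the weights have been learned in training, the classifier multiplies each feature $x_i$ by its weight $w_i$, sums the weighted features, and adds the bias term $b$. The resulting single number $z$ expresses the weighted sum of the evidence for the class:

$$z = \left(\sum_{i=1}^{n} w_i x_i\right) + b = w \cdot x + b$$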

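(Note that $z$ is NOT a legal probability: nothing forces it to lie between 0 and 1, and since the weights are real-valued, $z$ can range from $-\infty$ to $\infty$.)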
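The weighted sum $z = w \cdot x + b$ can be sketched in a few lines of Python. The function name `weighted_sum` and the numbers below are hypothetical. They were chosen only to show that $z$ is an unbounded real number and not a probability.

```python
def weighted_sum(w: list[float], x: list[float], b: float) -> float:
    """Return z = sum_i w_i * x_i + b, the weighted evidence for the class."""
    if len(w) != len(x):
        raise ValueError("w and x must have the same length")
    return sum(w_i * x_i for w_i, x_i in zip(w, x)) + b


# Hypothetical weights, bias, and features (illustration only).
w = [1.5, -2.0, 0.5]
b = 0.25
x = [4.0, 1.0, 2.0]

z = weighted_sum(w, x, b)
print(z)  # prints 5.25, which is greater than 1, so z is not a probability
```

With other inputs the same function can just as easily return a negative number. This is why $z$ still has to be squashed before it can be read as a probability.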

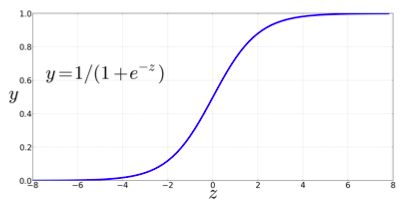

To create a probability, we’ll pass $z$ through the sigmoid function $\sigma(z)$ (also called the logistic function):

$$\sigma(z) = \frac{1}{1 + e^{-z}} = \frac{1}{1 + \exp(-z)}$$
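A direct Python sketch of $\sigma(z) = 1 / (1 + e^{-z})$ is shown below. One detail is an implementation choice and not part of the definition: the function branches on the sign of $z$. Evaluating `math.exp(-z)` for a very negative $z$ would overflow, so for $z < 0$ the sketch uses the algebraically equivalent form $e^{z} / (1 + e^{z})$.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function sigma(z) = 1 / (1 + exp(-z))."""
    if z >= 0:
        # exp(-z) <= 1 here, so nothing can overflow.
        return 1.0 / (1.0 + math.exp(-z))
    # For z < 0, rewrite as e^z / (1 + e^z): math.exp(-z) would raise
    # OverflowError for z below roughly -709.
    e = math.exp(z)
    return e / (1.0 + e)


for z in (-5.0, 0.0, 5.0):
    print(z, round(sigmoid(z), 4))
# -5.0 0.0067
# 0.0 0.5
# 5.0 0.9933
```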

👍 Advantages of sigmoid

- It takes a real-valued number and maps it into the range $(0, 1)$, which is just what we want for a probability.

- It is nearly linear around 0 but flattens out toward the ends, so it tends to squash outlier values toward 0 or 1.

- It is differentiable, which is handy for learning.
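The shape and differentiability claims can be checked numerically. The sigmoid has the closed-form derivative $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$. The sketch below compares it with a central finite-difference estimate. The helper names `sigmoid_grad` and `numeric_grad` and the step size `h` are illustrative choices. The slope is largest (0.25) at $z = 0$ and shrinks toward the ends, which is the flattening described above.

```python
import math
from typing import Callable


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Closed-form derivative: sigma'(z) = sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def numeric_grad(f: Callable[[float], float], z: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of f'(z)."""
    return (f(z + h) - f(z - h)) / (2.0 * h)


# The slope is steepest at 0 and becomes tiny as z moves toward the ends.
for z in (0.0, 2.0, 6.0):
    print(z, round(sigmoid_grad(z), 5), round(numeric_grad(sigmoid, z), 5))
```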

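To make it a probability, we just need to make sure that the two cases, $P(y = 1)$ and $P(y = 0)$, sum to 1:

$$P(y = 1) = \sigma(w \cdot x + b) = \frac{1}{1 + \exp(-(w \cdot x + b))}$$

$$P(y = 0) = 1 - \sigma(w \cdot x + b) = 1 - \frac{1}{1 + \exp(-(w \cdot x + b))} = \frac{\exp(-(w \cdot x + b))}{1 + \exp(-(w \cdot x + b))}$$

Since $1 - \sigma(z) = \sigma(-z)$, this can also be written as $P(y = 0) = \sigma(-(w \cdot x + b))$.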

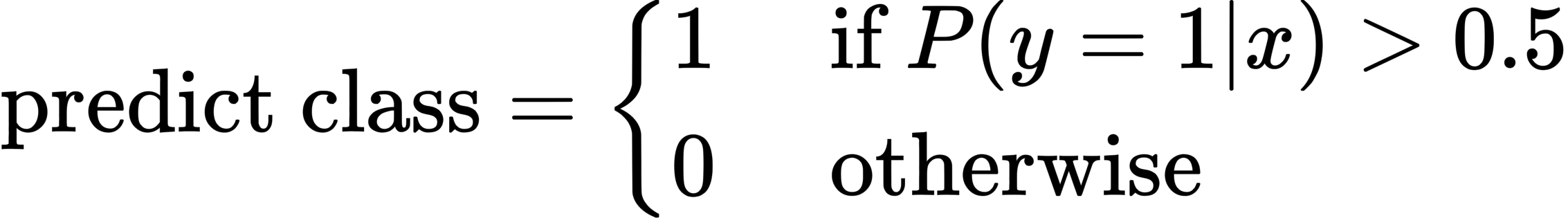
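A small sketch of computing both class probabilities from $z = w \cdot x + b$ follows. The function name `class_probabilities` and all numeric inputs are hypothetical. The last line checks the identity $1 - \sigma(z) = \sigma(-z)$.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def class_probabilities(w: list[float], x: list[float], b: float) -> tuple[float, float]:
    """Return (P(y=1|x), P(y=0|x)) for binary logistic regression."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    p1 = sigmoid(z)
    p0 = 1.0 - p1  # the complement, so the two cases sum to 1
    return p1, p0


# Hypothetical weights, features, and bias (illustration only).
w, x, b = [0.8, -0.4], [2.0, 1.0], -0.5
p1, p0 = class_probabilities(w, x, b)
print(p1, p0, p1 + p0)

# The complement equals the sigmoid of -z.
z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
print(math.isclose(p0, sigmoid(-z)))  # True
```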

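Now we have an algorithm that, given an instance $x$, computes the probability $P(y = 1 \mid x)$. For a test instance $x$, we say yes if the probability $P(y = 1 \mid x)$ is more than 0.5, and no otherwise. We call 0.5 the decision boundary:

$$\hat{y} = \begin{cases} 1 & \text{if } P(y = 1 \mid x) > 0.5 \\ 0 & \text{otherwise} \end{cases}$$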
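The decision rule predicts $\hat{y} = 1$ if $P(y = 1 \mid x) > 0.5$ and $\hat{y} = 0$ otherwise. It can be sketched as a thin wrapper around the sigmoid. The name `classify` and the `threshold` parameter are illustrative, and the inputs are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def classify(w: list[float], x: list[float], b: float, threshold: float = 0.5) -> int:
    """Return 1 if P(y=1|x) = sigmoid(w.x + b) exceeds the threshold, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if sigmoid(z) > threshold else 0


w, b = [1.0, -1.0], 0.0
print(classify(w, [3.0, 1.0], b))  # z = 2.0,  sigma(z) ~ 0.88 -> 1
print(classify(w, [1.0, 3.0], b))  # z = -2.0, sigma(z) ~ 0.12 -> 0
print(classify(w, [1.0, 1.0], b))  # z = 0.0,  sigma(z) = 0.5, not > 0.5 -> 0
```

Because $\sigma(z) > 0.5$ exactly when $z > 0$, the 0.5 boundary is equivalent to checking the sign of $w \cdot x + b$. The sigmoid is still needed whenever the probability itself is wanted.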

Example: sentiment classification

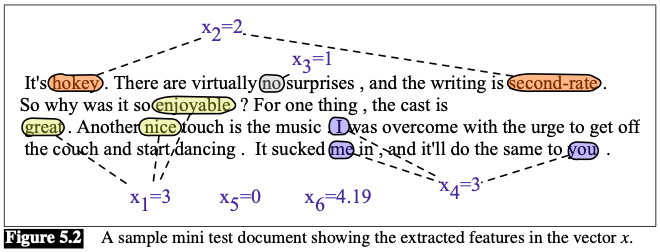

Suppose we are doing binary sentiment classification on movie review text, and we would like to know whether to assign the sentiment class + or − to a review document $doc$.

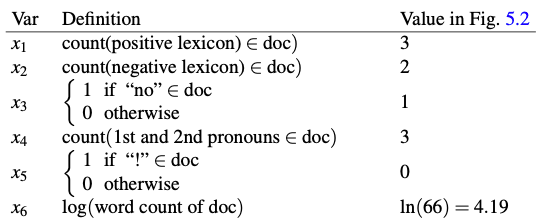

We’ll represent each input observation by the 6 features $x_1, \ldots, x_6$ of the input, shown in the following table.

Assume for the moment that we’ve already learned a real-valued weight for each of these features, and that the 6 weights corresponding to the 6 features are $[2.5, -5.0, -1.2, 0.5, 2.0, 0.7]$, while $b = 0.1$.

- The weight $w_1$, for example, indicates how important a feature the number of positive lexicon words (great, nice, enjoyable, etc.) is to a positive sentiment decision, while $w_2$ tells us the importance of negative lexicon words. Note that $w_1 = 2.5$ is positive, while $w_2 = -5.0$, meaning that negative words are negatively associated with a positive sentiment decision and are about twice as important as positive words.

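Given these 6 features and the input review $x$, $P(+ \mid x)$ and $P(- \mid x)$ can be computed:

$$P(+ \mid x) = P(y = 1 \mid x) = \sigma(w \cdot x + b)$$

$$P(- \mid x) = P(y = 0 \mid x) = 1 - \sigma(w \cdot x + b)$$

This sentiment is positive ($P(+ \mid x) > 0.5$).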
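The full pipeline for this example can be sketched end to end. The sketch uses the six weights $[2.5, -5.0, -1.2, 0.5, 2.0, 0.7]$ and bias $b = 0.1$ of the example. The feature values $x = [3, 2, 1, 3, 0, \ln 66]$ are an assumption made for illustration, not values read from the feature table, so swap in the real counts for an actual review.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Weights w1..w6 and bias for the six sentiment features.
w = [2.5, -5.0, -1.2, 0.5, 2.0, 0.7]
b = 0.1

# ASSUMED feature values x1..x6 for a hypothetical review (illustration only).
x = [3, 2, 1, 3, 0, math.log(66)]

z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
p_pos = sigmoid(z)    # P(+|x) = P(y=1|x)
p_neg = 1.0 - p_pos   # P(-|x) = P(y=0|x)
label = "+" if p_pos > 0.5 else "-"
print(f"z = {z:.3f}, P(+|x) = {p_pos:.2f}, P(-|x) = {p_neg:.2f}, class {label}")
# z = 0.833, P(+|x) = 0.70, P(-|x) = 0.30, class +
```

The two negative-lexicon words contribute $2 \times (-5.0) = -10$, which outweighs the $3 \times 2.5 = 7.5$ from the three positive words. The review still ends up positive because of the other features and the bias.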