Train Naive Bayes Classifiers

Maximum Likelihood Estimate (MLE)

In Naive Bayes, we have to learn the probabilities $P(c)$ and $P(w_i \mid c)$. We use the maximum likelihood estimate (MLE) to estimate them, which simply means using the frequencies in the training data.

$\hat{P}(c)$: document prior

- “what percentage of the documents in our training set are in each class $c$?”

$$
\hat{P}(c) = \frac{N_c}{N_{doc}}
$$

- $N_c$: the number of documents in our training data with class $c$

- $N_{doc}$: the total number of documents
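As a minimal sketch of the prior estimate, the snippet below counts how many training documents carry each label and divides by the total. The function name `estimate_priors` and the toy labels are assumptions made for illustration only.

```python
from collections import Counter

def estimate_priors(labels):
    """Return {class: N_c / N_doc} from a list of training labels."""
    n_doc = len(labels)              # N_doc: total number of documents
    class_counts = Counter(labels)   # N_c for each class c
    return {c: n_c / n_doc for c, n_c in class_counts.items()}

# Hypothetical toy labels: three negative and two positive documents.
toy_labels = ["neg", "neg", "neg", "pos", "pos"]
priors = estimate_priors(toy_labels)
print(priors)
```

The priors always sum to one, because every training document belongs to exactly one class.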

$\hat{P}(w_i \mid c)$: “The fraction of times the word $w_i$ appears among all words in all documents of topic $c$”

We first concatenate all documents with category $c$ into one big “category $c$” text.

Then we use the frequency of $w_i$ in this concatenated document to give a maximum likelihood estimate of the probability:

$$
\hat{P}(w_i \mid c) = \frac{count(w_i, c)}{\sum_{w \in V} count(w, c)}
$$

- $V$: the vocabulary, which consists of the union of all the word types in all classes, not just the words in one class
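The concatenate-then-count procedure can be sketched as follows. This is an illustration under simple assumptions (lowercasing and whitespace tokenization, made-up toy documents, and a hypothetical helper name `mle_likelihoods`), not a reference implementation.

```python
from collections import Counter

def mle_likelihoods(docs, labels):
    """Unsmoothed estimate: count(w, c) / total number of tokens in class c."""
    # Concatenate all documents of each class into one list of tokens.
    tokens_by_class = {}
    for doc, label in zip(docs, labels):
        tokens_by_class.setdefault(label, []).extend(doc.lower().split())

    # V is the union of the word types over all classes.
    vocab = {w for tokens in tokens_by_class.values() for w in tokens}

    likelihoods = {}
    for c, tokens in tokens_by_class.items():
        counts = Counter(tokens)
        total = len(tokens)  # equals the sum of count(w, c) over all w in V
        likelihoods[c] = {w: counts[w] / total for w in vocab}
    return vocab, likelihoods

# Hypothetical toy data.
toy_docs = ["great great film", "dull film"]
toy_labels = ["pos", "neg"]
vocab, likelihoods = mle_likelihoods(toy_docs, toy_labels)
print(likelihoods["pos"]["great"])  # 2 of the 3 positive tokens
print(likelihoods["neg"]["great"])  # "great" never occurs in the negative class
```

Note that the unsmoothed estimate assigns probability zero to any word that never occurs in a class, even though the word is in the shared vocabulary.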

Avoid zero probabilities in the likelihood term for any class: Laplace (add-one) smoothing

Why is this a problem?

Imagine we are trying to estimate the likelihood of the word “fantastic” given class positive, but suppose there are no training documents that both contain the word “fantastic” and are classified as positive. Perhaps the word “fantastic” happens to occur (sarcastically?) in the class negative. In such a case the probability for this feature will be zero:

$$
\hat{P}(\text{fantastic} \mid \text{positive}) = \frac{count(\text{fantastic}, \text{positive})}{\sum_{w \in V} count(w, \text{positive})} = 0
$$

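But since naive Bayes naively multiplies all the feature likelihoods together, zero probabilities in the likelihood term for any class will cause the probability of the class to be zero, no matter the other evidence!

The simplest fix is Laplace (add-one) smoothing, which adds one to every count before normalizing:

$$
\hat{P}(w_i \mid c) = \frac{count(w_i, c) + 1}{\sum_{w \in V} \left(count(w, c) + 1\right)} = \frac{count(w_i, c) + 1}{\left(\sum_{w \in V} count(w, c)\right) + |V|}
$$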
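To make the zero-probability problem concrete, here is a small sketch that compares the unsmoothed estimate with the add-one estimate, (count + 1) / (tokens in class + |V|). The token lists and function names are hypothetical and chosen only to mirror the “fantastic” scenario.

```python
from collections import Counter

def mle_likelihood(word, class_tokens):
    """Unsmoothed estimate: count(w, c) / number of tokens in class c."""
    return Counter(class_tokens)[word] / len(class_tokens)

def laplace_likelihood(word, class_tokens, vocab):
    """Add-one estimate: (count(w, c) + 1) / (tokens in c + |V|)."""
    return (Counter(class_tokens)[word] + 1) / (len(class_tokens) + len(vocab))

# Hypothetical toy data: "fantastic" only ever appears in the negative class.
pos_tokens = ["great", "film"]
neg_tokens = ["fantastic", "mess"]
vocab = set(pos_tokens) | set(neg_tokens)

unsmoothed = mle_likelihood("fantastic", pos_tokens)
smoothed = laplace_likelihood("fantastic", pos_tokens, vocab)
print(unsmoothed)  # zero, which would wipe out the whole product for "positive"
print(smoothed)    # (0 + 1) / (2 + 4)
```

Because one is added for every word type in the vocabulary, the smoothed likelihoods of a class still sum to one.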

In addition to $P(c)$ and $P(w_i \mid c)$, we should also deal with:

- Unknown words

  - Words that occur in our test data but are NOT in our vocabulary at all because they did not occur in any training document in any class

  - 🔧 Solution: Ignore them

    - Remove them from the test document and do not include any probability for them at all

- Stop words

  - Very frequent words like “the” and “a”

  - Solution:

    - Method 1:

      - Sort the vocabulary by frequency in the training set

      - Define the top 10–100 vocabulary entries as stop words

    - Method 2:

      - Use one of the many predefined stop word lists available online

    - Then every instance of these stop words is simply removed from both training and test documents, as if it had never occurred.

  - In most text classification applications, however, using a stop word list does NOT improve performance 🤪, so it is more common to use the entire vocabulary and not use a stop word list.
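Both treatments amount to filtering tokens before scoring. The sketch below shows one possible way to do it: `top_k_stop_words` follows Method 1 (most frequent training words), and `filter_tokens` drops unknown words and, optionally, stop words. The function names, the whitespace tokenization, and the toy documents are assumptions for illustration.

```python
from collections import Counter

def top_k_stop_words(training_docs, k):
    """Method 1: treat the k most frequent training word types as stop words."""
    counts = Counter(w for doc in training_docs for w in doc.lower().split())
    return {w for w, _ in counts.most_common(k)}

def filter_tokens(tokens, vocab, stop_words=frozenset()):
    """Drop unknown words (not in vocab) and, optionally, stop words."""
    return [w for w in tokens if w in vocab and w not in stop_words]

# Hypothetical toy training documents.
training_docs = ["the film was the best", "the plot was thin"]
vocab = {w for doc in training_docs for w in doc.split()}
stop_words = top_k_stop_words(training_docs, k=2)  # "the" (3 times), "was" (2 times)

test_tokens = "the plot was superb".split()
print(filter_tokens(test_tokens, vocab))              # "superb" is unknown and dropped
print(filter_tokens(test_tokens, vocab, stop_words))  # stop words dropped as well
```

In a real pipeline the same stop word filter would also be applied to the training documents before counting, so that training and test data are treated consistently.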

Example

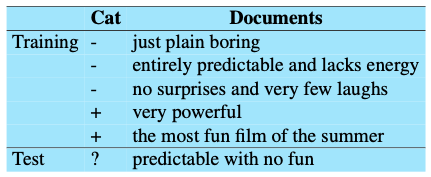

We’ll use a sentiment analysis domain with the two classes positive (+) and negative (-), and take the following miniature training and test documents simplified from actual movie reviews.

- The prior $P(c)$ for the two classes:

$$
P(-) = \frac{3}{5} \qquad P(+) = \frac{2}{5}
$$

The word “with” doesn’t occur in the training set, so we drop it completely.

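The remaining three words are “predictable”, “no”, and “fun”. Their likelihoods from the training set are (with Laplace smoothing):

$$
\begin{aligned}
P(\text{predictable} \mid -) &= \frac{1+1}{14+20} & P(\text{predictable} \mid +) &= \frac{0+1}{9+20} \\
P(\text{no} \mid -) &= \frac{1+1}{14+20} & P(\text{no} \mid +) &= \frac{0+1}{9+20} \\
P(\text{fun} \mid -) &= \frac{0+1}{14+20} & P(\text{fun} \mid +) &= \frac{1+1}{9+20}
\end{aligned}
$$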

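For example, the word “predictable” occurs once in the negative (-) class, which contains 14 word tokens in total, and the vocabulary has 20 word types. Therefore, with Laplace smoothing:

$$
P(\text{predictable} \mid -) = \frac{1+1}{14+20} = \frac{2}{34}
$$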

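For the test sentence $S$ = “predictable with no fun”, after removing the word “with”:

$$
\begin{aligned}
P(-)\,P(S \mid -) &= \frac{3}{5} \times \frac{2 \times 2 \times 1}{34^3} \approx 6.1 \times 10^{-5} \\
P(+)\,P(S \mid +) &= \frac{2}{5} \times \frac{1 \times 1 \times 2}{29^3} \approx 3.3 \times 10^{-5}
\end{aligned}
$$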

The model predicts the class negative for the test sentence.
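The whole example can be checked with a short script. The training documents are an assumption here: the original table is only available as an image, so the five documents below are the ones usually paired with this test sentence and should be treated as illustrative. The function names are likewise hypothetical. The sketch multiplies probabilities directly, as in the text; for longer documents, summing log probabilities avoids numerical underflow.

```python
from collections import Counter
from math import prod

# ASSUMPTION: training documents reconstructed for illustration; the original
# table is in an image and may differ.
train = [
    ("just plain boring", "-"),
    ("entirely predictable and lacks energy", "-"),
    ("no surprises and very few laughs", "-"),
    ("very powerful", "+"),
    ("the most fun film of the summer", "+"),
]
test_sentence = "predictable with no fun"

def train_naive_bayes(examples):
    """Return class priors, per-class word counts, and the shared vocabulary."""
    class_docs = Counter(label for _, label in examples)
    n_doc = len(examples)
    priors = {c: n / n_doc for c, n in class_docs.items()}
    counts = {c: Counter() for c in class_docs}
    for text, label in examples:
        counts[label].update(text.split())
    vocab = set().union(*counts.values())  # union of word types over all classes
    return priors, counts, vocab

def score(sentence, c, priors, counts, vocab):
    """P(c) times the product of add-one smoothed likelihoods of known words."""
    words = [w for w in sentence.split() if w in vocab]  # drop unknown words
    total = sum(counts[c].values())                      # tokens in class c
    likelihoods = [(counts[c][w] + 1) / (total + len(vocab)) for w in words]
    return priors[c] * prod(likelihoods)

priors, counts, vocab = train_naive_bayes(train)
scores = {c: score(test_sentence, c, priors, counts, vocab) for c in priors}
prediction = max(scores, key=scores.get)
print(scores, prediction)
```

Under these assumed documents, the negative class has 14 tokens, the positive class has 9, and the vocabulary has 20 word types, so the script reproduces the hand computation and selects the negative class.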