HMM Part-of-Speech Tagging

Sequence model/classifier

Assign a label or class to each unit in a sequence

Maps a sequence of observations to a sequence of labels

Hidden Markov Model (HMM) is a probabilistic sequence model

Given a sequence of units (words, letters, morphemes, sentences, etc.)

it computes a probability distribution over possible sequences of labels and chooses the best label sequence

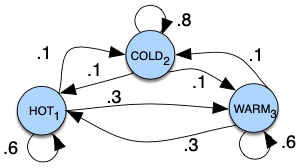

Markov Chains

A Markov chain is a model that tells us something about the probabilities of sequences of random variables (states), each of which can take on values from some set. These sets can be words, or tags, or symbols representing anything (e.g., the weather).

💡 A Markov chain makes a very strong assumption that

if we want to predict the future in the sequence, all that matters is the current state.

All the states before the current state have NO impact on the future except via the current state.

- It’s as if to predict tomorrow’s weather you could examine today’s weather but you weren’t allowed to look at yesterday’s weather.

👆 Markov assumption

Consider a sequence of state variables $q_1, q_2, \ldots, q_i$

When predicting the future, the past does NOT matter, only the present: $P(q_i = a \mid q_1 \ldots q_{i-1}) = P(q_i = a \mid q_{i-1})$

Markov chain

embodies the Markov assumption on the probabilities of this sequence

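specified by the following components

$Q = q_1 q_2 \ldots q_N$: a set of $N$ states

$A = a_{11} a_{12} \ldots a_{N1} \ldots a_{NN}$: transition probability matrix

- Each $a_{ij}$ represents the probability of moving from state $i$ to state $j$, s.t. $\sum_{j=1}^{N} a_{ij} = 1 \;\; \forall i$

$\pi = \pi_1, \pi_2, \ldots, \pi_N$: an initial probability distribution over states

$\pi_i$: probability that the Markov chain will start in state $i$

Some states $j$ may have $\pi_j = 0$ (meaning that they can NOT be initial states); also, $\sum_{i=1}^{N} \pi_i = 1$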

Example

Nodes: states

Edges: transitions, with their probabilities

- The values of arcs leaving a given state must sum to 1.

Setting the start distribution to $\pi = [0.1, 0.7, 0.2]$ would mean a probability 0.7 of starting in state 2 (cold), probability 0.1 of starting in state 1 (hot), and 0.2 of starting in state 3 (warm)
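Under the Markov assumption, scoring a state sequence is just the start probability of the first state times one transition probability per step. A minimal sketch, using the start probabilities from the example (0.1 hot, 0.7 cold, 0.2 warm); the transition values in `A` are hypothetical placeholders, not taken from the original figure.

```python
# Start distribution from the example above, keyed by state name.
PI = {"HOT": 0.1, "COLD": 0.7, "WARM": 0.2}
# Hypothetical transition probabilities A[prev][cur] (NOT read off the figure).
A = {
    "HOT":  {"HOT": 0.6, "COLD": 0.1, "WARM": 0.3},
    "COLD": {"HOT": 0.1, "COLD": 0.8, "WARM": 0.1},
    "WARM": {"HOT": 0.3, "COLD": 0.1, "WARM": 0.6},
}

def is_valid_chain(pi, a, tol=1e-9):
    """pi and every row of A (the arcs leaving one state) must each sum to 1."""
    rows_ok = all(abs(sum(row.values()) - 1.0) < tol for row in a.values())
    return rows_ok and abs(sum(pi.values()) - 1.0) < tol

def sequence_probability(states, pi=PI, a=A):
    """P(q_1 ... q_T) = pi[q_1] * prod_t a[q_{t-1}][q_t] under the Markov assumption."""
    prob = pi[states[0]]
    for prev, cur in zip(states, states[1:]):
        prob *= a[prev][cur]  # only the previous state is consulted
    return prob

print(is_valid_chain(PI, A))
print(sequence_probability(["COLD", "COLD", "WARM"]))
```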

Hidden Markov Model (HMM)

A Markov chain is useful when we need to compute a probability for a sequence of observable events.

In many cases, however, the events we are interested in are hidden: we don’t observe them directly.

- We do NOT normally observe POS tags in a text

- Rather, we see words, and must infer the tags from the word sequence

We call the tags hidden because they are NOT observed.

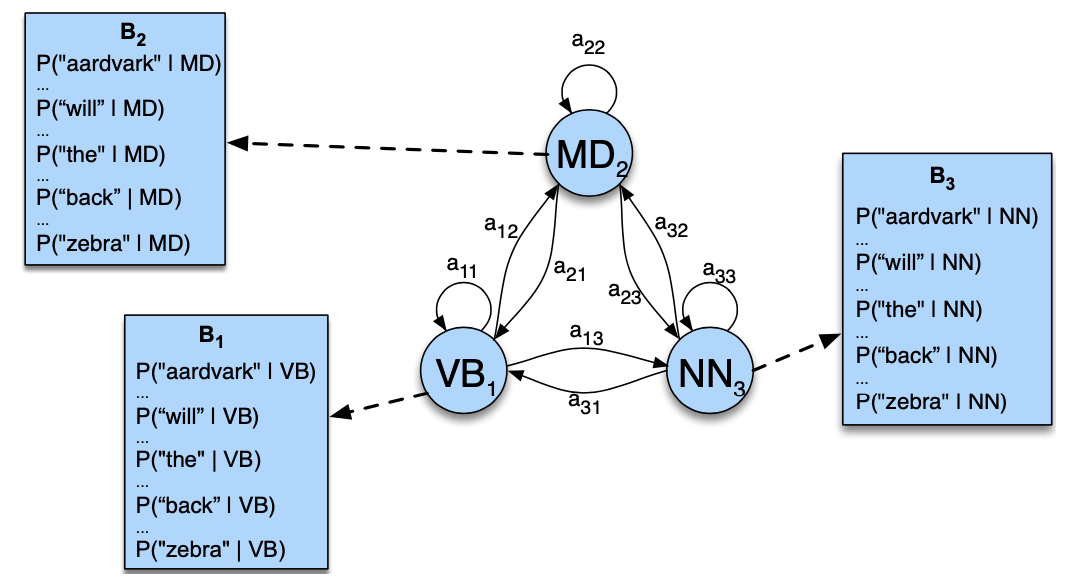

Hidden Markov Model (HMM)

allows us to talk about both observed events (like words that we see in the input) and hidden events (like part-of-speech tags) that we think of as causal factors in our probabilistic model

Specified by:

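$Q = q_1 q_2 \ldots q_N$: a set of $N$ states

$A = a_{11} \ldots a_{ij} \ldots a_{NN}$: transition probability matrix

- Each $a_{ij}$ represents the probability of moving from state $i$ to state $j$, s.t. $\sum_{j=1}^{N} a_{ij} = 1 \;\; \forall i$

$O = o_1 o_2 \ldots o_T$: a sequence of $T$ observations

- Each one drawn from a vocabulary $V = v_1, v_2, \ldots, v_V$

$B = b_i(o_t)$: a sequence of observation likelihoods (also called emission probabilities)

- Each expressing the probability of an observation $o_t$ being generated from a state $q_i$

$\pi = \pi_1, \pi_2, \ldots, \pi_N$: an initial probability distribution over states

$\pi_i$: probability that the Markov chain will start in state $i$

Some states $j$ may have $\pi_j = 0$ (meaning that they can NOT be initial states); also, $\sum_{i=1}^{N} \pi_i = 1$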

A first-order hidden Markov model instantiates two simplifying assumptions

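Markov assumption: the probability of a particular state depends only on the previous state: $P(q_i \mid q_1 \ldots q_{i-1}) = P(q_i \mid q_{i-1})$

Output independence: the probability of an output observation $o_i$ depends only on the state $q_i$ that produced the observation and NOT on any other states or any other observations: $P(o_i \mid q_1 \ldots q_i \ldots q_T, o_1 \ldots o_i \ldots o_T) = P(o_i \mid q_i)$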
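Under the two assumptions above, the joint probability of a hidden state sequence and an observation sequence factorizes into one start (or transition) term and one emission term per position: $P(O, Q) = \pi_{q_1} b_{q_1}(o_1) \prod_{t=2}^{T} a_{q_{t-1} q_t}\, b_{q_t}(o_t)$. A minimal sketch with a hypothetical two-state HMM whose numbers are made up for illustration:

```python
# Hypothetical two-state HMM; all numbers are illustrative only.
PI = {"N": 0.6, "V": 0.4}                           # initial distribution pi
A = {"N": {"N": 0.3, "V": 0.7},                     # transitions a_ij
     "V": {"N": 0.8, "V": 0.2}}
B = {"N": {"dogs": 0.5, "bark": 0.1, "fish": 0.4},  # emissions b_j(o)
     "V": {"dogs": 0.1, "bark": 0.6, "fish": 0.3}}

def joint_probability(observations, states, pi=PI, a=A, b=B):
    """P(O, Q) = pi[q1] * b_q1(o1) * prod_{t>=2} a[q_{t-1}][q_t] * b_qt(o_t)."""
    prob = pi[states[0]] * b[states[0]][observations[0]]
    for t in range(1, len(observations)):
        # Markov assumption: the transition looks only at the previous state.
        # Output independence: o_t depends only on the state q_t.
        prob *= a[states[t - 1]][states[t]] * b[states[t]][observations[t]]
    return prob

print(joint_probability(["dogs", "bark"], ["N", "V"]))
```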

Components of HMM tagger

An HMM has two components, the $A$ and $B$ probabilities

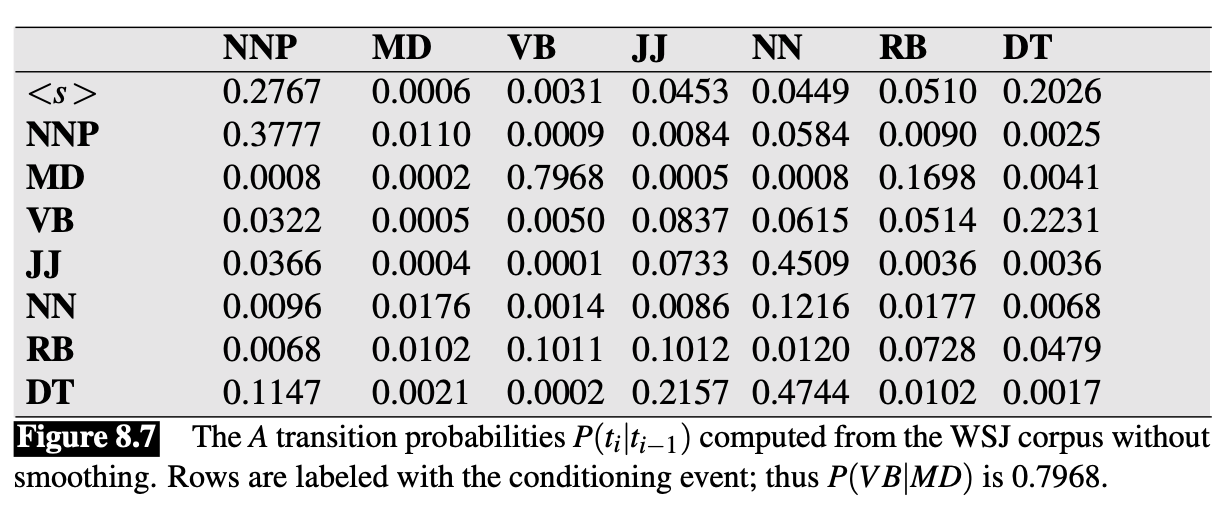

The A probabilities

The $A$ matrix contains the tag transition probabilities $P(t_i \mid t_{i-1})$, which represent the probability of a tag occurring given the previous tag.

- E.g., modal verbs like will are very likely to be followed by a verb in the base form, a VB, like race, so we expect this probability to be high.

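We compute the maximum likelihood estimate of this transition probability by counting, out of the times we see the first tag in a labeled corpus, how often the first tag is followed by the second: $P(t_i \mid t_{i-1}) = \frac{C(t_{i-1}, t_i)}{C(t_{i-1})}$

- For example, in the WSJ corpus, MD occurs 13124 times, of which it is followed by VB 10471 times. Therefore, the MLE estimate is $P(\text{VB} \mid \text{MD}) = \frac{C(\text{MD}, \text{VB})}{C(\text{MD})} = \frac{10471}{13124} \approx .80$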

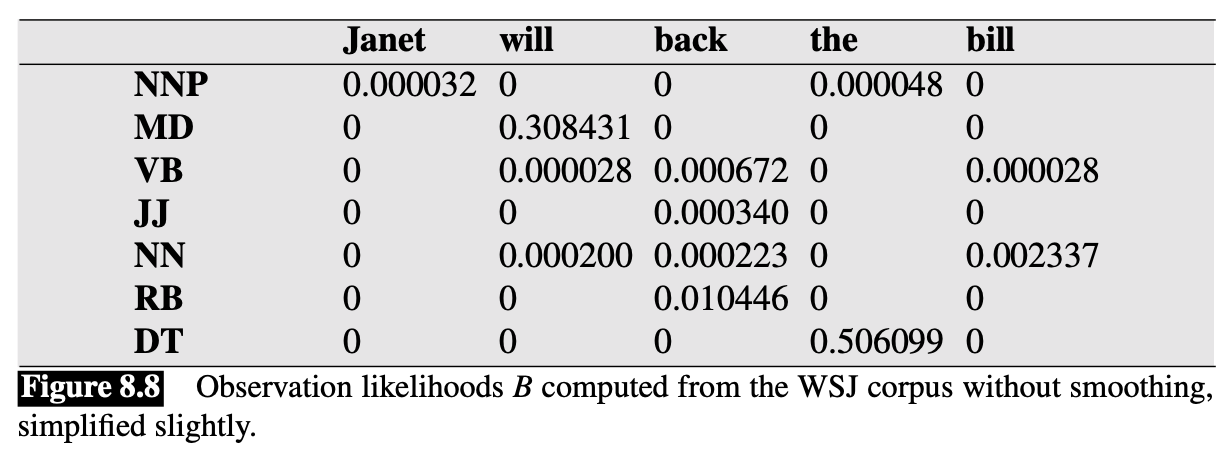
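The counting recipe can be sketched as follows, assuming the training corpus is a list of sentences of `(word, tag)` pairs and that a start symbol `<s>` is prepended to each sentence; the toy corpus is hypothetical. The last line repeats the WSJ arithmetic from the text.

```python
from collections import Counter

def transition_mle(tagged_sents, start="<s>"):
    """P(t_i | t_{i-1}) = C(t_{i-1}, t_i) / C(t_{i-1}), counted over tag bigrams."""
    prev_counts, bigram_counts = Counter(), Counter()
    for sent in tagged_sents:
        tags = [start] + [tag for _, tag in sent]
        for prev, cur in zip(tags, tags[1:]):
            prev_counts[prev] += 1            # C(t_{i-1}), counted where it has a successor
            bigram_counts[(prev, cur)] += 1   # C(t_{i-1}, t_i)
    return {(p, c): n / prev_counts[p] for (p, c), n in bigram_counts.items()}

corpus = [  # hypothetical toy corpus
    [("Janet", "NNP"), ("will", "MD"), ("back", "VB"), ("the", "DT"), ("bill", "NN")],
    [("she", "PRP"), ("will", "MD"), ("race", "VB")],
    [("it", "PRP"), ("will", "MD"), ("rain", "VB")],
]
probs = transition_mle(corpus)
print(probs[("MD", "VB")])        # every MD in this toy corpus is followed by VB
print(round(10471 / 13124, 2))    # WSJ counts from the text
```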

The B probabilities

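The emission probabilities, $B$, represent the probability $P(w_i \mid t_i)$, given a tag (say MD), that it will be associated with a given word (say will). The MLE of the emission probability is $P(w_i \mid t_i) = \frac{C(t_i, w_i)}{C(t_i)}$

- E.g.: Of the 13124 occurrences of MD in the WSJ corpus, it is associated with will 4046 times: $P(\textit{will} \mid \text{MD}) = \frac{C(\text{MD}, \textit{will})}{C(\text{MD})} = \frac{4046}{13124} \approx .31$

Note that this likelihood term is NOT asking “which is the most likely tag for the word will?” That would be the posterior $P(\text{MD} \mid \textit{will})$. Instead, $P(\textit{will} \mid \text{MD})$ answers the question “If we were going to generate an MD, how likely is it that this modal would be will?”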
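A minimal sketch of the emission MLE, plus the posterior it should not be confused with, on a hypothetical toy corpus of `(word, tag)` pairs:

```python
from collections import Counter

def emission_mle(tagged_sents):
    """P(w_i | t_i) = C(t_i, w_i) / C(t_i)."""
    tag_counts, pair_counts = Counter(), Counter()
    for sent in tagged_sents:
        for word, tag in sent:
            tag_counts[tag] += 1            # C(t_i)
            pair_counts[(tag, word)] += 1   # C(t_i, w_i)
    return {(t, w): n / tag_counts[t] for (t, w), n in pair_counts.items()}

def posterior(tagged_sents, word, tag):
    """P(tag | word) = C(tag, word) / C(word): the posterior, NOT the emission."""
    tags_of_word = Counter(t for sent in tagged_sents for w, t in sent if w == word)
    return tags_of_word[tag] / sum(tags_of_word.values())

corpus = [  # hypothetical toy corpus
    [("she", "PRP"), ("will", "MD"), ("race", "VB")],
    [("he", "PRP"), ("can", "MD"), ("race", "VB")],
    [("they", "PRP"), ("will", "MD"), ("win", "VB")],
]
b = emission_mle(corpus)
print(b[("MD", "will")])                # likelihood: 2 of the 3 MDs are "will"
print(posterior(corpus, "will", "MD"))  # posterior: every "will" is tagged MD
print(round(4046 / 13124, 2))           # WSJ counts from the text
```

The two numbers differ because they normalize by different counts: the likelihood divides by how often the tag occurs, the posterior by how often the word occurs.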

Example: three-state HMM POS tagger

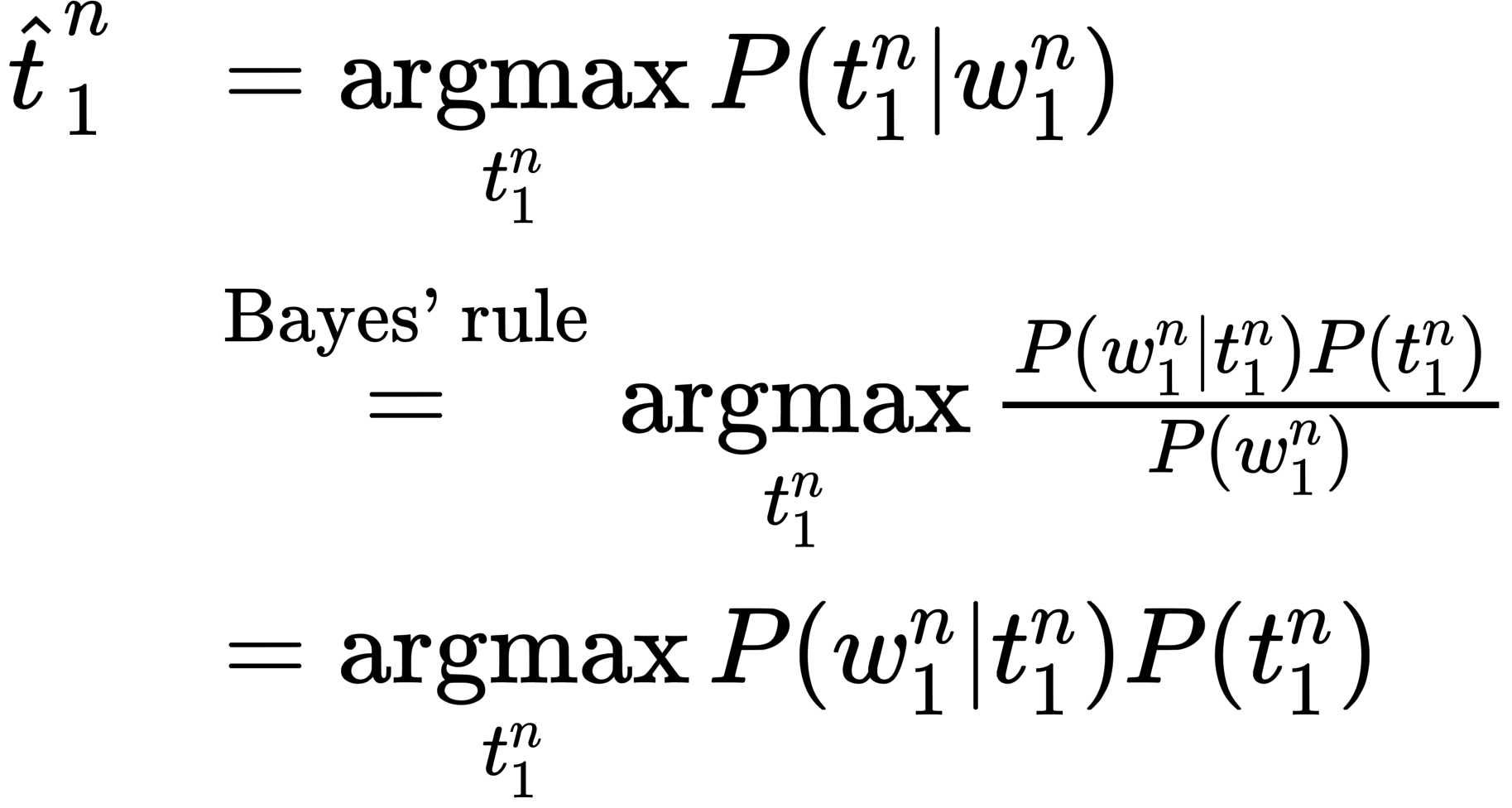

HMM tagging as decoding

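Decoding: Given as input an HMM $\lambda = (A, B)$ and a sequence of observations $O = o_1, o_2, \ldots, o_T$, find the most probable sequence of states $Q = q_1 q_2 q_3 \ldots q_T$

🎯 For part-of-speech tagging, the goal of HMM decoding is to choose the tag sequence $t_1^n$ that is most probable given the observation sequence of $n$ words $w_1^n$: $\hat{t}_1^n = \arg\max_{t_1^n} P(t_1^n \mid w_1^n)$. By Bayes' rule, and dropping the denominator $P(w_1^n)$ because it is the same for every candidate tag sequence, this becomes $\hat{t}_1^n = \arg\max_{t_1^n} P(w_1^n \mid t_1^n)\, P(t_1^n)$

HMM taggers make two simplifying assumptions:

the probability of a word appearing depends only on its own tag and is independent of neighboring words and tags: $P(w_1^n \mid t_1^n) \approx \prod_{i=1}^{n} P(w_i \mid t_i)$

the probability of a tag is dependent only on the previous tag, rather than the entire tag sequence (the bigram assumption): $P(t_1^n) \approx \prod_{i=1}^{n} P(t_i \mid t_{i-1})$

Combining these two assumptions, the most probable tag sequence from a bigram tagger is: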

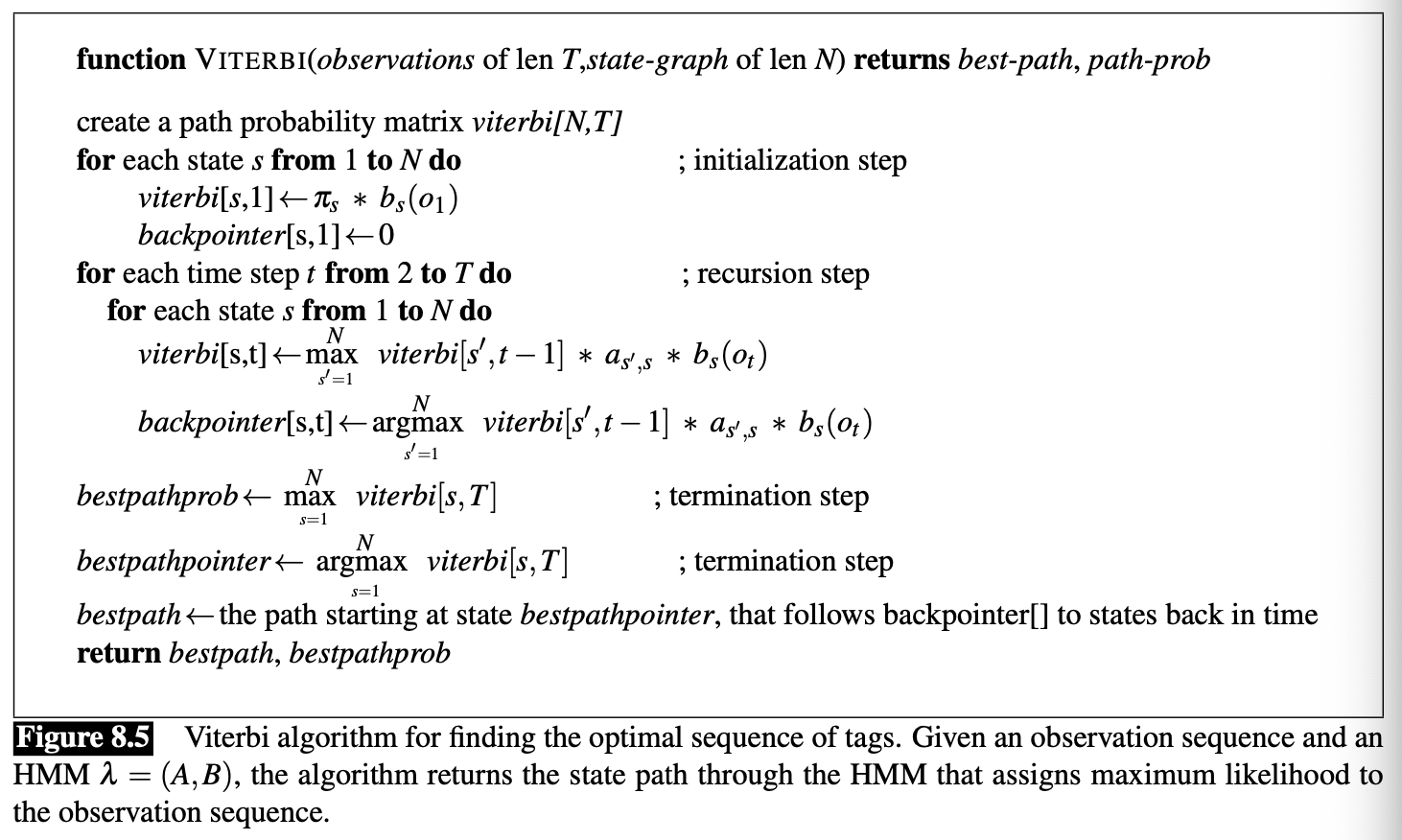
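To make the objective concrete, the sketch below scores every possible tag sequence with the bigram product $\prod_i P(w_i \mid t_i)\, P(t_i \mid t_{i-1})$ and keeps the best one. The two-tag toy HMM is hypothetical, and enumerating all $N^T$ sequences is exponential in sentence length, which is exactly the cost the Viterbi algorithm avoids.

```python
from itertools import product

TAGS = ["N", "V"]
# Hypothetical toy parameters; "<s>" is the start state (t_0).
A = {"<s>": {"N": 0.6, "V": 0.4},
     "N": {"N": 0.3, "V": 0.7},
     "V": {"N": 0.8, "V": 0.2}}
B = {"N": {"dogs": 0.5, "bark": 0.1, "fish": 0.4},
     "V": {"dogs": 0.1, "bark": 0.6, "fish": 0.3}}

def score(words, tags):
    """prod_i P(w_i | t_i) * P(t_i | t_{i-1}), with t_0 = <s>."""
    prob, prev = 1.0, "<s>"
    for w, t in zip(words, tags):
        prob *= B[t].get(w, 0.0) * A[prev][t]
        prev = t
    return prob

def brute_force_decode(words):
    """argmax over all N**T tag sequences (exponential; illustration only)."""
    return max(product(TAGS, repeat=len(words)), key=lambda tags: score(words, tags))

print(brute_force_decode(["dogs", "bark"]))
```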

The Viterbi Algorithm

The Viterbi algorithm:

- Decoding algorithm for HMMs

- An instance of dynamic programming

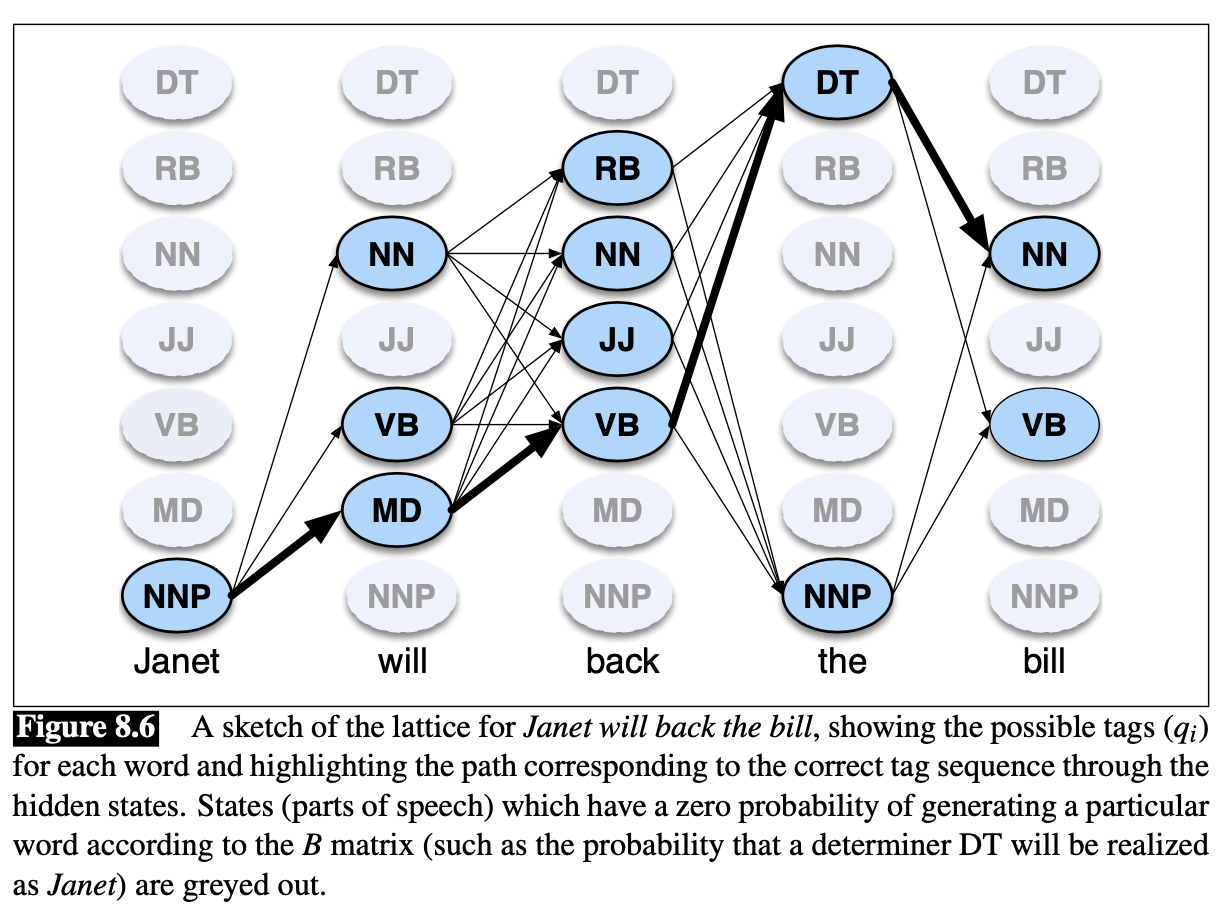

The Viterbi algorithm first sets up a probability matrix or lattice

One column for each observation

One row for each state in the state graph

Each column has a cell for each state $q_i$ in the single combined automaton

Each cell of the lattice, $v_t(j)$,

represents the probability that the HMM is in state $j$ after seeing the first $t$ observations and passing through the most probable state sequence $q_1, \ldots, q_{t-1}$, given the HMM $\lambda$

The value of each cell is computed by recursively taking the most probable path that could lead us to this cell

Represent the most probable path by taking the maximum over all possible previous state sequences: $v_t(j) = \max_{q_1, \ldots, q_{t-1}} P(q_1 \ldots q_{t-1}, o_1, o_2 \ldots o_t, q_t = j \mid \lambda)$

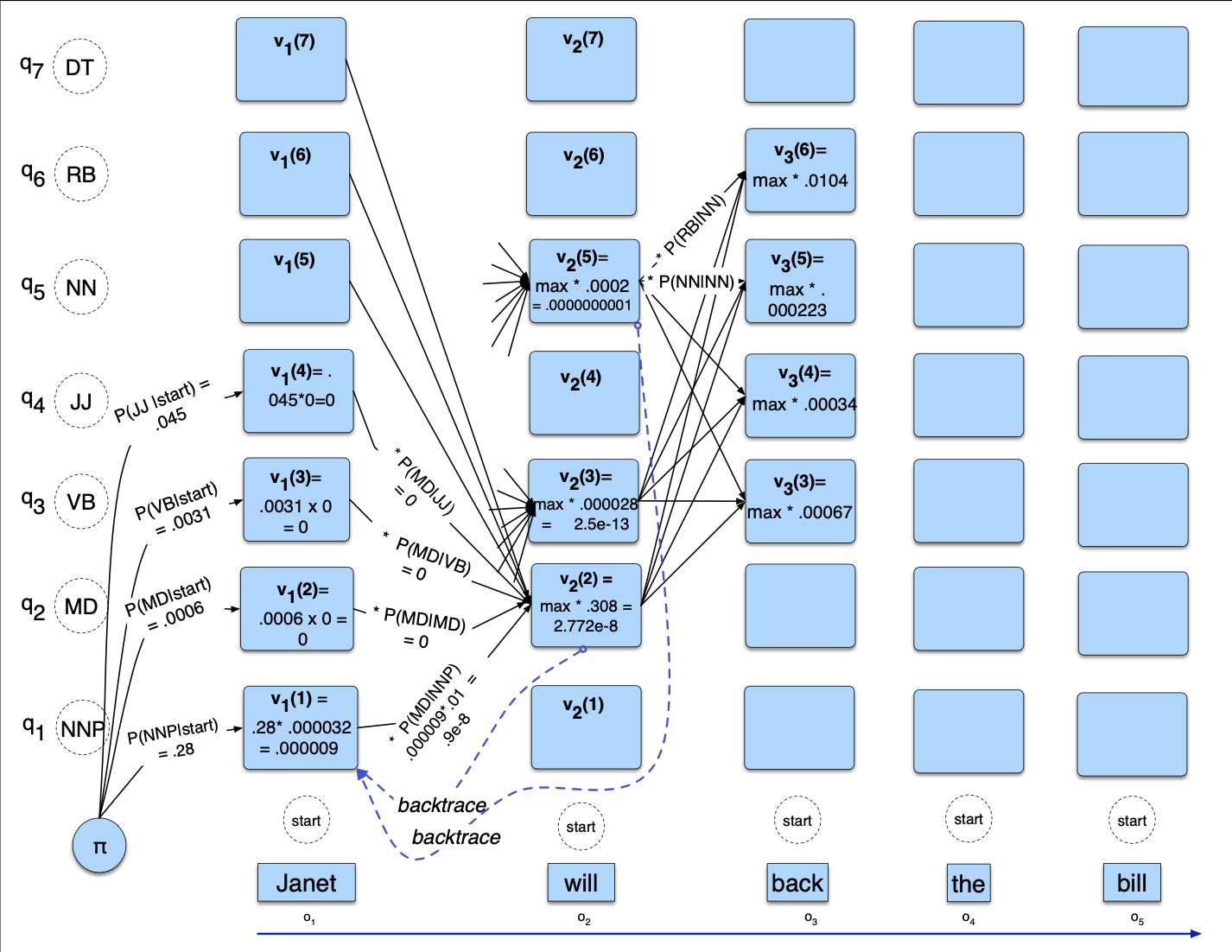

Viterbi fills each cell recursively (like other dynamic programming algorithms)

Given that we had already computed the probability of being in every state at time $t-1$, we compute the Viterbi probability by taking the most probable of the extensions of the paths that lead to the current cell.

For a given state $q_j$ at time $t$, the value $v_t(j)$ is computed as $v_t(j) = \max_{i=1}^{N} v_{t-1}(i)\, a_{ij}\, b_j(o_t)$

- $v_{t-1}(i)$: the previous Viterbi path probability from the previous time step

- $a_{ij}$: the transition probability from previous state $q_i$ to current state $q_j$

- $b_j(o_t)$: the state observation likelihood of the observation symbol $o_t$ given the current state $j$
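The recursion $v_t(j) = \max_i v_{t-1}(i)\, a_{ij}\, b_j(o_t)$ translates almost line by line into code. Below is a minimal Viterbi decoder with backpointers; the two-tag toy HMM is hypothetical, and the `<s>` row of `A` plays the role of the initial distribution $\pi$.

```python
# Hypothetical toy HMM; "<s>" row = initial distribution pi.
TAGS = ["N", "V"]
A = {"<s>": {"N": 0.6, "V": 0.4},
     "N": {"N": 0.3, "V": 0.7},
     "V": {"N": 0.8, "V": 0.2}}
B = {"N": {"dogs": 0.5, "bark": 0.1, "fish": 0.4},
     "V": {"dogs": 0.1, "bark": 0.6, "fish": 0.3}}

def viterbi(words, tags=TAGS, a=A, b=B):
    """Return (best tag sequence, its probability) for the observed words."""
    # Initialization: v_1(j) = pi_j * b_j(o_1)
    v = [{j: a["<s>"][j] * b[j].get(words[0], 0.0) for j in tags}]
    backptr = [{j: None for j in tags}]
    # Recursion: v_t(j) = max_i v_{t-1}(i) * a_ij * b_j(o_t)
    for t in range(1, len(words)):
        v.append({})
        backptr.append({})
        for j in tags:
            # b_j(o_t) does not depend on i, so the argmax only needs v * a.
            best_i = max(tags, key=lambda i: v[t - 1][i] * a[i][j])
            v[t][j] = v[t - 1][best_i] * a[best_i][j] * b[j].get(words[t], 0.0)
            backptr[t][j] = best_i
    # Termination: best final state, then follow the backpointers leftwards.
    last = max(tags, key=lambda j: v[-1][j])
    path = [last]
    for t in range(len(words) - 1, 0, -1):
        path.append(backptr[t][path[-1]])
    return list(reversed(path)), v[-1][last]

print(viterbi(["dogs", "bark", "fish"]))
```

Multiplying raw probabilities underflows on long sentences, so practical implementations usually add log probabilities instead; that change is left out here for readability.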

Example 1

Tag the sentence “Janet will back the bill”

🎯 Goal: correct series of tags (Janet/NNP will/MD back/VB the/DT bill/NN)

HMM is defined by two tables

- 👆 $A$: lists the probabilities for transitioning between the hidden states (POS tags)

- 👆 $B$: expresses the probabilities, the observation likelihoods of words given tags

- This table is (slightly simplified) from counts in the WSJ corpus

Computation:

- There are 5 columns, one per word, and each column has a cell for each of the 7 tags

- Begin in column 1 (for the word Janet) by setting the Viterbi value in each cell to the product of

- the transition probability (the start probability for that state $i$, which we get from the <s> entry), and

- the observation likelihood of the word Janet given the tag for that cell

- Most of the cells in the column are zero since the word Janet cannot be any of those tags (see Figure 8.8 above)

- Next, each cell in the will column gets updated

- For each state, we compute the value $v_2(j)$ by taking the maximum over the extensions of all the paths from the previous column that lead to the current cell

- Each cell gets the max of the 7 values from the previous column, multiplied by the appropriate transition probability

- In this case, most of the values from the previous column are zero

- The remaining value is multiplied by the relevant observation probability, and the (trivial) max is taken. (In this case the final value, 2.772e-8, comes from the NNP state at the previous column.)
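Written out with the recursion $v_t(j) = \max_i v_{t-1}(i)\, a_{ij}\, b_j(o_t)$, the MD cell of the *will* column reduces to a single product, because $v_1(i) = 0$ for every tag other than NNP (the individual factors come from the $A$ and $B$ tables):

$$
\begin{aligned}
v_2(\text{MD}) &= \max_{i} \; v_1(i)\, a_{i,\text{MD}}\, b_{\text{MD}}(\textit{will}) \\
&= v_1(\text{NNP})\, a_{\text{NNP},\text{MD}}\, b_{\text{MD}}(\textit{will}) = 2.772 \times 10^{-8}
\end{aligned}
$$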

Example 2

HMM : Viterbi algorithm - a toy example 👍

Extending the HMM Algorithm to Trigrams

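In the simple HMM model described above, the probability of a tag depends only on the previous tag: $P(t_1^n) \approx \prod_{i=1}^{n} P(t_i \mid t_{i-1})$

In practice we use more of the history, letting the probability of a tag depend on the two previous tags: $P(t_1^n) \approx \prod_{i=1}^{n} P(t_i \mid t_{i-1}, t_{i-2})$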

- Small increase in performance (perhaps a half point)

- But conditioning on two previous tags instead of one requires a significant change to the Viterbi algorithm 🤪

- For each cell, instead of taking a max over transitions from each cell in the previous column, we have to take a max over paths through the cells in the previous two columns

- thus considering $N^2$ rather than $N$ hidden states at every observation.
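A minimal sketch of the second-order change: each lattice cell is now a tag pair $(t_{i-1}, t_i)$, a column has $N^2$ cells, and each cell takes a max over the $N$ possible values of $t_{i-2}$. The callables `p_trans(t2, t1, t)` $= P(t_i \mid t_{i-2}, t_{i-1})$ and `p_emit(t, w)` $= P(w_i \mid t_i)$ are hypothetical interfaces, a boundary tag `<s>` pads the left edge, and any end-of-sentence term is omitted.

```python
from itertools import product

def trigram_viterbi(words, tags, p_trans, p_emit, bos="<s>"):
    """Second-order Viterbi: each lattice cell is a tag pair (t_{i-1}, t_i).

    p_trans(t2, t1, t) is assumed to return P(t | t2, t1) and
    p_emit(t, w) to return P(w | t); bos pads the left edge of the sentence.
    """
    # Column 1: the history is (bos, bos), so the live cells are (bos, t).
    v = {(bos, t): p_trans(bos, bos, t) * p_emit(t, words[0]) for t in tags}
    backptrs = []
    for w in words[1:]:
        new_v, bp = {}, {}
        for u, t in product(tags, tags):
            # Max over t_{i-2}: paths through the cells of the previous two columns.
            best, s_best = max((v[(s, u2)] * p_trans(s, u2, t), s)
                               for (s, u2) in v if u2 == u)
            new_v[(u, t)] = best * p_emit(t, w)
            bp[(u, t)] = s_best
        v = new_v
        backptrs.append(bp)
    best_pair = max(v, key=v.get)
    path = list(best_pair)
    for bp in reversed(backptrs):
        path.insert(0, bp[(path[0], path[1])])  # prepend t_{i-2}
    return path[1:], v[best_pair]               # drop the leading bos

# Hypothetical toy model: this P(t | t2, t1) ignores t2, so it behaves like a bigram HMM.
A = {"<s>": {"N": 0.6, "V": 0.4}, "N": {"N": 0.3, "V": 0.7}, "V": {"N": 0.8, "V": 0.2}}
B = {"N": {"dogs": 0.5, "bark": 0.1, "fish": 0.4}, "V": {"dogs": 0.1, "bark": 0.6, "fish": 0.3}}
path, prob = trigram_viterbi(["dogs", "bark", "fish"], ["N", "V"],
                             p_trans=lambda t2, t1, t: A[t1][t],
                             p_emit=lambda t, w: B[t].get(w, 0.0))
print(path, prob)
```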

In addition to increasing the context window, HMM taggers have a number of other advanced features

Let the tagger know the location of the end of the sentence by adding dependence on an end-of-sequence marker for $t_{n+1}$: $\hat{t}_1^n = \arg\max_{t_1^n} \left[\prod_{i=1}^{n} P(w_i \mid t_i)\, P(t_i \mid t_{i-1}, t_{i-2})\right] P(t_{n+1} \mid t_n)$

- Three of the tags ($t_{-1}$, $t_0$, $t_{n+1}$) used in the context will fall off the edge of the sentence, and hence will not match regular words

- These tags can all be set to a single special ‘sentence boundary’ tag that is added to the tagset, which assumes sentence boundaries have already been marked.

🔴 Problem with trigram taggers: data sparsity

Any particular sequence of tags that occurs in the test set may simply never have occurred in the training set.

Therefore we can NOT compute the tag trigram probability just by the maximum likelihood estimate from counts, following $P(t_i \mid t_{i-1}, t_{i-2}) = \frac{C(t_{i-2}, t_{i-1}, t_i)}{C(t_{i-2}, t_{i-1})}$

- Many of these counts will be zero in any training set, and we will incorrectly predict that a given tag sequence will never occur!

We need a way to estimate $P(t_i \mid t_{i-1}, t_{i-2})$ even if the sequence $t_{i-2}, t_{i-1}, t_i$ never occurs in the training data

Standard approach: estimate the probability by combining more robust, but weaker estimators.

E.g., if we’ve never seen the tag sequence PRP VB TO, and so can’t compute $P(\text{TO} \mid \text{PRP}, \text{VB})$ from this frequency, we could still rely on the bigram probability $P(\text{TO} \mid \text{VB})$, or even the unigram probability $P(\text{TO})$.

The maximum likelihood estimation of each of these probabilities can be computed from a corpus with the following counts:

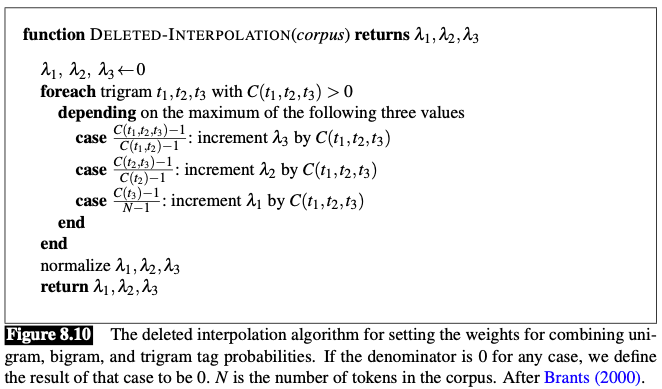
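The three relative-frequency estimates can be sketched as follows, assuming the training data is flattened into one tag sequence (the sequence below is hypothetical). Querying the unseen trigram PRP VB TO shows the sparsity problem: the trigram estimate is 0 while the bigram and unigram estimates are not.

```python
from collections import Counter

def ngram_counts(tags):
    """Unigram, bigram and trigram counts over one tag sequence."""
    uni = Counter(tags)
    bi = Counter(zip(tags, tags[1:]))
    tri = Counter(zip(tags, tags[1:], tags[2:]))
    return uni, bi, tri

def mle_estimates(t2, t1, t, uni, bi, tri):
    """Return (P^(t | t2, t1), P^(t | t1), P^(t)) as plain relative frequencies."""
    p_tri = tri[(t2, t1, t)] / bi[(t2, t1)] if bi[(t2, t1)] else 0.0
    p_bi = bi[(t1, t)] / uni[t1] if uni[t1] else 0.0
    p_uni = uni[t] / sum(uni.values())
    return p_tri, p_bi, p_uni

tags = "PRP VB DT NN NN VB TO VB DT NN".split()  # hypothetical training tags
uni, bi, tri = ngram_counts(tags)
print(mle_estimates("PRP", "VB", "TO", uni, bi, tri))
```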

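We use linear interpolation to combine these three estimators to estimate the trigram probability $P(t_i \mid t_{i-1}, t_{i-2})$. We estimate the probability by a weighted sum of the unigram, bigram, and trigram probabilities: $P(t_i \mid t_{i-1}, t_{i-2}) = \lambda_3 \hat{P}(t_i \mid t_{i-1}, t_{i-2}) + \lambda_2 \hat{P}(t_i \mid t_{i-1}) + \lambda_1 \hat{P}(t_i)$, with $\lambda_1 + \lambda_2 + \lambda_3 = 1$

- The $\lambda$s are set by deleted interpolation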

- successively delete each trigram from the training corpus and choose the λs so as to maximize the likelihood of the rest of the corpus.

- helps to set the λs in such a way as to generalize to unseen data and not overfit 👍

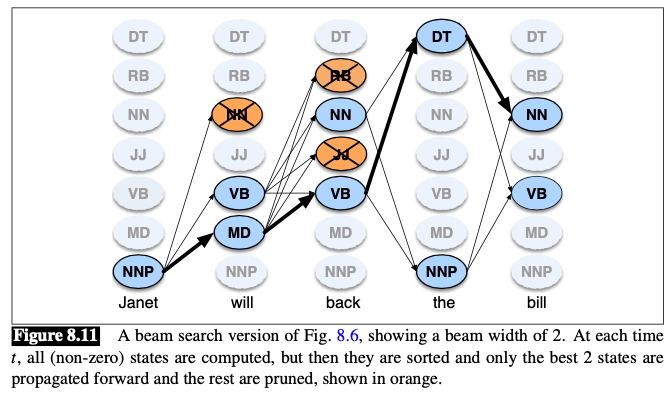
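One common count-based version of deleted interpolation (the variant used in Brants' TnT tagger) can be sketched as below; it is an assumption that this matches the procedure in the figure. Instead of literally re-training with each trigram removed, it subtracts 1 from the relevant counts to simulate deleting that occurrence, then credits the trigram's count to whichever estimator predicts it best. The tag sequence is hypothetical, and the unigram fallback for empty input is an added assumption.

```python
from collections import Counter

def counts(tags):
    return Counter(tags), Counter(zip(tags, tags[1:])), Counter(zip(tags, tags[1:], tags[2:]))

def deleted_interpolation(tags):
    """Return (lambda1, lambda2, lambda3) for the unigram, bigram, trigram estimates."""
    uni, bi, tri = counts(tags)
    n = len(tags)

    def ratio(num, den):
        # The "-1"s remove the current trigram occurrence; guard against 0/0.
        return (num - 1) / (den - 1) if den > 1 else 0.0

    lam = [0.0, 0.0, 0.0]  # index 0: unigram, 1: bigram, 2: trigram
    for (t1, t2, t3), c in tri.items():
        scores = [ratio(uni[t3], n), ratio(bi[(t2, t3)], uni[t2]), ratio(c, bi[(t1, t2)])]
        lam[scores.index(max(scores))] += c  # credit the best-predicting estimator
    total = sum(lam)
    if not total:
        return (1.0, 0.0, 0.0)  # assumption: no trigrams at all, fall back to unigrams
    return tuple(x / total for x in lam)

def interpolated_prob(t1, t2, t3, tags, lam):
    """P(t3 | t1, t2) = l3 * P^(t3 | t1, t2) + l2 * P^(t3 | t2) + l1 * P^(t3)."""
    uni, bi, tri = counts(tags)
    p1 = uni[t3] / len(tags)
    p2 = bi[(t2, t3)] / uni[t2] if uni[t2] else 0.0
    p3 = tri[(t1, t2, t3)] / bi[(t1, t2)] if bi[(t1, t2)] else 0.0
    l1, l2, l3 = lam
    return l3 * p3 + l2 * p2 + l1 * p1

tags = "PRP VB DT NN NN VB TO VB DT NN".split()  # hypothetical training tags
lam = deleted_interpolation(tags)
print(lam, interpolated_prob("PRP", "VB", "TO", tags, lam))
```

On a sequence this small the weights say little about real data; the point is that the unseen trigram PRP VB TO now gets a non-zero probability.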

Beam Search

Problem with the vanilla Viterbi algorithm

- Slow when the number of states grows very large

- Complexity: $O(N^2 T)$

- $N$: number of states ($T$: number of observations)

- $N$ can be large for trigram taggers

- E.g.: considering every previous pair of the 45 tags results in $45^3 = 91{,}125$ computations per column!!! 😱

- $N$ can be even larger for other applications of Viterbi (e.g., decoding in neural networks)

🔧 Common solution: beam search decoding

💡 Instead of keeping the entire column of states at each time point $t$, we just keep the best few hypotheses at that point.

At time $t$:

- Compute the Viterbi score for each of the $N$ cells

- Sort the scores

- Keep only the best-scoring states. The rest are pruned out and NOT continued forward to time $t+1$

Implementation

Keep a fixed number of states $\beta$ (the beam width) instead of all $N$ current states

Alternatively, $\beta$ can be modeled as a fixed percentage of the $N$ states, or as a probability threshold
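A minimal sketch of beam-pruned Viterbi with a fixed beam width (the `beam_width` parameter); the toy HMM is hypothetical. After each column is scored, only the `beam_width` best states survive, and only survivors are extended at the next step.

```python
# Hypothetical toy HMM; "<s>" row = initial distribution.
TAGS = ["N", "V"]
A = {"<s>": {"N": 0.6, "V": 0.4},
     "N": {"N": 0.3, "V": 0.7},
     "V": {"N": 0.8, "V": 0.2}}
B = {"N": {"dogs": 0.5, "bark": 0.1, "fish": 0.4},
     "V": {"dogs": 0.1, "bark": 0.6, "fish": 0.3}}

def prune(column, beam_width):
    """Sort the column's scores and keep only the beam_width best states."""
    best = sorted(column, key=column.get, reverse=True)[:beam_width]
    return {state: column[state] for state in best}

def beam_viterbi(words, beam_width, tags=TAGS, a=A, b=B):
    col = prune({j: a["<s>"][j] * b[j].get(words[0], 0.0) for j in tags}, beam_width)
    backptrs = []
    for w in words[1:]:
        scores, bp = {}, {}
        for j in tags:
            # Extend only the states that survived pruning at time t-1.
            i = max(col, key=lambda s: col[s] * a[s][j])
            scores[j] = col[i] * a[i][j] * b[j].get(w, 0.0)
            bp[j] = i
        col = prune(scores, beam_width)
        backptrs.append(bp)
    last = max(col, key=col.get)
    path = [last]
    for bp in reversed(backptrs):
        path.append(bp[path[-1]])
    return list(reversed(path)), col[last]

print(beam_viterbi(["dogs", "bark", "fish"], beam_width=1))
```

With `beam_width` equal to the number of states nothing is pruned and this is ordinary Viterbi; smaller beams trade exactness for speed, since the best path can be pruned early.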

Unknown Words

One useful feature for distinguishing parts of speech is word shape

- words starting with capital letters are likely to be proper nouns (NNP).

The strongest source of information for guessing the part-of-speech of unknown words: morphology

Words ending in -s tend to be plural nouns (NNS)

Words ending with -ed tend to be past participles (VBN)

Words ending with -able tend to be adjectives (JJ)

…

For each suffix of up to 10 letters, we store the statistics of the tags it was associated with in training. We are thus computing, for each suffix of length $i$, the probability of the tag $t_i$ given the suffix letters, $P(t_i \mid l_{n-i+1} \ldots l_n)$

Back-off is used to smooth these probabilities with successively shorter suffixes.
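A minimal sketch of suffix statistics with back-off to shorter suffixes. The smoothing rule is an assumption: each longer suffix's relative frequency is interpolated with the already-smoothed estimate of the next-shorter suffix, $P(t \mid l_{n-i+1} \ldots l_n) = \frac{\hat{P}(t \mid l_{n-i+1} \ldots l_n) + \theta\, P(t \mid l_{n-i+2} \ldots l_n)}{1 + \theta}$, starting from the plain tag prior. The fixed `theta` and the toy training words are hypothetical.

```python
from collections import Counter, defaultdict

MAX_SUFFIX = 10  # suffixes of up to 10 letters, as in the text

def suffix_tag_counts(tagged_words, max_len=MAX_SUFFIX):
    """counts[suffix][tag] for every suffix (including the empty one) of each training word."""
    counts = defaultdict(Counter)
    for word, tag in tagged_words:
        for i in range(min(len(word), max_len) + 1):
            counts[word[len(word) - i:]][tag] += 1
    return counts

def suffix_tag_prob(word, tag, counts, theta=0.5, max_len=MAX_SUFFIX):
    """P(tag | last i letters), smoothed recursively with the next-shorter suffix.

    theta is a hypothetical fixed smoothing weight.
    """
    prob = counts[""][tag] / sum(counts[""].values())  # i = 0: plain tag prior P(t)
    for i in range(1, min(len(word), max_len) + 1):
        suffix_counts = counts.get(word[len(word) - i:])
        if not suffix_counts:
            break  # no training word has this suffix, so no longer suffix is seen either
        mle = suffix_counts[tag] / sum(suffix_counts.values())
        prob = (mle + theta * prob) / (1 + theta)
    return prob

train = [("walked", "VBD"), ("talked", "VBN"), ("jumped", "VBD"), ("table", "NN"),
         ("readable", "JJ"), ("cats", "NNS"), ("dogs", "NNS")]  # hypothetical
c = suffix_tag_counts(train)
print(suffix_tag_prob("blogs", "NNS", c), suffix_tag_prob("blogs", "VBD", c))
```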

Because unknown words are unlikely to be closed-class words (like prepositions), suffix probabilities can be computed only for words whose training-set frequency is $\le 10$, or only for open-class words.

Because $P(t_i \mid l_{n-i+1} \ldots l_n)$ gives a posterior estimate $P(t_i \mid w_i)$, we can compute the likelihood $P(w_i \mid t_i)$ that HMMs require by using Bayesian inversion, i.e., Bayes' rule $P(w_i \mid t_i) = \frac{P(t_i \mid w_i)\, P(w_i)}{P(t_i)}$, which needs the two priors $P(t_i)$ and $P(w_i)$.
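The inversion itself is one line of Bayes' rule. In this sketch all three input probabilities are hypothetical numbers chosen for illustration (e.g., the posterior could come from a suffix model).

```python
def emission_from_posterior(p_tag_given_word, p_word, p_tag):
    """Bayes' rule: P(w | t) = P(t | w) * P(w) / P(t)."""
    return p_tag_given_word * p_word / p_tag

# Hypothetical values: P(NNS | w) from a suffix model, a small P(w), and the tag prior P(NNS).
print(emission_from_posterior(p_tag_given_word=0.9, p_word=1e-6, p_tag=0.06))
```

Because $P(w_i)$ is the same for every candidate tag of a given word, it scales all cells of that Viterbi column equally and does not change which tag sequence wins.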