Big O Notation

Definition

In general, a better algorithm means a FASTER algorithm. In other words, it costs less runtime or it has lower time complexity.

Big O notation is one of the most fundamental tools to analyze the cost of an algorithm. It is about finding an asymptotic upper bound of the time complexity of an algorithm. I.e., Big O notation is about the worst-case scenario.

Basically, it answers the question: “How the runtime of an algorithm grows as the input grow?”

Rule of Thumbs

Different steps get added

Example

def func():

step1() # O(a)

step2() # O(b)

Overall complexity is

Constants do NOT matter

Constant are inconsequential, when input size is getting massively huge.

Example

Different inputs different variables

Example

def intersection_size(list_A, list_B):

# Assumes that len(list_A) is lenA, len(len_listB) is lenB

count = 0

for a in list_A:

for b in list_B:

if a == b:

count += 1

return count

Overall complexity is

Smaller terms do NOT matter

Smaller terms are non-dominant, when input size is getting massively huge.

Example

Shorthands

Arithmetric operations are constant

Variable assignment is constant

Accessing elements in an array (by index) or object (by key) is constant

In a loop:

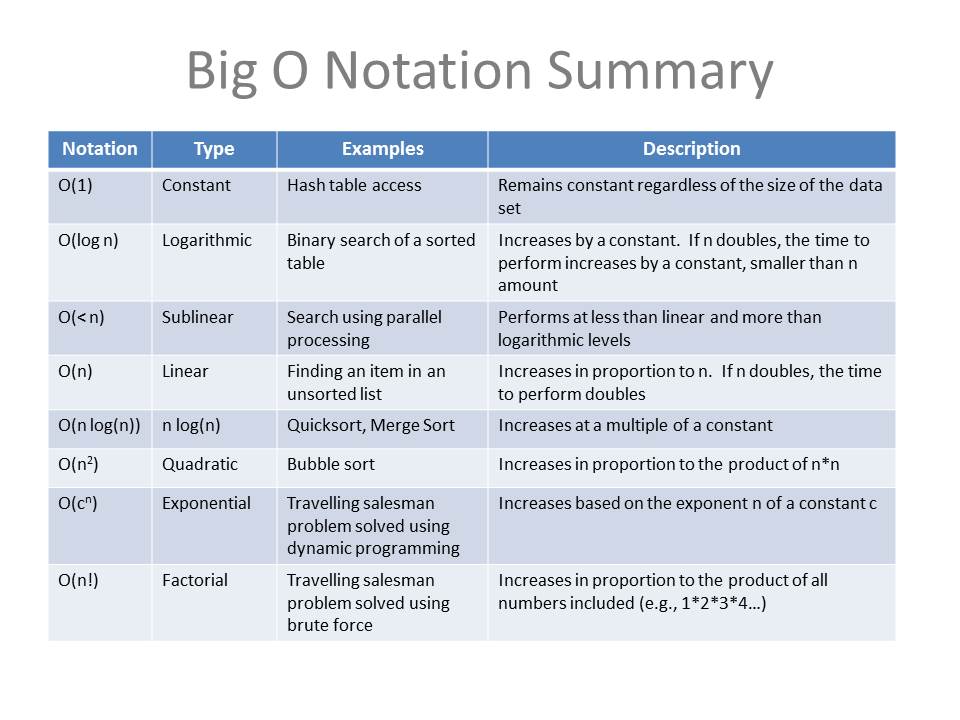

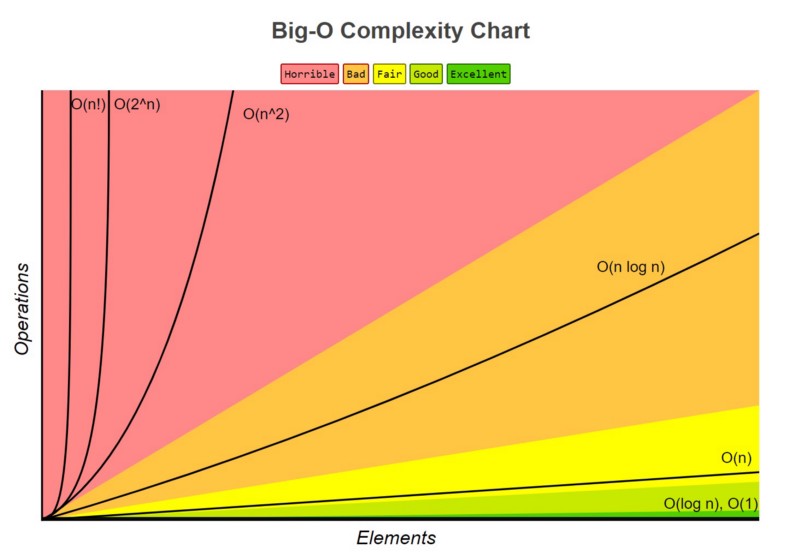

Summary of Complexity Classes