Extended Kalman Filter

Motivation

Truly linear systems rarely exist in reality; in practice we have to deal with nonlinear discrete-time systems:

$$
\begin{aligned}
\underbrace{\mathbf{x}_{k}}_{\text{current state}} &= \mathbf{f}_{k-1}(\underbrace{\mathbf{x}_{k-1}}_{\text{previous state}}, \underbrace{\mathbf{u}_{k-1}}_{\text{inputs}}, \underbrace{\mathbf{w}_{k-1}}_{\text{process noise}}) \\
\underbrace{\mathbf{y}_{k}}_{\text{measurement}} &= \mathbf{h}_{k}(\mathbf{x}_{k}, \underbrace{\mathbf{v}_{k}}_{\text{measurement noise}})
\end{aligned}
$$

How can we adapt the Kalman Filter to nonlinear discrete-time systems?

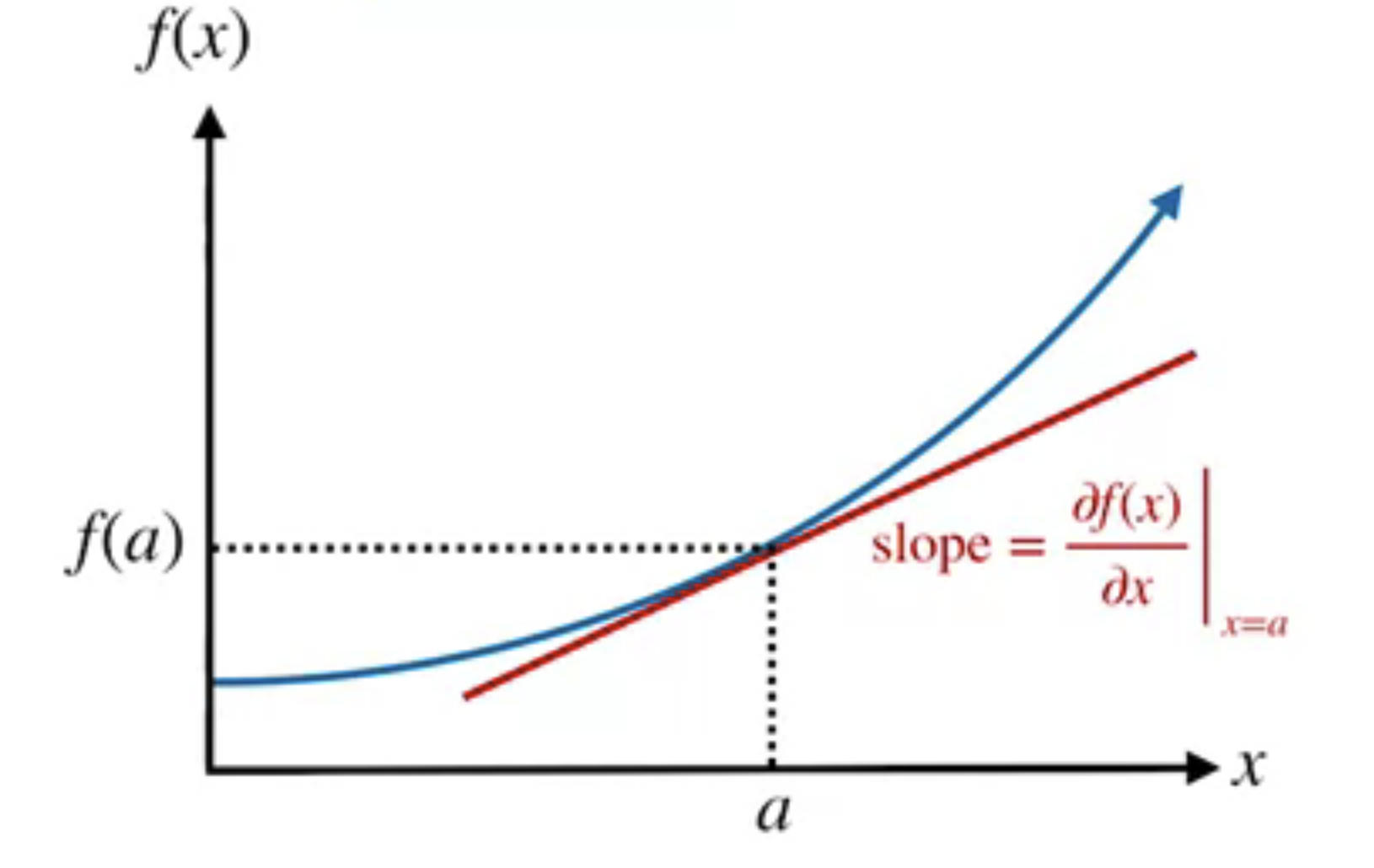

💡 Idea: Linearizing a Nonlinear System

Choose an operating point $a$ and approximate the nonlinear function by a linear function near that point.

We compute this linear approximation using a first-order Taylor expansion

$$
f(x) \approx f(a)+\left.\frac{\partial f(x)}{\partial x}\right|_{x=a}(x-a)
$$
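As a small illustration (not part of the original notes), the sketch below builds the first-order Taylor approximation of an arbitrary example function, $f(x) = \sin x$, about the operating point $a = 0.5$. The helper name `linearize` is hypothetical. The approximation is exact at $a$, accurate close to it, and degrades as $x$ moves away, which is why the choice of operating point matters.

```python
import math

def linearize(f, dfdx, a):
    """Return the first-order Taylor approximation of f about the operating point a."""
    fa, slope = f(a), dfdx(a)
    return lambda x: fa + slope * (x - a)

# Example nonlinear function chosen for illustration: f(x) = sin(x), f'(x) = cos(x)
a = 0.5                                   # operating point
f_lin = linearize(math.sin, math.cos, a)

# Compare the true function with its linear approximation near and far from a
for x in (0.5, 0.6, 1.5):
    err = abs(math.sin(x) - f_lin(x))
    print(f"x={x:.1f}  f(x)={math.sin(x):.4f}  linear={f_lin(x):.4f}  error={err:.4f}")
```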

Extended Kalman Filter

For the EKF, we choose the operating point to be our most recent state estimate, our known input, and zero noise.

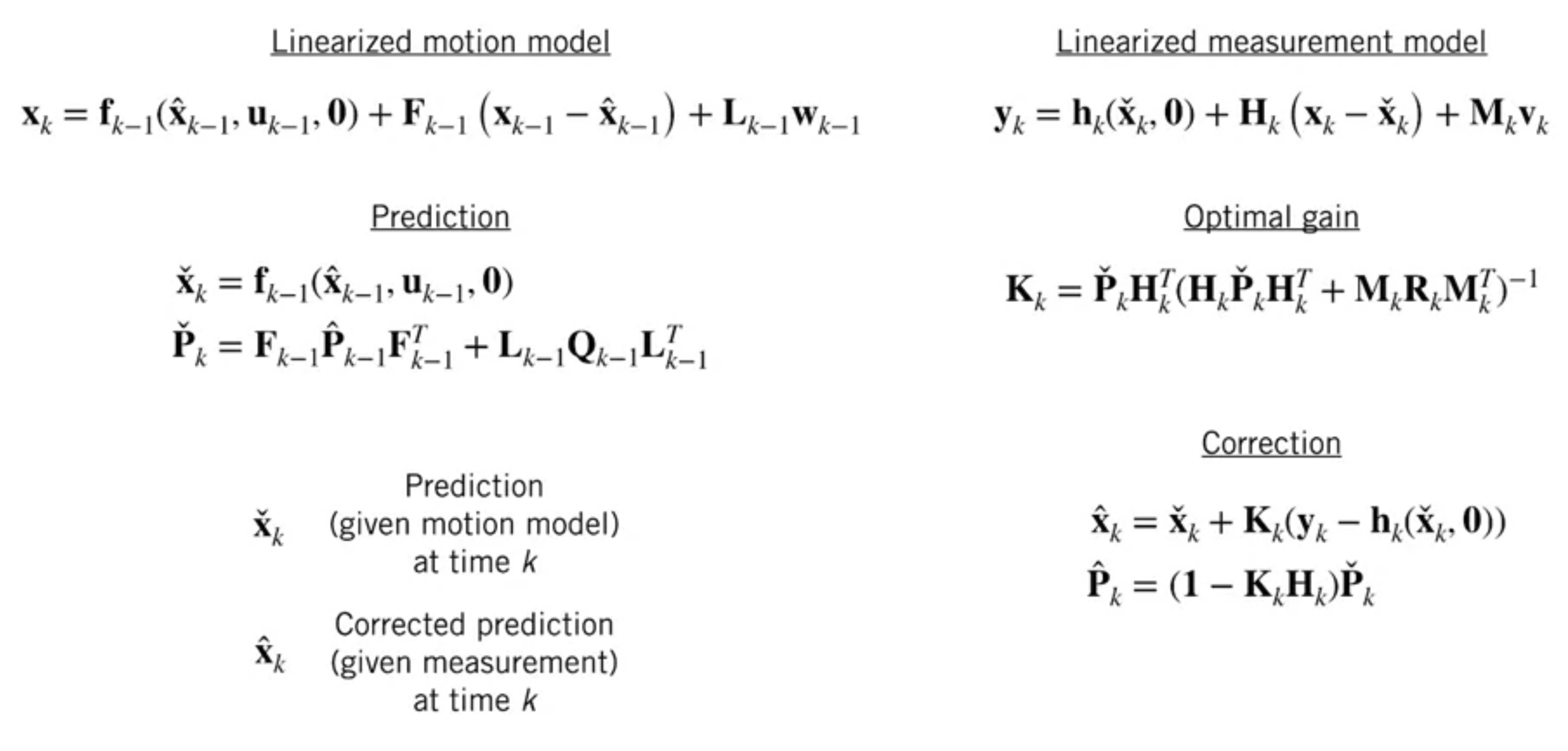

$\mathbf{F}_{k-1}, \mathbf{L}_{k-1}, \mathbf{H}_{k}, \mathbf{M}_{k}$ are Jacobian matrices.
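Written out in the notation of this page, the first-order expansions of the motion and measurement models about the EKF operating point, and the four Jacobians, are as follows (a sketch; $\check{\mathbf{x}}_{k}$ denotes the predicted state, which is the most recent estimate available when the measurement model is linearized):

$$
\begin{aligned}
\mathbf{f}_{k-1}(\mathbf{x}_{k-1},\mathbf{u}_{k-1},\mathbf{w}_{k-1}) &\approx \mathbf{f}_{k-1}(\hat{\mathbf{x}}_{k-1},\mathbf{u}_{k-1},\mathbf{0}) + \mathbf{F}_{k-1}(\mathbf{x}_{k-1}-\hat{\mathbf{x}}_{k-1}) + \mathbf{L}_{k-1}\mathbf{w}_{k-1} \\
\mathbf{h}_{k}(\mathbf{x}_{k},\mathbf{v}_{k}) &\approx \mathbf{h}_{k}(\check{\mathbf{x}}_{k},\mathbf{0}) + \mathbf{H}_{k}(\mathbf{x}_{k}-\check{\mathbf{x}}_{k}) + \mathbf{M}_{k}\mathbf{v}_{k} \\
\mathbf{F}_{k-1} &= \left.\frac{\partial \mathbf{f}_{k-1}}{\partial \mathbf{x}_{k-1}}\right|_{\hat{\mathbf{x}}_{k-1},\mathbf{u}_{k-1},\mathbf{0}} \qquad
\mathbf{L}_{k-1} = \left.\frac{\partial \mathbf{f}_{k-1}}{\partial \mathbf{w}_{k-1}}\right|_{\hat{\mathbf{x}}_{k-1},\mathbf{u}_{k-1},\mathbf{0}} \\
\mathbf{H}_{k} &= \left.\frac{\partial \mathbf{h}_{k}}{\partial \mathbf{x}_{k}}\right|_{\check{\mathbf{x}}_{k},\mathbf{0}} \qquad
\mathbf{M}_{k} = \left.\frac{\partial \mathbf{h}_{k}}{\partial \mathbf{v}_{k}}\right|_{\check{\mathbf{x}}_{k},\mathbf{0}}
\end{aligned}
$$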

Intuitively, the Jacobian matrix tells you how fast each output of the function is changing along each input dimension.
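To make the "rate of change along each input dimension" intuition concrete, here is a minimal sketch that approximates a Jacobian numerically with central differences. This is a swapped-in numerical technique for checking, not how the notes derive the Jacobians (they are derived analytically); the names `numerical_jacobian` and `g` are hypothetical.

```python
import numpy as np

def numerical_jacobian(func, x, eps=1e-6):
    """Approximate the Jacobian of func at x with central differences.

    Column j holds the rate of change of every output of func
    with respect to input dimension j.
    """
    x = np.asarray(x, dtype=float)
    y0 = np.atleast_1d(func(x))
    J = np.zeros((y0.size, x.size))
    for j in range(x.size):
        step = np.zeros_like(x)
        step[j] = eps
        y_plus = np.atleast_1d(func(x + step))
        y_minus = np.atleast_1d(func(x - step))
        J[:, j] = (y_plus - y_minus) / (2 * eps)
    return J

# Hypothetical test function g(x) = [x0 * x1, sin(x0)];
# its analytic Jacobian is [[x1, x0], [cos(x0), 0]].
g = lambda x: np.array([x[0] * x[1], np.sin(x[0])])
print(numerical_jacobian(g, [1.0, 2.0]))
```

Comparing such a numerical estimate against a hand-derived Jacobian is a cheap way to catch sign or indexing mistakes.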

With our linearized models and Jacobians, we can now use the Kalman Filter equations.

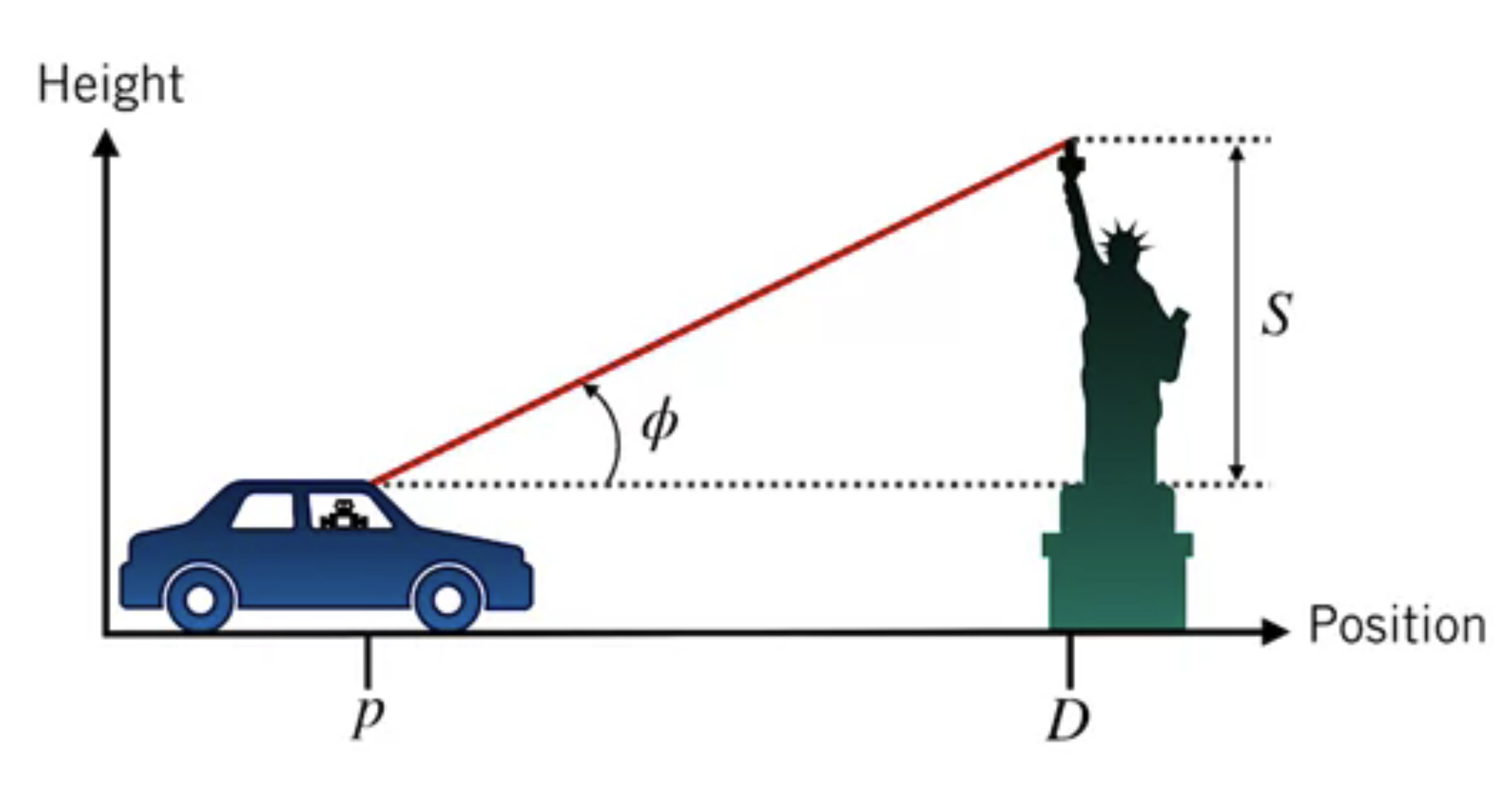
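A minimal NumPy sketch of one EKF predict/correct cycle, assuming the standard EKF equations in the figure above and used in the worked example on this page: the mean is propagated with $\mathbf{f}_{k-1}(\hat{\mathbf{x}}_{k-1}, \mathbf{u}_{k-1}, \mathbf{0})$, the covariance with $\mathbf{F}_{k-1}$ and $\mathbf{L}_{k-1}$, and the innovation is formed with the nonlinear $\mathbf{h}_{k}(\check{\mathbf{x}}_{k}, \mathbf{0})$. The function `ekf_step` and its signature are hypothetical; Jacobians are passed as callables so they can be evaluated at the current operating point.

```python
import numpy as np

def ekf_step(x_hat, P_hat, u, y, f, h, F, L, H, M, Q, R):
    """One EKF prediction + correction cycle (illustrative sketch).

    f(x, u, w) and h(x, v) are the nonlinear models; F(x, u), L(x, u),
    H(x) and M(x) return the Jacobians at the operating point.
    """
    n_w, n_v = Q.shape[0], R.shape[0]

    # Prediction: mean through the nonlinear motion model with zero noise
    F_k, L_k = F(x_hat, u), L(x_hat, u)
    x_check = f(x_hat, u, np.zeros(n_w))
    P_check = F_k @ P_hat @ F_k.T + L_k @ Q @ L_k.T

    # Correction: measurement Jacobians evaluated at the predicted state
    H_k, M_k = H(x_check), M(x_check)
    innovation_cov = H_k @ P_check @ H_k.T + M_k @ R @ M_k.T
    K = P_check @ H_k.T @ np.linalg.inv(innovation_cov)
    innovation = y - h(x_check, np.zeros(n_v))  # residual uses the nonlinear model
    x_new = x_check + K @ innovation
    P_new = (np.eye(x_hat.size) - K @ H_k) @ P_check
    return x_new, P_new
```

Only the covariance and gain use the linearized (Jacobian) models; the mean and residual keep the full nonlinear functions.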

We still use the nonlinear model to propagate the mean of the state estimate in the prediction step and to compute the measurement residual (innovation) in the correction step.

Example

This is similar to the self-driving car localisation example in Linear Kalman Filter, but this time we use an onboard sensor, a camera, to measure the altitude angle of a distant landmark relative to the horizon.

(Figure: landmark geometry, labelled $S$ and $D$.)

State:

$$
\mathbf{x}=\left[\begin{array}{l} p \\ \dot{p} \end{array}\right]
$$

Input:

$$
\mathbf{u}=\ddot{p}
$$

Motion/Process model:

$$
\begin{aligned}
\mathbf{x}_{k} &=\mathbf{f}\left(\mathbf{x}_{k-1}, \mathbf{u}_{k-1}, \mathbf{w}_{k-1}\right) \\
&=\left[\begin{array}{cc} 1 & \Delta t \\ 0 & 1 \end{array}\right] \mathbf{x}_{k-1}+\left[\begin{array}{c} 0 \\ \Delta t \end{array}\right] \mathbf{u}_{k-1}+\mathbf{w}_{k-1}
\end{aligned}
$$

Landmark measurement model (nonlinear!):

$$
\begin{aligned}
y_{k} &=\phi_{k}=h\left(p_{k}, v_{k}\right) \\
&=\tan ^{-1}\left(\frac{S}{D-p_{k}}\right)+v_{k}
\end{aligned}
$$

Here $S$ is the height of the landmark and $D$ its horizontal position, so $D-p_{k}$ is the horizontal distance from the car to the landmark.

Noise densities:

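$$
v_{k} \sim \mathcal{N}(0,0.01) \quad \mathbf{w}_{k} \sim \mathcal{N}\left(\mathbf{0},(0.1) \mathbf{1}_{2 \times 2}\right)
$$

The Jacobian matrices in this example are:

$$
\mathbf{F}_{k-1}=\left[\begin{array}{cc} 1 & \Delta t \\ 0 & 1 \end{array}\right] \quad \mathbf{L}_{k-1}=\mathbf{1}_{2 \times 2} \quad \mathbf{H}_{k}=\left[\begin{array}{cc} \dfrac{S}{(D-\check{p}_{k})^{2}+S^{2}} & 0 \end{array}\right] \quad M_{k}=1
$$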
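The measurement Jacobian is the only one that depends on the state. A short derivation of its nonzero entry, applying the chain rule to $\tan^{-1}$ (added here as a worked step):

$$
\frac{\partial h}{\partial p_{k}} = \frac{1}{1+\left(\dfrac{S}{D-p_{k}}\right)^{2}} \cdot \frac{S}{(D-p_{k})^{2}} = \frac{S}{(D-p_{k})^{2}+S^{2}}
$$

At the predicted position $\check{p}_{1} = 2.5$ with $S = 20$ and $D = 40$, this gives $20/(37.5^{2}+20^{2}) = 20/1806.25 \approx 0.011$, the value used for $\mathbf{H}_{1}$ in the correction step.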

Given:

$$
\begin{array}{l}
\hat{\mathbf{x}}_{0} \sim \mathcal{N}\left(\left[\begin{array}{l} 0 \\ 5 \end{array}\right], \quad\left[\begin{array}{cc} 0.01 & 0 \\ 0 & 1 \end{array}\right]\right)\\
\Delta t=0.5 \mathrm{~s}\\
u_{0}=-2\left[\mathrm{~m} / \mathrm{s}^{2}\right] \quad y_{1}=\pi / 6[\mathrm{rad}]\\
S=20[\mathrm{m}] \quad D=40[\mathrm{m}]
\end{array}
$$

What is the position estimate $\hat{p}_{1}$?

Prediction:

$$
\begin{array}{c}
\check{\mathbf{x}}_{1}=\mathbf{f}_{0}\left(\hat{\mathbf{x}}_{0}, \mathbf{u}_{0}, \mathbf{0}\right) \\
\left[\begin{array}{c} \check{p}_{1} \\ \check{\dot{p}}_{1} \end{array}\right]=\left[\begin{array}{cc} 1 & 0.5 \\ 0 & 1 \end{array}\right]\left[\begin{array}{c} 0 \\ 5 \end{array}\right]+\left[\begin{array}{c} 0 \\ 0.5 \end{array}\right](-2)=\left[\begin{array}{c} 2.5 \\ 4 \end{array}\right] \\[1em]
\check{\mathbf{P}}_{1}=\mathbf{F}_{0} \hat{\mathbf{P}}_{0} \mathbf{F}_{0}^{T}+\mathbf{L}_{0} \mathbf{Q}_{0} \mathbf{L}_{0}^{T} \\
\check{\mathbf{P}}_{1}=\left[\begin{array}{cc} 1 & 0.5 \\ 0 & 1 \end{array}\right]\left[\begin{array}{cc} 0.01 & 0 \\ 0 & 1 \end{array}\right]\left[\begin{array}{cc} 1 & 0 \\ 0.5 & 1 \end{array}\right]+\left[\begin{array}{cc} 1 & 0 \\ 0 & 1 \end{array}\right]\left[\begin{array}{cc} 0.1 & 0 \\ 0 & 0.1 \end{array}\right]\left[\begin{array}{cc} 1 & 0 \\ 0 & 1 \end{array}\right]=\left[\begin{array}{cc} 0.36 & 0.5 \\ 0.5 & 1.1 \end{array}\right]
\end{array}
$$
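The prediction step can be reproduced numerically with a few lines of NumPy (a sketch; the variable names are illustrative). Because the motion model here is linear, $\mathbf{F}_0$ is just the state-transition matrix and $\mathbf{L}_0$ is the identity.

```python
import numpy as np

dt = 0.5
F0 = np.array([[1.0, dt],
               [0.0, 1.0]])    # motion-model Jacobian (model is linear)
B = np.array([0.0, dt])        # input matrix
L0 = np.eye(2)                 # process-noise Jacobian
Q0 = 0.1 * np.eye(2)           # process-noise covariance

x0_hat = np.array([0.0, 5.0])
P0_hat = np.diag([0.01, 1.0])
u0 = -2.0

# Mean through the motion model with zero noise; covariance via the Jacobians
x1_check = F0 @ x0_hat + B * u0
P1_check = F0 @ P0_hat @ F0.T + L0 @ Q0 @ L0.T
print(x1_check)
print(P1_check)
```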

Correction:

$$
\begin{aligned}
\mathbf{K}_{1} &=\check{\mathbf{P}}_{1} \mathbf{H}_{1}^{T}\left(\mathbf{H}_{1} \check{\mathbf{P}}_{1} \mathbf{H}_{1}^{T}+\mathbf{M}_{1} \mathbf{R}_{1} \mathbf{M}_{1}^{T}\right)^{-1} \\
&=\left[\begin{array}{cc} 0.36 & 0.5 \\ 0.5 & 1.1 \end{array}\right]\left[\begin{array}{c} 0.011 \\ 0 \end{array}\right]\left(\left[\begin{array}{ll} 0.011 & 0 \end{array}\right]\left[\begin{array}{cc} 0.36 & 0.5 \\ 0.5 & 1.1 \end{array}\right]\left[\begin{array}{c} 0.011 \\ 0 \end{array}\right]+1(0.01)(1)\right)^{-1} \\
&=\left[\begin{array}{c} 0.40 \\ 0.55 \end{array}\right]
\end{aligned}
$$

$$
\begin{aligned}
\hat{\mathbf{x}}_{1} &=\check{\mathbf{x}}_{1}+\mathbf{K}_{1}\left(\mathbf{y}_{1}-\mathbf{h}_{1}\left(\check{\mathbf{x}}_{1}, \mathbf{0}\right)\right) \\
\left[\begin{array}{c} \hat{p}_{1} \\ \hat{\dot{p}}_{1} \end{array}\right] &=\left[\begin{array}{c} 2.5 \\ 4 \end{array}\right]+\left[\begin{array}{c} 0.40 \\ 0.55 \end{array}\right](0.52-0.49)=\left[\begin{array}{c} 2.51 \\ 4.02 \end{array}\right]
\end{aligned}
$$

Here $y_{1}=\pi/6 \approx 0.52$ and $h_{1}(\check{\mathbf{x}}_{1}, \mathbf{0})=\tan^{-1}(20/37.5) \approx 0.49$, so the position estimate is $\hat{p}_{1} \approx 2.51$ m.

$$
\begin{aligned}
\hat{\mathbf{P}}_{1} &=\left(\mathbf{1}-\mathbf{K}_{1} \mathbf{H}_{1}\right) \check{\mathbf{P}}_{1} \\
&=\left[\begin{array}{cc} 0.36 & 0.50 \\ 0.50 & 1.1 \end{array}\right]
\end{aligned}
$$
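The correction step can likewise be checked numerically. This sketch starts from the predicted mean and covariance computed above and evaluates $\mathbf{H}_1$ exactly rather than rounding it to 0.011; the helper `h` is a hypothetical name for the noise-free measurement model.

```python
import numpy as np

S, D = 20.0, 40.0
y1 = np.pi / 6
R1 = np.array([[0.01]])
M1 = np.eye(1)

# Predicted state and covariance from the prediction step
x1_check = np.array([2.5, 4.0])
P1_check = np.array([[0.36, 0.5],
                     [0.5, 1.1]])

def h(p):
    """Nonlinear landmark measurement model with zero noise."""
    return np.arctan(S / (D - p))

# Measurement Jacobian evaluated at the predicted position
H1 = np.array([[S / ((D - x1_check[0]) ** 2 + S ** 2), 0.0]])

K1 = P1_check @ H1.T @ np.linalg.inv(H1 @ P1_check @ H1.T + M1 @ R1 @ M1.T)
innovation = y1 - h(x1_check[0])        # residual from the nonlinear model
x1_hat = x1_check + K1[:, 0] * innovation
P1_hat = (np.eye(2) - K1 @ H1) @ P1_check
print(K1.ravel(), x1_hat, P1_hat, sep="\n")
```

With the unrounded $\mathbf{H}_1 \approx 0.01107$ the first gain entry rounds to 0.40; plugging in exactly 0.011 instead gives about 0.39, so small differences in the second decimal come from rounding $\mathbf{H}_1$.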

Summary

The EKF uses linearization to adapt the Kalman Filter to nonlinear systems.

Linearization works by computing a local linear approximation to a nonlinear function using a first-order Taylor expansion about a chosen operating point (for the EKF, the most recent state estimate).
