Sampling

Reapproximation of Densities

Approximate the original continuous density with a discrete Dirac mixture:


f(\underline{x})=\sum_{i=1}^{L} w_{i} \cdot \delta\left(\underline{x}-\underline{\hat{x}}_{i}\right)

Weights $w_{i}>0$, $\sum_{i=1}^{L} w_{i}=1$; Dirac positions $\underline{\hat{x}}_i$.

In the univariate case (1D), compare the cumulative distribution functions (CDFs) $\tilde{F}(x)$ (original density) and $F(x)$ (Dirac mixture) using the Cramér–von Mises distance:


D(\underline{\hat{x}})=\int_{\mathbb{R}}\left(\tilde{F}(x)-F(x, \underline{\hat{x}})\right)^{2} \mathrm{~d} x

where $F(x, \underline{\hat{x}})$, the CDF of the Dirac mixture, is


F(x, \underline{\hat{x}})=\sum_{i=1}^{L} w_{i} \mathrm{H}\left(x-\hat{x}_{i}\right) \text { with } \mathrm{H}(x)=\int_{-\infty}^{x} \delta(t) \mathrm{d} t= \begin{cases}0 & x<0 \\ \frac{1}{2} & x=0 \\ 1 & x>0\end{cases}

with the vector of Dirac positions


\underline{\hat{x}}=\left[\hat{x}_{1}, \hat{x}_{2}, \ldots, \hat{x}_{L}\right]^{\top}

We minimize the Cramér–von Mises distance $D(\underline{\hat{x}})$ with respect to the Dirac positions $\underline{\hat{x}}$.
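Below is a minimal sketch that uses only the Python standard library. It assumes a standard normal original density $\tilde{F}$ and equal weights $w_i = \frac{1}{L}$.

Differentiating $D(\underline{\hat{x}})$ with respect to one position gives $\frac{\partial D}{\partial \hat{x}_i} = 2 w_i \left(\tilde{F}(\hat{x}_i) - F(\hat{x}_i, \underline{\hat{x}})\right)$. Here $\mathrm{H}(0)=\frac{1}{2}$ puts $F(\hat{x}_i, \underline{\hat{x}})$ at the midpoint of the jump. For equal weights and sorted, distinct positions, the gradient therefore vanishes at the quantiles $\hat{x}_i = \tilde{F}^{-1}\left(\frac{2i-1}{2L}\right)$.

All function names are illustrative. $D$ is evaluated with a simple midpoint rule on a truncated interval.

```python
from statistics import NormalDist


def dirac_cdf(x, positions, weights):
    """F(x, x_hat) = sum_i w_i H(x - x_hat_i) with H(0) = 1/2."""
    total = 0.0
    for xi, wi in zip(positions, weights):
        if x > xi:
            total += wi
        elif x == xi:
            total += 0.5 * wi
    return total


def cvm_distance(positions, weights, target_cdf, lo=-10.0, hi=10.0, n=20000):
    """Midpoint-rule approximation of D = int (F~(x) - F(x, x_hat))^2 dx on [lo, hi]."""
    h = (hi - lo) / n
    acc = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * h
        diff = target_cdf(x) - dirac_cdf(x, positions, weights)
        acc += diff * diff
    return acc * h


def cvm_gradient(positions, weights, target_cdf):
    """dD/dx_hat_i = 2 w_i (F~(x_hat_i) - F(x_hat_i, x_hat)); positions assumed distinct."""
    return [2.0 * wi * (target_cdf(xi) - dirac_cdf(xi, positions, weights))
            for xi, wi in zip(positions, weights)]


def optimal_equal_weight_positions(L, target):
    """Zero-gradient positions for w_i = 1/L: x_hat_i = F~^{-1}((2i - 1) / (2L))."""
    return [target.inv_cdf((2 * i - 1) / (2 * L)) for i in range(1, L + 1)]


target = NormalDist(0.0, 1.0)  # assumed original density (standard normal)
L = 5
w = [1.0 / L] * L
x_opt = optimal_equal_weight_positions(L, target)
shifted = list(x_opt)
shifted[2] += 0.3  # move the middle Dirac away from its optimum
print(max(abs(g) for g in cvm_gradient(x_opt, w, target.cdf)))
print(cvm_distance(x_opt, w, target.cdf) < cvm_distance(shifted, w, target.cdf))  # True
```

The quantile solution is why $\mathrm{H}(0)$ is defined as $\frac{1}{2}$ above. With that convention, each Dirac sits where the original CDF crosses the middle of its own step.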

Localized Cumulative Distribution (LCD)

Generalization of the concept of the CDF to $N$ dimensions:

F(\underline{m}, b)=\int_{\mathbb{R}^{N}} f(\underline{x}) K(\underline{x}-\underline{m}, b) \mathrm{d} \underline{x}

with the kernel $K(\cdot, \cdot)$


K(\underline{x}-\underline{m}, b)=\prod_{k=1}^{N} \exp \left(-\frac{1}{2} \frac{\left(x_{k}-m_{k}\right)^{2}}{b^{2}}\right)

with kernel location $\underline{m}$ and kernel width $b$.
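Below is a minimal Python sketch, assuming the original density is represented by equally weighted samples. For a Dirac mixture the LCD integral collapses to $F(\underline{m}, b)=\sum_i w_i K(\underline{\hat{x}}_i-\underline{m}, b)$.

For an isotropic Gaussian $\mathcal{N}(\underline{\mu}, \sigma^2 \mathbf{I})$, the LCD has the closed form $\prod_k \frac{b}{\sqrt{\sigma^2+b^2}} \exp\left(-\frac{1}{2}\frac{(\mu_k-m_k)^2}{\sigma^2+b^2}\right)$, a product of one-dimensional Gaussian integrals. The sample-based value should approach it. Function names and the test density are hypothetical.

```python
import math
import random


def lcd_dirac_mixture(m, b, positions, weights):
    """LCD of a Dirac mixture: F(m, b) = sum_i w_i K(x_hat_i - m, b)."""
    total = 0.0
    for x, w in zip(positions, weights):
        sq = sum((xk - mk) ** 2 for xk, mk in zip(x, m))
        # The product of per-dimension exponentials equals the exponential of the summed exponent.
        total += w * math.exp(-0.5 * sq / (b * b))
    return total


def lcd_isotropic_gaussian(m, b, mu, sigma):
    """Closed-form LCD of N(mu, sigma^2 I) for the same Gaussian kernel."""
    s2 = sigma * sigma + b * b
    value = 1.0
    for mk, muk in zip(m, mu):
        value *= b / math.sqrt(s2) * math.exp(-0.5 * (muk - mk) ** 2 / s2)
    return value


rng = random.Random(0)
n = 20000
samples = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
weights = [1.0 / n] * n
m, b = (0.5, -0.5), 1.0
print(lcd_dirac_mixture(m, b, samples, weights))      # sample-based LCD
print(lcd_isotropic_gaussian(m, b, (0.0, 0.0), 1.0))  # closed form
```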

Properties of the LCD:

- Symmetric
- Unique
- Multivariate

Generalized Cramér–von Mises Distance (GCvD)


D=\int_{\mathbb{R}_{+}} w(b) \int_{\mathbb{R}^{N}}(\tilde{F}(\underline{m}, b)-F(\underline{m}, b))^{2} \mathrm{~d} \underline{m} \mathrm{~d} b

where $\tilde{F}(\underline{m}, b)$ and $F(\underline{m}, b)$ are the LCDs of the original density and of the Dirac mixture, respectively, and $w(b)$ is a weighting function over the kernel widths.

Minimization of the GCvD: quasi-Newton method (L-BFGS).
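For two Dirac mixtures, the inner integral over $\underline{m}$ has a closed form. Per dimension, $\int_{\mathbb{R}} \exp\left(-\frac{(x-m)^2+(y-m)^2}{2b^2}\right)\mathrm{d}m=\sqrt{\pi}\, b\, \exp\left(-\frac{(x-y)^2}{4b^2}\right)$.

The Python sketch below evaluates this inner integral for one fixed $b$ and checks it against numerical integration in 1D. It leaves out the outer integral with $w(b)$ and the L-BFGS minimization, because the notes do not specify $w(b)$. All names are illustrative.

```python
import math


def kernel_overlap(x, y, b):
    """int_{R^N} K(x - m, b) K(y - m, b) dm = (pi b^2)^(N/2) exp(-|x - y|^2 / (4 b^2))."""
    sq = sum((xk - yk) ** 2 for xk, yk in zip(x, y))
    return (math.pi * b * b) ** (len(x) / 2) * math.exp(-sq / (4.0 * b * b))


def lcd_sq_distance(pa, wa, pb, wb, b):
    """Inner GCvD integral int (F_a(m, b) - F_b(m, b))^2 dm for two Dirac mixtures."""
    def cross(p, wp, q, wq):
        return sum(wi * wj * kernel_overlap(xi, xj, b)
                   for xi, wi in zip(p, wp) for xj, wj in zip(q, wq))
    return cross(pa, wa, pa, wa) - 2.0 * cross(pa, wa, pb, wb) + cross(pb, wb, pb, wb)


# 1D check against a midpoint rule: Dirac at 0 vs. Dirac at 1, kernel width b = 0.5
b = 0.5
closed = lcd_sq_distance([(0.0,)], [1.0], [(1.0,)], [1.0], b)
h = 1e-3
numeric = h * sum(
    (math.exp(-0.5 * m * m / b**2) - math.exp(-0.5 * (1.0 - m) ** 2 / b**2)) ** 2
    for m in (-6.0 + (k + 0.5) * h for k in range(13000)))
print(closed, numeric)
```

Expanding the square into three double sums keeps the cost at $\mathcal{O}(L^2)$ kernel evaluations per $b$, with no grid over $\underline{m}$.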

Projected Cumulative Distribution (PCD)

Apply the reapproximation methods for the univariate case to the multivariate case.

Radon Transform

Represent a general $N$-dimensional density by its one-dimensional projections.

Linear projection of the random vector $\underline{\boldsymbol{x}} \in \mathbb{R}^{N}$ onto the scalar random variable $\boldsymbol{r} \in \mathbb{R}$ along the unit vector $\underline{u} \in \mathbb{S}^{N-1}$:


\boldsymbol{r} = \underline{u}^\top \underline{\boldsymbol{x}}

Given the probability density function $f(\underline{x})$ of $\underline{\boldsymbol{x}}$, the density $f_r(r \mid \underline{u})$ of $\boldsymbol{r}$ is the projection of $f(\underline{x})$ along the direction $\underline{u} \in \mathbb{S}^{N-1}$:


f_{r}(r \mid \underline{u})=\int_{\mathbb{R}^{N}} f(\underline{t}) \delta\left(r-\underline{u}^{\top} \underline{t}\right) \mathrm{d} \underline{t}
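For a Dirac mixture the projection integral can be evaluated directly. Each $\delta(\underline{x}-\underline{\hat{x}}_i)$ becomes $\delta(r-\underline{u}^\top\underline{\hat{x}}_i)$, so the positions are projected and the weights are kept.

The Python sketch below does this. As a sanity check, it compares projected samples of a Gaussian with the known result that $\mathcal{N}(\underline{\mu}, \mathbf{C})$ projects to $\mathcal{N}(\underline{u}^\top\underline{\mu}, \underline{u}^\top\mathbf{C}\underline{u})$. The test density and tolerances are assumptions chosen for illustration.

```python
import math
import random


def project_dirac_mixture(points, weights, u):
    """Radon transform of sum_i w_i delta(x - x_i) along u: sum_i w_i delta(r - u^T x_i)."""
    if abs(math.hypot(*u) - 1.0) > 1e-9:
        raise ValueError("u must be a unit vector (element of S^{N-1})")
    return [sum(uk * xk for uk, xk in zip(u, x)) for x in points], list(weights)


# Hypothetical test density: independent Gaussian components, mean (1, -1), std devs (1, 2)
rng = random.Random(42)
n = 20000
points = [(rng.gauss(1.0, 1.0), rng.gauss(-1.0, 2.0)) for _ in range(n)]
u = (0.6, 0.8)
r, w = project_dirac_mixture(points, [1.0 / n] * n, u)
mean = sum(wi * ri for ri, wi in zip(r, w))
var = sum(wi * (ri - mean) ** 2 for ri, wi in zip(r, w))
# Expected: mean = 0.6 * 1 - 0.8 * 1 = -0.2, variance = 0.36 * 1 + 0.64 * 4 = 2.92
print(mean, var)
```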

Representing PDFs by all one-dimensional projections

Represent the two densities $\tilde{f}(\underline{x})$ and $f(\underline{x})$ by their one-dimensional projections $\tilde{f}(r \mid \underline{u})$ and $f(r \mid \underline{u})$.

Compare the projections $\tilde{f}(r \mid \underline{u})$ and $f(r \mid \underline{u})$ for every direction $\underline{u} \in \mathbb{S}^{N-1}$ with a one-dimensional distance measure:


D_{1}(\underline{u})=D(\tilde{f}(r \mid \underline{u}), f(r \mid \underline{u}))

Integrate these one-dimensional distances $D_1(\underline{u})$ over all $\underline{u} \in \mathbb{S}^{N-1}$ to obtain the multivariate distance $D(\tilde{f}(\underline{x}), f(\underline{x}))$.
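Below is a minimal Python sketch for $N = 2$, assuming both densities are given as Dirac mixtures. $D_1(\underline{u})$ is the Cramér–von Mises distance between the two projected step CDFs, computed exactly because both are piecewise constant.

The integral over $\mathbb{S}^{1}$ is approximated by averaging over equally spaced angles, i.e. it is normalized by the circumference $2\pi$. The number of directions and all names are hypothetical choices.

```python
import math


def cvm_1d_dirac(ra, wa, rb, wb):
    """Exact int (F_a(r) - F_b(r))^2 dr for two 1D Dirac mixtures with equal total mass."""
    events = sorted([(r, w) for r, w in zip(ra, wa)] + [(r, -w) for r, w in zip(rb, wb)])
    total, diff = 0.0, 0.0
    for (r0, dw), (r1, _) in zip(events, events[1:]):
        diff += dw  # F_a - F_b is constant on (r0, r1)
        total += diff * diff * (r1 - r0)
    return total


def projected_cvm_2d(pa, wa, pb, wb, num_dirs=64):
    """Average of D_1(u) over equally spaced unit vectors u = (cos t, sin t)."""
    total = 0.0
    for k in range(num_dirs):
        t = 2.0 * math.pi * k / num_dirs
        u = (math.cos(t), math.sin(t))
        ra = [u[0] * x + u[1] * y for x, y in pa]
        rb = [u[0] * x + u[1] * y for x, y in pb]
        total += cvm_1d_dirac(ra, wa, rb, wb)
    return total / num_dirs


# One Dirac at the origin vs. one at (1, 0): D_1(u) = |cos t|, whose mean over the circle is 2/pi
print(projected_cvm_2d([(0.0, 0.0)], [1.0], [(1.0, 0.0)], [1.0]), 2.0 / math.pi)
```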

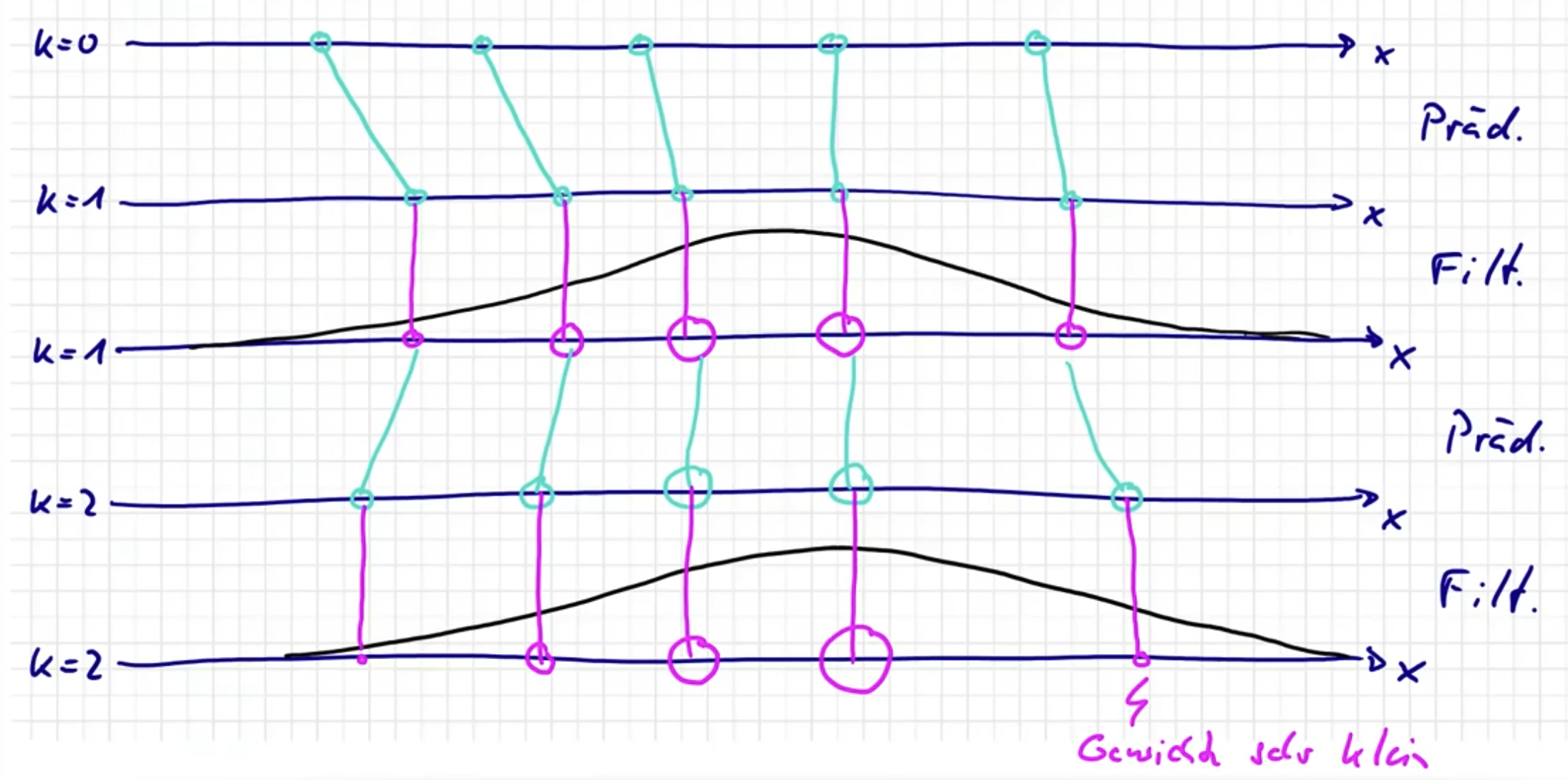

Naive Particle Filter

Prediction

💡 Update the sample positions. The weights stay the same.

The estimate $f_{k}^{e}(\underline{x}_{k})$ at time step $k$ is a Dirac mixture with equal weights:


f_{k}^{e}\left(\underline{x}_{k}\right)=\sum_{i=1}^{L} w_{k}^{e, i} \cdot \delta\left(\underline{x}_{k}-\underline{\hat{x}}_{k}^{e, i}\right) \qquad w_{k}^{e, i}=\frac{1}{L}, i \in\left\{1, \ldots, L\right\}

Draw samples for time step $k+1$ from the transition density:


\underline{\hat{x}}_{k+1}^{p, i} \sim f\left(\underline{x}_{k+1} \mid \underline{\hat{x}}_{k}^{e, i}\right)

The weights stay the same:


w_{k+1}^{p, i} = w_{k}^{e, i}

The predicted density $f_{k+1}^{p}(\underline{x}_{k+1})$ is therefore


f_{k+1}^{p}\left(\underline{x}_{k+1}\right)=\sum_{i=1}^{L} w_{k+1}^{p, i} \delta\left(\underline{x}_{k+1}-\underline{\hat{x}}_{k+1}^{p, i}\right)
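Below is a minimal Python sketch of the prediction step. It assumes a hypothetical scalar system model $x_{k+1} = 0.9\, x_k + w_k$ with $w_k \sim \mathcal{N}(0, 0.5^2)$; any sampler for $f(\underline{x}_{k+1} \mid \underline{\hat{x}}_{k}^{e,i})$ can be passed in instead.

```python
import random


def predict(particles, weights, sample_transition, rng):
    """Naive PF prediction: x_{k+1}^{p,i} ~ f(x_{k+1} | x_k^{e,i}); weights are copied unchanged."""
    new_particles = [sample_transition(x, rng) for x in particles]
    return new_particles, list(weights)


def sample_linear_gaussian(x, rng):
    """Hypothetical system model: x_{k+1} = 0.9 x_k + w_k, w_k ~ N(0, 0.5^2)."""
    return 0.9 * x + rng.gauss(0.0, 0.5)


rng = random.Random(0)
L = 10000
particles = [2.0] * L    # all estimate samples at x = 2
weights = [1.0 / L] * L  # equal weights w_k^{e,i} = 1/L
pred_particles, pred_weights = predict(particles, weights, sample_linear_gaussian, rng)
print(sum(pred_particles) / L)  # close to 0.9 * 2 = 1.8
```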

Filtering

💡 Update the weights. The sample positions stay the same.


\begin{aligned}
f_{k}^{e}\left(\underline{x}_{k}\right) &\propto f\left(\underline{y}_{k} \mid \underline{x}_{k}\right) \cdot f_{k}^{p}\left(\underline{x}_{k}\right)\\
&=f\left(\underline{y}_{k} \mid \underline{x}_{k}\right) \cdot \sum_{i=1}^{L} w_{k}^{p, i} \cdot \delta\left(\underline{x}_{k}-\underline{\hat{x}}_{k}^{p, i}\right)\\
&=\sum_{i=1}^{L} \underbrace{w_{k}^{p, i} \cdot f\left(\underline{y}_{k} \mid \underline{\hat{x}}_{k}^{p, i}\right)}_{\propto w_{k}^{e, i}} \cdot \delta(\underline{x}_{k}-\underbrace{\underline{\hat{x}}_{k}^{p, i}}_{\underline{\hat{x}}_{k}^{e, i}})
\end{aligned}

The positions stay the same:


\underline{\hat{x}}_{k}^{e, i} = \underline{\hat{x}}_{k}^{p, i}

Adapt the weights:


w_{k}^{e, i} \propto w_{k}^{p, i} \cdot f\left(\underline{y}_{k} \mid \hat{\underline{x}}_{k}^{p, i}\right)

and normalize:


w_{k}^{e, i}:=\frac{w_{k}^{e, i}}{\displaystyle \sum_{j=1}^{L} w_{k}^{e,j}}
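Below is a minimal Python sketch of the filtering step. It assumes a hypothetical scalar measurement model $y_k = x_k + v_k$ with Gaussian noise $v_k \sim \mathcal{N}(0, \sigma^2)$, so $f(y_k \mid x_k)$ is a Gaussian in $y_k - x_k$. Only the weights change; the positions are passed through.

```python
from statistics import NormalDist


def filter_step(particles, weights, y, likelihood):
    """Reweight w_k^{e,i} proportional to w_k^{p,i} f(y_k | x_k^{p,i}), normalize; positions unchanged."""
    unnormalized = [w * likelihood(y, x) for x, w in zip(particles, weights)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("all weights vanished: particles died out")
    return list(particles), [u / total for u in unnormalized]


def gaussian_likelihood(sigma):
    """Hypothetical measurement model y = x + v, v ~ N(0, sigma^2)."""
    noise = NormalDist(0.0, sigma)
    return lambda y, x: noise.pdf(y - x)


particles = [-1.0, 0.0, 1.0]
positions, weights = filter_step(particles, [1 / 3] * 3, 0.0, gaussian_likelihood(1.0))
print(weights)  # symmetric, largest weight at x = 0
```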

Problem: the variance of the sample weights increases with the number of filter steps.

$\rightarrow$ Particles die out.

They die out faster the more accurate the measurement is, since the likelihood is narrower (paradox!).
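The paradox can be illustrated numerically with the effective sample size $L_\text{eff} = 1 / \sum_i (w^i)^2$. This is a common degeneracy diagnostic, not part of these notes.

The Python sketch below assumes 101 equally weighted particles on a grid and a hypothetical Gaussian likelihood centered at the measurement. A narrower likelihood (a more accurate sensor) leaves fewer effective particles.

```python
import math


def effective_sample_size(weights):
    """L_eff = 1 / sum_i w_i^2 for normalized weights (L for equal weights, 1 if one weight is 1)."""
    return 1.0 / sum(w * w for w in weights)


def reweight_gaussian(particles, y, sigma):
    """Equal prior weights times an (unnormalized) Gaussian likelihood, then normalize."""
    unnormalized = [math.exp(-0.5 * ((y - x) / sigma) ** 2) for x in particles]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


particles = [-3.0 + 0.06 * i for i in range(101)]  # grid on [-3, 3]
y = 0.5
for sigma in (1.0, 0.1):
    print(sigma, effective_sample_size(reweight_gaussian(particles, y, sigma)))
```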

Resampling

Approximation of the weighted samples by unweighted ones:


f_{k}^{e}\left(\underline{x}_{k}\right)=\sum_{i=1}^{L} w_{k}^{e, i} \cdot \delta\left(\underline{x}_{k}-\underline{\hat{x}}_{k}^{e, i}\right) \approx \sum_{i=1}^{L} \frac{1}{L} \delta\left(\underline{x}_{k}-\underline{\bar{x}}_{k}^{e, i}\right)

Given: $L$ samples with weights $w_i$.

Sought: $L$ new samples with equal weights $\frac{1}{L}$.
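The notes do not fix a particular resampling scheme. The Python sketch below uses systematic resampling as one common choice.

It draws a single uniform offset $u_0 \sim \mathcal{U}[0, \frac{1}{L})$ and places $L$ equally spaced pointers $u_0 + \frac{i}{L}$ on the cumulative weights. It then copies the sample each pointer falls into, so sample $i$ appears $\lfloor L w_i \rfloor$ or $\lceil L w_i \rceil$ times. The weights are assumed to be normalized, and the function name is hypothetical.

```python
import random


def systematic_resample(positions, weights, rng):
    """Replace L weighted samples by L samples with weights 1/L (systematic resampling)."""
    L = len(weights)
    cumulative, acc = [], 0.0
    for w in weights:
        acc += w
        cumulative.append(acc)
    u0 = rng.random() / L  # single random offset in [0, 1/L)
    new_positions, j = [], 0
    for i in range(L):
        u = u0 + i / L
        # advance to the first sample whose cumulative weight exceeds the pointer u
        while j < L - 1 and cumulative[j] <= u:
            j += 1
        new_positions.append(positions[j])
    return new_positions, [1.0 / L] * L


rng = random.Random(3)
new_x, new_w = systematic_resample([10.0, 20.0, 30.0, 40.0], [0.1, 0.2, 0.3, 0.4], rng)
print(new_x, new_w)
```

Samples with small weights tend to be dropped and heavy ones duplicated. This counters the dying out of particles, at the cost of identical copies that only the next prediction step spreads apart again.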

Sequential Importance Sampling

The estimate $f_{k}^{e}(\underline{x}_{k})=f(\underline{x}_{k} \mid \underline{y}_{1:k})$ is the marginal of the posterior over the whole trajectory, obtained by integrating out $\underline{x}_{1:k-1}$:


f_{k}^{e}\left(\underline{x}_{k}\right)=f\left(\underline{x}_{k} \mid \underline{y}_{1: k}\right)=\int_{\mathbb{R}^{N}} \cdots \int_{\mathbb{R}^{N}} f\left(\underline{x}_{1: k} \mid \underline{y}_{1 : k}\right) d \underline{x}_{1: k-1}

Importance sampling for the trajectory posterior $f(\underline{x}_{1:k} \mid \underline{y}_{1:k})$ with a proposal density $p(\underline{x}_{1:k} \mid \underline{y}_{1:k})$:


f_{k}^{e}\left(\underline{x}_{k}\right) = \int_{\mathbb{R}^{N}} \cdots \int_{\mathbb{R}^{N}} \underbrace{\frac{f\left(\underline{x}_{1: k} \mid \underline{y}_{1 : k}\right)}{p\left(\underline{x}_{1: k} \mid \underline{y}_{1 : k}\right)}}_{=: w_k^{e, i}} p\left(\underline{x}_{1: k} \mid \underline{y}_{1 : k}\right) d \underline{x}_{1: k-1}

Numerator and denominator of the ratio $\frac{f(\underline{x}_{1:k} \mid \underline{y}_{1:k})}{p(\underline{x}_{1:k} \mid \underline{y}_{1:k})}$ are decomposed recursively.

Numerator


\begin{aligned}
f\left(\underline{x}_{1: k} \mid \underline{y}_{1: k}\right) &\propto f\left(\underline{y}_{k} \mid \underline{x}_{1: k}, \underline{y}_{1: k - 1}\right) \cdot f\left(\underline{x}_{1: k} \mid \underline{y}_{1: k-1}\right)\\
&=f\left(\underline{y}_{k} \mid \underline{x}_{k}\right) \cdot f\left(\underline{x}_{k} \mid \underline{x}_{1:k-1}, \underline{y}_{1:k-1}\right) \cdot f\left(\underline{x}_{1:k-1} \mid \underline{y}_{1: k-1}\right)\\
&=f\left(\underline{y}_{k} \mid \underline{x}_{k}\right) \cdot f\left(\underline{x}_{k} \mid \underline{x}_{k-1}\right) \cdot f\left(\underline{x}_{1: k-1} \mid \underline{y}_{1: k-1}\right)
\end{aligned}

Denominator


p\left(\underline{x}_{1: k} \mid \underline{y}_{1: k}\right)=p\left(\underline{x}_{k} \mid \underline{x}_{1: k - 1}, \underline{y}_{1: k}\right) \cdot p\left(\underline{x}_{1: k -1} \mid \underline{y}_{1: k - 1}\right)

Substituting numerator and denominator into $w_k^{e, i}$ gives


w_k^{e, i} = \frac{f\left(\underline{\hat{x}}_{1: k} \mid \underline{y}_{1 : k}\right)}{p\left(\underline{\hat{x}}_{1: k} \mid \underline{y}_{1 : k}\right)} \propto \frac{f\left(\underline{y}_{k} \mid \underline{x}_{k}^i\right) \cdot f\left(\underline{x}_{k}^i\mid \underline{x}_{k-1}^i\right)}{p\left(\underline{x}_{k}^i \mid \underline{x}_{1: k - 1}^i, \underline{y}_{1: k}\right)} \cdot \underbrace{\frac{f\left(\underline{x}_{1: k-1}^i \mid \underline{y}_{1: k-1}\right)}{p\left(\underline{x}_{1: k -1}^i \mid \underline{y}_{1: k - 1}\right)}}_{=w_{k-1}^{e, i}}

and normalize.
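Below is a minimal Python sketch of one SIS step for a scalar state. It assumes a hypothetical random-walk system model $f(x_k \mid x_{k-1}) = \mathcal{N}(x_k; x_{k-1}, 0.5^2)$ and a measurement model $f(y_k \mid x_k) = \mathcal{N}(y_k; x_k, 0.2^2)$. It also uses a Markov proposal $p(x_k \mid x_{k-1}, y_k)$ in place of $p(\underline{x}_{k} \mid \underline{x}_{1:k-1}, \underline{y}_{1:k})$.

The proposal sampler and its density are passed in as functions. Choosing the system model itself as the proposal makes the ratio $f(x_k \mid x_{k-1}) / p(x_k \mid x_{k-1}, y_k)$ equal to one. The update then reduces to the likelihood weighting of the naive filter.

```python
import random
from statistics import NormalDist

TRANSITION = NormalDist(0.0, 0.5)   # hypothetical system noise
MEASUREMENT = NormalDist(0.0, 0.2)  # hypothetical measurement noise


def transition_pdf(x_new, x_prev):
    return TRANSITION.pdf(x_new - x_prev)


def likelihood(y, x):
    return MEASUREMENT.pdf(y - x)


def sis_step(particles, weights, y, propose, proposal_pdf, rng):
    """x_k^i ~ p(. | x_{k-1}^i, y_k);
    w_k^i proportional to w_{k-1}^i f(y_k | x_k^i) f(x_k^i | x_{k-1}^i) / p(x_k^i | x_{k-1}^i, y_k)."""
    new_particles, unnormalized = [], []
    for x_prev, w_prev in zip(particles, weights):
        x_new = propose(x_prev, y, rng)
        ratio = likelihood(y, x_new) * transition_pdf(x_new, x_prev) / proposal_pdf(x_new, x_prev, y)
        new_particles.append(x_new)
        unnormalized.append(w_prev * ratio)
    total = sum(unnormalized)
    return new_particles, [u / total for u in unnormalized]


# Proposal = system model (ignores y_k)
def propose_from_transition(x_prev, y, rng):
    return x_prev + rng.gauss(0.0, 0.5)


def transition_proposal_pdf(x_new, x_prev, y):
    return transition_pdf(x_new, x_prev)


rng = random.Random(1)
prev_x, prev_w = [-1.0, 0.0, 1.0], [0.2, 0.3, 0.5]
new_x, new_w = sis_step(prev_x, prev_w, 0.4, propose_from_transition, transition_proposal_pdf, rng)
print(new_x, new_w)
```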

Special Proposals