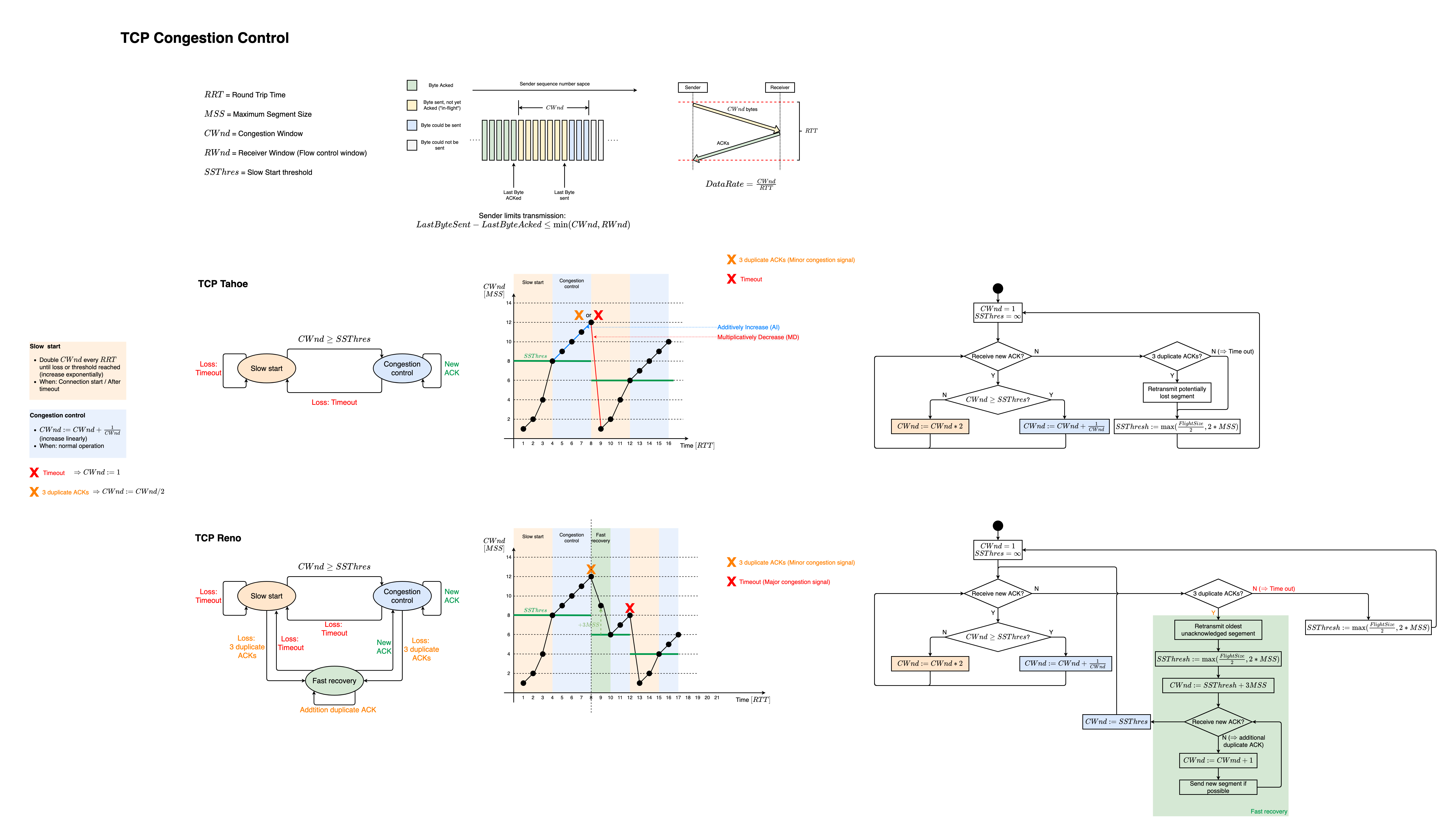

Internet Congestion Control

TCP congestion control summary

Focus on

- congestion control in the context of the Internet and its transport protocol TCP

- implicit window-based congestion control unless explicitly stated differently

Basics

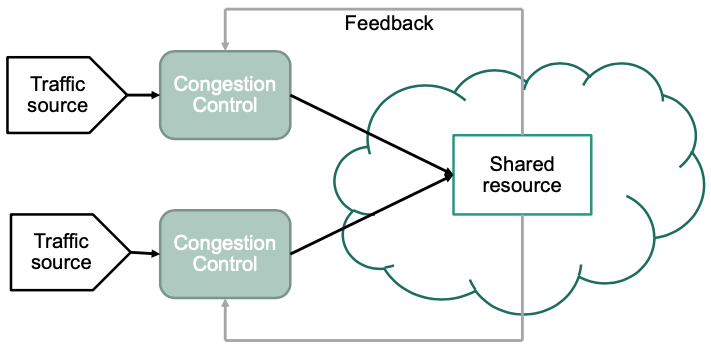

Shared (Network) Resources

- General problem: Multiple users use same resource

- E.g., multiple video streams use same network link

- 🎯 High level objective with respect to networks

- Provide good utilization of network resources

- Provide acceptable performance for users

- Provide fairness among users / data streams

- Mechanisms that deal with shared resources

- Scheduling

- Medium access control

- Congestion control

- …

Congestion Control Problem

- Adjusts load introduced to shared resource in order to avoid overload situations

- Utilizes feedback information (implicit or explicit)

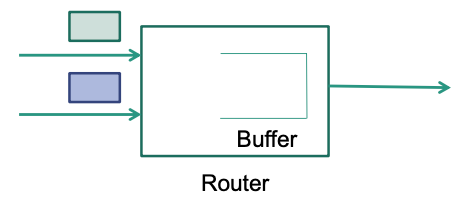

“Critical” Situations

Example 1

Router concurrently receives two packets from different input interfaces which are directed towards the same output interface. Only one of these packets can be sent at a time.

What to do with the other packet?

Buffer or

Drop

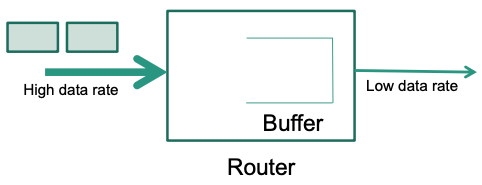

Example 2

Router has interfaces with different data rates

- Input interface has high data rate

- Output interface has low data rate

Two successive packets from the same sender or from different senders arrive at the input interface.

What to do with the second packet? The output interface is still busy sending the first packet while the second arrives.

- Buffer or

- Drop

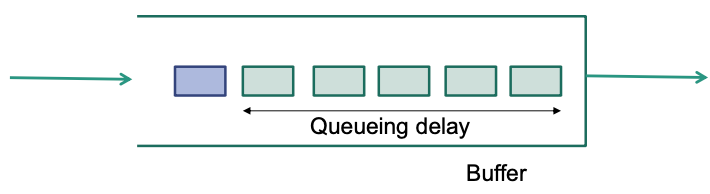

Buffer

Routers need buffers (queues) to cope with temporary traffic bursts

Packets that can NOT be transmitted immediately are placed in the buffer

If buffer is filled up, packets need to be dropped 🤪

Buffers add latency

Typically implemented as FIFO queues

Router can only start sending a queued packet after all packets in front of it have been sent

Five green packets introduce queueing delay for blue packet

End-to-end latency of a packet includes

- Propagation delay

- Transmission delay

- Queueing delay
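The three delay components can be added up for a single hop. The following is a minimal sketch, assuming a FIFO queue in which all packets have the same size and a signal propagation speed of 2e8 m/s; the function name and parameters are illustrative and not part of the original notes.

```python
def hop_latency(distance_m, packet_bits, link_rate_bps, packets_ahead,
                propagation_speed_mps=2e8):
    """Return (propagation, transmission, queueing) delay in seconds for one hop.

    Assumptions (illustrative): FIFO queue, equally sized packets,
    propagation speed of roughly 2/3 of the speed of light.
    """
    # Time the signal needs to travel along the link
    propagation = distance_m / propagation_speed_mps
    # Time needed to put all bits of the packet onto the link
    transmission = packet_bits / link_rate_bps
    # FIFO: every packet ahead in the queue must be transmitted first
    queueing = packets_ahead * packet_bits / link_rate_bps
    return propagation, transmission, queueing


def total_latency(*args, **kwargs):
    """Sum of the three delay components for one hop."""
    return sum(hop_latency(*args, **kwargs))
```

For example, five queued packets in front of a packet (as in the green/blue picture) add five transmission times of queueing delay.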

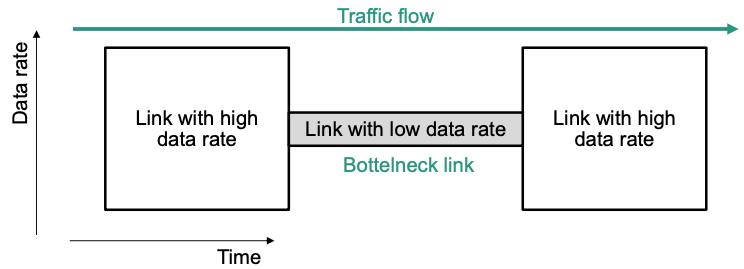

General Problem

Sender wants to send data through the network to the receiver

On every network path, the link with the lowest available data rate limits the maximum data rate that can be achieved end-to-end

- This link is called bottleneck link

- The maximum data rate of a link is called link capacity

🔴 Problem: sender can send more data than bottleneck link can handle

Sender can overload bottleneck link! 🤪

Sender has to adjust its sending rate

How to find the “optimal” sending rate?

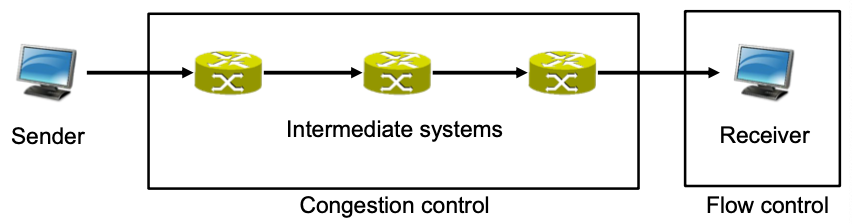

Congestion Control vs. Flow Control

- Flow control

- Bottleneck is located at receiver side

- Receiver cannot cope with desired data rate of sender

- Congestion control

- Bottleneck is located in the network

- Bottleneck link does not provide sufficient available data rate

- Leads to congested router / intermediate system

Congestion Collapse

Throughput vs. Goodput

Throughput: Amount of network layer data delivered in a time interval

- E.g., 1 Gbit/s

- Counts everything including retransmissions

- The aggregated amount of data that flows through the router/link

Goodput: “Application-level” throughput

- Amount of application data delivered in a time interval

- Retransmissions at the transport layer do NOT count

- Packets dropped in transmission do NOT count

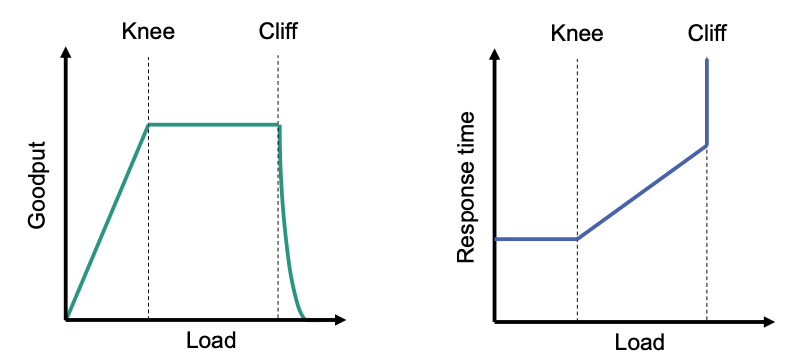

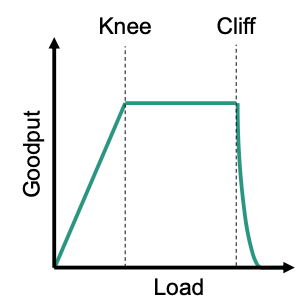

Observation

Load is small (below network capacity): network keeps up with load

Load reaches network capacity (knee)

Goodput stops increasing, buffers build up, end-to-end latency increases

Network is congested!

Load increases beyond cliff

- Packets start to be dropped, goodput drastically decreases: congestion collapse

- Load refers to aggregated network layer traffic that is introduced by all active data streams. This includes TCP retransmissions.

- Network capacity refers to maximum load that network can handle.

How Could Congestion Collapse Happen?

Congestion due to

- Single TCP connection

- Exceeds available capacity at bottleneck link

- Prerequisite: flow control window is large enough

- Multiple TCP connections

- Aggregated load exceeds available capacity

- Single TCP connection has no knowledge about other TCP connections

Knee and Cliff

Keep traffic load around knee

- Good utilization of network capacity

- Low latencies

- Stable goodput

Prevent traffic from going over the cliff

- High latencies

- High packet losses

- Highly decreased goodput

Challenge of Congestion Control

- Challenge: Find “optimal” sending rate

- Usually, sender has NO global view of the network

- NO trivial answer

- Lots of algorithms for congestion control

Types of Congestion Control

Window-based Congestion Control

Congestion Control Window (𝐶𝑊𝑛𝑑)

- Determines maximum number of unacknowledged packets allowed per TCP connection

- Assumes that packets are acknowledged by receiver

- Basic window mechanism is similar to sliding window as applied for flow control purposes

- Adjusts sending rate of source to bottleneck capacity (self-clocking)

Rate-based Congestion Control

Controls sending rate, no congestion control window

- Implemented by timers that determine inter packet intervals

- High precision required

- 🔴 Problem: NO comparable cut-off mechanism, such as missing acknowledgements

- Sender keeps sending even in case of congestion

- Needed in case no acknowledgements are used

- E.g., UDP

Implicit vs. Explicit Congestion Signals

Implicit

- Without dedicated support of the network

- Implicit congestion signals

- Timeout of retransmission timer

- Receipt of duplicate acknowledgements

- Round-Trip Time (RTT) variation

Explicit

- Nodes inside the network indicate congestion

On the Internet

- Usually NO support for explicit congestion signals

- Congestion control must work with implicit congestion signals only

End-to-end vs. Hop-by-hop

End-to-end

- Congestion control operates on an end system basis

- Nodes inside the network are NOT involved

Hop-by-hop

- Congestion control operates on a per hop basis

- Nodes inside the network are actively involved

Improved Versions of TCP

🎯 Goal

- Estimate available network capacity in order to avoid overload situations

- Provide feedback (congestion signal)

- Limit the traffic introduced into the network accordingly

- Apply congestion control

TCP Tahoe

TCP Recap

- Connection establishment

- 3-way handshake, full-duplex connection

- Connection termination

- Separately for each direction of transmission

- 4 way handshake

- Data transfer

- Byte-oriented sequence numbers

- Go-back-N

- Positive cumulative acknowledgements

- Timeout

- Flow control (sliding window)

TCP Tahoe in a Nutshell

Mechanisms used for congestion control

- Slow start

- Timeout

- Congestion avoidance

- Fast retransmit

Congestion signal

- Retransmission timeout or

- Receipt of duplicate acknowledgements (𝑑𝑢𝑝𝑎𝑐𝑘)

In case of congestion signal: slow start

The following must always be valid

$$LastByteSent - LastByteAcked \leq \min\{CWnd, RcvWindow\}$$

- $CWnd$: Congestion Control Window

- $RcvWindow$: Flow Control Window

Variables

- $CWnd$: Congestion window

- $SSThresh$: Slow Start Threshold

- Value of $CWnd$ at which TCP instance switches from slow start to congestion avoidance

Basic approach: AIMD (additive increase, multiplicative decrease)

- Additive increase of $CWnd$ after receipt of an acknowledgement

- Multiplicative decrease of $CWnd$ if packet loss is assumed (congestion signal)

Initial values

- $CWnd = 1$ MSS ($MSS$: Maximum Segment Size)

- Since RFC 2581: Initial Window $IW \leq 2 \cdot SMSS$ and $CWnd = IW$

- $SSThresh$ initially set to “infinite”

- Number of duplicate ACKs (congestion signal): 3

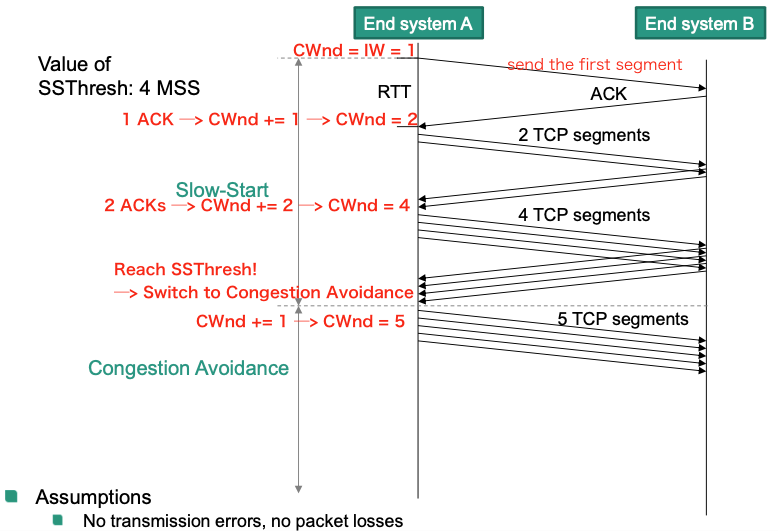

Algorithm

$CWnd < SSThresh$ and ACKs are being received: slow start

- Exponential increase of congestion window

- Upon receipt of an ACK: $CWnd = CWnd + 1$

$CWnd \geq SSThresh$ and ACKs are being received: congestion avoidance

- Linear increase of congestion window

- Upon receipt of an ACK: $CWnd = CWnd + \frac{1}{CWnd}$

Congestion signal: timeout or 3 duplicate acknowledgements: slow start

Congestion is assumed

Set $SSThresh = \max(FlightSize/2,\ 2 \cdot SMSS)$

- $FlightSize$: amount of data that has been sent but not yet acknowledged

- This amount is currently in transit

- Might also be limited due to flow control

Set or

On 3 duplicate ACKs: retransmission of potentially lost TCP segment
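The interplay of slow start, congestion avoidance and the reset on a congestion signal can be traced per round trip. The following is a minimal sketch, not a full TCP implementation. It assumes that the window is counted in MSS, that FlightSize equals CWnd, that the window doubles per RTT in slow start (one MSS per ACK) and grows by one MSS per RTT in congestion avoidance, and that a congestion signal resets the window to 1 MSS. The function name and the `loss_rounds` parameter are hypothetical.

```python
def tahoe_window_trace(rounds, loss_rounds, initial_ssthresh=float("inf")):
    """Return the congestion window (in MSS) at the start of each RTT round.

    loss_rounds: set of round indices in which a congestion signal
    (timeout or 3 duplicate ACKs) is observed. Illustrative model only.
    """
    cwnd = 1.0
    ssthresh = initial_ssthresh
    trace = []
    for rnd in range(rounds):
        trace.append(cwnd)
        if rnd in loss_rounds:
            # Congestion signal: remember half of the data in flight
            # (FlightSize assumed equal to CWnd) and restart slow start
            ssthresh = max(cwnd / 2, 2.0)
            cwnd = 1.0
        elif cwnd < ssthresh:
            # Slow start: +1 MSS per ACK, i.e. doubling per RTT,
            # capped at SSThresh where congestion avoidance takes over
            cwnd = min(2 * cwnd, ssthresh)
        else:
            # Congestion avoidance: +1/CWnd per ACK, i.e. +1 MSS per RTT
            cwnd += 1.0
    return trace
```

Calling `tahoe_window_trace(10, {4})` shows the typical shape: exponential growth, a drop to 1 MSS after the congestion signal, exponential growth up to the new threshold and linear growth afterwards.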

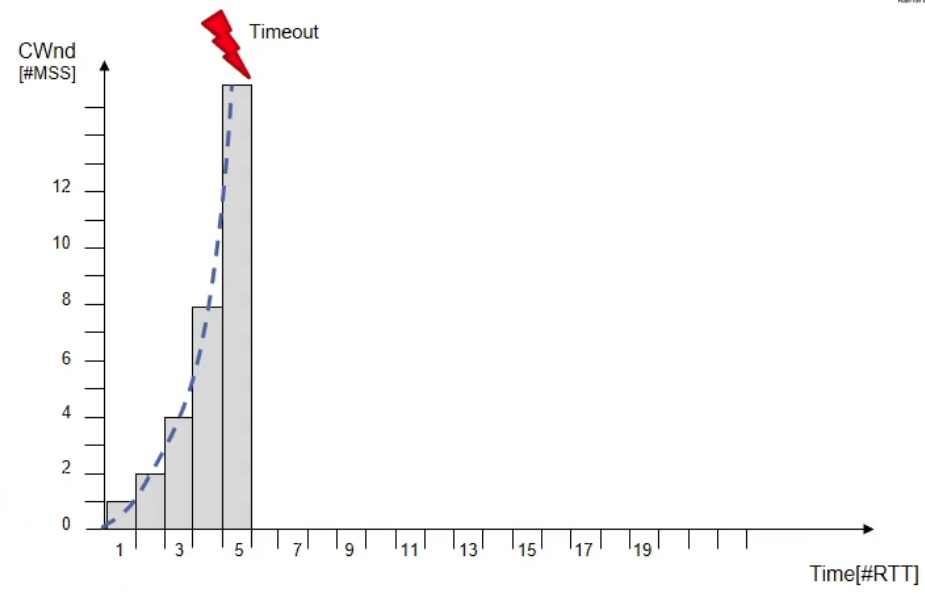

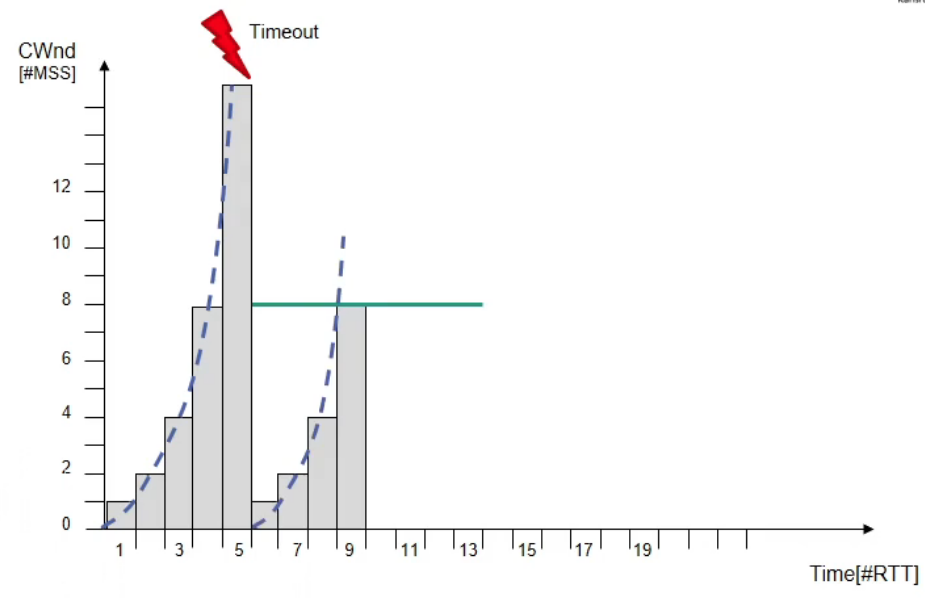

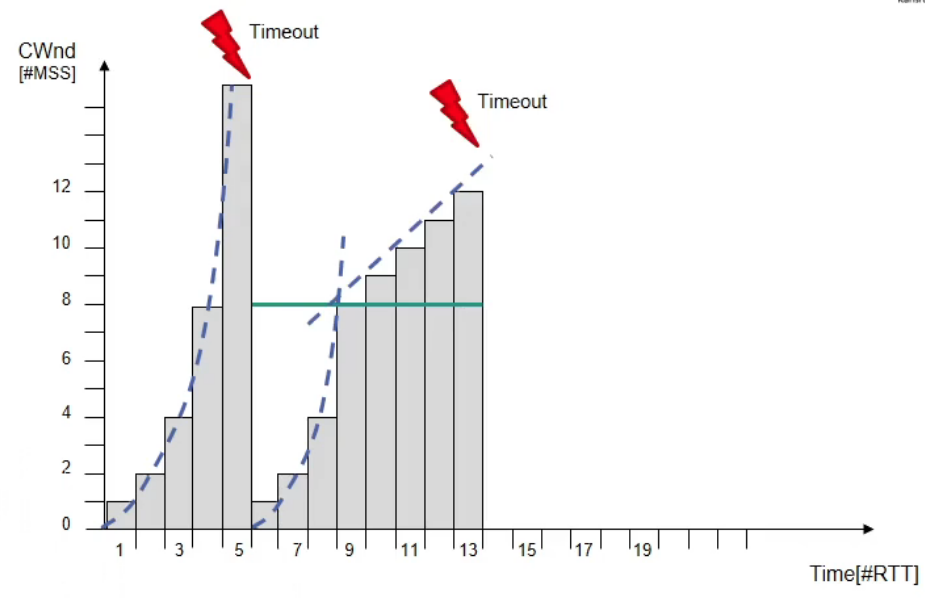

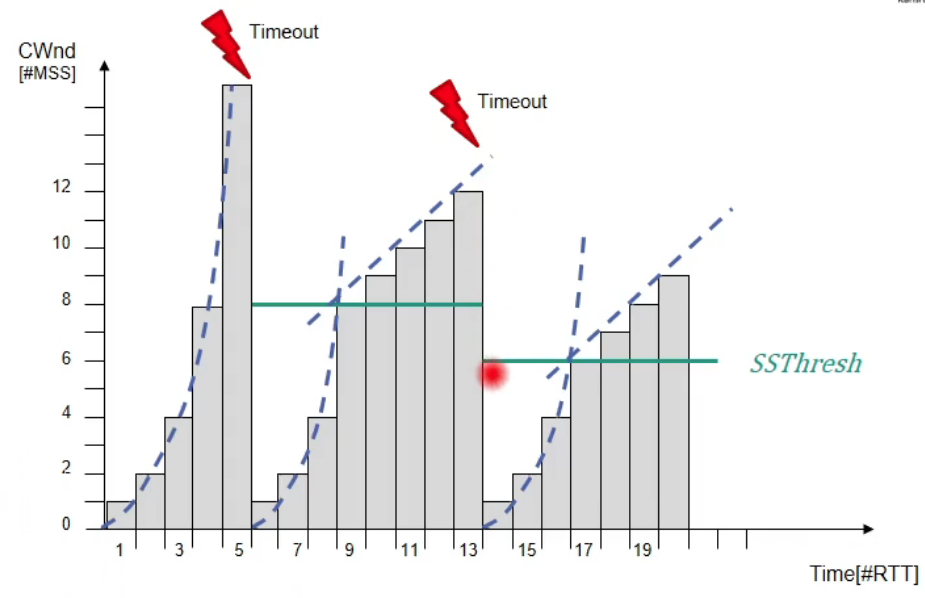

Example

Evolution of Congestion Window

Assumptions

- No transmission errors, no packet losses

- All TCP segments and acknowledgements are transmitted/received within single RTT

- Flight-size equals CWnd

- Congestion signal occurs during RTT

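Initialize $CWnd = 1$ MSS and $SSThresh$ = “infinite”

The $CWnd$ grows in “slow start” mode until a timeout occurs.

This is a congestion signal. So we go back to “slow start”

Set $SSThresh = \max(FlightSize/2,\ 2 \cdot SMSS)$

In this case, $FlightSize = CWnd$ (see assumptions).

So $SSThresh = CWnd/2$, with $CWnd$ taken at the time of the timeout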

Set or

As soon as $CWnd \geq SSThresh$: switch to “congestion avoidance”!

During congestion avoidance, another timeout occurs.

We just perform the same handling as above.

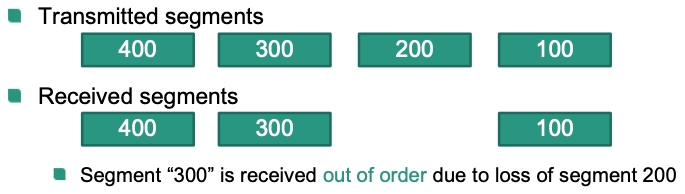

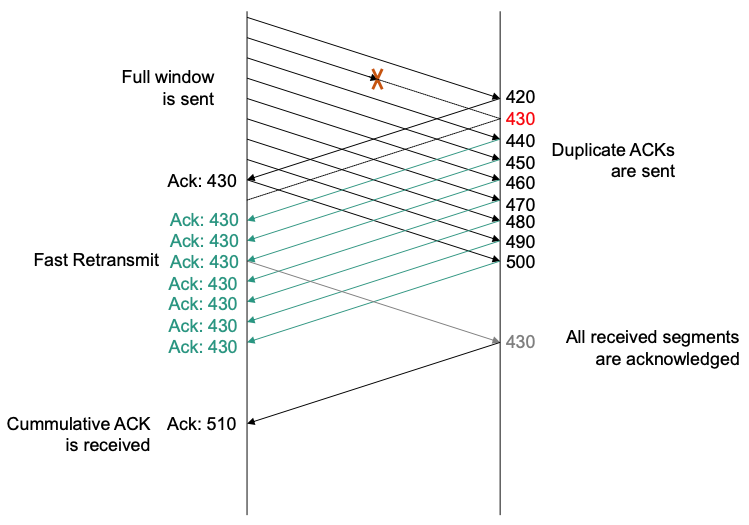

Fast Retransmit

Assume the following scenario

(Note: Not every segment that is received out of order indicates congestion. E.g., only one segment is dropped, otherwise data transfer is ok)

What would happen? Wait until retransmission timer expires, then retransmission

Waiting time is longer than a round trip time (RTT). It will take a long time! 🤪

Our goal is faster reaction

Retransmission after receipt of a pre-defined number of duplicate ACKs

Much faster than waiting for expiration of retransmission timer

Example: suppose the pre-defined number of duplicate ACKs is 3

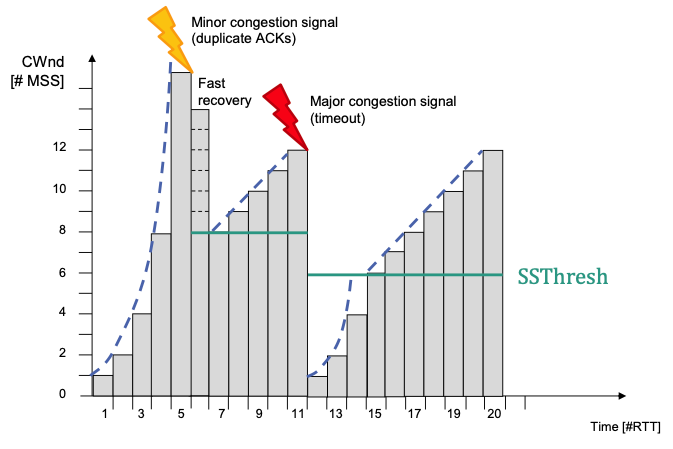

TCP Reno

Differentiation between

- Major congestion signal: Timeout of retransmission timer

- Minor congestion signal: Receipt of duplicate ACKs

In case of a major congestion signal

- Reset to slow start as in TCP Tahoe

In case of minor congestion signal

No reset to slow start

- Receipt of duplicate ACK implies successful delivery of new segments, i.e., packets have left the network

- New packets can also be injected in the network

In addition to the mechanisms of TCP Tahoe: fast recovery

- Controls sending of new segments until receipt of a non-duplicate ACK

Fast Recovery

- Starting condition: Receipt of a specified number of duplicate ACKs

- Usually set to 3 duplicate ACKs

- 💡 Idea: New segments should continue to be sent, even if packet loss is not yet recovered

- Self-clocking continues

- Reaction

- Reduce network load by halving the congestion window

- Retransmit first missing segment (fast retransmit)

- Consider continuous activity, i.e., further received segments while no new data is acknowledged

- Increase congestion window by number of duplicate ACKs (usually 3)

- Further increase after receipt of each additional duplicate ACK

- Receipt of new ACK (new data is acknowledged)

- Set congestion window to its value at the beginning of fast recovery

In Congestion Avoidance

- If timeout: slow start

- Set $SSThresh = \max(FlightSize/2,\ 2 \cdot SMSS)$ and $CWnd = 1$ MSS

- If 3 duplicate ACKs: fast recovery

- Retransmission of oldest unacknowledged segment (fast retransmit)

- Set $SSThresh = \max(FlightSize/2,\ 2 \cdot SMSS)$

- Set $CWnd = SSThresh + 3 \cdot MSS$

- Receipt of additional duplicate ACK

- Send new, i.e., not yet sent segments (if available)

- Receipt of a “new” ACK: congestion avoidance
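The reaction of TCP Reno to the two kinds of congestion signals can be written as a small event handler. The following is a minimal sketch along the lines of RFC 5681, assuming that the window is counted in MSS and that FlightSize equals CWnd. The class and method names are hypothetical, and sending or retransmitting segments is left out.

```python
class RenoSender:
    """Window bookkeeping of a TCP Reno sender (illustrative, units: MSS)."""

    DUP_ACK_THRESHOLD = 3

    def __init__(self):
        self.cwnd = 1.0
        self.ssthresh = float("inf")
        self.dup_acks = 0
        self.in_fast_recovery = False

    def on_new_ack(self):
        if self.in_fast_recovery:
            # Loss repaired: deflate window and continue in congestion avoidance
            self.cwnd = self.ssthresh
            self.in_fast_recovery = False
        elif self.cwnd < self.ssthresh:
            self.cwnd += 1.0              # slow start
        else:
            self.cwnd += 1.0 / self.cwnd  # congestion avoidance
        self.dup_acks = 0

    def on_dup_ack(self):
        if self.in_fast_recovery:
            # Each further duplicate ACK means a segment has left the network
            self.cwnd += 1.0
            return
        self.dup_acks += 1
        if self.dup_acks == self.DUP_ACK_THRESHOLD:
            # Minor congestion signal: fast retransmit, then fast recovery
            self.ssthresh = max(self.cwnd / 2, 2.0)
            self.cwnd = self.ssthresh + self.DUP_ACK_THRESHOLD
            self.in_fast_recovery = True

    def on_timeout(self):
        # Major congestion signal: back to slow start as in TCP Tahoe
        self.ssthresh = max(self.cwnd / 2, 2.0)
        self.cwnd = 1.0
        self.dup_acks = 0
        self.in_fast_recovery = False
```

The temporary inflation by the number of duplicate ACKs is what allows new segments to be sent while the loss is still being repaired.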

Evolution of Congestion Window with TCP Reno

Analysis of Improvements

- After observing congestion collapses, the following mechanisms (among others) were introduced to the original TCP (RFC 793)

Slow-Start

Round-trip time variance estimation

Exponential retransmission timer backoff

Dynamic window sizing on congestion

More aggressive receiver acknowledgement policy

- 🎯 Goal: Enforce packet conservation in order to achieve network stability

Self Clocking

Recap: TCP uses window-based flow control

Basic assumption

- Complete flow control window in transit

- In TCP: receive window

- Bottleneck link with low data rate on the path to the receiver


Basic scenario

Conservation of Packets

🎯 Goal: get TCP connection in equilibrium

Full window of data in transit

“Conservative”: NO new segment is injected into the network before an old segment leaves the network

A system with this property should be robust in the face of congestion

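Three ways for packet conservation to fail

- The connection does not get to equilibrium

- A sender injects a new packet before an old packet has exited

- The equilibrium cannot be reached because of resource limits along the path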

Slow Start

🎯 Goal: bring TCP connection into equilibrium

Connection has just started or

Restart after assumption of (major) congestion

🔴 Problem: get the „clock“ started (At the beginning of a connection there is no „clock“ available.)

💡 Basic idea (per TCP connection)

- Do not send complete receive window (flow control) immediately

- Gradually increase number of segments that can be sent without receiving an ACK

- Increase the amount of data that can be in transit (“in-flight”)

Approach

Apply congestion window, in addition to receive window

- Minimum of congestion and receive window can be sent

- Congestion Window: $CWnd$

- Receive Window: $RcvWindow$

New connection or congestion assumed

Reset of congestion window: $CWnd = 1$

Incoming ACK for sent (not retransmitted) segment

- Increase congestion window by one: $CWnd = CWnd + 1$

Leads to exponential growth of 𝐶𝑊𝑛𝑑

- Sending rate is at most twice as high as the bottleneck capacity!
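Why an increase of one segment per ACK leads to exponential growth can be seen by counting ACKs per round trip. The following is a minimal sketch, assuming that every segment sent in one round is acknowledged within that round and that no losses occur; the function name and the `rcv_window` parameter are illustrative.

```python
def slow_start_rounds(num_rtts, rcv_window=float("inf")):
    """Return the number of segments sent in each RTT during slow start."""
    cwnd = 1  # reset value for a new connection, in segments
    sent_per_rtt = []
    for _ in range(num_rtts):
        # Only the minimum of congestion and receive window may be sent
        window = min(cwnd, rcv_window)
        sent_per_rtt.append(window)
        # One ACK per segment sent, each ACK opens the window by one segment
        for _ack in range(window):
            cwnd += 1
    return sent_per_rtt
```

Each round returns as many ACKs as segments were sent, so the window doubles per RTT until the receive window (or a congestion signal) limits it.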

Retransmission Timer

Assumption: Complete receive window in transit

Alternative 1: ACK received

- A segment was delivered and, thus, exited the network: conservation of packets is fulfilled

Alternative 2: retransmission timer expired

Segment is dropped in the network: conservation of packets is fulfilled

Segment is delayed but not dropped: conservation of packets NOT fulfilled

Too short retransmission timeout causes connection to leave equilibrium

Good estimation of Round Trip Time (RTT) essential for a good timer value!

Value too small: unnecessary retransmissions

Value too large: slow reaction to packet losses

Estimation of Round Trip Time

Timer-based RTT measurement

- Timer resolution varies (up to 500 ms)

- Requirements regarding timer resolutions vary

SampleRTT

- Time interval between transmission of a segment and reception of corresponding acknowledgement

- Single measurement

- Retransmissions are ignored

EstimatedRTT

- Smoothed value across a number of measurements

- Observation: measured values can fluctuate heavily

Apply exponential weighted moving average (EWMA)

- Influence of each value becomes gradually less as it ages

- Unbiased estimator for average value

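$$EstimatedRTT = (1 - \alpha) \cdot EstimatedRTT + \alpha \cdot SampleRTT$$

(Typical value for $\alpha$: 0.125)

Derive value for retransmission timeout (RTO)

$$RTO = \beta \cdot EstimatedRTT$$

- Recommended value for $\beta$: 2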

Estimation of Deviation

🎯 Goal: Avoid the observed occasional retransmissions

Observation: Variation of RTT can greatly increase in higher loaded networks

- Consequently, requires higher “safety margin”

- Estimation error: difference between measured/sampled and estimated RTT

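Computation

$$Deviation = (1 - \delta) \cdot Deviation + \delta \cdot |SampleRTT - EstimatedRTT|$$

$$RTO = EstimatedRTT + 4 \cdot Deviation$$

- Recommended values (as in RFC 6298): gain $1/8$ for $EstimatedRTT$, gain $\delta = 1/4$ for $Deviation$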
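The smoothed RTT and its deviation can be maintained with two exponentially weighted moving averages. The following is a minimal sketch, assuming the gains and the safety factor of RFC 6298 (1/8 for the RTT estimate, 1/4 for the deviation, factor 4 on the deviation) and its initialisation of the deviation with half of the first sample. The class name and parameter names are hypothetical, and samples of retransmitted segments are assumed to be filtered out by the caller.

```python
class RttEstimator:
    """EWMA-based RTT and deviation estimator (illustrative sketch)."""

    def __init__(self, first_sample, alpha=0.125, delta=0.25, k=4.0):
        self.alpha = alpha  # gain of the RTT estimate (assumed 1/8)
        self.delta = delta  # gain of the deviation estimate (assumed 1/4)
        self.k = k          # safety factor on the deviation (assumed 4)
        self.estimated_rtt = first_sample
        self.deviation = first_sample / 2

    def rto(self):
        """Retransmission timeout: estimate plus safety margin."""
        return self.estimated_rtt + self.k * self.deviation

    def update(self, sample_rtt):
        """Feed one SampleRTT (seconds) and return the new RTO."""
        # Estimation error relative to the estimate before this sample
        difference = sample_rtt - self.estimated_rtt
        self.estimated_rtt += self.alpha * difference
        self.deviation += self.delta * (abs(difference) - self.deviation)
        return self.rto()
```

A single outlier moves the estimate only slightly, but it widens the safety margin, which is what avoids spurious retransmissions in heavily loaded networks.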

Multiple Retransmissions

How large should the time interval be between two subsequent retransmissions of the same segment?

Approach: Exponential backoff

After each new retransmission RTO doubles: $RTO = 2 \cdot RTO$

- A maximum value should be applied. It should be $\geq 60$ seconds

To which segment does the received ACK belong – to the original segment or to the retransmission?

- Approach: Karn's Algorithm

- ACKs for retransmitted segments are not included into the calculation of $EstimatedRTT$ and $Deviation$

- Backoff is calculated as before

- Timeout value is set to the value calculated by backoff algorithm until an ACK to a non-retransmitted segment is received

- Then original algorithm is reactivated
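Exponential backoff and Karn's algorithm can be combined in a few lines. The following is a minimal sketch, assuming an upper bound of exactly 60 seconds for the timer; the class name, the `was_retransmitted` flag and the `fresh_rto` argument (the value computed from the RTT estimate) are hypothetical.

```python
class RetransmissionTimer:
    """RTO handling with exponential backoff and Karn's algorithm (sketch)."""

    MAX_RTO = 60.0  # seconds, assumed upper bound

    def __init__(self, rto):
        self.rto = rto

    def on_timeout(self):
        """Double the RTO after each retransmission, up to the maximum."""
        self.rto = min(2 * self.rto, self.MAX_RTO)
        return self.rto

    def on_ack(self, was_retransmitted, fresh_rto):
        """Return True if the ACK may be used as an RTT sample.

        ACKs for retransmitted segments are ambiguous, so they are ignored
        and the backed-off RTO stays in place. The first ACK for a segment
        that was sent only once reactivates the normal RTO computation.
        """
        if was_retransmitted:
            return False
        self.rto = fresh_rto
        return True
```

Keeping the backed-off value until an unambiguous ACK arrives prevents the timer from falling back to a value that has just proven to be too short.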

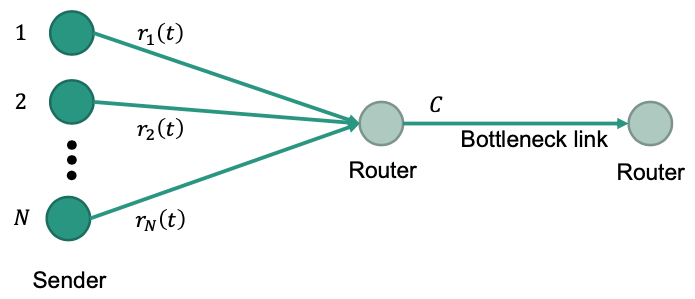

Congestion Avoidance

Consider multiple concurrent TCP connections

Assumption: TCP connection operates in equilibrium

Packet loss is with a high probability caused by a newly started TCP connection

- New connection requires resources on bottleneck router/link

Load of already existing TCP connection(s) needs to be reduced

Basic components

Implicit congestion signals

- Retransmission timeout

- Duplicate acknowledgements

Strategy to adjust traffic load: AIMD

- Additively increase load if no congestion signal is experienced

- On acknowledgement received: $CWnd = CWnd + \frac{1}{CWnd}$

- Multiplicatively decrease load in case a congestion signal was experienced

- On retransmission timeout: $$CWnd = \gamma \cdot CWnd, \quad 0 < \gamma < 1$$

- In TCP Tahoe: $\gamma = 1/2$

Optimization Criteria

Basic Scenario

- $N$ senders that use the same bottleneck link

Data rate of sender $i$: $r_i(t)$

Capacity of bottleneck link: $C$

- Bottleneck link: Link with lowest available data rate on the path to the receiver

Network-limited sender

- Assume that the sender always has data to send and data are sent as quickly as possible

- Sender can send a full window of data

- Congestion control limits the data rate of such a sender to the available capacity at the bottleneck link

Application-limited sender

- Data rate of the sender is limited by the application and not by the network

- Sender sends less data than allowed by the current window

Efficiency

- Closeness of the total load on the bottleneck link to its link capacity

- $\sum_{i=1}^{N} r_i(t)$ should be as close to $C$ as possible, i.e., close to the knee

- Overload and underload are not desirable

Fairness

All senders that share the bottleneck link get a fair allocation of the bottleneck link capacity

Examples

Jain's fairness index

Quantify “amount” of unfairness

Fairness index

$$F(r_1, \dots, r_N) = \frac{\left(\sum_{i=1}^{N} r_i\right)^2}{N \cdot \sum_{i=1}^{N} r_i^2}$$

- Totally fair allocation has fairness index of $1$ (i.e., all $r_i$ are equal)

- Totally unfair allocation has fairness index of $1/N$ (i.e., one user gets entire capacity)
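Jain's fairness index is the squared sum of the rates divided by the number of senders times the sum of the squared rates. The following is a minimal sketch of that formula; the function name is illustrative.

```python
def jain_fairness_index(rates):
    """Jain's fairness index: (sum r_i)^2 / (N * sum r_i^2)."""
    n = len(rates)
    total = sum(rates)
    squares = sum(r * r for r in rates)
    if n == 0 or squares == 0:
        raise ValueError("at least one non-zero rate is required")
    return total * total / (n * squares)
```

The index is 1 if all senders get the same rate and 1/N if a single sender gets everything, which matches the two extreme cases above.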

Max-min fairness

Situation

- Users share resource. Each user has an equal right to the resource

- But: some users intrinsically demand fewer resources than others (E.g., in case of application-limited senders)

Intuitive allocation of fair share

- Allocates users with a “small” demand what they want

- Equally distributes unused resources to “big” users

💡 Max-min fair allocation

Resources are allocated in order of increasing demand

No source gets a resource share larger than its demand

Sources with unsatisfied demands get an equal share of the resource

Implementation

- $N$ senders with demanded sending rates $d_1, d_2, \dots, d_N$

- Without loss of generality: $d_1 \leq d_2 \leq \dots \leq d_N$

- $C$: capacity

- Give $C/N$ to sender with smallest demand $d_1$

- In case this is more than demanded, then $C/N - d_1$ is still available to others

- $C/N - d_1$ is equally distributed to the others, so each gets $C/N + \frac{C/N - d_1}{N - 1}$
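The allocation procedure can be sketched as a loop over the senders in order of increasing demand. This is a minimal illustration, assuming that demands and capacity use the same unit; the function name is hypothetical.

```python
def max_min_allocation(demands, capacity):
    """Return the max-min fair share for each sender (same order as demands)."""
    allocation = [0.0] * len(demands)
    remaining = float(capacity)
    # Serve senders in order of increasing demand
    order = sorted(range(len(demands)), key=lambda i: demands[i])
    for position, i in enumerate(order):
        # Equal share of what is left among the senders not yet served
        fair_share = remaining / (len(order) - position)
        # No sender gets more than it demands
        allocation[i] = min(demands[i], fair_share)
        remaining -= allocation[i]
    return allocation
```

For demands of 2, 2.6, 4 and 5 on a link with capacity 10, the two small demands are fully satisfied and the two large ones share the rest equally with 2.7 each.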

Example

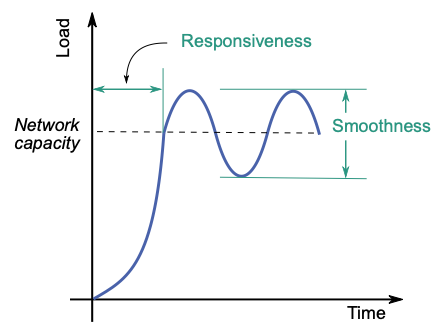

Convergence

- Responsiveness: Speed with which the system gets to the equilibrium rate at the knee after starting from any starting state

- May oscillate around goal (= network capacity)

- Smoothness: Size of oscillations around network capacity at steady state

(Smaller is better in both cases)

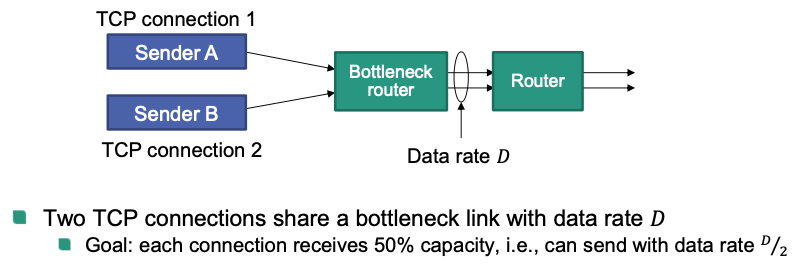

On Fairness

How to divide resources among TCP connections?

Strive for fair allocation 💪

🎯 Goal: all TCP connections receive equal share of bottleneck resource

- the share should be non-zero

- equal share is not ideal for all applications 🤔

Example: $N$ TCP connections share the same bottleneck; each TCP connection receives $1/N$-th of the bottleneck capacity

Observation

“Greedy” user: opens multiple TCP connections concurrently

Example

Link with capacity $C$, two users, one connection per user

Each user gets capacity $C/2$

Link with capacity $C$, two users, user 1 with a single connection, user 2 with nine connections

User 1 can use $C/10$, user 2 can use $9C/10$

“Greedy” receiver

- Can send several ACKs per received segment

- Can send ACKs faster than it receives segments

Additive Increase Multiplicative Decrease

General feedback control algorithm

Applied to congestion control

Additive increase of data rate until congestion

Multiplicative decrease of data rate in case of congestion signal

Converges to equal share of capacity at bottleneck link

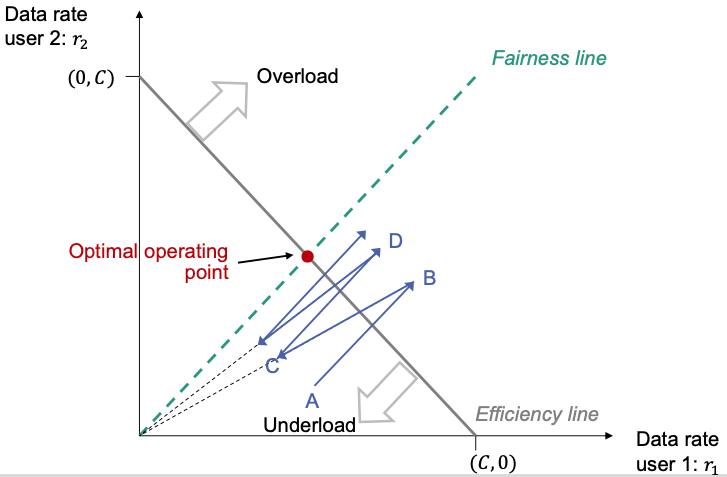

AIMD: Fairness

- Network with two sources that share a bottleneck link with capacity $C$

- 🎯 Goal: bring system close to optimal point

Efficiency line

- $r_1 + r_2 = C$ holds for all points on the line

- Points under the line mean underload. Control decision: increase rate

- Points above the line mean overload. Control decision: decrease rate

Fairness line

- All allocations with fair allocation, i.e. $r_1 = r_2$

- Multiplying both rates with the same factor $b$ does not change fair allocation: $b \cdot r_1 = b \cdot r_2$

Optimal operating point

- Intersection of efficiency line and fairness line: point $(C/2, C/2)$

Optimality of AIMD

Additive increase

- Resource allocation of both users is increased by the same amount

- In the graph: moving up along a 45-degree line

Multiplicative decrease

Move down along the line that connects to the origin

Point of operation iteratively moves closer to optimal operating point 👏
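The convergence towards the fair share can be reproduced numerically. The following is a minimal sketch, assuming that both sources see the same congestion signal at the same time and react synchronously; the function name, the step model and the default parameters are illustrative.

```python
def aimd_two_sources(r1, r2, capacity, steps, increase=1.0, decrease=0.5):
    """Simulate two synchronised AIMD sources sharing one bottleneck link."""
    for _ in range(steps):
        if r1 + r2 > capacity:
            # Above the efficiency line: multiplicative decrease,
            # moves the point towards the origin and halves r1 - r2
            r1 *= decrease
            r2 *= decrease
        else:
            # Below the efficiency line: additive increase,
            # moves the point along a 45-degree line, r1 - r2 is unchanged
            r1 += increase
            r2 += increase
    return r1, r2
```

Additive increase leaves the difference between the two rates unchanged while every multiplicative decrease shrinks it, so a very unequal start such as `(1, 40)` ends up close to the fairness line.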

Periodic Model

Performance metrics of interest

- Throughput: How much data can be transferred in which time interval?

- Latency: How high is the experienced delay?

- Completion time: How long until the transfer of an object/file is finished?

Variables

- $X$: Sending rate measured in segments per time interval

- $RTT$: Round trip time [seconds]

- $p$: Loss probability of a segment

- $MSS$: Maximum segment size [bit]

- $W$: Value of a congestion window [MSS]

- $D$: Data rate measured in bit per second [bit/s]

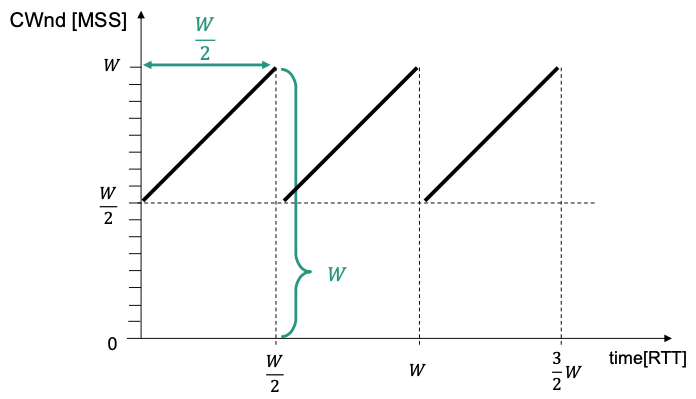

Periodic Model

Simple model – strong simplifications

🎯 Goals

Model long-term steady state behavior of TCP

Evaluate achievable throughput of a TCP connection under certain network conditions

Basic assumptions

- Network has constant loss probability $p$

- Observed TCP connection does not influence $p$

Further simplification: periodic losses

For an individual connection segment losses are equally spaced

Link delivers $1/p$ segments followed by a segment loss

Additional simplifications / model assumptions

Slow start is ignored

Congestion window increases linearly (congestion avoidance)

RTT is constant

Losses are detected using duplicate ACKs (No timeouts)

Retransmissions are not modelled

- Go-Back-N is not modelled

Connection only limited by $CWnd$

- Flow control (receive window) is never a limiting factor

Always $MSS$ sized segments are sent

Under given assumptions we have the diagram:

- Progress of CWnd: Perfect periodic saw tooth curve. Note: Here $W$ is unitless.

Data rate when segment loss occurs?

How long until congestion window reaches 𝑊 again?

Average data rate of a TCP connection?

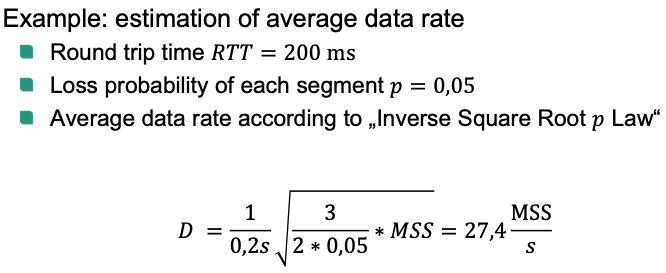

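Step 1: Determine $W$ as a function of $p$

Minimal value of congestion window: $W/2$

Congestion window opens by one segment per RTT

- Duration of a period: $W/2$ round trip times

Number of delivered segments within one period

Corresponds to the area under the saw tooth curve

$$\left(\frac{W}{2}\right)^2 + \frac{1}{2}\left(\frac{W}{2}\right)^2 = \frac{3}{8} W^2$$

According to the assumptions, $1/p$ segments are delivered per period

$$\frac{3}{8} W^2 = \frac{1}{p} \quad \Rightarrow \quad W = \sqrt{\frac{8}{3p}}$$

Step 2: Determine data rate $D$ as a function of $p$

Average data rate

$$D = \frac{\text{data per period}}{\text{duration of a period}}$$

We have $\frac{1}{p} \cdot MSS$ bit per period (by assumption) and the period duration is $\frac{W}{2} \cdot RTT$ [s]

$$D = \frac{\frac{1}{p} \cdot MSS}{\frac{W}{2} \cdot RTT}$$

In step 1 we have $W = \sqrt{\frac{8}{3p}}$

$$D = \sqrt{\frac{3}{2}} \cdot \frac{MSS}{RTT \cdot \sqrt{p}} \approx 1.22 \cdot \frac{MSS}{RTT \cdot \sqrt{p}}$$

This is called “Inverse Square-Root Law”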
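The result of the periodic model can be evaluated for concrete network conditions. The following is a minimal sketch of the two formulas of the model (window at loss $W = \sqrt{8/(3p)}$ and average data rate $\sqrt{3/2} \cdot MSS / (RTT \cdot \sqrt{p})$); the function names and the example values are illustrative.

```python
import math


def window_at_loss(p):
    """Congestion window W (in segments) at which the periodic loss occurs."""
    return math.sqrt(8.0 / (3.0 * p))


def tcp_data_rate(mss_bits, rtt_s, p):
    """Average data rate in bit/s according to the inverse square-root law."""
    return (mss_bits / rtt_s) * math.sqrt(3.0 / (2.0 * p))


def tcp_data_rate_from_period(mss_bits, rtt_s, p):
    """Same rate, computed as data per period divided by period duration."""
    segments_per_period = 1.0 / p
    period_duration = (window_at_loss(p) / 2.0) * rtt_s
    return segments_per_period * mss_bits / period_duration
```

With an MSS of 12000 bit, an RTT of 100 ms and a loss probability of 1 %, the model yields roughly 1.47 Mbit/s. Quadrupling the loss probability halves the achievable data rate.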

Example

Active Queue Management (AQM)

Simple Queue Management

- Buffer in the router is full

- Next segment must be dropped (tail drop)

- TCP detects congestion and backs off

- 🔴 Problems

- Synchronization: Segments of several TCP connections are dropped (almost) at the same time

- Nearly full buffer cannot absorb short bursts

Active Queue Management

Basic approach

Detect arising congestion within the network

Give early feedback to senders

- Intentionally trigger implicit congestion signal: packet loss

- Alternative: Send explicit congestion notification (ECN)

Routers drop (or mark) segments, before queue completely filled up

- Randomization: random decision on which segment to be dropped

Observations at the receiver on layer 4: Typically only a single segment is missing

AQM algorithms

- Random Early Detection (RED)

- Newer algorithms: CoDel, FQ-CoDel, PIE …

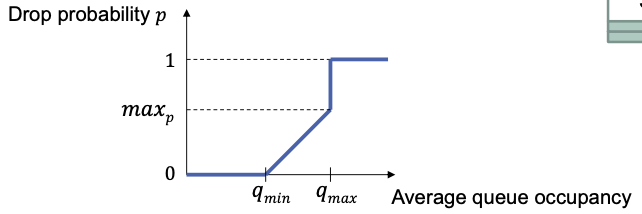

Random Early Detection

Approach

Average queue occupancy $< min_{th}$

- No drop of segments ($p = 0$)

$min_{th} \leq$ average queue occupancy $< max_{th}$

- Probability of dropping an incoming packet is linearly increased with average queue occupancy

Average queue occupancy $\geq max_{th}$

- Drop all segments ($p = 1$)
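The three regions of RED map directly to a piecewise function of the average queue occupancy. The following is a minimal sketch, assuming a lower threshold `min_th`, an upper threshold `max_th` and a maximum drop probability `max_p` that is reached just below the upper threshold, as in the classic RED proposal. All names and default values are illustrative.

```python
def red_drop_probability(avg_queue, min_th, max_th, max_p=0.1):
    """Drop probability for an incoming packet given the average queue length."""
    if avg_queue < min_th:
        # Queue is short: never drop
        return 0.0
    if avg_queue >= max_th:
        # Queue is too long: drop every incoming packet
        return 1.0
    # In between: probability grows linearly from 0 to max_p
    return max_p * (avg_queue - min_th) / (max_th - min_th)
```

A router would compare this probability with a random number for every arriving packet, which spreads the drops over different TCP connections and avoids the synchronization seen with tail drop.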

Explicit Congestion Notification

🎯 Goal: Send explicit congestion signal, avoid unnecessary packet drops

Approach

Enable AQM to explicitly notify about congestion

AQM does not have to drop packets to create implicit congestion signal

How to notify?

- Mark IP datagram, but do not drop it

- Marked IP datagram is forwarded to receiver

How to react?

- Marked IP datagram is delivered to receiver instance of IP

- Information must be passed to corresponding receiver instance of TCP

- TCP sender must be notified