Logistic Regression in NLP

In natural language processing, logistic regression is the baseline supervised machine learning algorithm for classification, and it is closely related to neural networks.

Generative and Discriminative Classifier

The most important difference between naive Bayes and logistic regression is that

- logistic regression is a discriminative classifier while

- naive Bayes is a generative classifier.

Consider a visual metaphor: imagine we’re trying to distinguish dog images from cat images.

- Generative model

  - Tries to understand what dogs look like and what cats look like.

  - You might literally ask such a model to ‘generate’, i.e. draw, a dog.

  - Given a test image, the system then asks whether it’s the cat model or the dog model that better fits (is less surprised by) the image, and chooses that as its label.

- Discriminative model

  - Only tries to learn to distinguish the classes.

  - So maybe all the dogs in the training data are wearing collars and the cats aren’t. If that one feature neatly separates the classes, the model is satisfied. If you ask such a model what it knows about cats, all it can say is that they don’t wear collars.

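More formally, recall that naive Bayes assigns a class $c$ to a document $d$ NOT by directly computing $P(c \mid d)$ but by computing a likelihood and a prior:

$$\hat{c} = \underset{c \in C}{\arg\max}\; \underbrace{P(d \mid c)}_{\text{likelihood}} \; \underbrace{P(c)}_{\text{prior}}$$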

Generative model (like naive Bayes)

- Makes use of the likelihood term $P(d \mid c)$

  - Expresses how to generate the features of a document if we knew it was of class $c$

Discriminative model

- Attempts to directly compute $P(c \mid d)$

- It will learn to assign a high weight to document features that directly improve its ability to discriminate between possible classes, even if it couldn’t generate an example of one of the classes.

Components of a probabilistic machine learning classifier

A training corpus of $m$ input/output pairs $(x^{(i)}, y^{(i)})$

A feature representation of the input

- For each input observation $x^{(i)}$, this will be a vector of features $[x_1, x_2, \dots, x_n]$

  - $x_i^{(j)}$: feature $i$ for input $x^{(j)}$

A classification function that computes $\hat{y}$, the estimated class, via $p(y \mid x)$

An objective function for learning, usually involving minimizing error on training examples

An algorithm for optimizing the objective function.

Logistic regression has two phases:

training: we train the system (specifically the weights $w$ and $b$) using stochastic gradient descent and the cross-entropy loss.

test: given a test example $x$, we compute $p(y \mid x)$ and return the higher-probability label, $y = 1$ or $y = 0$.

Classification: the sigmoid

Consider a single input observation $x = [x_1, x_2, \dots, x_n]$

The classifier output $y$ can be

- $1$: the observation is a member of the class

- $0$: the observation is NOT a member of the class

We want to know the probability $P(y = 1 \mid x)$ that this observation is a member of the class.

E.g.:

- The decision is “positive sentiment” versus “negative sentiment”

- the features represent counts of words in a document

- $P(y = 1 \mid x)$ is the probability that the document has positive sentiment, while $P(y = 0 \mid x)$ is the probability that the document has negative sentiment.

Logistic regression solves this task by learning, from a training set, a vector of weights $w$ and a bias term $b$.

Each weight $w_i$ is a real number associated with one of the input features $x_i$. The weight represents how important that input feature is to the classification decision, and can be

- positive (meaning the feature is associated with the class)

- negative (meaning the feature is NOT associated with the class).

E.g.: we might expect in a sentiment task the word awesome to have a high positive weight, and abysmal to have a very negative weight.

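The bias term $b$, also called the intercept, is another real number that’s added to the weighted inputs.

To make a decision on a test instance, we multiply each feature $x_i$ by its weight $w_i$, sum the weighted features, and add the bias $b$. The resulting single number $z$ expresses the weighted sum of the evidence for the class:

$$z = \left(\sum_{i=1}^{n} w_i x_i\right) + b = w \cdot x + b$$

(Note that $z$ is NOT a legal probability, since $z \in (-\infty, \infty)$.)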

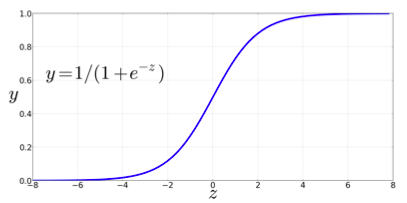

To create a probability, we’ll pass $z$ through the sigmoid function $\sigma(z)$ (also called the logistic function):

$$y = \sigma(z) = \frac{1}{1 + e^{-z}}$$

👍 Advantages of sigmoid:

- It takes a real-valued number and maps it into the range $(0, 1)$, which is just what we want for a probability.

- It is nearly linear around 0 but flattens toward the ends, so it squashes outlier values toward 0 or 1.

- It is differentiable, which is handy for learning.

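To make it a probability, we just need to make sure that the two cases, $P(y = 1)$ and $P(y = 0)$, sum to 1:

$$\begin{aligned} P(y = 1) &= \sigma(w \cdot x + b) = \frac{1}{1 + e^{-(w \cdot x + b)}} \\ P(y = 0) &= 1 - \sigma(w \cdot x + b) = \frac{e^{-(w \cdot x + b)}}{1 + e^{-(w \cdot x + b)}} \end{aligned}$$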

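Now we have an algorithm that, given an instance $x$, computes the probability $P(y = 1 \mid x)$. For a test instance $x$, we say yes if the probability $P(y = 1 \mid x)$ is more than 0.5, and no otherwise. We call 0.5 the decision boundary:

$$\hat{y} = \begin{cases} 1 & \text{if } P(y = 1 \mid x) > 0.5 \\ 0 & \text{otherwise} \end{cases}$$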
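The weighted sum, the sigmoid, and the 0.5 decision boundary can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation; the function names `sigmoid`, `predict_proba`, and `classify` are chosen here for readability and do not come from the text.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: maps any real z to a value between 0 and 1."""
    # Two branches keep exp() from overflowing when |z| is large.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(w, x, b):
    """P(y = 1 | x) = sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def classify(w, x, b, threshold=0.5):
    """Return 1 if P(y = 1 | x) is above the decision boundary, else 0."""
    return 1 if predict_proba(w, x, b) > threshold else 0
```

For instance, `classify([1.0, -1.0], [2.0, 1.0], 0.0)` computes $z = 1$ and returns `1`, because $\sigma(1) > 0.5$.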

Example: sentiment classification

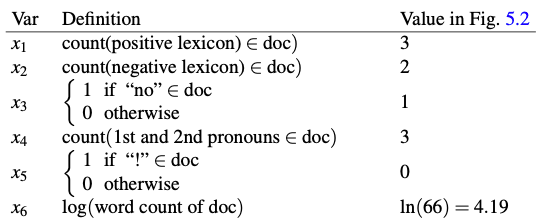

Suppose we are doing binary sentiment classification on movie review text, and we would like to know whether to assign the sentiment class + or − to a review document.

We’ll represent each input observation by the 6 features $x_1, \dots, x_6$ of the input shown in the following table.

Assume for the moment that we’ve already learned a real-valued weight for each of these features, and that the 6 weights corresponding to the 6 features are $w = [2.5, -5.0, -1.2, 0.5, 2.0, 0.7]$, while $b = 0.1$.

- The weight $w_1$, for example, indicates how important a feature the number of positive lexicon words (great, nice, enjoyable, etc.) is to a positive sentiment decision, while $w_2$ tells us the importance of negative lexicon words. Note that $w_1 = 2.5$ is positive, while $w_2 = -5.0$, meaning that negative words are negatively associated with a positive sentiment decision, and are about twice as important as positive words.

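Given these 6 features and the input review $x$, $P(+ \mid x)$ and $P(- \mid x)$ can be computed:

$$\begin{aligned} P(+ \mid x) &= P(y = 1 \mid x) = \sigma(w \cdot x + b) \\ P(- \mid x) &= P(y = 0 \mid x) = 1 - \sigma(w \cdot x + b) \end{aligned}$$

The review is classified as positive, since $P(+ \mid x) > 0.5$.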
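As a rough numeric walk-through of this computation, the sketch below plugs six weights, a bias, and a feature vector into $\sigma(w \cdot x + b)$. All numbers are illustrative assumptions, chosen so that negative lexicon words weigh about twice as much as positive ones; in particular, the feature vector `x` is hypothetical and is not read from the table.

```python
import math

# Illustrative values only: six weights, a bias, and a hypothetical
# feature vector (e.g. 3 positive words, 2 negative words, ...).
w = [2.5, -5.0, -1.2, 0.5, 2.0, 0.7]
b = 0.1
x = [3, 2, 1, 3, 0, 4.19]

z = sum(wi * xi for wi, xi in zip(w, x)) + b   # weighted evidence for "+"
p_pos = 1.0 / (1.0 + math.exp(-z))             # P(+ | x)
p_neg = 1.0 - p_pos                            # P(- | x)
label = "+" if p_pos > 0.5 else "-"

print(f"z = {z:.3f}, P(+|x) = {p_pos:.2f}, P(-|x) = {p_neg:.2f}, label = {label}")
```

With these assumed values the two negative-lexicon words cost 10 points of evidence, yet the remaining features still push $z$ slightly above zero, so the review ends up on the positive side of the decision boundary.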

Learning in Logistic Regression

Logistic regression is an instance of supervised classification in which we know the correct label $y$ (either 0 or 1) for each observation $x$.

The system produces/predicts $\hat{y}$, the estimate for the true $y$. We want to learn parameters ($w$ and $b$) that make $\hat{y}$ for each training observation as close as possible to the true $y$. 💪

This requires two components:

- Loss function: also called cost function, a metric that measures the distance between the system output and the gold output

  - The loss function that is commonly used for logistic regression and also for neural networks is cross-entropy loss

- Optimization algorithm for iteratively updating the weights so as to minimize this loss function

  - Standard algorithm: gradient descent

The Cross-Entropy Loss Function

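We need a loss function that expresses, for an observation $x$, how close the classifier output ($\hat{y} = \sigma(w \cdot x + b)$) is to the correct output ($y$, which is $0$ or $1$):

$$L(\hat{y}, y) = \text{how much } \hat{y} \text{ differs from the true } y$$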

This loss function should prefer the correct class labels of the training examples to be more likely.

👆 This is called conditional maximum likelihood estimation: we choose the parameters $w, b$ that maximize the log probability of the true $y$ labels in the training data given the observations $x$. The resulting loss function is the negative log likelihood loss, generally called the cross-entropy loss.

Derivation

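Task: for a single observation $x$, learn weights that maximize $p(y \mid x)$, the probability of the correct label

There are only two discrete outcomes ($1$ or $0$)

This is a Bernoulli distribution. The probability $p(y \mid x)$ for one observation can be expressed as:

$$p(y \mid x) = \hat{y}^{\,y} (1 - \hat{y})^{1 - y}$$

Now we take the log of both sides. This will turn out to be handy mathematically, and doesn’t hurt us (whatever values maximize a probability will also maximize the log of the probability):

$$\log p(y \mid x) = \log\left[\hat{y}^{\,y} (1 - \hat{y})^{1 - y}\right] = y \log \hat{y} + (1 - y) \log(1 - \hat{y})$$

👆 This is the log likelihood that should be maximized.

In order to turn this into a loss function (something that we need to minimize), we’ll just flip the sign. The result is the cross-entropy loss:

$$L_{CE}(\hat{y}, y) = -\log p(y \mid x) = -\left[y \log \hat{y} + (1 - y) \log(1 - \hat{y})\right]$$

Recall that $\hat{y} = \sigma(w \cdot x + b)$:

$$L_{CE}(\hat{y}, y) = -\left[y \log \sigma(w \cdot x + b) + (1 - y) \log\left(1 - \sigma(w \cdot x + b)\right)\right]$$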

Example

Let’s see if this loss function does the right thing for the example above.

We want the loss to be

- smaller if the model’s estimate is close to correct, and

- bigger if the model is confused.

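Let’s suppose the correct gold label for the sentiment example above is positive, i.e.: $y = 1$.

In this case our model is doing well 👏, since it gave the example a higher probability of being positive than negative.

If we plug $\hat{y} = \sigma(w \cdot x + b)$ and $y = 1$ into the cross-entropy loss, we get

$$L_{CE}(\hat{y}, y) = -\left[1 \cdot \log \sigma(w \cdot x + b) + 0 \cdot \log\left(1 - \sigma(w \cdot x + b)\right)\right] = -\log \sigma(w \cdot x + b)$$

By contrast, let’s pretend instead that the example was negative, i.e.: $y = 0$.

In this case our model is confused 🤪, and we’d want the loss to be higher.

If we plug $\hat{y} = \sigma(w \cdot x + b)$ and $y = 0$ into the cross-entropy loss, we get

$$L_{CE}(\hat{y}, y) = -\left[0 \cdot \log \sigma(w \cdot x + b) + 1 \cdot \log\left(1 - \sigma(w \cdot x + b)\right)\right] = -\log\left(1 - \sigma(w \cdot x + b)\right)$$

Because the model gave the positive class the higher probability, $\sigma(w \cdot x + b) > 1 - \sigma(w \cdot x + b)$, so the loss in the first case is lower than the loss in the second.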
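To put rough numbers on this comparison, the sketch below evaluates the binary cross-entropy loss for both gold labels. The model output `y_hat = 0.7` is an assumed value used only for illustration, and the small clipping constant `eps` is an implementation detail added here to keep the logarithm finite.

```python
import math

def cross_entropy(y_hat: float, y: int) -> float:
    """Binary cross-entropy: -[y log y_hat + (1 - y) log(1 - y_hat)]."""
    eps = 1e-12  # clip so log() never sees exactly 0
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

y_hat = 0.7  # assumed model output P(y = 1 | x), for illustration only

loss_if_positive = cross_entropy(y_hat, 1)  # gold label agrees with the model
loss_if_negative = cross_entropy(y_hat, 0)  # gold label disagrees with the model

print(f"gold y=1: {loss_if_positive:.3f}   gold y=0: {loss_if_negative:.3f}")
```

Under this assumption the loss is roughly 0.36 when the gold label is positive and roughly 1.20 when it is negative, which matches the intuition that a confused model should pay a higher price.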

Why does minimizing this negative log probability work?

A perfect classifier would assign probability $1$ to the correct outcome and probability $0$ to the incorrect outcome. That means, for an example whose correct label is $y = 1$:

- the higher $\hat{y}$ is (the closer it is to 1), the better the classifier;

- the lower $\hat{y}$ is (the closer it is to 0), the worse the classifier.

The negative log of this probability is a convenient loss metric since it goes from 0 (negative log of 1, no loss) to infinity (negative log of 0, infinite loss). This loss function also ensures that as the probability of the correct answer is maximized, the probability of the incorrect answer is minimized; since the two sum to one, any increase in the probability of the correct answer is coming at the expense of the incorrect answer.

Gradient Descent

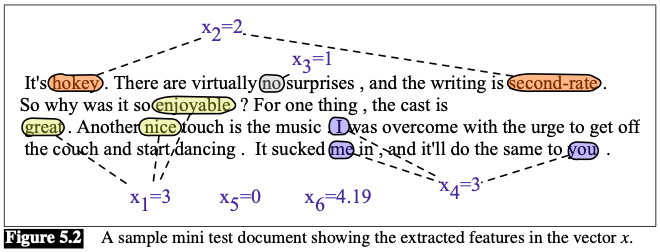

Goal with gradient descent: find the optimal weights that minimize the loss function we’ve defined for the model. From now on, we’ll explicitly represent the fact that the loss function $L$ is parameterized by the weights $\theta$ (in the case of logistic regression $\theta = w, b$):

$$\hat{\theta} = \underset{\theta}{\arg\min}\; \frac{1}{m} \sum_{i=1}^{m} L_{CE}\left(f(x^{(i)}; \theta), y^{(i)}\right)$$

Gradient descent finds a minimum of a function by figuring out in which direction (in the space of the parameters $\theta$) the function’s slope is rising the most steeply, and moving in the opposite direction.

💡 Intuition

If you are hiking in a canyon and trying to descend most quickly down to the river at the bottom, you might look around yourself 360 degrees, find the direction where the ground is sloping the steepest, and walk downhill in that direction.

For logistic regression, this loss function is conveniently convex

- Just one minimum

- No local minima to get stuck in

Gradient descent starting from any point is guaranteed to find the minimum.

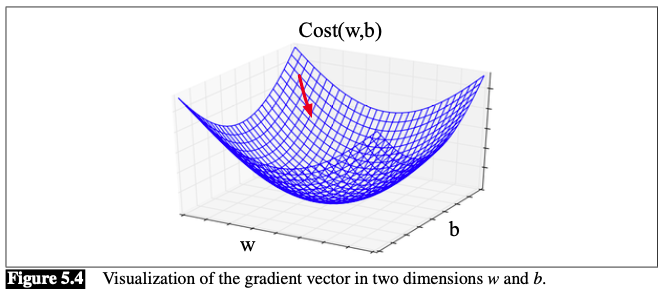

Visualization:

The magnitude of the amount to move in gradient descent is the value of the slope $\frac{d}{dw} f(x; w)$ weighted by a learning rate $\eta$. A higher (faster) learning rate means that we should move $w$ more on each step.

In the single-variable example above, the change we make in our parameter is

$$w^{t+1} = w^{t} - \eta \frac{d}{dw} f(x; w)$$

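In $N$-dimensional space, the gradient is a vector that expresses the directional components of the sharpest slope along each of those $N$ dimensions.

Visualization (e.g., $N = 2$):

In each dimension $w_i$, we express the slope as a partial derivative $\frac{\partial}{\partial w_i}$ of the loss function. The gradient is defined as a vector of these partials:

$$\nabla_{\theta} L(f(x; \theta), y) = \begin{bmatrix} \frac{\partial}{\partial w_1} L(f(x; \theta), y) \\ \frac{\partial}{\partial w_2} L(f(x; \theta), y) \\ \vdots \\ \frac{\partial}{\partial w_n} L(f(x; \theta), y) \end{bmatrix}$$

Thus, the change of $\theta$ is:

$$\theta_{t+1} = \theta_{t} - \eta \nabla_{\theta} L(f(x; \theta), y)$$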
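The update rule itself is independent of logistic regression, so it can be tried on any differentiable function. The sketch below applies it to a hypothetical two-dimensional convex bowl; the function `grad_bowl`, the learning rate, and the step count are assumptions made for this illustration.

```python
def gradient_descent(grad, theta, eta=0.1, steps=100):
    """Repeat theta <- theta - eta * grad(theta) for a fixed number of steps."""
    for _ in range(steps):
        g = grad(theta)
        theta = [t - eta * gi for t, gi in zip(theta, g)]
    return theta

# Hypothetical convex bowl f(w1, w2) = (w1 - 3)^2 + (w2 + 1)^2,
# whose single minimum lies at (3, -1).
def grad_bowl(theta):
    w1, w2 = theta
    return [2.0 * (w1 - 3.0), 2.0 * (w2 + 1.0)]

theta_min = gradient_descent(grad_bowl, [0.0, 0.0])
print(theta_min)  # should be very close to [3.0, -1.0]
```

With `eta=0.1` each step shrinks the distance to the minimum by a constant factor; a much larger `eta` (above 1.0 for this bowl) would make the iterates diverge instead.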

The gradient for Logistic Regression

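For logistic regression, the cross-entropy loss function is

$$L_{CE}(\hat{y}, y) = -\left[y \log \sigma(w \cdot x + b) + (1 - y) \log\left(1 - \sigma(w \cdot x + b)\right)\right]$$

The derivative of this loss function with respect to a single weight $w_j$ is:

$$\frac{\partial L_{CE}(\hat{y}, y)}{\partial w_j} = \left[\sigma(w \cdot x + b) - y\right] x_j$$

For the derivation of the derivative above we need (with $\log$ denoting the natural logarithm):

derivative of $\ln(x)$:

$$\frac{d}{dx} \ln(x) = \frac{1}{x}$$

derivative of the sigmoid:

$$\frac{d\sigma(z)}{dz} = \sigma(z)\left(1 - \sigma(z)\right)$$

Chain rule of derivatives: for $f(x) = u(v(x))$,

$$\frac{df}{dx} = \frac{du}{dv} \cdot \frac{dv}{dx}$$

Now compute the derivative:

$$\begin{aligned} \frac{\partial L_{CE}}{\partial w_j} &= \frac{\partial}{\partial w_j} \left( -\left[y \log \sigma(w \cdot x + b) + (1 - y) \log\left(1 - \sigma(w \cdot x + b)\right)\right] \right) \\ &= -\frac{y}{\sigma(w \cdot x + b)} \frac{\partial}{\partial w_j} \sigma(w \cdot x + b) - \frac{1 - y}{1 - \sigma(w \cdot x + b)} \frac{\partial}{\partial w_j} \left(1 - \sigma(w \cdot x + b)\right) \\ &= -\left[\frac{y}{\sigma(w \cdot x + b)} - \frac{1 - y}{1 - \sigma(w \cdot x + b)}\right] \frac{\partial}{\partial w_j} \sigma(w \cdot x + b) \end{aligned}$$

Now plug in the derivative of the sigmoid, and use the chain rule one more time:

$$\begin{aligned} \frac{\partial L_{CE}}{\partial w_j} &= -\left[\frac{y - \sigma(w \cdot x + b)}{\sigma(w \cdot x + b)\left(1 - \sigma(w \cdot x + b)\right)}\right] \sigma(w \cdot x + b)\left(1 - \sigma(w \cdot x + b)\right) \frac{\partial (w \cdot x + b)}{\partial w_j} \\ &= -\left[y - \sigma(w \cdot x + b)\right] x_j \\ &= \left[\sigma(w \cdot x + b) - y\right] x_j \end{aligned}$$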
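One way to gain confidence in this result is to compare the closed-form gradient $[\sigma(w \cdot x + b) - y]\,x_j$ against a numerical estimate from central finite differences. The sketch below does that at an arbitrary test point; the helper names and the test values are assumptions for illustration. It also returns the bias gradient $\sigma(w \cdot x + b) - y$, which follows from the same derivation with $x_j$ replaced by 1.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Cross-entropy loss for one example."""
    y_hat = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

def analytic_grad(w, b, x, y):
    """dL/dw_j = (sigmoid(w.x + b) - y) * x_j and dL/db = sigmoid(w.x + b) - y."""
    err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
    return [err * xj for xj in x], err

def numeric_grad(w, b, x, y, h=1e-6):
    """Central finite-difference estimate of dL/dw_j for every j."""
    grads = []
    for j in range(len(w)):
        up = w[:j] + [w[j] + h] + w[j + 1:]
        down = w[:j] + [w[j] - h] + w[j + 1:]
        grads.append((loss(up, b, x, y) - loss(down, b, x, y)) / (2 * h))
    return grads

# Arbitrary test point, chosen only for this check.
w, b, x, y = [0.5, -1.0, 0.25], 0.1, [1.0, 2.0, -3.0], 1
g_w, g_b = analytic_grad(w, b, x, y)
g_num = numeric_grad(w, b, x, y)
max_diff = max(abs(a - n) for a, n in zip(g_w, g_num))
print(f"largest analytic/numeric gap: {max_diff:.2e}")
```

A gap many orders of magnitude smaller than the gradient itself suggests the closed form and the loss agree; a large gap would point to a sign or indexing mistake.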

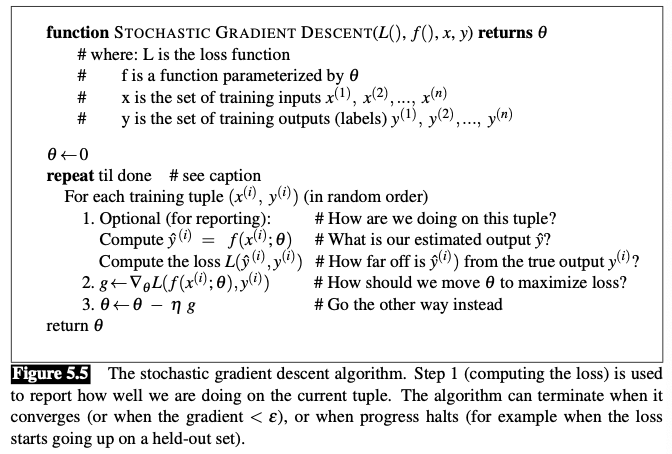

Stochastic Gradient Descent

Stochastic gradient descent is an online algorithm that minimizes the loss function by

- computing its gradient after each training example, and

- nudging $\theta$ in the right direction (the opposite direction of the gradient).

The learning rate η is a (hyper-)parameter that must be adjusted.

- If it’s too high, the learner will take steps that are too large, overshooting the minimum of the loss function.

- If it’s too low, the learner will take steps that are too small, and take too long to get to the minimum.

It is common to begin the learning rate at a higher value, and then slowly decrease it, so that it is a function of the iteration $k$ of training.
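A minimal sketch of stochastic gradient descent for binary logistic regression is shown below. The toy dataset, the initial learning rate, and the decay schedule `eta0 / (1 + 0.01 * step)` are all assumptions made for illustration; the text does not prescribe a particular schedule.

```python
import math
import random

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def sgd_train(data, n_features, eta0=0.5, epochs=200, seed=0):
    """data: list of (x, y) pairs, x a list of floats, y in {0, 1}."""
    rng = random.Random(seed)
    w, b = [0.0] * n_features, 0.0
    step = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)                    # visit examples in random order
        for i in order:
            x, y = data[i]
            step += 1
            eta = eta0 / (1.0 + 0.01 * step)  # assumed decay schedule
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - y
            # Move against the per-example gradient (err * x_j, err).
            w = [wj - eta * err * xj for wj, xj in zip(w, x)]
            b -= eta * err
    return w, b

# Toy, linearly separable data: the label is 1 when the first feature dominates.
toy = [([2.0, 0.0], 1), ([1.5, 0.5], 1), ([0.0, 2.0], 0), ([0.5, 1.5], 0)]
w, b = sgd_train(toy, n_features=2)
preds = [1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) > 0.5 else 0
         for x, _ in toy]
print(w, b, preds)
```

Each update uses only one example, so individual steps are noisy, but on this separable toy set the weights settle with a positive first component and a negative second one.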

Mini-batch training

Stochastic gradient descent: chooses a single random example at a time, moving the weights so as to improve performance on that single example.

- Can result in very choppy movements

Batch gradient descent: compute the gradient over the entire dataset.

- Offers a superb estimate of which direction to move the weights

- Spends a lot of time processing every single example in the training set to compute this perfect direction.

Mini-batch gradient descent

- We train on a group of $m$ examples (perhaps 512, or 1024) that is less than the whole dataset.

- Has the advantage of computational efficiency

- The mini-batches can easily be vectorized, choosing the size of the mini-batch based on the computational resources.

  - This allows us to process all the examples in one mini-batch in parallel and then accumulate the loss

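Define the mini-batch version of the cross-entropy loss function (assuming the training examples are independent):

$$\begin{aligned} \log p(\text{training labels}) &= \log \prod_{i=1}^{m} p(y^{(i)} \mid x^{(i)}) \\ &= \sum_{i=1}^{m} \log p(y^{(i)} \mid x^{(i)}) \\ &= -\sum_{i=1}^{m} L_{CE}(\hat{y}^{(i)}, y^{(i)}) \end{aligned}$$

The cost function for the mini-batch of $m$ examples is the average loss for each example:

$$\begin{aligned} Cost(w, b) &= \frac{1}{m} \sum_{i=1}^{m} L_{CE}(\hat{y}^{(i)}, y^{(i)}) \\ &= -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log \sigma(w \cdot x^{(i)} + b) + (1 - y^{(i)}) \log\left(1 - \sigma(w \cdot x^{(i)} + b)\right) \right] \end{aligned}$$

The mini-batch gradient is the average of the individual gradients:

$$\frac{\partial Cost(w, b)}{\partial w_j} = \frac{1}{m} \sum_{i=1}^{m} \left[\sigma(w \cdot x^{(i)} + b) - y^{(i)}\right] x_j^{(i)}$$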
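The averaging over a mini-batch can be sketched as a single function that returns both the cost and its gradient. This is an unvectorized illustration in plain Python; the function name and the two-example batch are assumptions, and a practical implementation would typically use array operations instead of loops.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def minibatch_cost_and_grad(w, b, batch):
    """Average cross-entropy loss and its gradient over a mini-batch.

    batch: list of (x, y) pairs, x a list of floats, y in {0, 1}.
    Returns (cost, gradient w.r.t. w, gradient w.r.t. b).
    """
    m = len(batch)
    cost, grad_w, grad_b = 0.0, [0.0] * len(w), 0.0
    for x, y in batch:
        y_hat = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        cost += -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))
        err = y_hat - y
        grad_w = [g + err * xj for g, xj in zip(grad_w, x)]  # accumulate
        grad_b += err
    return cost / m, [g / m for g in grad_w], grad_b / m     # then average

batch = [([1.0, 0.0], 1), ([0.0, 1.0], 0)]  # hypothetical two-example batch
cost, grad_w, grad_b = minibatch_cost_and_grad([0.0, 0.0], 0.0, batch)
print(cost, grad_w, grad_b)
```

With all-zero parameters every prediction is 0.5, so the cost equals $\ln 2$ and the averaged gradient points toward raising the first weight and lowering the second.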

Regularization

🔴 There is a problem with learning weights that make the model perfectly match the training data:

- If a feature is perfectly predictive of the outcome because it happens to only occur in one class, it will be assigned a very high weight. The weights for features will attempt to perfectly fit details of the training set, in fact too perfectly, modeling noisy factors that just accidentally correlate with the class. 🤪

This problem is called overfitting.

A good model should be able to generalize well from the training data to the unseen test set, but a model that overfits will have poor generalization.

🔧 Solution: Add a regularization term $R(\theta)$ to the objective function:

$$\hat{\theta} = \underset{\theta}{\arg\max} \sum_{i=1}^{m} \log P(y^{(i)} \mid x^{(i)}) - \alpha R(\theta)$$

- $R(\theta)$: penalizes large weights

- A setting of the weights that matches the training data perfectly, but uses many weights with high values to do so, will be penalized more than a setting that matches the data a little less well but does so using smaller weights.

Two common regularization terms:

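L2 regularization (Ridge regression)

quadratic function of the weight values

$$R(\theta) = \|\theta\|_2^2 = \sum_{j=1}^{n} \theta_j^2$$

$\|\theta\|_2$: the L2 norm, which is the same as the Euclidean distance of the vector $\theta$ from the origin

L2 regularized objective function:

$$\hat{\theta} = \underset{\theta}{\arg\max} \left[\sum_{i=1}^{m} \log P(y^{(i)} \mid x^{(i)})\right] - \alpha \sum_{j=1}^{n} \theta_j^2$$

L1 regularization (Lasso regression)

linear function of the weight values

$$R(\theta) = \|\theta\|_1 = \sum_{j=1}^{n} |\theta_j|$$

$\|\theta\|_1$: the L1 norm, which is the sum of the absolute values of the weights.

- Also called Manhattan distance (the Manhattan distance is the distance you’d have to walk between two points in a city with a street grid like New York)

L1 regularized objective function:

$$\hat{\theta} = \underset{\theta}{\arg\max} \left[\sum_{i=1}^{m} \log P(y^{(i)} \mid x^{(i)})\right] - \alpha \sum_{j=1}^{n} |\theta_j|$$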

L1 Vs. L2

- L2 regularization is easier to optimize because of its simple derivative (the derivative of $\theta^2$ is just $2\theta$), while L1 regularization is more complex (the derivative of $|\theta|$ is non-continuous at zero)

- Where L2 prefers weight vectors with many small weights, L1 prefers sparse solutions with some larger weights but many more weights set to zero.

- Thus L1 regularization leads to much sparser weight vectors (far fewer features).
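The two penalties and their effect on a single update step can be sketched as follows. The code treats training as minimizing loss plus $\alpha R(\theta)$, which is equivalent to maximizing the regularized log likelihood; the function names, the subgradient choice $\text{sign}(0) = 0$ for L1, and the example weights are assumptions made for illustration.

```python
def l2_penalty(w):
    """R(theta) = sum_j theta_j^2 (squared L2 norm)."""
    return sum(wj * wj for wj in w)

def l1_penalty(w):
    """R(theta) = sum_j |theta_j| (L1 norm)."""
    return sum(abs(wj) for wj in w)

def l2_regularized_step(w, grad_loss, eta, alpha):
    """One descent step on loss + alpha * sum_j w_j^2.

    The penalty adds 2 * alpha * w_j to each gradient component,
    so every weight shrinks in proportion to its size.
    """
    return [wj - eta * (gj + 2.0 * alpha * wj) for wj, gj in zip(w, grad_loss)]

def l1_regularized_step(w, grad_loss, eta, alpha):
    """One subgradient step on loss + alpha * sum_j |w_j|.

    sign(0) is taken as 0, one valid subgradient choice at the kink.
    """
    sign = lambda v: (v > 0) - (v < 0)
    return [wj - eta * (gj + alpha * sign(wj)) for wj, gj in zip(w, grad_loss)]

w = [3.0, -0.5, 0.0]           # example weights, chosen arbitrarily
no_grad = [0.0, 0.0, 0.0]      # isolate the effect of the penalty alone
print(l2_penalty(w), l1_penalty(w))
print(l2_regularized_step(w, no_grad, eta=0.1, alpha=0.5))
print(l1_regularized_step(w, no_grad, eta=0.1, alpha=0.5))
```

With the loss gradient set to zero, the L2 step shrinks the large weight by much more than the small one, while the L1 step subtracts the same fixed amount from every nonzero weight regardless of size.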

Both L1 and L2 regularization have Bayesian interpretations as constraints on the prior of how weights should look.

L1 regularization can be viewed as a Laplace prior on the weights.

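L2 regularization corresponds to assuming that weights are distributed according to a gaussian distribution with mean $\mu = 0$.

In a gaussian or normal distribution, the further away a value is from the mean, the lower its probability (scaled by the variance $\sigma^2$)

By using a gaussian prior on the weights, we are saying that weights prefer to have the value 0.

A gaussian for a weight $\theta_j$ is:

$$\frac{1}{\sqrt{2\pi\sigma_j^2}} \exp\left(-\frac{(\theta_j - \mu_j)^2}{2\sigma_j^2}\right)$$

If we multiply each weight by a gaussian prior on the weight, we are thus maximizing the following constraint:

$$\hat{\theta} = \underset{\theta}{\arg\max} \prod_{i=1}^{m} P(y^{(i)} \mid x^{(i)}) \times \prod_{j=1}^{n} \frac{1}{\sqrt{2\pi\sigma_j^2}} \exp\left(-\frac{(\theta_j - \mu_j)^2}{2\sigma_j^2}\right)$$

In log space, with $\mu = 0$, and assuming $2\sigma^2 = 1$, we get:

$$\hat{\theta} = \underset{\theta}{\arg\max} \sum_{i=1}^{m} \log P(y^{(i)} \mid x^{(i)}) - \alpha \sum_{j=1}^{n} \theta_j^2$$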

Multinomial Logistic Regression

More than two classes?

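Use multinomial logistic regression (also called softmax regression, or maxent classifier). The target $y$ is a variable that ranges over more than two classes; we want to know the probability of $y$ being in each potential class $c \in C$, $p(y = c \mid x)$.

We use the softmax function to compute $p(y = c \mid x)$:

- Takes a vector $z = [z_1, z_2, \dots, z_K]$ of $K$ arbitrary values

- Maps them to a probability distribution

  - Each value is in the range $(0, 1)$

  - All the values sum to $1$

For a vector $z$ of dimensionality $K$, the softmax is:

$$\text{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad 1 \le i \le K$$

The softmax of an input vector $z = [z_1, z_2, \dots, z_K]$ is thus:

$$\text{softmax}(z) = \left[\frac{e^{z_1}}{\sum_{i=1}^{K} e^{z_i}}, \frac{e^{z_2}}{\sum_{i=1}^{K} e^{z_i}}, \dots, \frac{e^{z_K}}{\sum_{i=1}^{K} e^{z_i}}\right]$$

- The denominator $\sum_{i=1}^{K} e^{z_i}$ is used to normalize all the values into probabilities.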

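Like the sigmoid, the input to the softmax will be the dot product between a weight vector $w$ and an input vector $x$ (plus a bias). But now we’ll need separate weight vectors (and biases) for each of the $K$ classes:

$$p(y = c \mid x) = \frac{e^{w_c \cdot x + b_c}}{\sum_{j=1}^{K} e^{w_j \cdot x + b_j}}$$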
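A small sketch of the softmax and of per-class scoring is given below. Subtracting the maximum score before exponentiating is a common numerical-stability trick that leaves the result unchanged; it is an implementation choice added here, and the names `softmax` and `class_probabilities` as well as the example scores are assumptions for illustration.

```python
import math

def softmax(z):
    """Map a list of real scores to a probability distribution."""
    m = max(z)                                  # shift for numerical stability
    exps = [math.exp(zi - m) for zi in z]
    total = sum(exps)
    return [e / total for e in exps]

def class_probabilities(W, b, x):
    """p(y = c | x) for every class c; W[c] and b[c] belong to class c."""
    scores = [sum(wj * xj for wj, xj in zip(W[c], x)) + b[c]
              for c in range(len(W))]
    return softmax(scores)

probs = softmax([0.6, 1.1, -1.5, 1.2, 3.2, -1.1])  # arbitrary example scores
print([round(p, 3) for p in probs])
```

The largest score (3.2) receives most of the probability mass, and the outputs sum to 1, which is what makes them usable as class probabilities.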

Features in Multinomial Logistic Regression

For multiclass classification, input features are functions of both:

- the observation $x$

- the candidate output class $c$

When we are discussing features we will use the notation $f_i(c, x)$: feature $i$ for a particular class $c$ for a given observation $x$

Example

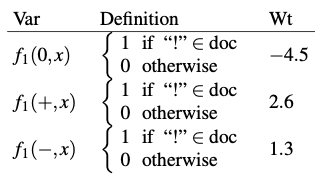

Suppose we are doing text classification, and instead of binary classification our task is to assign one of the 3 classes +, −, or 0 (neutral) to a document. Now a feature related to exclamation marks might have a negative weight for 0 documents, and a positive weight for + or − documents:

Learning in Multinomial Logistic Regression

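The loss function for a single example $x$ is the sum of the logs of the $K$ output classes:

$$\begin{aligned} L_{CE}(\hat{y}, y) &= -\sum_{k=1}^{K} 1\{y = k\} \log p(y = k \mid x) \\ &= -\sum_{k=1}^{K} 1\{y = k\} \log \frac{e^{w_k \cdot x + b_k}}{\sum_{j=1}^{K} e^{w_j \cdot x + b_j}} \end{aligned}$$

- $1\{\}$: evaluates to $1$ if the condition in the brackets is true and to $0$ otherwise.

Gradient (with respect to component $i$ of the weight vector $w_k$ for class $k$):

$$\frac{\partial L_{CE}}{\partial w_{k,i}} = -\left(1\{y = k\} - p(y = k \mid x)\right) x_i$$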
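The multinomial loss and its gradient can be sketched and sanity-checked numerically as below. The gradient is written per weight component, $-(1\{y = k\} - p(y = k \mid x))\,x_i$; the three-class setup, the weight values, and the helper names are all hypothetical and serve only to exercise the formulas.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(zi - m) for zi in z]
    total = sum(exps)
    return [e / total for e in exps]

def scores(W, b, x):
    """One score w_k . x + b_k per class k."""
    return [sum(wj * xj for wj, xj in zip(Wk, x)) + bk for Wk, bk in zip(W, b)]

def multinomial_loss(W, b, x, y):
    """-log p(y | x): only the gold class y survives the indicator sum."""
    return -math.log(softmax(scores(W, b, x))[y])

def multinomial_grad(W, b, x, y):
    """dL/dW[k][i] = -(1{y = k} - p(y = k | x)) * x[i]."""
    p = softmax(scores(W, b, x))
    return [[-((1.0 if k == y else 0.0) - p[k]) * xi for xi in x]
            for k in range(len(W))]

# Hypothetical 3-class setup (+, -, neutral) with two features.
W = [[0.2, -0.1], [-0.3, 0.4], [0.0, 0.1]]
b = [0.0, 0.1, -0.1]
x, y = [1.0, 2.0], 0
grad = multinomial_grad(W, b, x, y)
print(multinomial_loss(W, b, x, y), grad)
```

For the gold class the gradient entries are negative (raising those weights lowers the loss), while for the other classes they are positive, and for each feature the entries across classes cancel out.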