Use GPU within a Docker Container

NVIDIA Container Toolkit

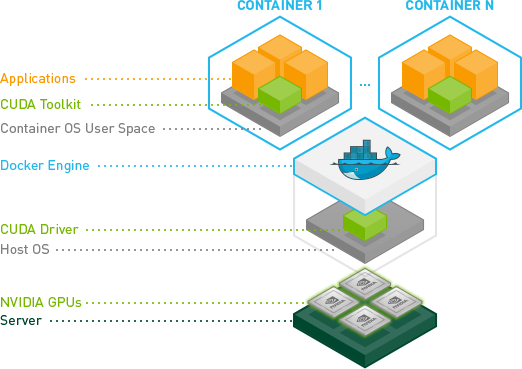

The NVIDIA Container Toolkit allows users to build and run GPU accelerated containers. The toolkit includes a container runtime library and utilities to automatically configure containers to leverage NVIDIA GPUs.

Essentially, the NVIDIA Container Toolkit integrates with Docker on your base machine so that the GPU drivers installed there are recognized automatically and made available to your Docker container when it runs. So if you are able to run nvidia-smi on your base machine, you will also be able to run it in your Docker container (and all of your programs will be able to reference the GPU).
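Since everything depends on the base machine already seeing the GPU, a quick pre-flight check can save some debugging. The following is a minimal sketch, not part of the toolkit: the function name check_host_gpu is hypothetical, and it only tests whether nvidia-smi and docker are on the PATH before querying the driver.

```shell
#!/bin/sh
# Hypothetical helper (not provided by NVIDIA or Docker): reports whether
# the two host tools this guide relies on can be found on PATH.
check_host_gpu() {
    status=0
    for tool in nvidia-smi docker; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "$tool: found"
        else
            echo "$tool: missing"
            status=1
        fi
    done
    # Returns 0 only when both tools were found.
    return "$status"
}

# Usage: query the host driver only if both tools are present.
if check_host_gpu; then
    nvidia-smi
fi
```

If nvidia-smi fails or is missing on the base machine, the container will likely not see a GPU either, so it is worth fixing the host driver installation first.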

To use it, start your Dockerfile from one of NVIDIA's CUDA base images by naming the desired image in the FROM instruction at the top of the file. From this base state, you can develop the Dockerfile and add further layers as in any normal Dockerfile.

Example

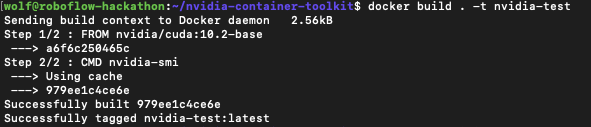

FROM nvidia/cuda:10.2-base

CMD nvidia-smi

In that Dockerfile we use NVIDIA's CUDA 10.2 base image and then specify a command, nvidia-smi, that runs when the container starts, to check that the drivers are visible.

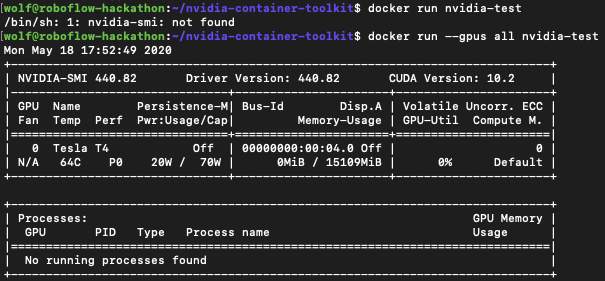

Now we build the image and tag it nvidia-test: docker build . -t nvidia-test

Source: [How to Use the GPU within a Docker Container](https://blog.roboflow.com/use-the-gpu-in-docker/)

Now we run the container from the image with the command docker run --gpus all nvidia-test. Keep in mind that the --gpus all flag is required; otherwise the GPU will not be exposed to the running container. For more details about specifying GPU(s), see GPU Enumeration.
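The --gpus flag also accepts values other than all. The sketch below shows three common forms as I understand Docker's syntax: all GPUs, a GPU count, and specific device indices. The run wrapper and the DRY_RUN variable are hypothetical additions so the commands can be previewed without a GPU, and --rm is added here only to clean up the container afterwards.

```shell
#!/bin/sh
# Hypothetical wrapper: prints the command by default (DRY_RUN=1);
# set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Expose every GPU on the host, as in the text.
run docker run --rm --gpus all nvidia-test

# Expose any two GPUs (a count rather than a selection).
run docker run --rm --gpus 2 nvidia-test

# Expose GPUs 0 and 1 by index. The inner double quotes are passed to
# Docker literally so the comma-separated list is read as one value.
run docker run --rm --gpus '"device=0,1"' nvidia-test
```

With DRY_RUN left at its default, the script only echoes each command; running it with DRY_RUN=0 on a machine with the toolkit installed should print the nvidia-smi table for the selected GPUs.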